The Kardashev Scale: Our Path to Becoming a Type-I Civilization

Imagine a future where humanity has mastered planetary energy resources, marking our first monumental achievement on the Kardashev scale, a method devised by Soviet astrophysicist Nikolai Kardashev in 1964 to measure the technological advancement of civilizations based on their energy consumption. Kardashev proposed three main types of civilizations: Type-I, which harnesses all the energy available on its planet; Type-II, which taps into the full energy output of its local star; and Type-III, which commands energy on a galactic scale. Today, humanity is not yet Type-I; on Carl Sagan’s interpolated version of the scale, we rank at roughly Type 0.7. However, with advances in renewable energy, there is serious discussion about what it will take to propel us to that next level.

Given my interest and experience in artificial intelligence (AI), automation, and quantum computing, the pursuit of massive energy resources to scale technology is a compelling topic. From my time at Harvard working on self-driving robots to my current role as a technology consultant, these aspirations are more than science fiction—they underline the pressing need for sustainable energy solutions that can power both future innovations and today’s increasing AI-driven technologies.

Defining a Type-I Civilization

At its core, a Type-I civilization controls all of the energy available on its home planet, which for Earth includes renewable sources such as solar, wind, ocean currents, and geothermal power. To grasp the challenge, consider that humanity currently consumes power at a rate on the order of 10^13 watts. To qualify as Type-I under the definition used here, we would need to harness about 10^17 watts, roughly 10,000 times more than we use today.
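
To make the arithmetic concrete, here is a minimal sketch that computes the scale-up factor from the two figures above and a fractional Kardashev rating. It assumes Carl Sagan’s interpolation formula, K = (log10(P) - 6) / 10 with P in watts, which is not part of Kardashev’s original proposal; the function and constant names are illustrative. Note that Sagan’s formula places Type I at 10^16 watts, so the 10^17-watt threshold used in this article maps to a rating slightly above 1.

```python
import math

def kardashev_rating(power_watts: float) -> float:
    """Fractional rating via Sagan's interpolation: K = (log10(P) - 6) / 10."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return (math.log10(power_watts) - 6.0) / 10.0

# Order-of-magnitude figures taken from the article, not measured data.
CURRENT_POWER_W = 1e13
TYPE_I_POWER_W = 1e17

ratio = TYPE_I_POWER_W / CURRENT_POWER_W

print(f"Scale-up factor: {ratio:,.0f}x")
print(f"Rating at current power: {kardashev_rating(CURRENT_POWER_W):.2f}")
print(f"Rating at the article's Type-I threshold: {kardashev_rating(TYPE_I_POWER_W):.2f}")
```

Because the rating is logarithmic, each tenfold increase in power adds only 0.1 to the score, which is why a 10,000-fold gap looks like a modest step on the scale.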

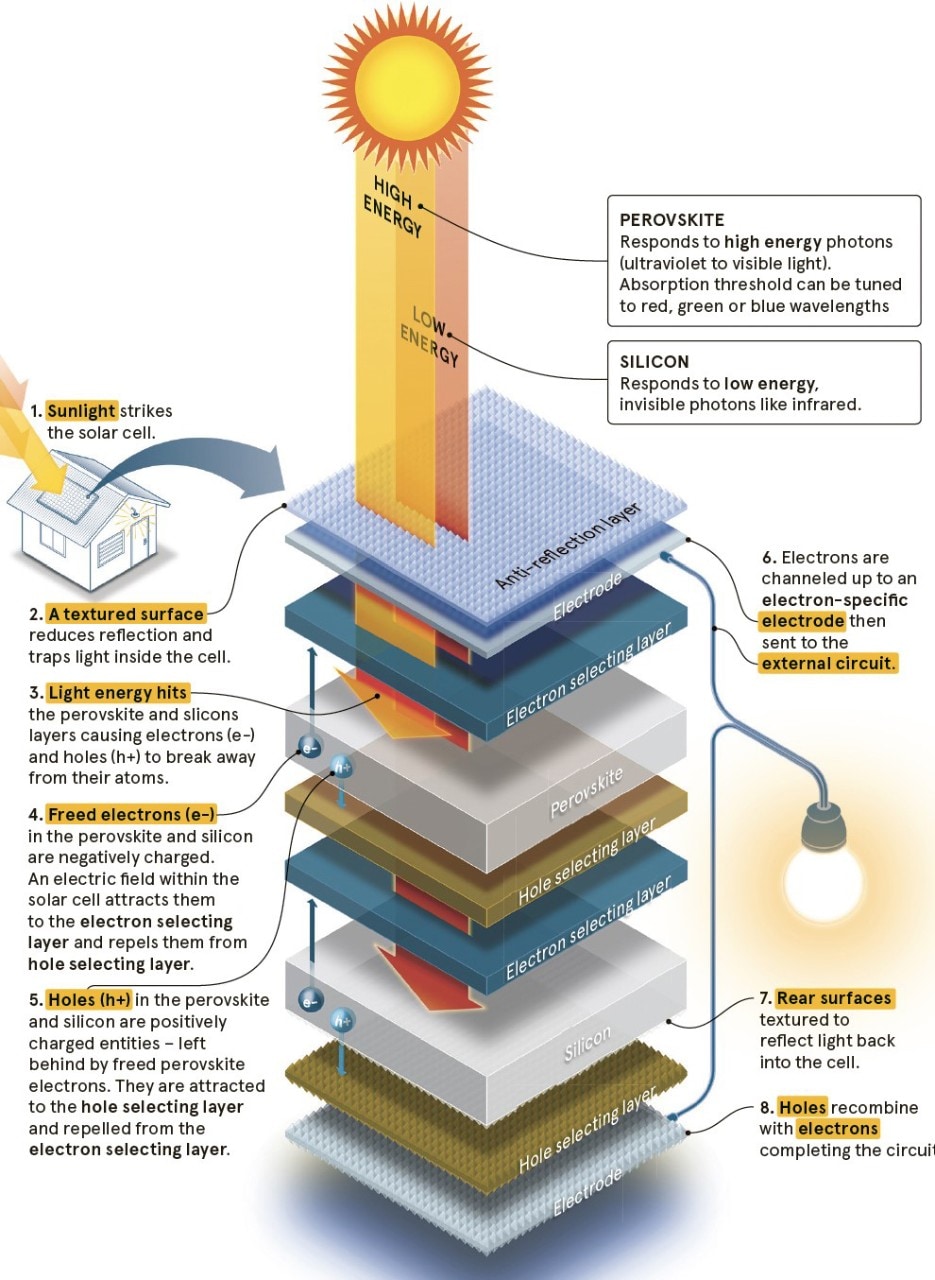

The most promising energy source? Solar power. Earth intercepts around 1.7 x 10^17 watts of sunlight at the top of the atmosphere, making solar the most abundant option for reaching such consumption rates. Because the Type-I target is of the same order of magnitude, meeting it from sunlight alone would mean capturing a large share of that input, though converting even a small fraction efficiently would dwarf today’s total consumption.
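
The 1.7 x 10^17 watt figure can be roughly reproduced from two numbers: the solar irradiance at Earth’s distance from the Sun and the area of the disc Earth presents to the Sun. The sketch below assumes a solar constant of about 1361 W/m^2 and a mean Earth radius of 6,371 km; it ignores reflection and atmospheric absorption, so it describes sunlight arriving at the top of the atmosphere rather than at the ground.

```python
import math

SOLAR_CONSTANT_W_M2 = 1361.0   # approximate mean irradiance at 1 AU (assumed value)
EARTH_RADIUS_M = 6.371e6       # mean Earth radius (assumed value)
TYPE_I_POWER_W = 1e17          # threshold used in this article

def intercepted_solar_power(irradiance_w_m2: float, radius_m: float) -> float:
    """Power crossing the planet's cross-sectional disc, in watts."""
    cross_section_m2 = math.pi * radius_m ** 2
    return irradiance_w_m2 * cross_section_m2

total_w = intercepted_solar_power(SOLAR_CONSTANT_W_M2, EARTH_RADIUS_M)
share_needed = TYPE_I_POWER_W / total_w

print(f"Intercepted sunlight: {total_w:.2e} W")
print(f"Share needed to reach 1e17 W: {share_needed:.0%}")
```

The cross-sectional disc is used rather than the full surface area because only the sunlit face, projected along the Sun’s direction, intercepts light at any moment.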

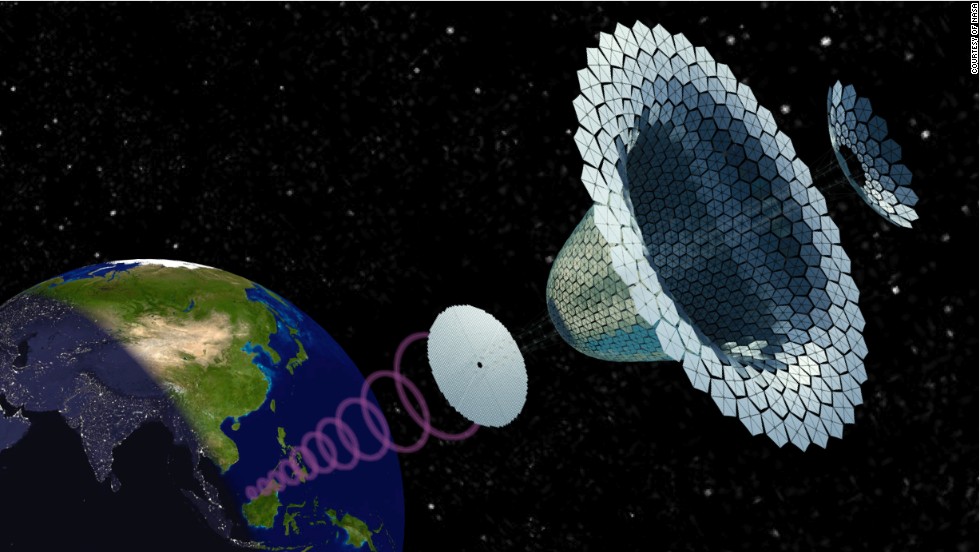

Solar Solutions and Space-Based Power

Solar energy is not limited to terrestrial solar panels. The idea of space-based solar farms, arrays of photovoltaic cells orbiting the Earth or stationed at Lagrange points, has been gaining traction because space offers several advantages: near-continuous sunlight, no weather or day-night cycle, and higher output because no atmosphere absorbs or scatters the incoming light. One significant challenge with such systems is transferring the energy from space to Earth, for which microwave or laser-based transmission could be explored. Advances in quantum computing and AI could help optimize the power distribution systems involved and make this more attainable.

Space-based systems are often seen as a stepping stone toward Type-II civilization capabilities, where we could capture the entirety of the Sun’s energy output. However, we need to focus our current technological development on becoming a full-fledged Type-I first. To reach this goal, we must continue improving both terrestrial and space-based technologies.

Fusion: Humanity’s Future Power Source?

Beyond solar energy, nuclear fusion presents another intriguing way to generate power. In the fusion process, hydrogen nuclei combine to form helium, converting a small fraction of their mass into a large amount of energy. Deuterium and tritium are the two hydrogen isotopes used in the most-studied reactions. Earth’s oceans contain a vast supply of deuterium, often estimated to be enough fuel for hundreds of millions of years, while tritium is scarce in nature and would have to be bred from lithium. Mastering fusion could be the breakthrough technology that gives us effectively unlimited, clean power.
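
As a rough worked example of how much mass is converted, the sketch below applies E = mc^2 to the mass difference in a single deuterium-tritium reaction (D + T -> helium-4 + neutron). The atomic masses are rounded reference values, and the fuel-rate figure at the end assumes every joule released is captured, which no real reactor would achieve; treat it as a lower bound on fuel consumption rather than an engineering estimate.

```python
# Atomic masses in unified atomic mass units (u); rounded reference values.
M_DEUTERIUM_U = 2.014102
M_TRITIUM_U = 3.016049
M_HELIUM4_U = 4.002602
M_NEUTRON_U = 1.008665

U_TO_KG = 1.66053907e-27       # kilograms per atomic mass unit
C_M_PER_S = 299_792_458.0      # speed of light in m/s
J_PER_MEV = 1.602176634e-13    # joules per mega-electronvolt
TARGET_POWER_W = 1e17          # Type-I threshold used in this article

# Mass that disappears in one D-T reaction.
fuel_mass_u = M_DEUTERIUM_U + M_TRITIUM_U
mass_defect_u = fuel_mass_u - (M_HELIUM4_U + M_NEUTRON_U)
mass_fraction_converted = mass_defect_u / fuel_mass_u

# Energy released per reaction and per kilogram of D-T fuel.
energy_per_reaction_j = mass_defect_u * U_TO_KG * C_M_PER_S ** 2
energy_per_reaction_mev = energy_per_reaction_j / J_PER_MEV
energy_per_kg_j = mass_fraction_converted * C_M_PER_S ** 2

# Idealized fuel burn rate to sustain the target power (100% conversion assumed).
fuel_rate_kg_s = TARGET_POWER_W / energy_per_kg_j

print(f"Energy per reaction: {energy_per_reaction_mev:.1f} MeV")
print(f"Mass converted: {mass_fraction_converted:.2%}")
print(f"Energy per kg of fuel: {energy_per_kg_j:.2e} J")
print(f"Idealized fuel rate for 1e17 W: {fuel_rate_kg_s:.0f} kg/s")
```

Only a few tenths of a percent of the fuel mass is converted, yet the energy per kilogram is millions of times higher than that of chemical fuels.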

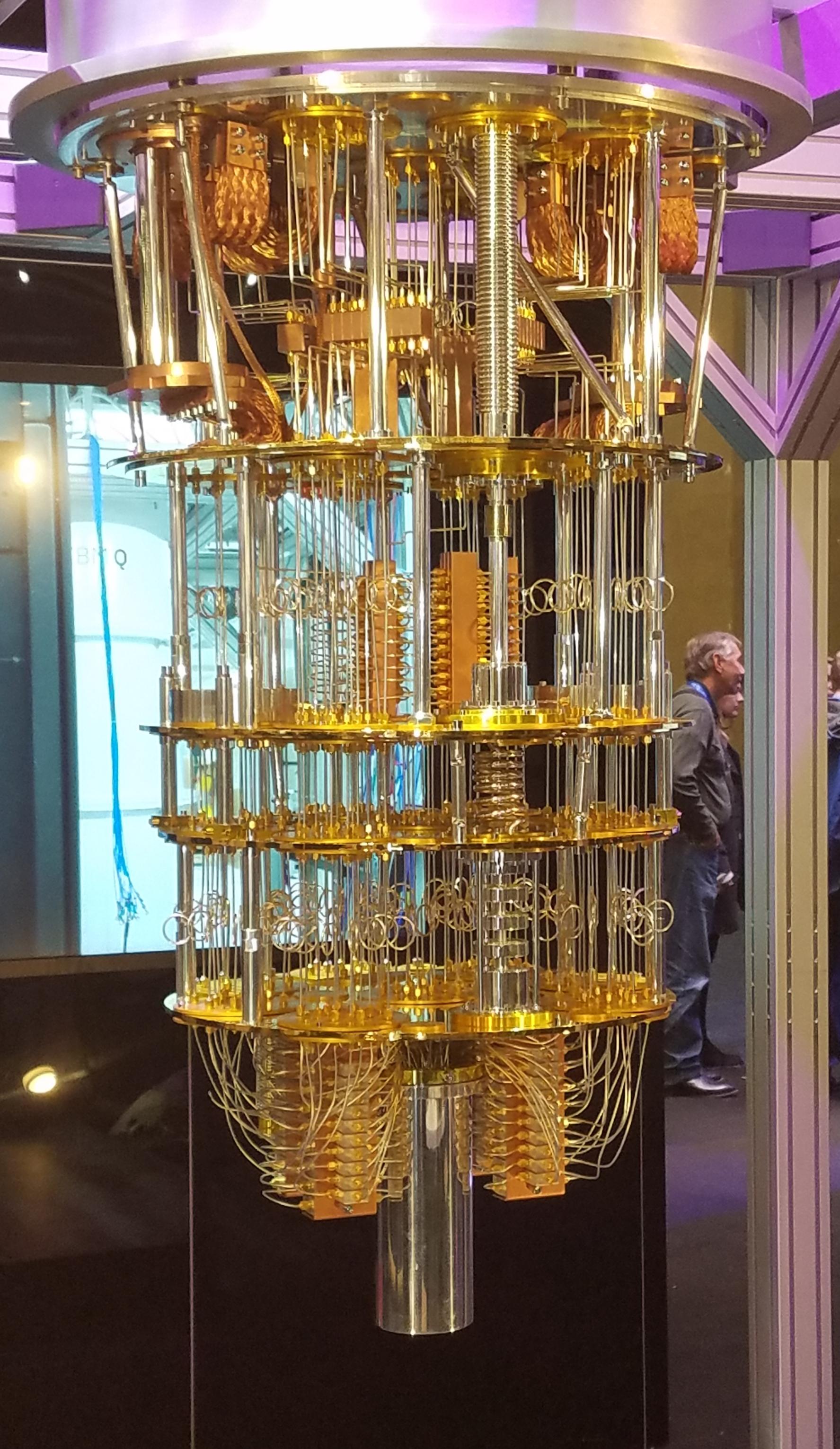

Projects like ITER (International Thermonuclear Experimental Reactor) in France are spearheading efforts to make nuclear fusion viable. While fusion is perennially touted as being “just 30 years away,” advances in AI-driven simulations and control systems are helping us inch closer to making fusion energy a reality. If humanity can develop stable fusion reactors with a combined output approaching 10^17 watts, we will be within reach of Type-I energy levels.

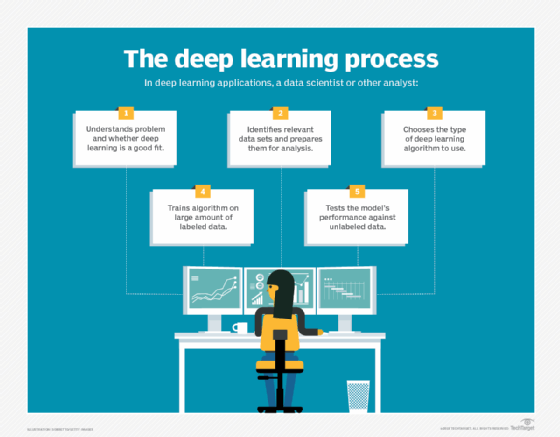

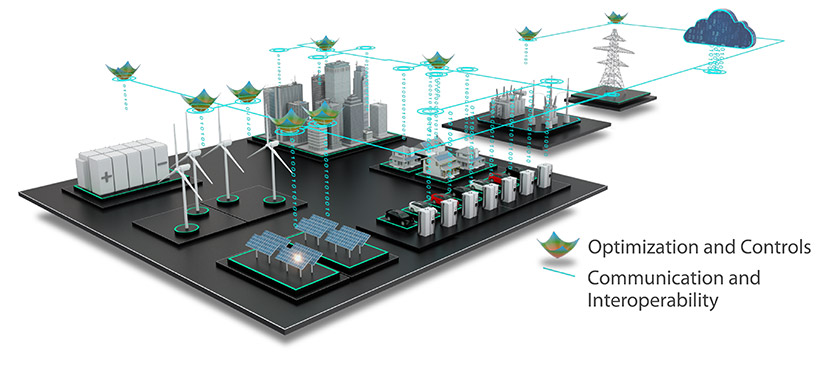

Global Energy Infrastructure and AI

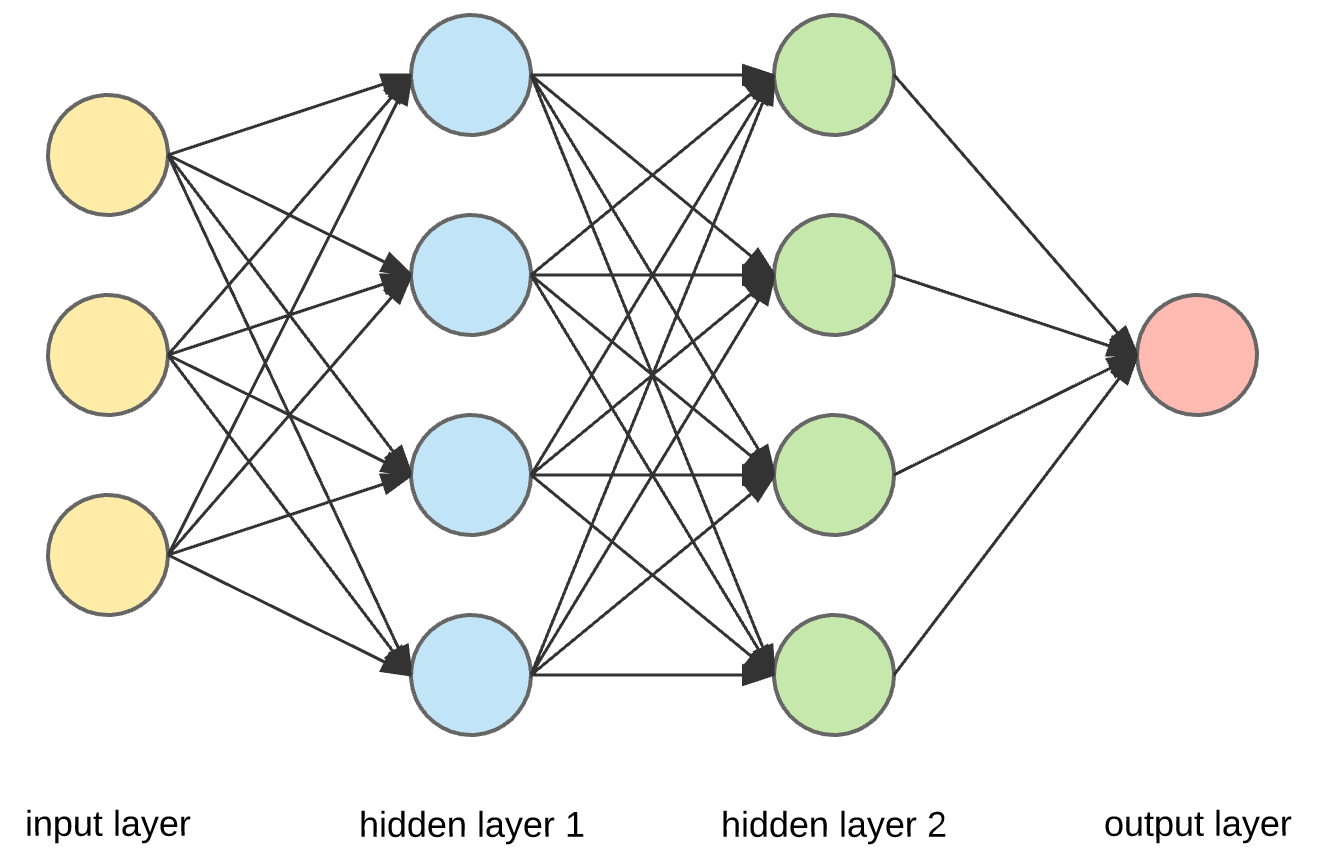

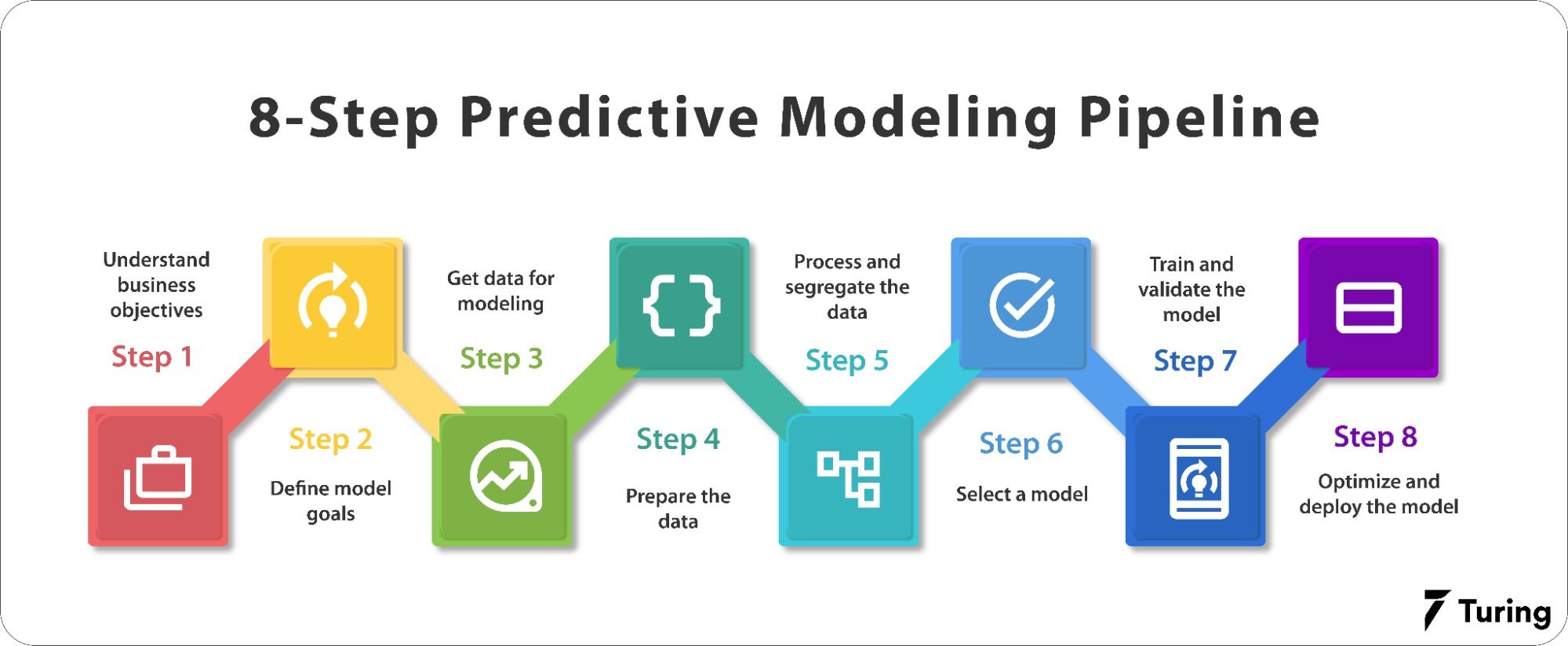

What’s particularly fascinating about reaching the Type-I benchmark is that in addition to energy, we’ll need advanced, AI-driven energy management systems. Efficient distribution of power will require a global supergrid, potentially leveraging high-temperature superconductors to minimize energy loss. My work with multi-cloud deployments and AI offers an excellent example of how to couple computational power with scalable infrastructure.

The biggest challenge in designing this infrastructure won’t just be physical; it will also require smart, adaptive systems that balance supply and demand. Imagine AI-driven processors monitoring energy consumption across the globe in real time and optimizing the flow of energy from terrestrial and space-based solar farms as well as fusion reactors. This is the type of highly linked infrastructure that will drive the future, a future I deeply believe in given my background in process automation and AI.
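
To illustrate the balancing problem in its simplest form, here is a toy sketch that meets a demand figure by drawing from several sources in a fixed priority order. It is a plain greedy rule standing in for the adaptive, AI-driven optimizer described above, not an implementation of one. The `Source` class, the `dispatch` function, and all capacity numbers are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

@dataclass
class Source:
    name: str
    available_w: float   # power currently available, in watts
    priority: int        # lower number is dispatched first

def dispatch(demand_w: float, sources: list[Source]) -> tuple[dict[str, float], float]:
    """Greedily allocate demand across sources; return (allocation, unmet demand)."""
    if demand_w < 0:
        raise ValueError("demand must be non-negative")
    allocation: dict[str, float] = {}
    remaining = demand_w
    for src in sorted(sources, key=lambda s: s.priority):
        draw = min(src.available_w, remaining)
        allocation[src.name] = draw
        remaining -= draw
    return allocation, remaining

# Hypothetical capacities, in watts.
sources = [
    Source("terrestrial_solar", 4e16, priority=1),
    Source("space_solar", 3e16, priority=2),
    Source("fusion", 5e16, priority=3),
]

allocation, unmet = dispatch(1e17, sources)
for name, watts in allocation.items():
    print(f"{name}: {watts:.1e} W")
print(f"Unmet demand: {unmet:.1e} W")
```

A real grid controller would also have to account for transmission losses, storage, forecasting, and regional constraints, which is where learned or adaptive methods would come in.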

Challenges Beyond Energy: Societal and Geopolitical Factors

Energy is just one piece of the Type-I puzzle. Achieving this level will also demand global cooperation, the resolution of geopolitical tensions, and collective efforts to mitigate societal disparities. These issues lie outside the realm of technology but are intertwined with the resource management necessary for such an ambitious transition. In a world deeply divided by political and economic inequalities, mobilizing resources on a planetary level will require unprecedented collaboration, which is perhaps a greater challenge than the technical aspects.

Inspiration from the Kardashev Scale

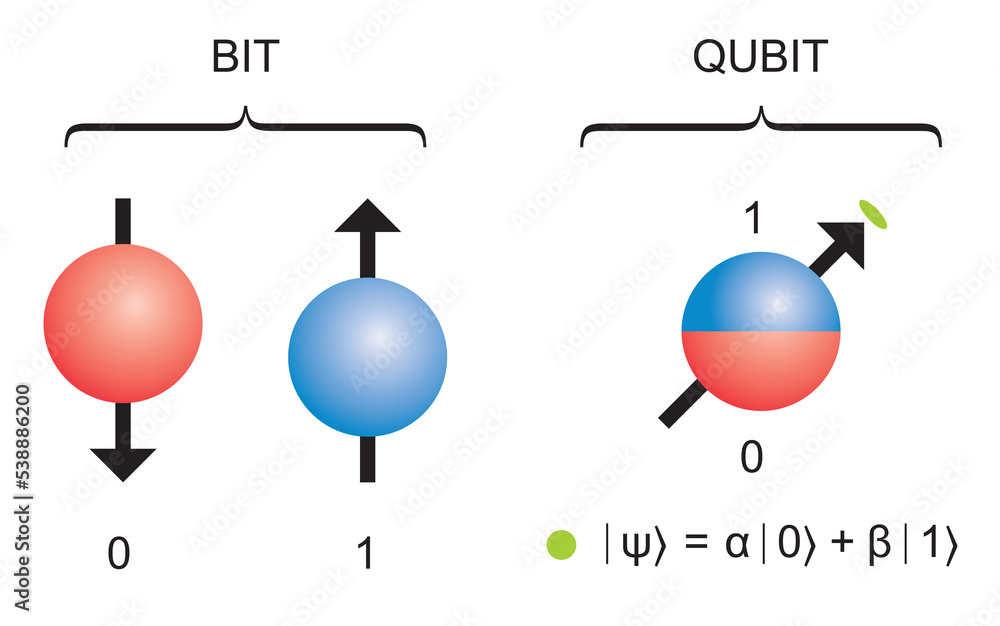

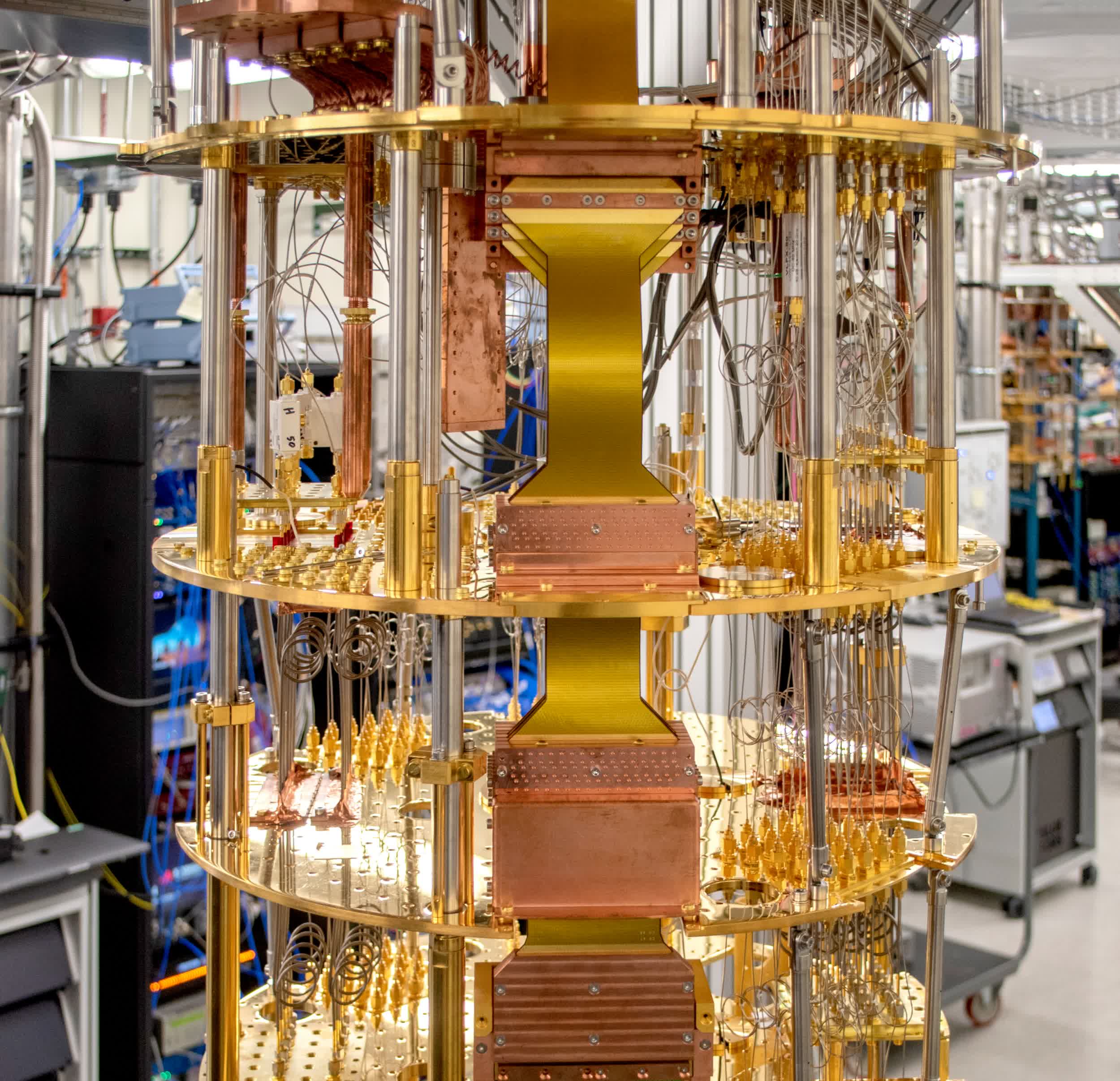

The Kardashev scale provides an exciting framework, especially when viewed through the lens of modern advancements like AI and renewable energy. With AI, quantum computing, and energy innovations laying the groundwork, we may witness the rise of humanity as a Type-I civilization within several centuries. But to get there, we must focus on building the necessary energy infrastructure now—whether through fusion, solar, or something yet undiscovered.

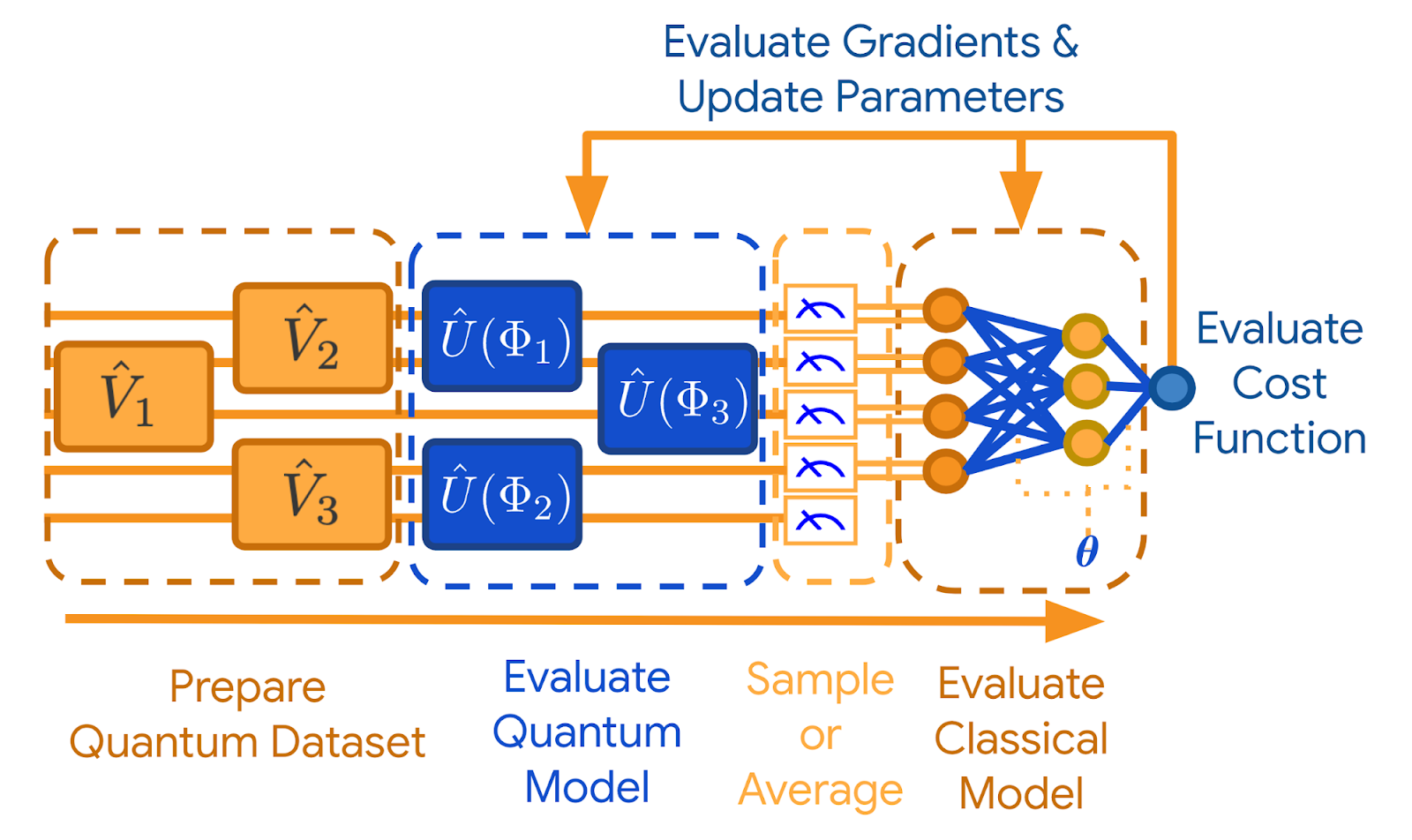

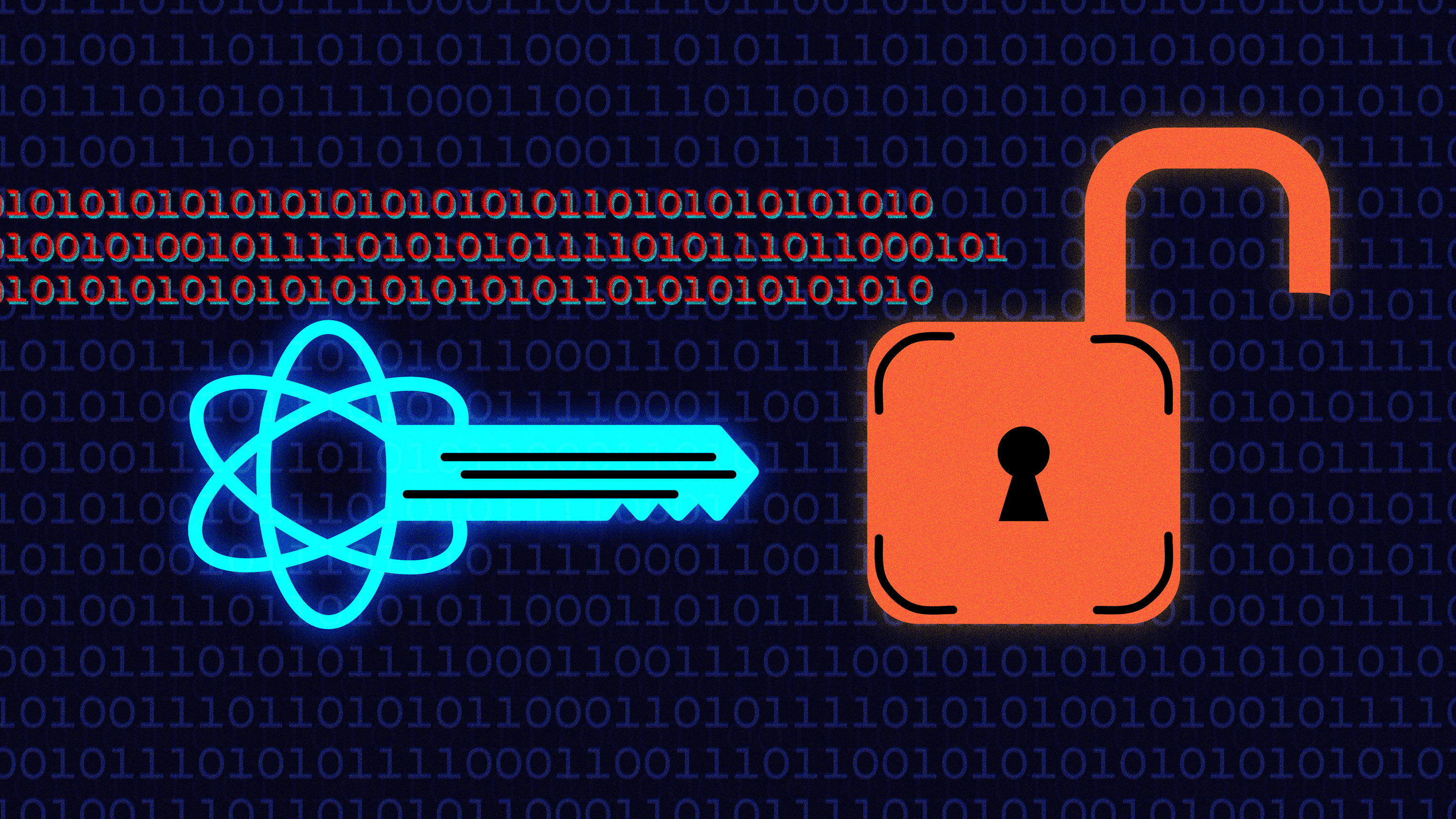

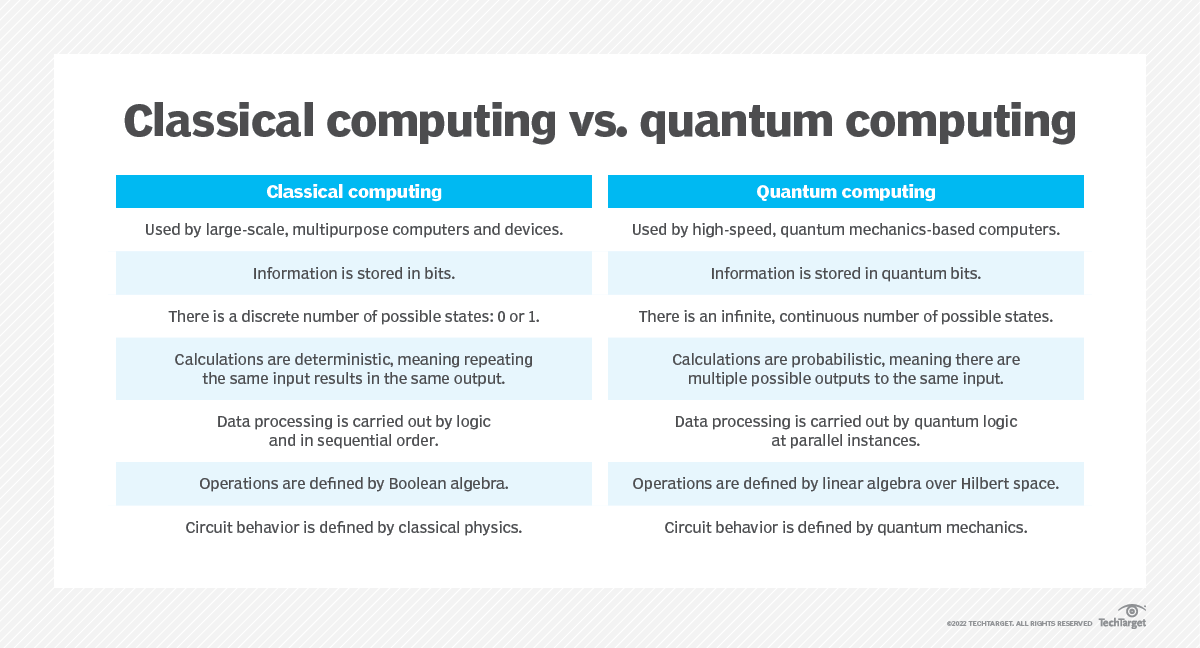

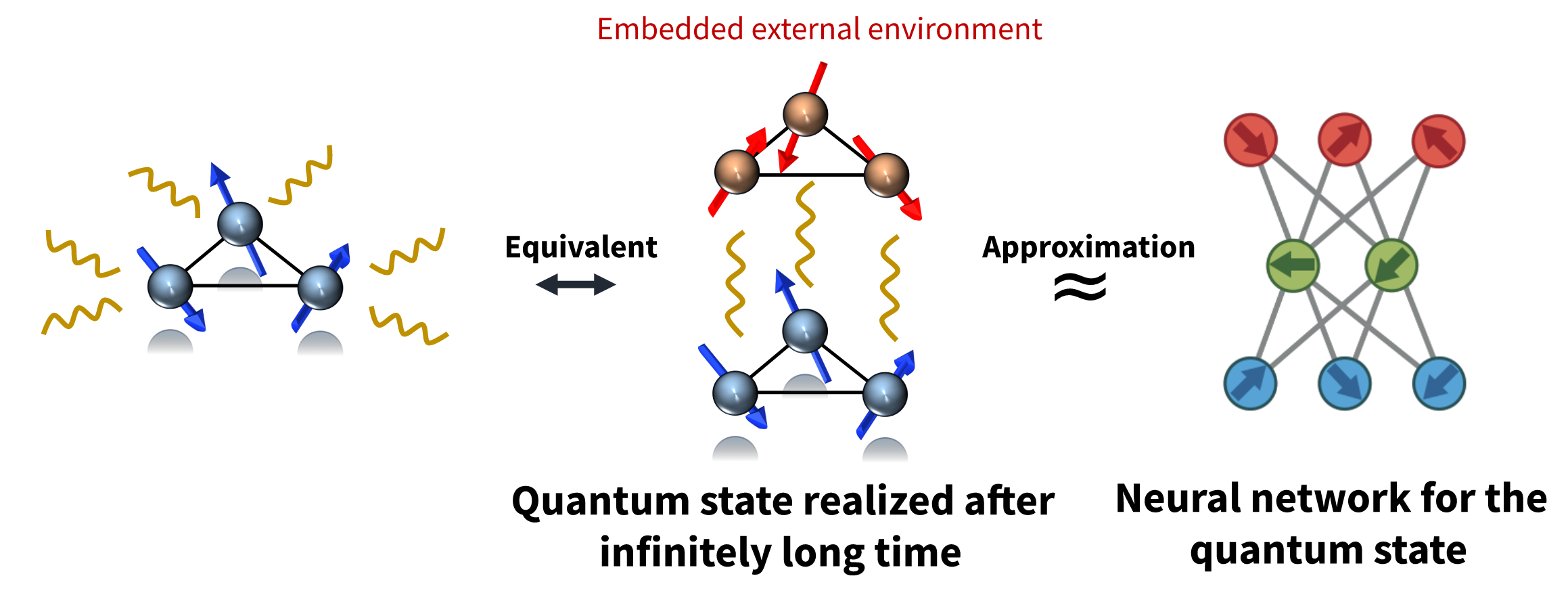

I’ve written previously about how technologies like machine learning and quantum computing have the potential to transform industries, and the same philosophy applies to energy. In pieces like “The Revolutionary Impact of Quantum Computing on Artificial Intelligence and Machine Learning,” I’ve discussed how computational advancements accelerate innovation. As we solve these technological challenges, perhaps we are on the cusp of tapping into far greater energy resources than Kardashev ever imagined.
