NVIDIA’s Breakthrough in Ray Tracing: Fusing Realism and Speed for Next-Gen Graphics

As someone who has lived and breathed tech and AI, between a machine learning focus at Harvard and cloud infrastructure work during my tenure at DBGM Consulting, Inc., I’ve witnessed some incredible breakthroughs. But what NVIDIA is rolling out with their latest work on real-time graphics rendering feels like a major paradigm shift. It’s something many thought was impossible: combining the fidelity of ray tracing with the astonishing speed of Gaussian splatting. Let’s break this down and explore why this could be a complete game-changer for the future of gaming, simulation, and even virtual reality.

What is Ray Tracing?

Before I dive into the ground-breaking fusion, let’s first understand what ray tracing is. In essence, ray tracing simulates how rays of light interact with virtual objects. It traces the paths of millions of individual rays as they bounce off surfaces, which is how it produces realistic shadows and reflections and replicates complex material behavior such as refraction through glass or the look of human skin. In visual graphics, it is responsible for some of the most breathtaking, photorealistic imagery we’ve ever seen.

However, ray tracing is a resource-intensive affair, often taking seconds to minutes (if not hours) per frame, making it less feasible for real-time applications like live video games or interactive virtual worlds. It’s beautiful, but also slow.
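To make "tracing a ray" a little more concrete, here is a minimal Python sketch of the two basic operations involved: finding where a ray hits an object, and computing the direction it bounces off in. The sphere, the function names `intersect_sphere` and `reflect`, and the example scene are my own illustrative assumptions, not anything taken from NVIDIA’s work.

```python
import math

def intersect_sphere(origin, direction, center, radius):
    """Return the nearest positive hit distance t along the ray, or None."""
    # Vector from the sphere center to the ray origin.
    oc = [o - c for o, c in zip(origin, center)]
    # Coefficients of the quadratic |origin + t*direction - center|^2 = radius^2.
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    for t in ((-b - sqrt_disc) / (2.0 * a), (-b + sqrt_disc) / (2.0 * a)):
        if t > 1e-9:
            return t
    return None

def reflect(direction, normal):
    """Mirror a direction about a unit surface normal: r = d - 2 (d . n) n."""
    dn = sum(d * n for d, n in zip(direction, normal))
    return [d - 2.0 * dn * n for d, n in zip(direction, normal)]

# Hypothetical scene: a camera at the origin looking down -z at a unit sphere.
origin = [0.0, 0.0, 0.0]
direction = [0.0, 0.0, -1.0]
center, radius = [0.0, 0.0, -5.0], 1.0

t = intersect_sphere(origin, direction, center, radius)
hit = [o + t * d for o, d in zip(origin, direction)]
normal = [(h - c) / radius for h, c in zip(hit, center)]
bounce = reflect(direction, normal)
print(t, hit, bounce)
```

A full renderer repeats this for every pixel, every object, and every bounce, which is a large part of why the cost adds up so quickly.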

In a previous article, I discussed the advancements in AI and machine learning and their role in real-time ray tracing in gaming graphics. What we see now with NVIDIA’s new hybrid approach feels like the next step in that trajectory.

Enter Gaussian Splatting

On the opposite end, we have a newer technique known as Gaussian splatting. Instead of dealing with complex 3D geometry such as triangle meshes, this method represents a scene as a cloud of soft, semi-transparent particles (3D Gaussians) that are projected, or “splatted,” onto the screen. It’s incredibly fast, rendering comfortably above real-time frame rates, which makes it highly suitable for live games or faster workflows in graphics design. Yet it has its downsides, most notably issues with reflective surfaces and detailed light transport, which make it less suited for high-end, realism-heavy applications.

While Gaussian splatting is fast, it suffers in areas where ray tracing excels, especially when it comes to sophisticated reflections or material rendering.
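For a rough feel of why splatting is cheap, here is a simplified Python sketch of how a single pixel might be shaded: every particle that overlaps the pixel contributes a soft, Gaussian-weighted blob of color, and the blobs are blended front to back. This is a toy version under my own assumptions (round 2D splats, a hypothetical `shade_pixel` helper and tuple layout); real implementations use anisotropic Gaussians and run on the GPU.

```python
import math

def gaussian_weight(px, py, cx, cy, sigma):
    """Isotropic 2D Gaussian falloff around a splat center (1.0 at the center)."""
    d2 = (px - cx) ** 2 + (py - cy) ** 2
    return math.exp(-0.5 * d2 / (sigma * sigma))

def shade_pixel(px, py, splats):
    """Blend splats front to back.

    Each splat is a tuple: (depth, cx, cy, sigma, opacity, rgb).
    Returns the blended color and the remaining transmittance.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    for depth, cx, cy, sigma, opacity, rgb in sorted(splats, key=lambda s: s[0]):
        alpha = opacity * gaussian_weight(px, py, cx, cy, sigma)
        for i in range(3):
            color[i] += transmittance * alpha * rgb[i]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-3:
            break  # pixel is effectively opaque; skip the remaining splats
    return color, transmittance

# Two hypothetical splats centered on the pixel: red in front, blue behind.
splats = [
    (2.0, 0.0, 0.0, 1.0, 0.5, (0.0, 0.0, 1.0)),
    (1.0, 0.0, 0.0, 1.0, 0.5, (1.0, 0.0, 0.0)),
]
pixel_color, remaining = shade_pixel(0.0, 0.0, splats)
print(pixel_color, remaining)
```

Notice that nothing here follows light as it bounces around the scene. Each pixel only blends whatever lands on it, which hints at why mirrors and glass are hard for this approach.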

Fusing the Best of Both Worlds

Now, here’s what sparks my excitement: NVIDIA’s latest innovation involves combining these two vastly different techniques into one unified algorithm. Think of it as blending the precision and quality of ray tracing with the efficiency of Gaussian splatting, something previously thought impossible. When I first read about this, I was skeptical, given how different the two approaches are. One works with geometry, the other with particles. One is slow but perfect, the other fast but flawed.

But after seeing some initial results and experiments, I’m warming up to this concept. NVIDIA has managed to address several long-standing issues with Gaussian splatting, boosting the overall detail and reducing the “blurry patches” that plagued the technique. In fact, their results rival traditional ray tracing in several key areas like specular reflections, shadows, and refractions.

And what’s even more stunning? These improvements come without ballooning the memory requirements, a key concern with large-scale simulations where memory constraints are often the bottleneck.
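To illustrate what "ray tracing of particles" could look like in principle, here is a small Python sketch. It is not NVIDIA’s algorithm. It is one plausible formulation under my own assumptions: each particle is a round (isotropic) 3D Gaussian, a ray samples each particle at the point where the Gaussian is strongest along the ray, and the samples are blended front to back. The names `max_response_along_ray` and `trace_particles` are hypothetical.

```python
import math

def max_response_along_ray(origin, direction, mean, sigma):
    """Return (t, response) where an isotropic 3D Gaussian peaks along the ray."""
    dd = sum(d * d for d in direction)
    # For an isotropic Gaussian, the peak is at the ray point closest to the mean.
    t = sum((m - o) * d for m, o, d in zip(mean, origin, direction)) / dd
    closest = [o + t * d for o, d in zip(origin, direction)]
    d2 = sum((c - m) ** 2 for c, m in zip(closest, mean))
    return t, math.exp(-0.5 * d2 / (sigma * sigma))

def trace_particles(origin, direction, particles):
    """Blend particles front to back along one ray.

    Each particle is a tuple: (mean, sigma, opacity, rgb).
    """
    hits = []
    for mean, sigma, opacity, rgb in particles:
        t, response = max_response_along_ray(origin, direction, mean, sigma)
        if t > 0.0:  # ignore particles behind the ray origin
            hits.append((t, opacity * response, rgb))
    hits.sort(key=lambda h: h[0])

    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for _, alpha, rgb in hits:
        for i in range(3):
            color[i] += transmittance * alpha * rgb[i]
        transmittance *= 1.0 - alpha
    return color

# Hypothetical scene: one particle on the ray, one off to the side, one behind.
particles = [
    ((0.0, 0.0, -3.0), 1.0, 0.5, (1.0, 0.0, 0.0)),
    ((1.0, 0.0, -5.0), 1.0, 1.0, (0.0, 1.0, 0.0)),
    ((0.0, 0.0, 2.0), 1.0, 1.0, (0.0, 0.0, 1.0)),
]
ray_color = trace_particles([0.0, 0.0, 0.0], [0.0, 0.0, -1.0], particles)
print(ray_color)
```

The appeal of a ray-based formulation is that the same function can be called again with a new origin and direction, for example a reflected or refracted ray, which is exactly the kind of effect that screen-space splatting struggles with.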

Breaking Down the Experiments

The landmark paper showcases four key experiments that push this hybrid technique to its limits:

| Experiment | What’s Tested | Key Results |
|---|---|---|
| Experiment 1 | Synthetic Objects | Ray tracing of points works well with synthetic data. |
| Experiment 2 | Real-World Scenes | Remarkable improvement in reflections and material rendering. |
| Experiment 3 | Large-Scale Scenes | Efficient memory usage despite complex rendering tasks. |
| Experiment 4 | Advanced Light Transport (Reflections, Shadows, Refractions) | High realism maintained for crucial light behaviors. |

Experiment 2: Where It All Changes

Perhaps the standout among these is Experiment 2, where real-world scenes are rendered. The results are breathtaking. Reflections are cleaner, materials like glass and marble respond to light more precisely, and properties like glossiness and texture are impeccably portrayed. These improvements bring the visual depth you’d expect from ray tracing, at a speed that holds up in practice and without eating up your hardware’s memory budget.

Experiment 4: Light Transport in Action

One of my favorite aspects of the experiments is the light transport simulation. The ability of the system to handle real-time reflections, shadows, and even complex refractions (like light bending through transparent objects) is truly a sight to behold. These are the “make or break” aspects that usually differentiate CGI rendering from live-action realism in movies or games.

Real-Time Rendering at Incredible Speeds

Perhaps even more important than the stunning graphical output is the speed. Rendering dozens of frames per second, up to 78 on some scenes, is unheard of in this quality tier. This could eventually lead to ray tracing technology becoming the backbone of future mainstream real-time graphics engines. Just a couple of years ago, hitting even 1 FPS for such visuals was a challenge, but now we’re seeing real-time frame rates in complex scenes.

And, for a final cherry on top, it uses half the memory of Gaussian splatting. That makes it a highly efficient solution that doesn’t eat into our precious VRAM budget, which is always a scarce and expensive resource in gaming and real-time simulation.
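To put the frame-rate figures above in perspective, it helps to convert them into per-frame time budgets. The short Python snippet below does that arithmetic for the two numbers quoted in this section (78 FPS and 1 FPS); the helper name `frame_time_ms` is just my own.

```python
def frame_time_ms(fps):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps

peak_ms = frame_time_ms(78)  # roughly 12.8 ms per frame
old_ms = frame_time_ms(1)    # 1000 ms per frame
speedup = old_ms / peak_ms   # how many times shorter each frame is

print(f"78 FPS: {peak_ms:.1f} ms per frame")
print(f" 1 FPS: {old_ms:.1f} ms per frame")
print(f"Speedup: {speedup:.0f}x")
```

In other words, going from 1 FPS to 78 FPS means each frame has to be finished in about one seventy-eighth of the time.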

A New Era for Real-Time Photorealism?

The implications of this tech advancement are profound. We are approaching an era where real-time rendering of photorealistic environments may become the standard, not the exception. Beyond gaming, industries like architectural visualization, filmmaking, and autonomous driving simulations will see monumental benefits from such advancements.

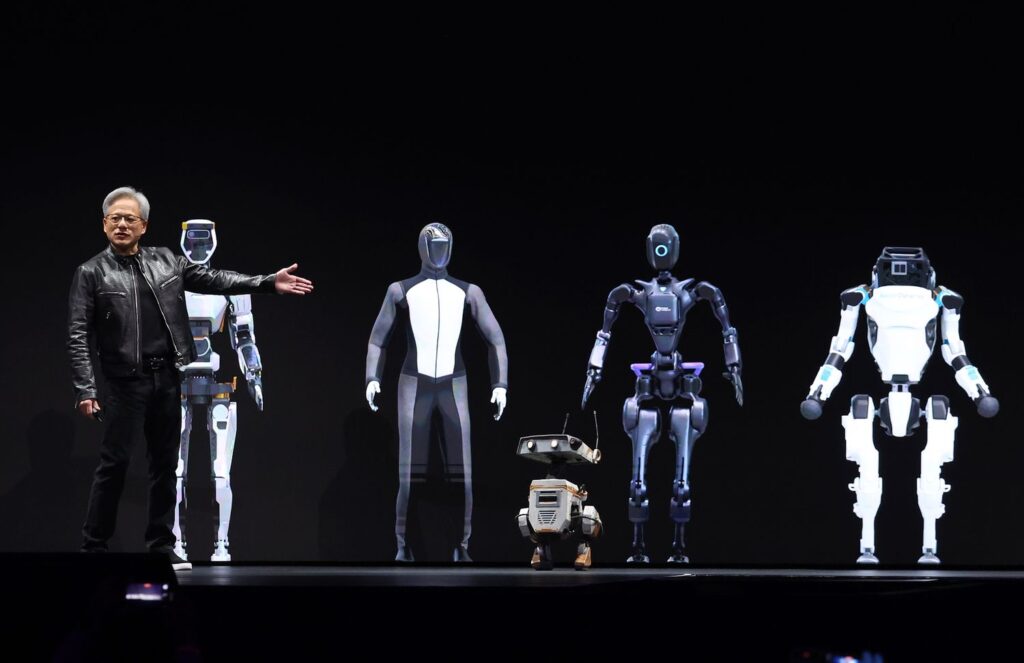

When it comes to autonomous driving, a topic I’ve covered in previous posts, real-time rendering of data could be used to enhance sensor-based simulations, helping vehicles make better decisions by processing visual cues faster while still maintaining accuracy. The vast applications of this breakthrough extend far beyond entertainment.

The Future is Bright (and Real-Time)

So, is this the future of rendering? Quite possibly. The confluence of speed and realism that NVIDIA has hit here is remarkable and has the potential to shift both gaming and many other industries. There is still room for improvement, including occasional blurry patches, but this technology is pushing boundaries further than most imagined possible. With time, research, and more iterations, we’ll likely see even greater refinements in the near future.

Ultimately, I find this kind of innovation in visual technologies a real reason for optimism about the future of AI and computational graphics.

