Advancing Model Diagnostics in Machine Learning: A Deep Dive

In the rapidly evolving world of artificial intelligence (AI) and machine learning (ML), the reliability and efficacy of models determine the success of an application. As we continue from our last discussion on the essentials of model diagnostics, it’s imperative to delve deeper into the intricacies of diagnosing ML models, the challenges encountered, and emerging solutions paving the way for more robust, trustworthy AI systems.

Understanding the Core of Model Diagnostics

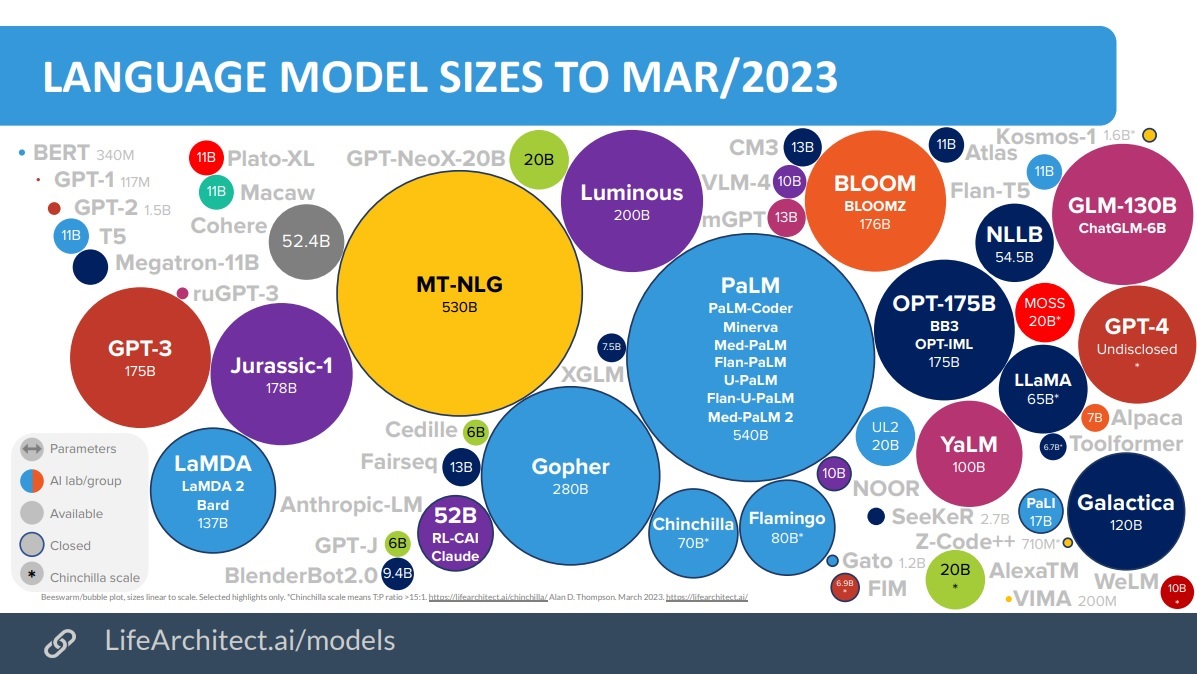

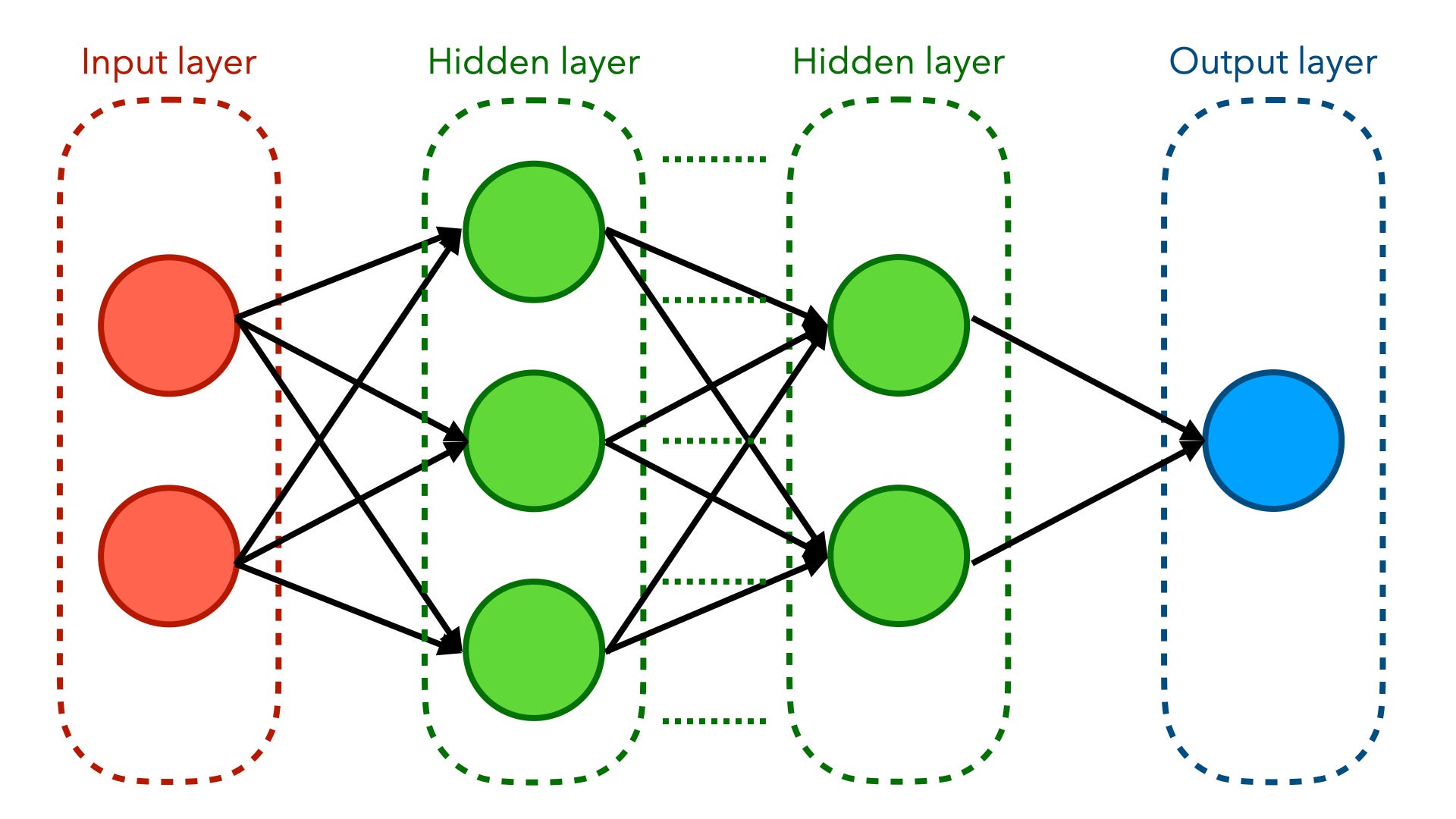

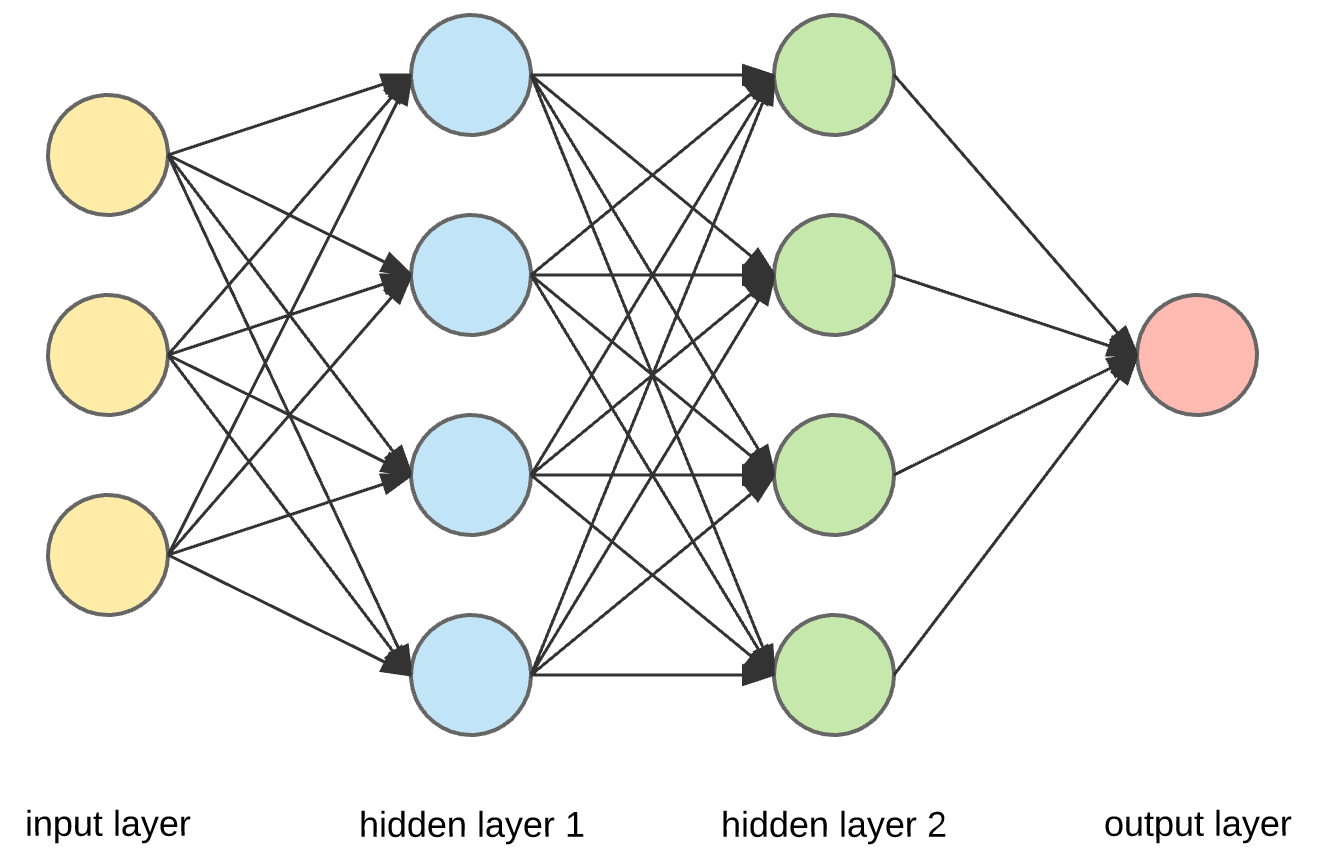

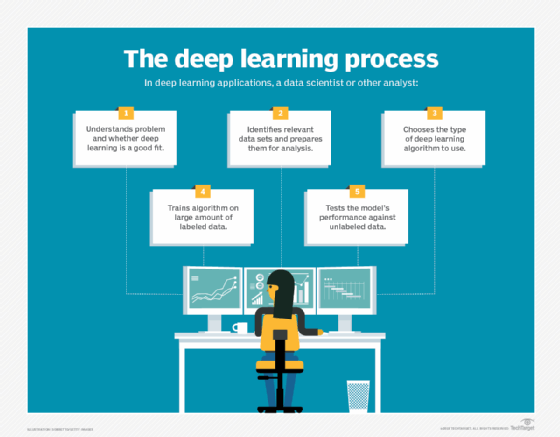

Model diagnostics in machine learning encompass a variety of techniques and practices for evaluating the performance and reliability of models under diverse conditions. These techniques show how models interact with data and help identify potential biases, excessive variance, and errors that could compromise outcomes. As models grow more complex, especially with the advent of Large Language Models (LLMs), advanced diagnostic methods have become more critical than ever.

Crucial Aspects of Model Diagnostics

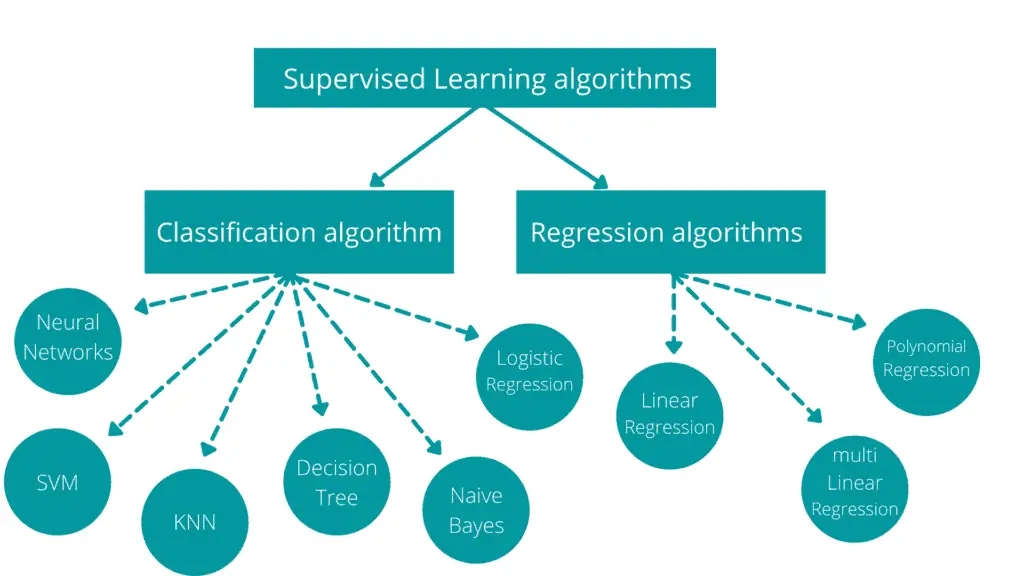

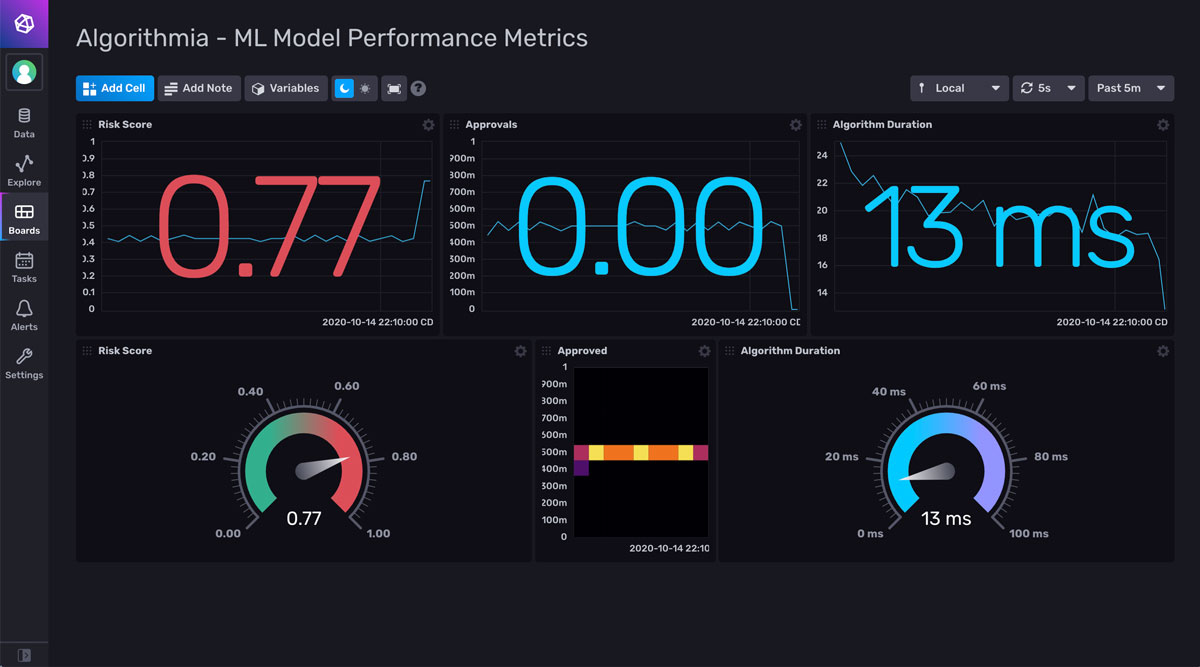

- Performance Metrics: Accuracy, precision, recall, and F1 score for classification models; mean squared error (MSE) and R-squared (R²) for regression models.

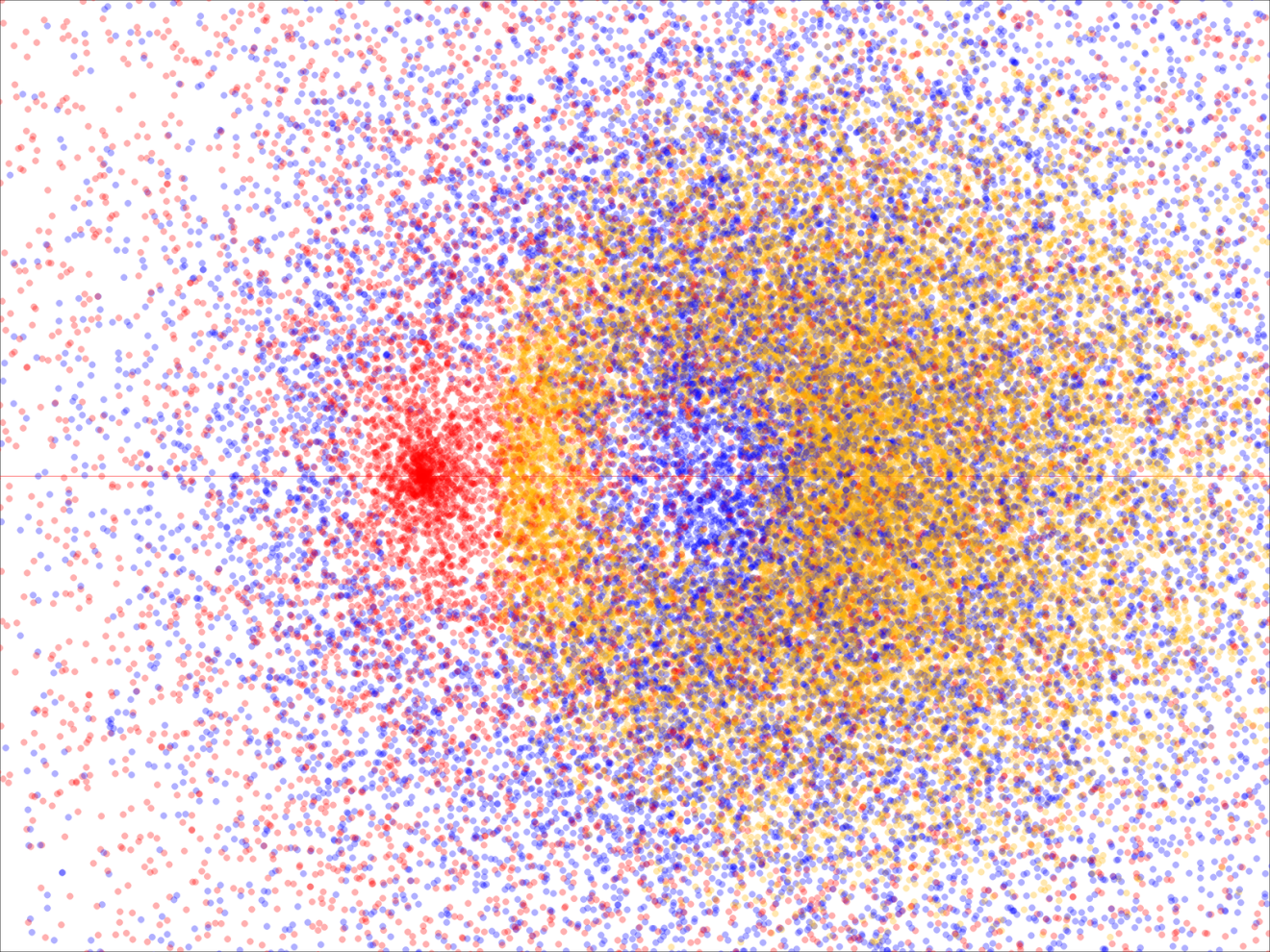

- Error Analysis: Detailed examination of error types and their distribution to pinpoint systematic issues within the model.

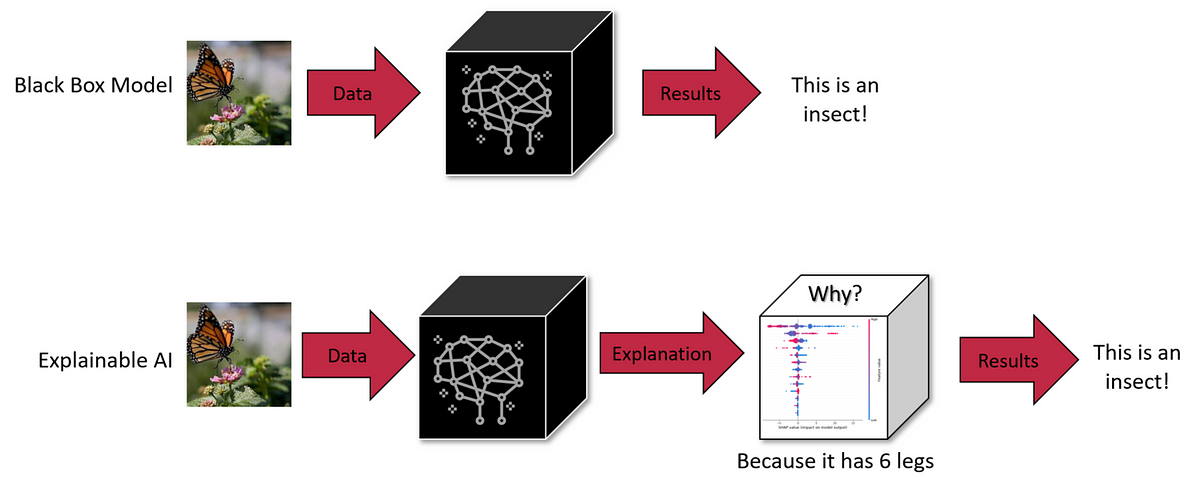

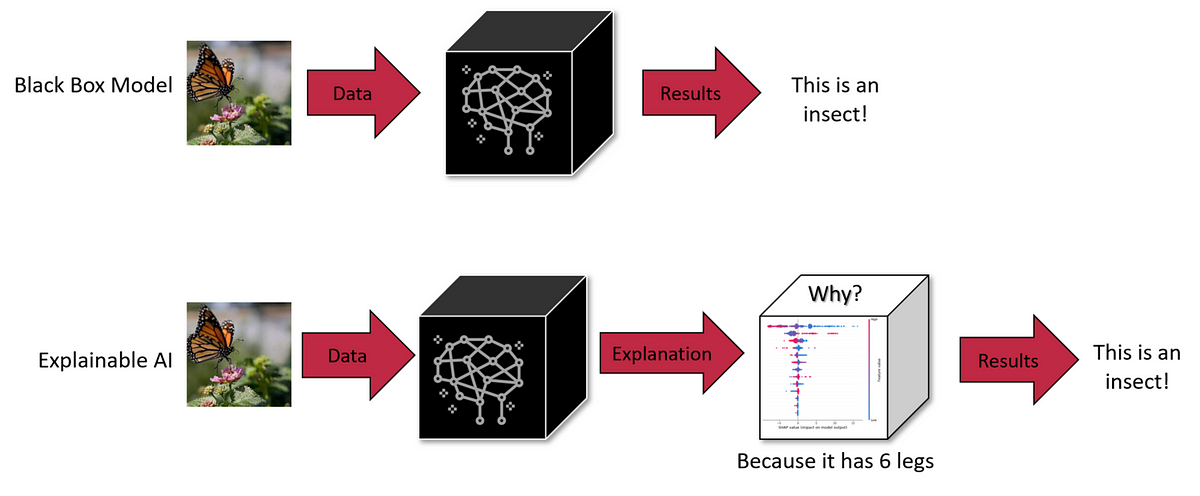

- Model Explainability: Tools and methodologies such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) that attribute individual predictions to input features, revealing the reasoning behind model outputs.
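To make the metrics above concrete, here is a minimal sketch in plain Python (no third-party dependencies) that derives accuracy, precision, recall, and F1 from confusion counts, plus MSE and R² for regression. The function names are hypothetical; in practice, `sklearn.metrics` provides maintained equivalents such as `accuracy_score`, `precision_score`, `recall_score`, `f1_score`, `confusion_matrix`, `mean_squared_error`, and `r2_score`. Keeping the raw false-positive and false-negative counts also supports error analysis, since it shows which kind of mistake dominates.

```python
from typing import Dict, Sequence, Tuple


def confusion_counts(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn) for a binary task, treating `positive` as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1  # correctly flagged
            else:
                fp += 1  # false alarm
        elif t == positive:
            fn += 1  # missed positive
        else:
            tn += 1  # correctly ignored
    return tp, fp, tn, fn


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1, plus raw error counts for error analysis."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positives": fp,
        "false_negatives": fn,
    }


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """Mean squared error and R^2 = 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    mean_y = sum(y_true) / n
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)  # total sum of squares
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all targets are equal")
    return {"mse": ss_res / n, "r2": 1.0 - ss_res / ss_tot}
```

For example, with `y_true = [1, 0, 1, 1, 0, 1]` and `y_pred = [1, 0, 0, 1, 1, 1]` there are three true positives, one false positive, one false negative, and one true negative, so accuracy is 4/6 while precision, recall, and F1 are all 0.75.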
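Libraries such as SHAP estimate Shapley values efficiently, but the underlying idea fits in a few lines. The sketch below computes exact Shapley values by brute force: each feature’s attribution is its marginal contribution to the model output, averaged over all coalitions S of the other features with the standard weights |S|!(n - |S| - 1)!/n!. It assumes a single, hypothetical baseline input supplies the values of features that are "absent" from a coalition; this is only one possible value function (SHAP typically averages over background data instead). Because it enumerates every coalition, it is practical only for a handful of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley attributions of model(x) relative to model(baseline)."""
    n = len(x)
    if len(baseline) != n:
        raise ValueError("x and baseline must have the same length")

    def value(coalition: frozenset) -> float:
        # Features in the coalition take their value from x; the rest from the baseline.
        mixed = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {i}) - value(s))  # marginal contribution of i
        phi.append(total)
    return phi
```

Two sanity checks follow from the definition: for a linear model the attribution of feature i reduces to wᵢ(xᵢ - bᵢ), and in general the attributions sum to model(x) - model(baseline) (the efficiency property).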

Emerging Challenges in Model Diagnostics

As machine learning models grow more complex, especially those designed for tasks such as natural language processing (NLP) and autonomous systems, diagnosing them has become increasingly difficult. Large Language Models, such as those built on GPT (Generative Pre-trained Transformer) architectures, present unique challenges:

- Transparency: LLMs operate as “black boxes,” making it challenging to understand their decision-making processes.

- Scalability: Diagnosing models at scale, especially when they are integrated into varied applications, introduces logistical and computational hurdles.

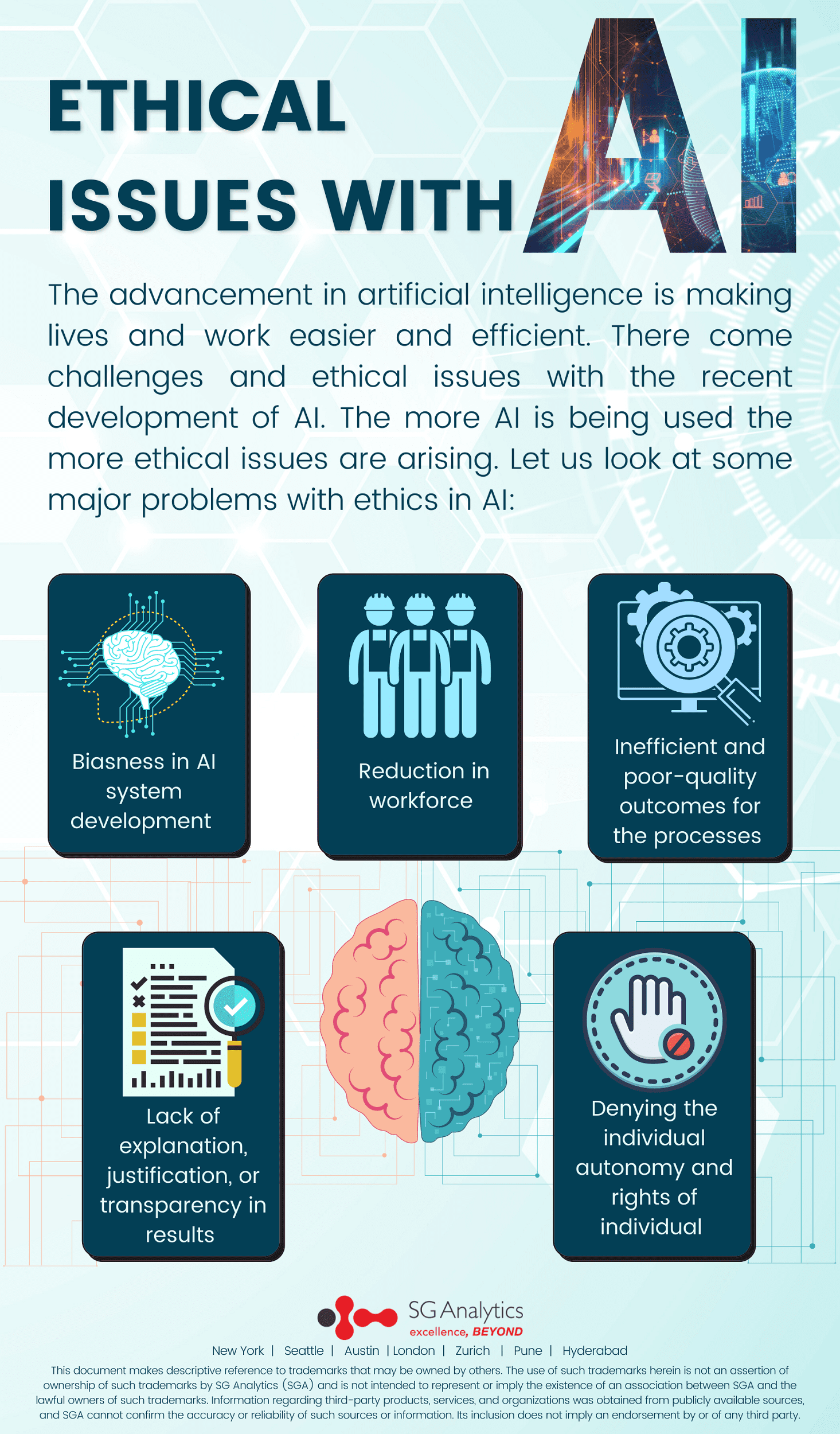

- Data Bias and Ethics: Identifying and mitigating biases within models to ensure fair and ethical outcomes.
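One common way to ease the computational side of diagnosing at scale is to compute metrics from small, mergeable summaries rather than collecting every prediction in one place. The sketch below is an illustrative approach, not one prescribed by any particular tool: it keeps a running sum of squared residuals plus Welford-style running statistics of the targets, so MSE and R² can be computed per data shard and the shards combined afterwards with Chan et al.’s pairwise merge formula. The class name is hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class StreamingRegressionStats:
    """Mergeable summary from which MSE and R^2 can be computed."""

    count: int = 0
    sse: float = 0.0  # sum of squared residuals
    mean: float = 0.0  # running mean of the targets
    m2: float = 0.0  # sum of squared deviations of the targets from their mean

    def update(self, y_true: Sequence[float], y_pred: Sequence[float]) -> None:
        """Fold one batch into the summary (Welford's online algorithm for mean and m2)."""
        if len(y_true) != len(y_pred):
            raise ValueError("y_true and y_pred must have the same length")
        for t, p in zip(y_true, y_pred):
            self.sse += (t - p) ** 2
            self.count += 1
            delta = t - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (t - self.mean)

    def merge(self, other: "StreamingRegressionStats") -> "StreamingRegressionStats":
        """Combine two summaries, e.g. from different workers (Chan et al. pairwise update)."""
        n = self.count + other.count
        if n == 0:
            return StreamingRegressionStats()
        delta = other.mean - self.mean
        return StreamingRegressionStats(
            count=n,
            sse=self.sse + other.sse,
            mean=self.mean + delta * other.count / n,
            m2=self.m2 + other.m2 + delta * delta * self.count * other.count / n,
        )

    def mse(self) -> float:
        if self.count == 0:
            raise ValueError("no data has been added")
        return self.sse / self.count

    def r2(self) -> float:
        if self.m2 == 0:
            raise ValueError("R^2 is undefined when all targets are equal")
        return 1.0 - self.sse / self.m2  # m2 equals the total sum of squares
```

Each worker can call `update` on its own batches and ship only four numbers back; merging summaries gives the same MSE and R² (up to floating-point rounding) as evaluating the full dataset at once.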
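Identifying bias usually starts by comparing model behavior across groups. The sketch below computes two widely used group-fairness gaps for a binary classifier: the demographic parity difference (the spread in positive-prediction rates across groups) and the equal opportunity difference (the spread in true-positive rates). The function names and toy data are illustrative assumptions; libraries such as Fairlearn offer maintained implementations, and which metric is appropriate depends on the application.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def group_rates(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> Dict[Hashable, Dict[str, float]]:
    """Per-group selection rate P(pred=1) and true-positive rate P(pred=1 | true=1)."""
    if not len(y_true) == len(y_pred) == len(groups):
        raise ValueError("all inputs must have the same length")
    size: Dict[Hashable, int] = defaultdict(int)
    selected: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    true_pos: Dict[Hashable, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        size[g] += 1
        selected[g] += int(p == 1)
        if t == 1:
            positives[g] += 1
            true_pos[g] += int(p == 1)
    return {
        g: {
            "selection_rate": selected[g] / size[g],
            # NaN when a group has no actual positives, so its TPR is undefined.
            "tpr": true_pos[g] / positives[g] if positives[g] else math.nan,
        }
        for g in size
    }


def fairness_gaps(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> Dict[str, float]:
    """Largest between-group gap in selection rate and in true-positive rate."""
    rates = group_rates(y_true, y_pred, groups)
    sel = [r["selection_rate"] for r in rates.values()]
    tprs = [r["tpr"] for r in rates.values() if not math.isnan(r["tpr"])]
    return {
        "demographic_parity_difference": max(sel) - min(sel),
        "equal_opportunity_difference": max(tprs) - min(tprs) if tprs else math.nan,
    }
```

For instance, if group A receives positive predictions 75% of the time and group B 25% of the time, the demographic parity difference is 0.5; a gap of zero on either metric means the groups are treated identically by that criterion, not that the model is fair in every sense.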

As a consultant specializing in AI and machine learning, I put these challenges at the forefront of my work. With a background in Information Systems from Harvard University and experience with machine learning algorithms in autonomous robotics, I’ve witnessed firsthand the evolution of diagnostic methodologies aimed at enhancing model transparency and reliability.

Innovations in Model Diagnostics

The landscape of model diagnostics is continually evolving, with new tools and techniques emerging to address the complexities of today’s ML models. Some of the promising developments include:

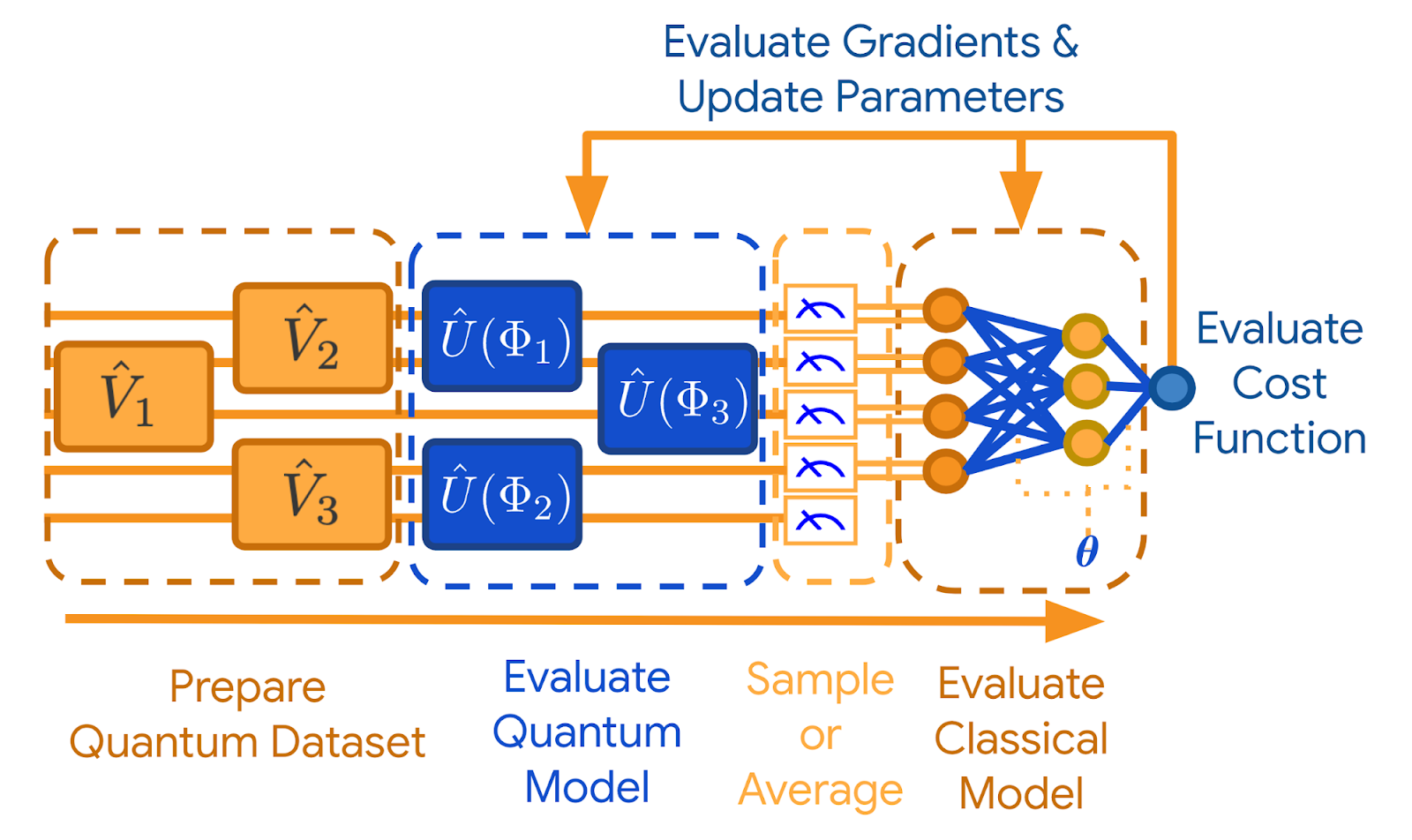

- Automated Diagnostic Tools: Automation frameworks that streamline the diagnostic process, improving efficiency and accuracy.

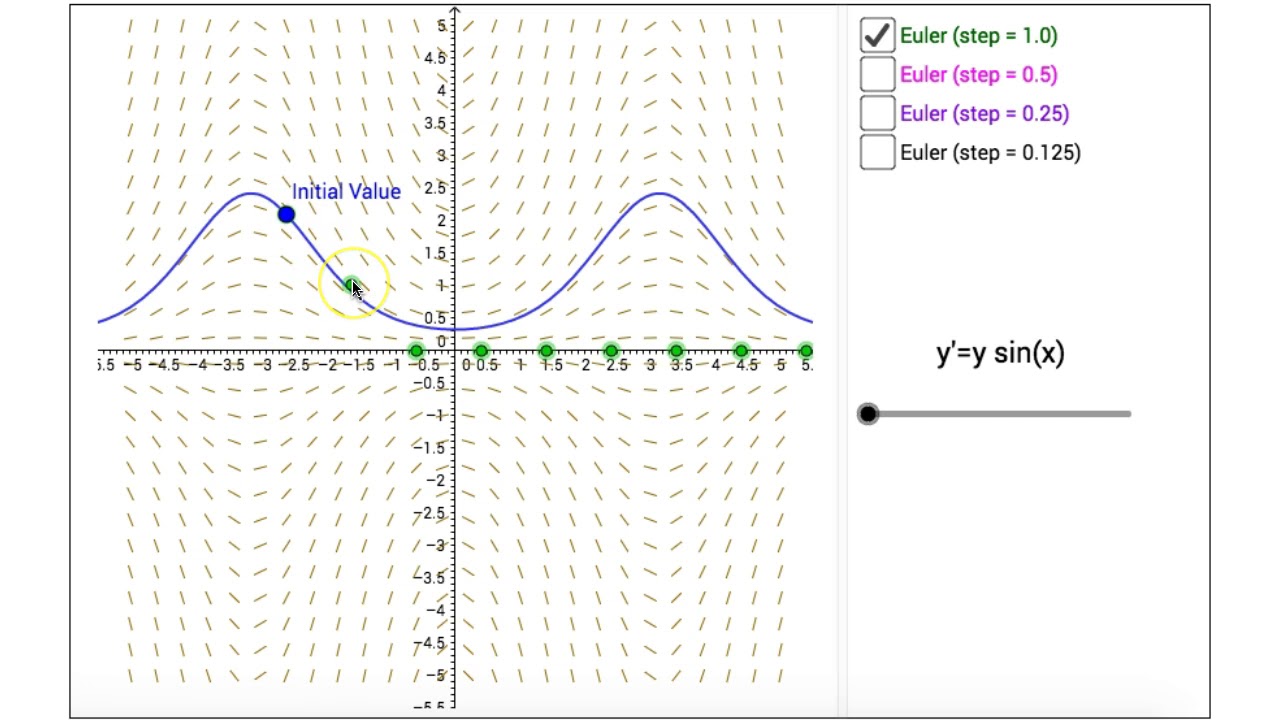

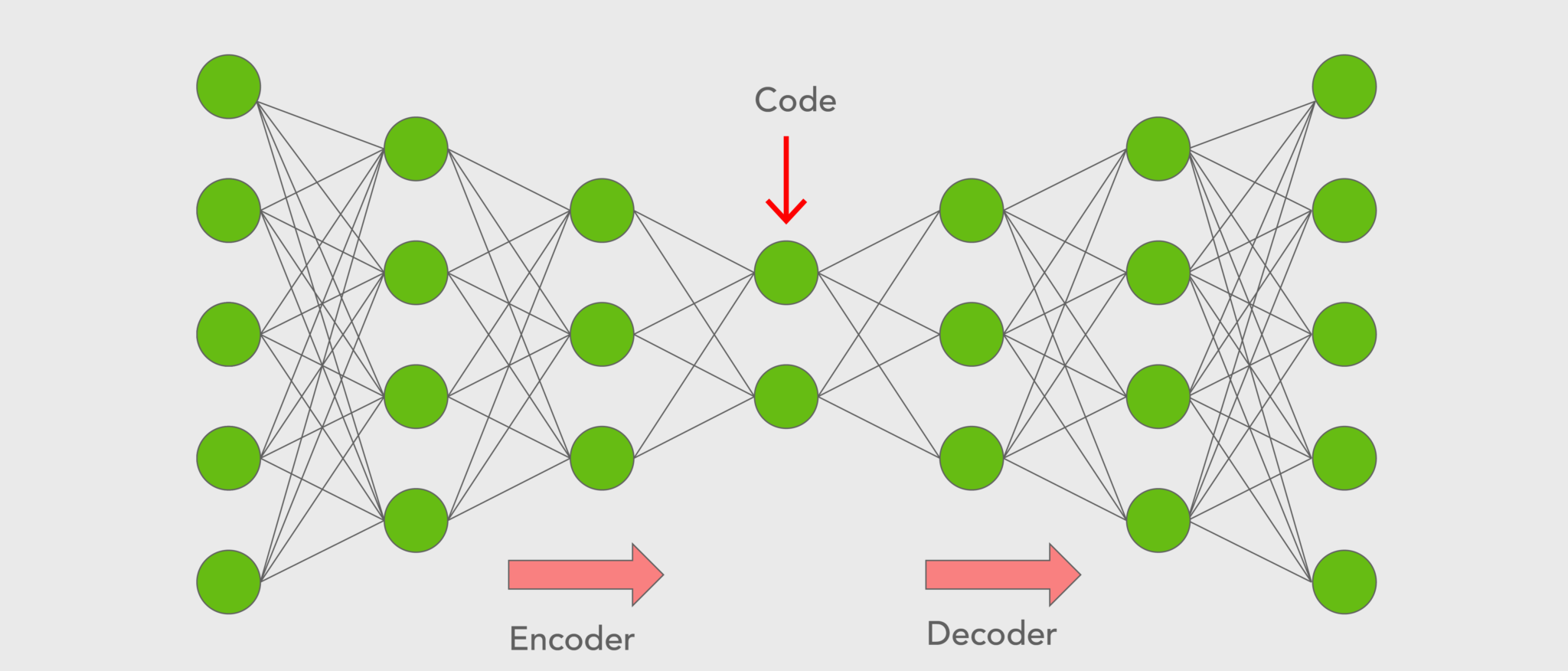

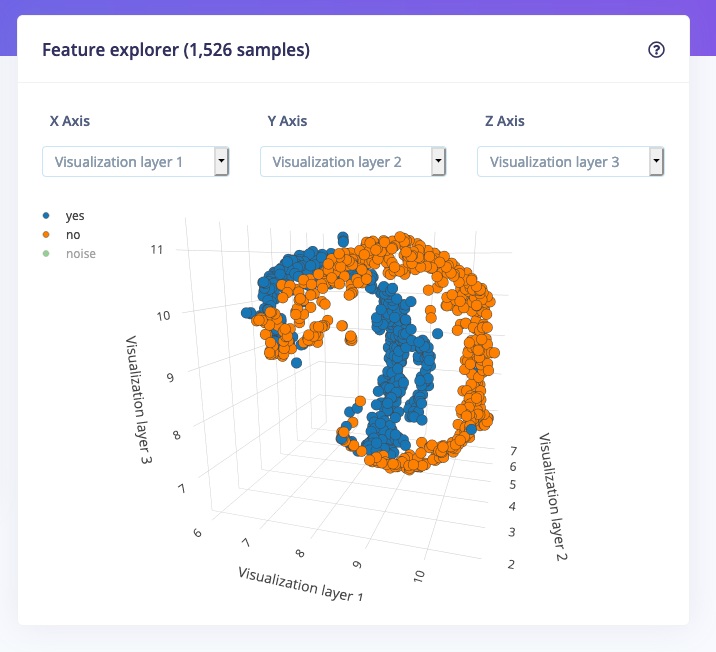

- Visualization Tools: Advanced visualization software that offers intuitive insights into model behavior and performance.

- AI Ethics and Bias Detection: Tools designed to detect and mitigate biases within AI models, ensuring fair and ethical outcomes.
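At its simplest, an automated diagnostic tool runs a fixed suite of checks on every evaluation and flags any metric that crosses a threshold. The sketch below shows that pattern; the `Check` structure, the metric helpers, and especially the threshold values are hypothetical placeholders that a team would set for its own application.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Metric = Callable[[Sequence[int], Sequence[int]], float]


@dataclass(frozen=True)
class Check:
    name: str
    metric: Metric
    threshold: float
    higher_is_better: bool = True


@dataclass(frozen=True)
class CheckResult:
    name: str
    value: float
    passed: bool


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    on_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(p == 1 for p in on_positives) / len(on_positives) if on_positives else 0.0


def false_positive_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    on_negatives = [p for t, p in zip(y_true, y_pred) if t == 0]
    return sum(p == 1 for p in on_negatives) / len(on_negatives) if on_negatives else 0.0


def run_checks(
    checks: Sequence[Check], y_true: Sequence[int], y_pred: Sequence[int]
) -> List[CheckResult]:
    """Evaluate every check and record whether its metric meets the threshold."""
    results = []
    for check in checks:
        value = check.metric(y_true, y_pred)
        if check.higher_is_better:
            passed = value >= check.threshold
        else:
            passed = value <= check.threshold
        results.append(CheckResult(check.name, value, passed))
    return results


# Hypothetical thresholds; real values depend on the application.
DEFAULT_CHECKS = [
    Check("accuracy", accuracy, threshold=0.9),
    Check("recall", recall, threshold=0.7),
    Check("false_positive_rate", false_positive_rate, threshold=0.2, higher_is_better=False),
]
```

Hooked into a training or CI pipeline, a non-empty list of failed checks could block promotion of a model. Keeping each check as data (name, metric, threshold) makes it easy to add new ones, such as a cap on a between-group fairness gap, without changing the runner.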

Conclusion: The Future of Model Diagnostics

As we venture further into the age of AI, the role of model diagnostics will only grow in importance. Ensuring the reliability, transparency, and ethical compliance of AI systems is not just a technical necessity but a societal imperative. The challenges are significant, but with ongoing research, collaboration, and innovation, we can navigate these complexities to harness the full potential of machine learning technologies.

Staying informed and equipped with the latest diagnostic tools and techniques is crucial for any professional in the field of AI and machine learning. As we push the boundaries of what these technologies can achieve, let us also commit to the rigorous, detailed work of diagnosing and improving our models. The future of AI depends on it.
