Integrating Machine Learning and AI into Modern Businesses: A Personal Insight

In the rapidly evolving landscape of technology, Artificial Intelligence (AI) and Machine Learning (ML) are not just buzzwords but integral components of innovative business strategies. As someone who has navigated the complexities of these technologies, both academically at Harvard and professionally through DBGM Consulting, Inc., I’ve experienced firsthand the transformative power they hold. In this article, I aim to shed light on how businesses can leverage AI and ML, drawing from my journey and the lessons learned along the way.

Understanding the Role of AI and ML in Business

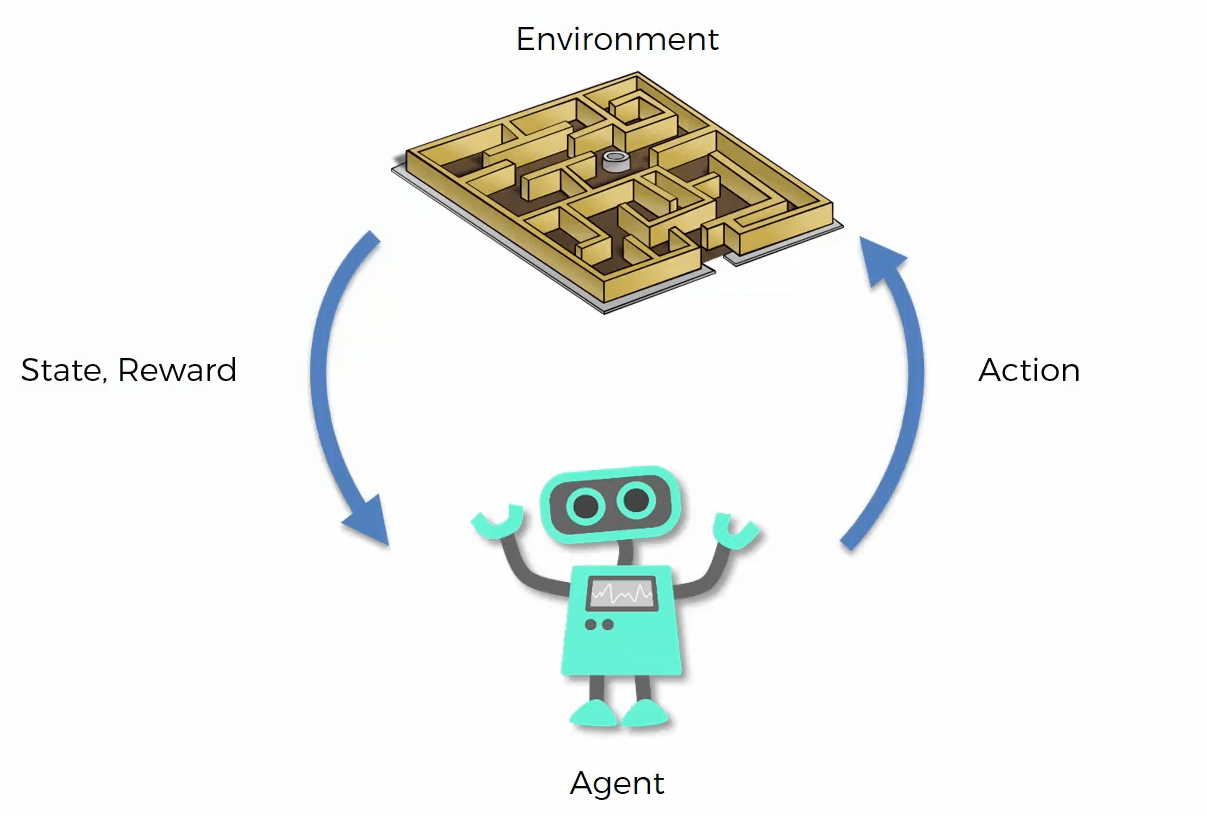

At their core, AI and ML offer a distinct capability: processing and analyzing data at a scale and speed that people cannot match unaided. For businesses, this means greater efficiency, better forecasting of market trends, and personalized customer experiences. My work on machine learning algorithms for self-driving robots at Harvard showed me that these technologies can not only automate processes but also produce solutions that were previously out of reach.

AI and ML in My Consulting Practice

Running DBGM Consulting, Inc. has given me a unique vantage point from which to observe and implement AI and ML solutions across industries. From automating mundane tasks with chatbots to deploying sophisticated ML models that predict consumer behavior, the applications are as varied as they are impactful. My tenure at Microsoft as a Senior Solutions Architect further reinforced my belief in the transformative potential of cloud-based AI services and tools for businesses eager to step into the future.

Case Study: Process Automation in Healthcare

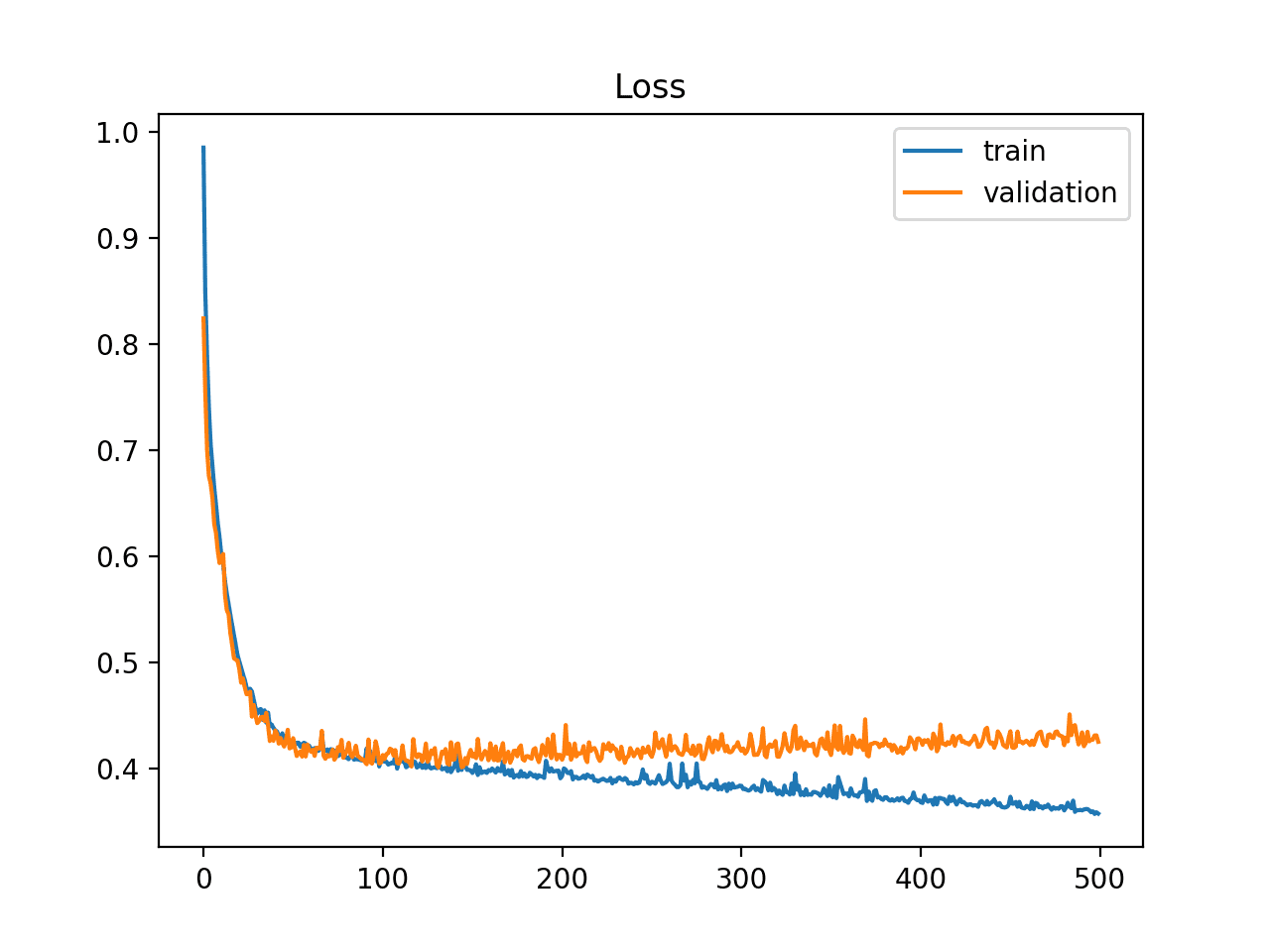

One notable project under my firm involved developing a machine learning model for a healthcare client. This model was designed to predict patient no-shows, combining historical data and patient behavior patterns. Not only did this reduce operational costs, but it also enabled better resource allocation, ensuring that patients needing immediate care were prioritized.
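To make the idea concrete, here is a minimal sketch of how a no-show predictor could be built. It is not the model from the client project: the two features (prior no-show rate and booking lead time), the synthetic data, and every function name are assumptions made purely for illustration, and plain logistic regression trained by gradient descent stands in for whatever technique a production system would use.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def make_synthetic_appointments(n, seed=0):
    """Generate fake appointments; both features are hypothetical and scaled to 0..1."""
    rng = random.Random(seed)
    rows, labels = [], []
    for _ in range(n):
        prior_no_show_rate = rng.random()  # share of past appointments missed
        lead_time = rng.random()           # booking lead time, scaled to 0..1
        # Assumed ground truth: both features raise the chance of a no-show.
        true_p = sigmoid(-2.0 + 3.0 * prior_no_show_rate + 1.5 * lead_time)
        rows.append([prior_no_show_rate, lead_time])
        labels.append(1 if rng.random() < true_p else 0)
    return rows, labels


def train_logistic(rows, labels, lr=0.5, epochs=1000):
    """Fit logistic regression with batch gradient descent."""
    n = len(rows)
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(rows, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            err = p - y  # gradient of the log loss w.r.t. the logit
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict_no_show(weights, bias, x):
    """Return the estimated probability that an appointment is missed."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


rows, labels = make_synthetic_appointments(400)
weights, bias = train_logistic(rows, labels)

# Score two hypothetical patients: a reliable one and a frequently absent one.
low_risk = predict_no_show(weights, bias, [0.05, 0.1])
high_risk = predict_no_show(weights, bias, [0.9, 0.9])
print(f"low-risk patient:  {low_risk:.2f}")
print(f"high-risk patient: {high_risk:.2f}")
```

In practice, scores like these would feed a scheduling decision, such as sending extra reminders to high-risk appointments. A real deployment would also need held-out evaluation and the privacy safeguards discussed later in this article.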

Challenges and Considerations

- Data Privacy and Security: With great power comes great responsibility. Ensuring the privacy and security of the data used to train AI and ML models is paramount. In my work, especially on the security side of consulting, establishing robust access governance and compliance protocols is a non-negotiable foundation.

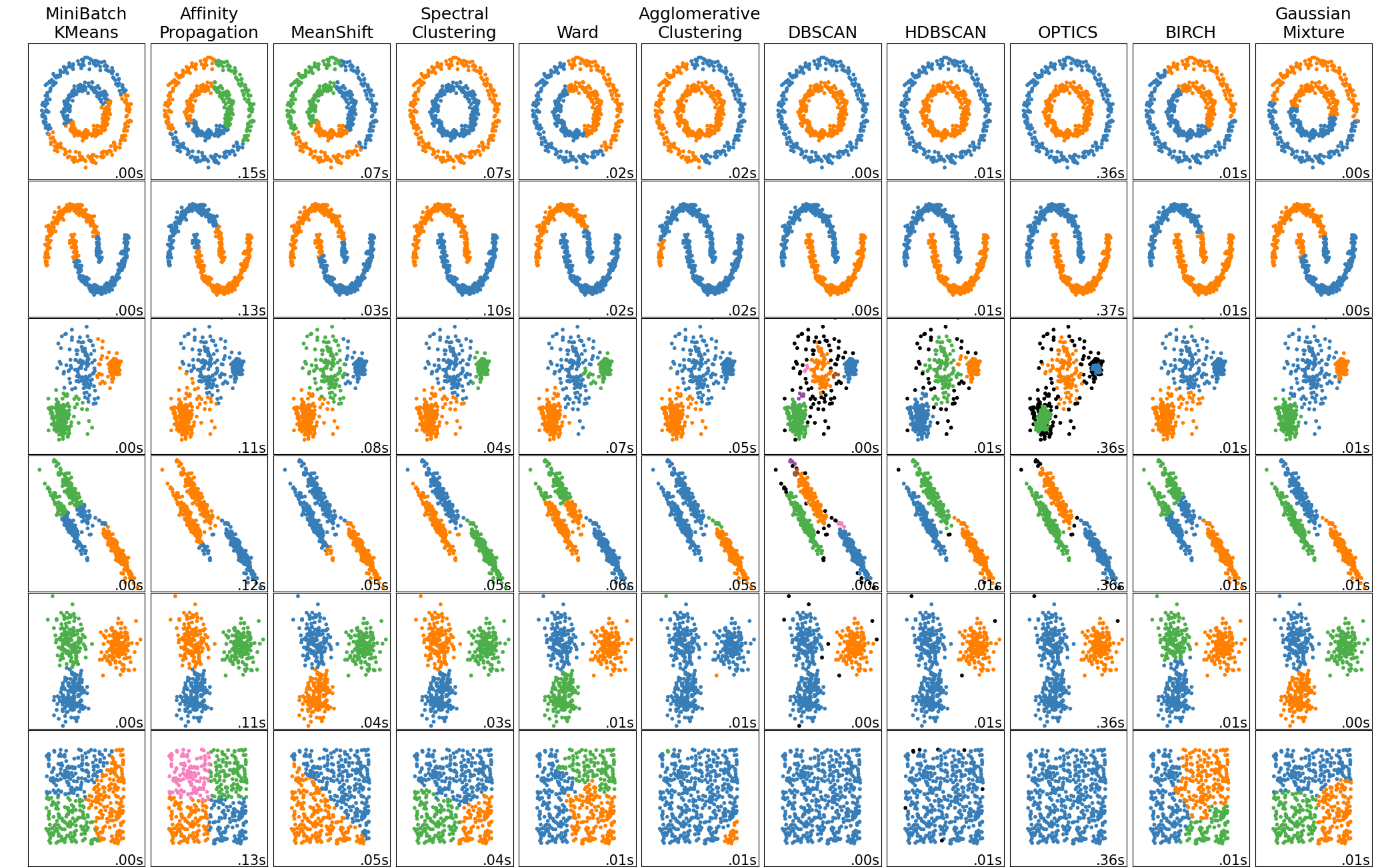

- Algorithm Bias: AI and ML models are only as unbiased as the data fed into them. Training these models on a diverse, representative data set is crucial to preventing discrimination and bias, something I constantly advocate for in my projects.

- Integration Challenges: Merging AI and ML into existing legacy systems presents its own set of challenges. My expertise in legacy infrastructure, particularly in SCCM and PowerShell, has been invaluable in navigating these waters.
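The algorithm bias point above can be checked with very little code. The sketch below is one simple audit among many, not a complete fairness review: it measures how well each group is represented in a data set and how often the model flags each group, then reports the gap between the highest and lowest flag rates (often called the demographic parity difference). The group labels, predictions, and function names are made up for illustration.

```python
from collections import defaultdict


def group_rates(groups, predictions):
    """Per group: share of the data set and rate of positive predictions."""
    counts = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        counts[group] += 1
        positives[group] += prediction
    total = len(groups)
    return {
        group: {
            "share": counts[group] / total,
            "positive_rate": positives[group] / counts[group],
        }
        for group in counts
    }


def parity_gap(rates):
    """Difference between the highest and lowest positive-prediction rates."""
    values = [r["positive_rate"] for r in rates.values()]
    return max(values) - min(values)


# Hypothetical audit data: group B is under-represented and never flagged.
groups = ["A", "A", "A", "A", "A", "A", "B", "B"]
predictions = [1, 0, 1, 1, 0, 1, 0, 0]

rates = group_rates(groups, predictions)
gap = parity_gap(rates)

for group, r in sorted(rates.items()):
    print(f"group {group}: share={r['share']:.2f}, positive_rate={r['positive_rate']:.2f}")
print(f"parity gap: {gap:.2f}")
```

A large gap or a very small share for one group does not prove the model is unfair, but it is a useful signal that the training data deserves a closer look before deployment.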

Looking Forward

I am both optimistic and cautious about the future of AI and ML in business. These technologies hold immense potential for positive change, yet must be deployed thoughtfully to avoid unintended consequences. Drawing from philosophers like Alan Watts, I acknowledge that it’s about finding balance – leveraging AI and ML to enhance our capabilities, not replace them.

In conclusion, the journey into integrating AI and ML into business operations is not without its hurdles. However, with a clear understanding of the technologies, coupled with strategic planning and ethical considerations, businesses can unlock unparalleled opportunities for growth and innovation. As we move forward, I remain committed to exploring the frontiers of AI and ML, ensuring that my firm, DBGM Consulting, Inc., stays at the cutting edge of this digital revolution.

References and Further Reading

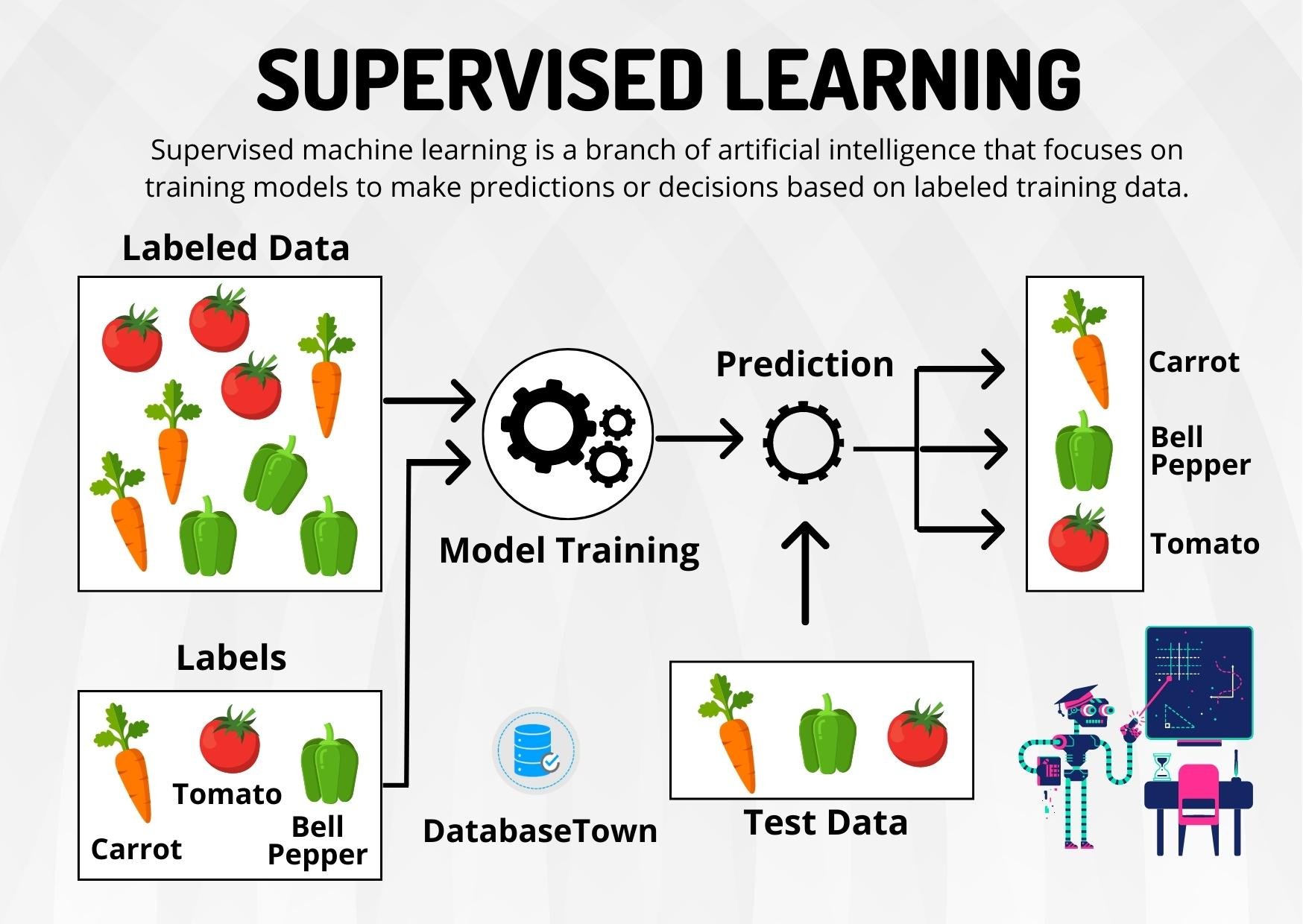

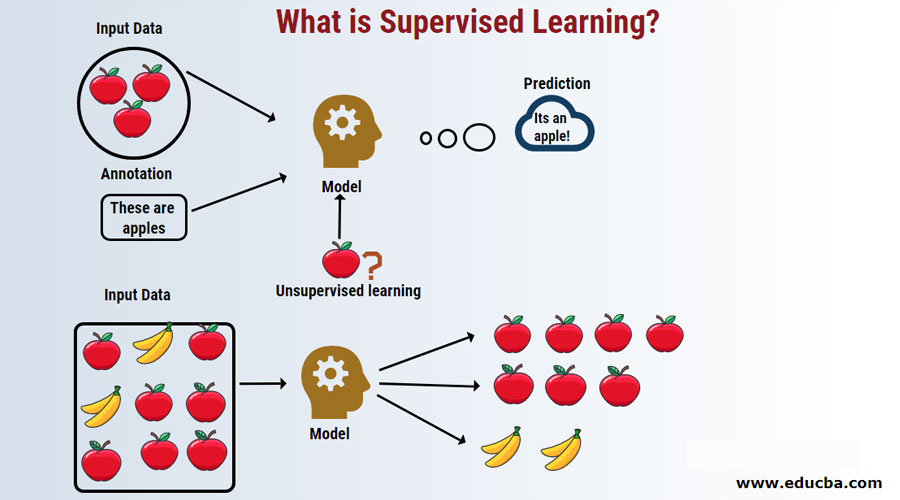

For those interested in delving deeper into the world of AI and ML in business, I recommend the recent articles on my blog, including “Exploring Supervised Learning’s Role in Future AI Technologies” and “Exploring Hybrid Powertrain Engineering: Bridging Sustainability and Performance”, which provide valuable insights into the practical applications and ethical considerations of these technologies.
