Deep Learning’s Role in Advancing Machine Learning: A Realistic Appraisal

As someone deeply entrenched in Artificial Intelligence (AI) and Machine Learning (ML), I find it impossible to ignore the monumental strides made possible by Deep Learning (DL). My expertise in AI, gained both academically and through hands-on experience at DBGM Consulting, Inc., combined with a passion for evidence-based science, positions me uniquely to dissect the realistic advances and future pathways of DL within AI and ML.

Understanding Deep Learning’s Current Landscape

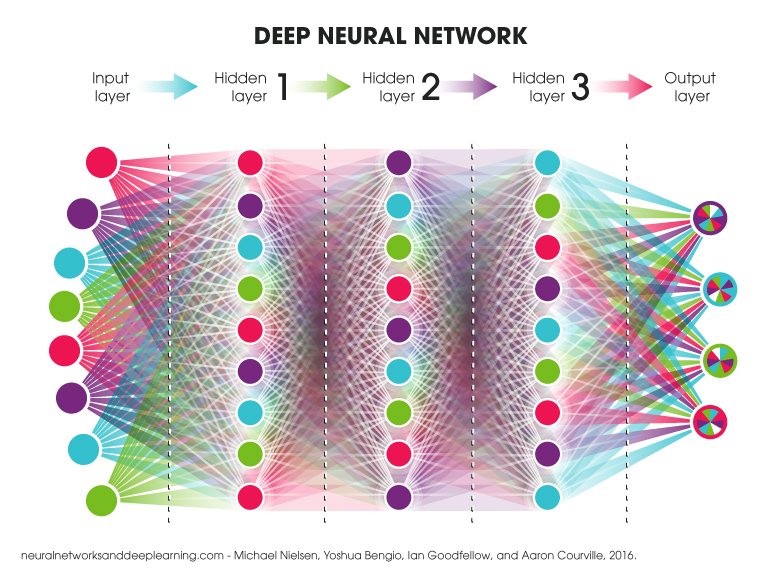

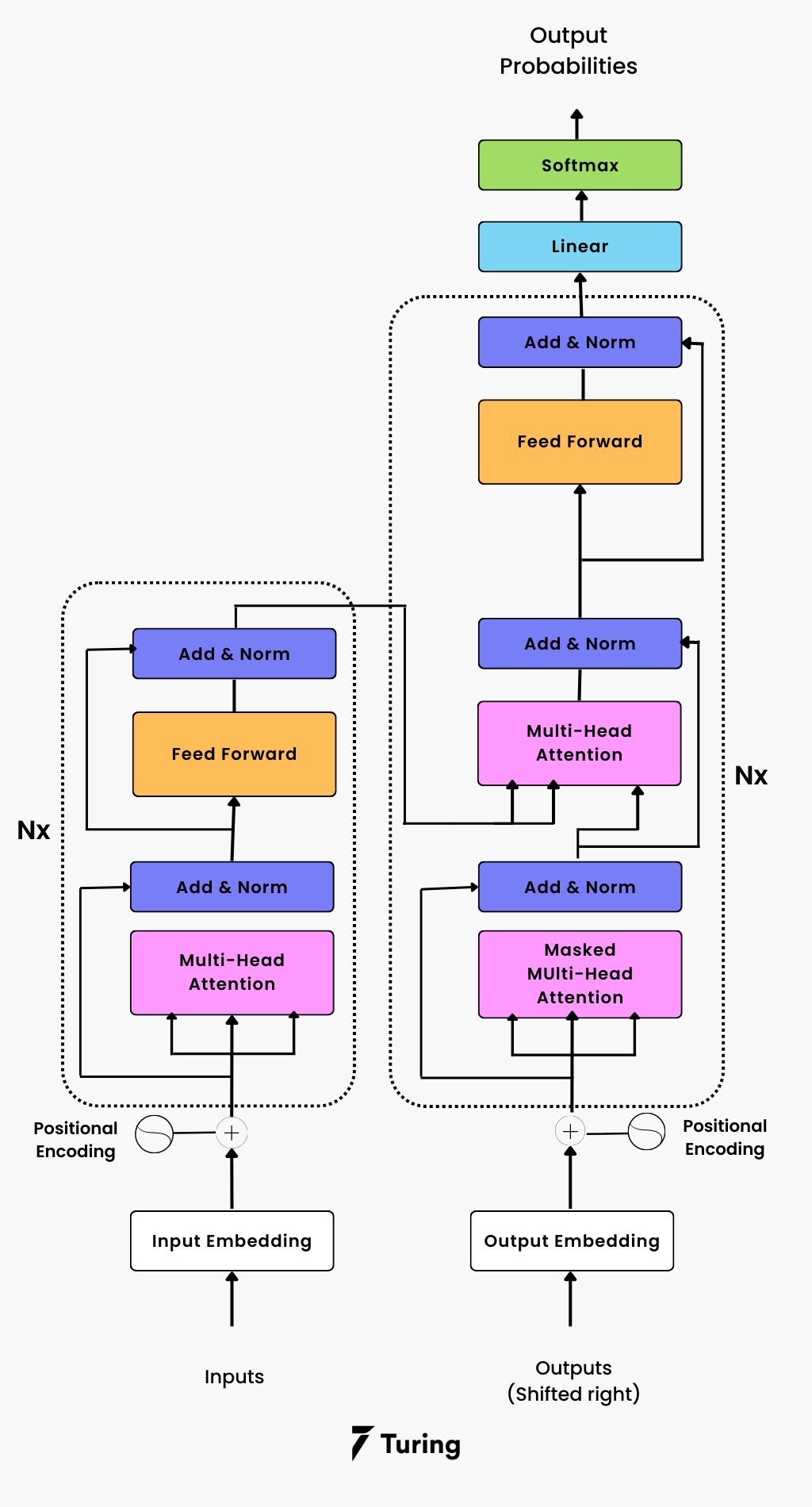

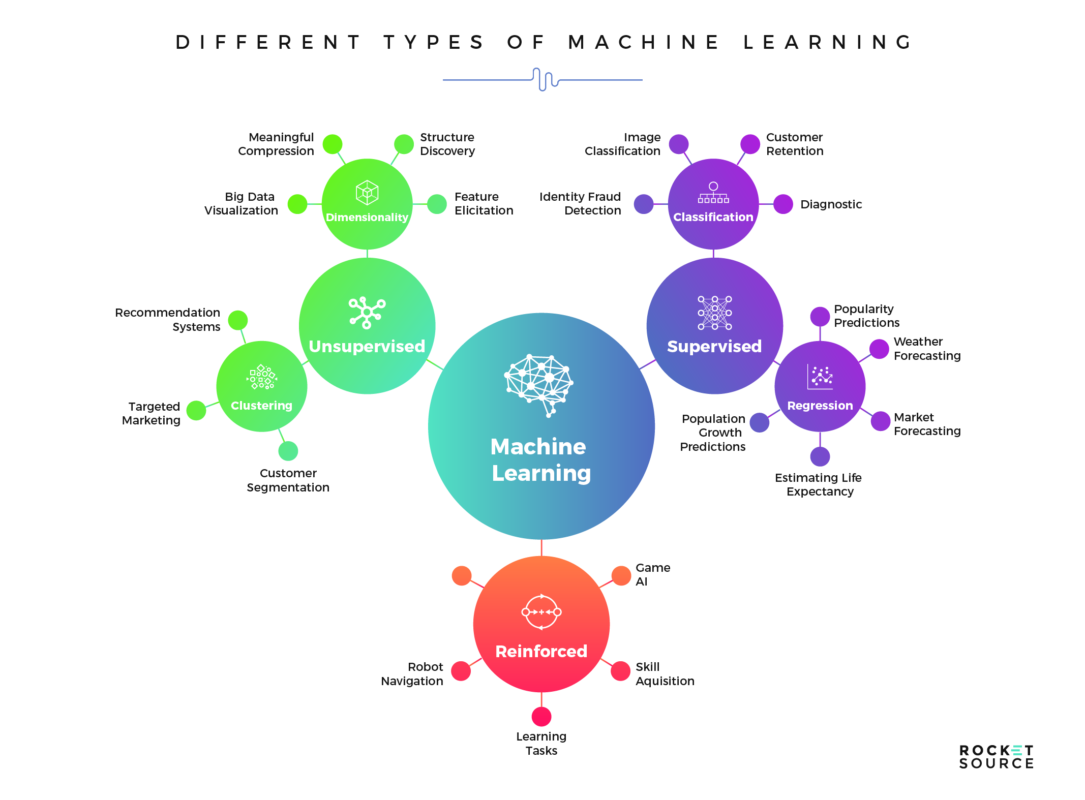

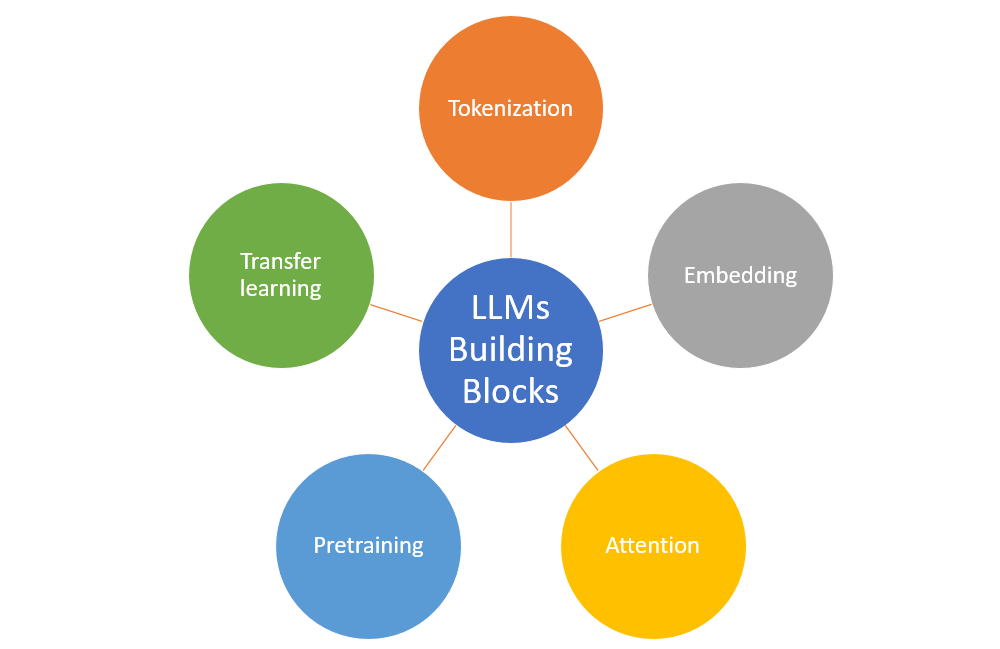

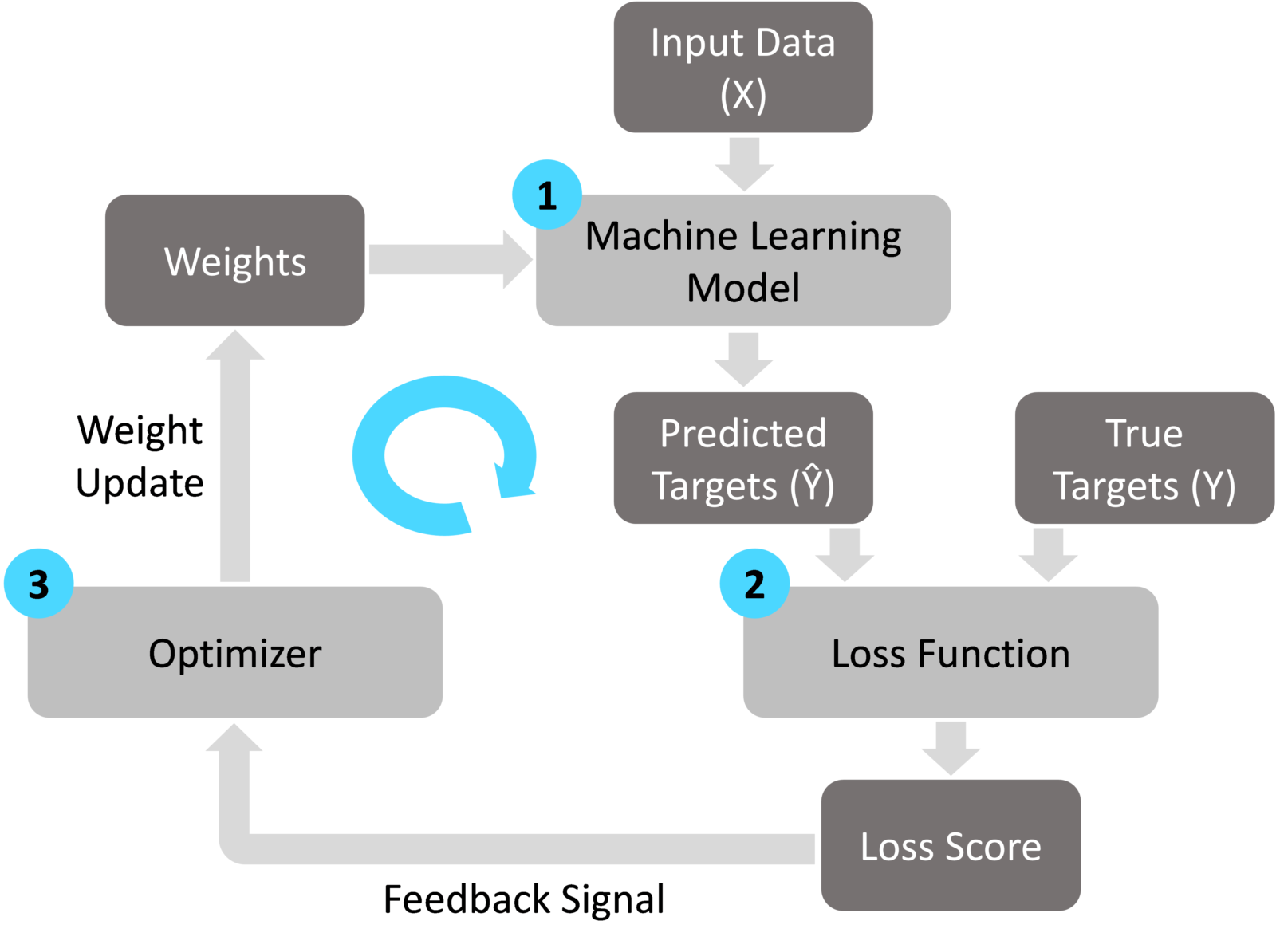

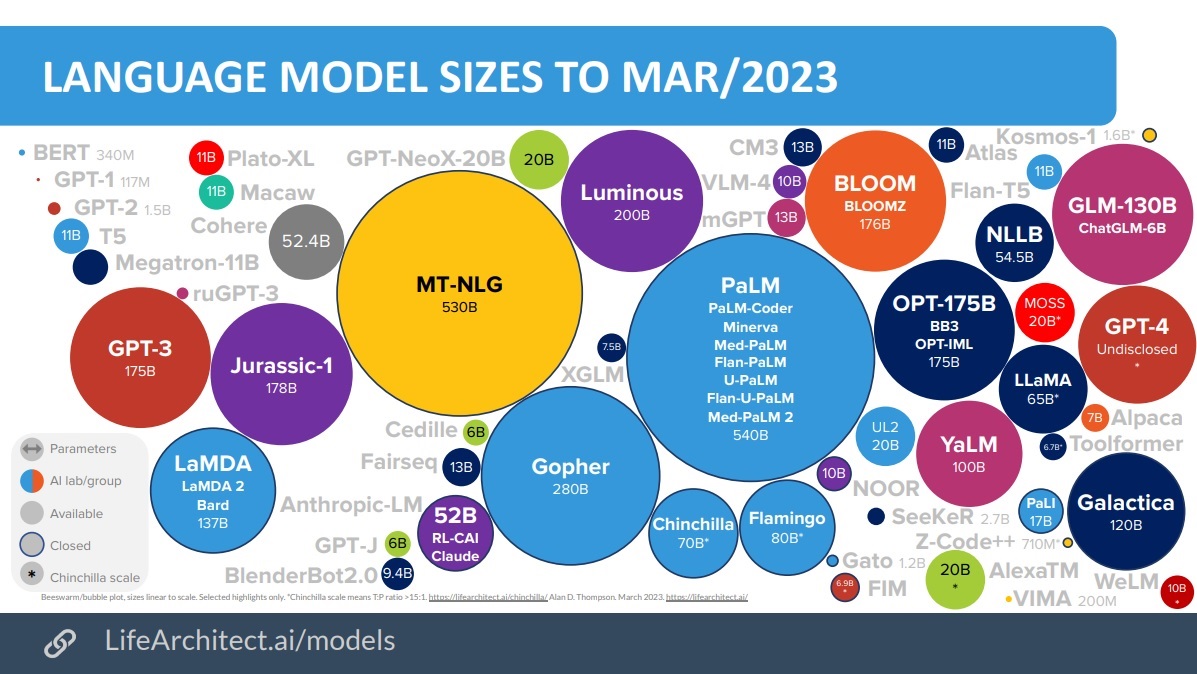

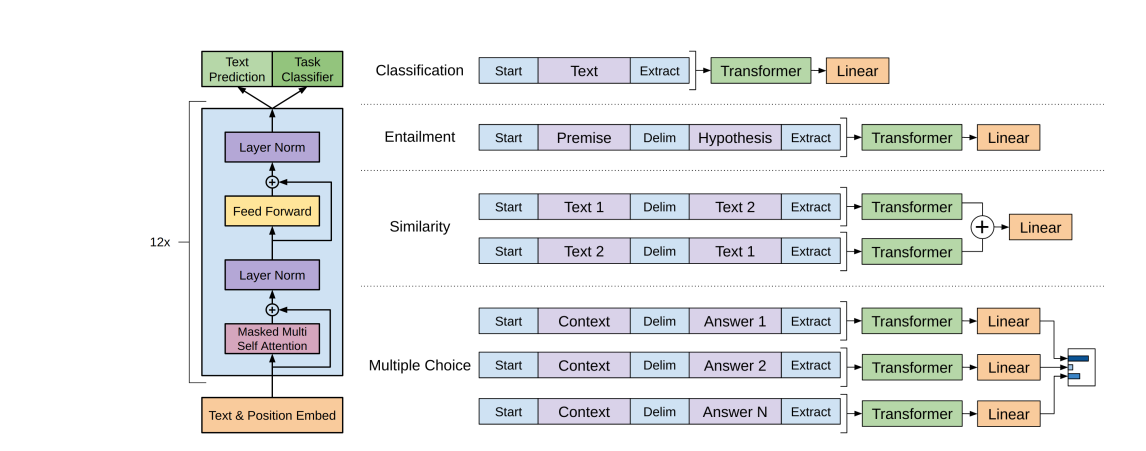

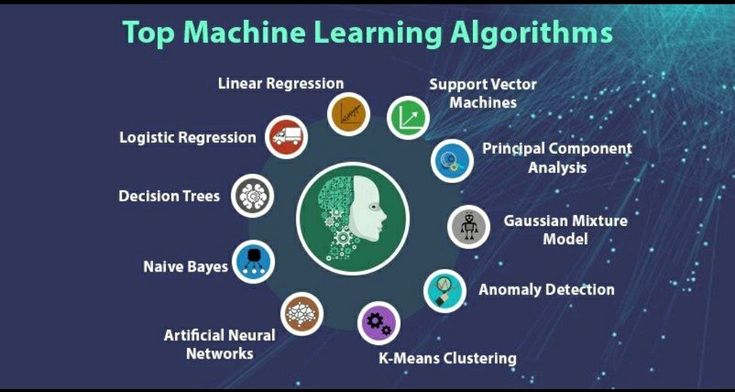

Deep Learning is the subset of ML built on multi-layer artificial neural networks that learn their own representations of data. It has pushed pattern recognition well beyond what hand-engineered features allowed. These models are loosely inspired by the brain’s neurons rather than faithful copies of them: each layer applies a nonlinear transformation to the output of the layer before it, so the network can capture relationships that a single linear model cannot. The evolution of Large Language Models (LLMs) I discussed earlier showcases the pinnacle of DL’s capabilities in understanding, generating, and interpreting human language at an unprecedented scale.
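To make the point about nonlinearity concrete, here is a minimal sketch of a two-layer network with ReLU activations that computes XOR, a function no single linear layer can represent. The weights are hand-picked for illustration only (a hypothetical example, not learned by training and not taken from any real model); in practice, training would discover weights like these from data.

```python
# Hypothetical hand-picked weights for a tiny 2-input, 2-hidden-unit, 1-output network.
# Illustrates why a hidden layer with a nonlinearity matters: XOR is not linearly separable.

def relu(z: float) -> float:
    """Rectified linear unit: the nonlinearity between layers."""
    return max(0.0, z)

def xor_net(x1: float, x2: float) -> float:
    # Hidden layer: both units see the sum x1 + x2, with different biases.
    h1 = relu(x1 + x2)        # counts how many inputs are on
    h2 = relu(x1 + x2 - 1.0)  # is non-zero only when both inputs are on
    # Output layer: a plain linear combination of the hidden units.
    return h1 - 2.0 * h2

if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, xor_net(a, b))
```

Remove the `relu` calls and the whole network collapses into a single linear function of `x1 + x2`, which cannot output 0, 1, 1, 0 for the four input pairs. Depth only adds expressive power because of the nonlinearity between layers.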

Applications and Challenges

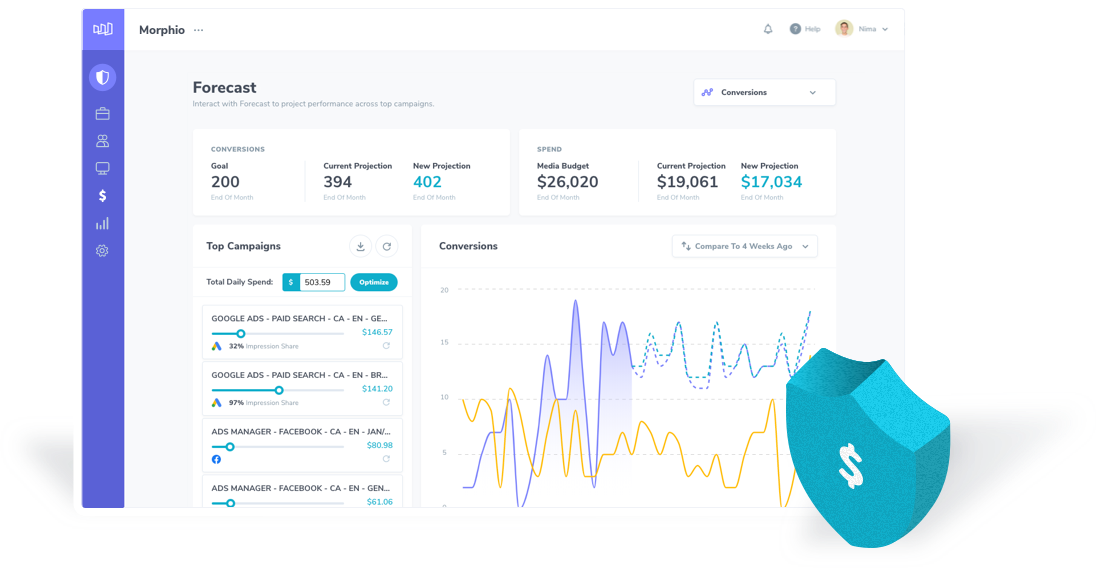

DL’s prowess extends beyond textual applications; it is transforming fields such as image recognition, speech-to-text conversion, and predictive analytics. During my time at Microsoft, I observed first-hand the practical applications of DL in cloud solutions and automation, witnessing its transformative potential across industries. However, DL is not without challenges: it demands vast datasets and immense computing power, raising concerns about scalability and about the environmental cost of the energy it consumes.
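To put “immense computing power” into rough numbers, the sketch below uses a widely cited rule of thumb for dense transformer models: training costs roughly 6 × N × D floating-point operations, where N is the parameter count and D the number of training tokens. This is an approximation, not an exact count, and the model size, token count, and accelerator throughput used here are hypothetical values chosen only for illustration.

```python
# Back-of-the-envelope training-compute estimate (approximation, not an exact count).
# Rule of thumb for dense transformers: ~2 FLOPs per parameter per token for the
# forward pass plus ~4 for the backward pass, i.e. about 6 * N * D in total.

SECONDS_PER_DAY = 86_400

def training_flops(params: float, tokens: float) -> float:
    """Approximate total FLOPs to train a dense model with `params` parameters on `tokens` tokens."""
    return 6.0 * params * tokens

def training_days(total_flops: float, sustained_flops_per_sec: float) -> float:
    """Wall-clock days needed at a given sustained throughput."""
    return total_flops / sustained_flops_per_sec / SECONDS_PER_DAY

if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    n_params = 1e9      # 1 billion parameters
    n_tokens = 2e10     # 20 billion training tokens
    device_rate = 1e14  # 100 TFLOP/s sustained on one hypothetical accelerator
    flops = training_flops(n_params, n_tokens)
    days = training_days(flops, device_rate)
    print(f"{flops:.2e} FLOPs, about {days:.1f} days on one device")
```

Even this modest hypothetical model needs on the order of 10^20 operations, roughly two weeks on a single device, and the cost grows with the product of model size and data size. That multiplicative growth is what drives the scalability and energy concerns.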

Realistic Expectations and Ethical Considerations

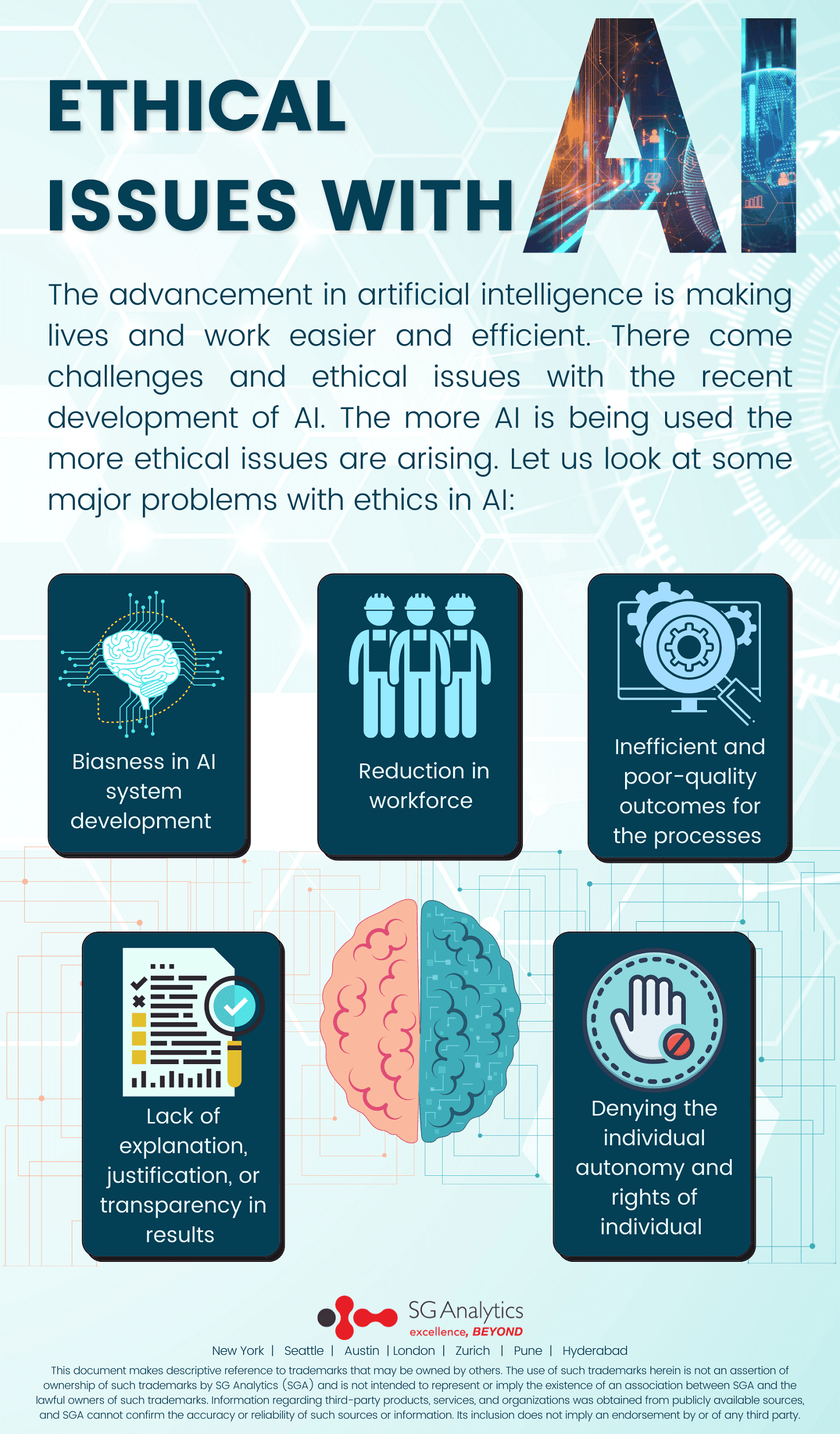

The discourse around AI often veers into the utopian or dystopian, but a balanced perspective rooted in realism is crucial. DL models are tools—extraordinarily complex, yet ultimately limited by the data they are trained on and the objectives they are designed to achieve. The ethical implications, particularly in privacy, bias, and accountability, necessitate a cautious approach. Balancing innovation with ethical considerations has been a recurring theme in my exploration of AI and ML, underscoring the need for transparent and responsible AI development.

Integrating Deep Learning With Human Creativity

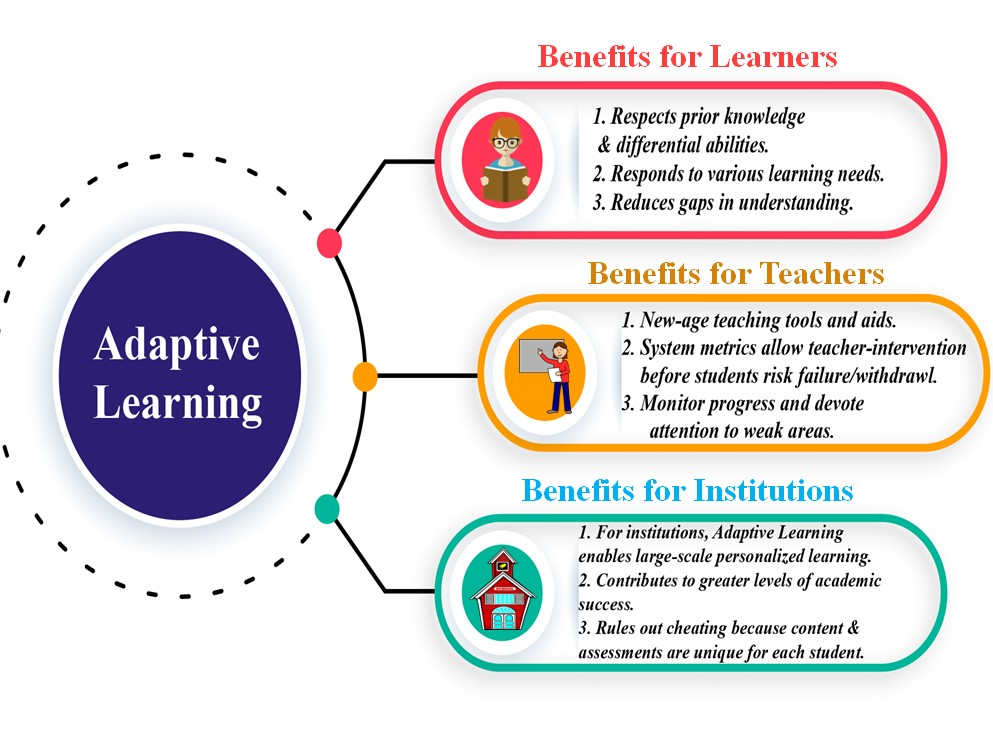

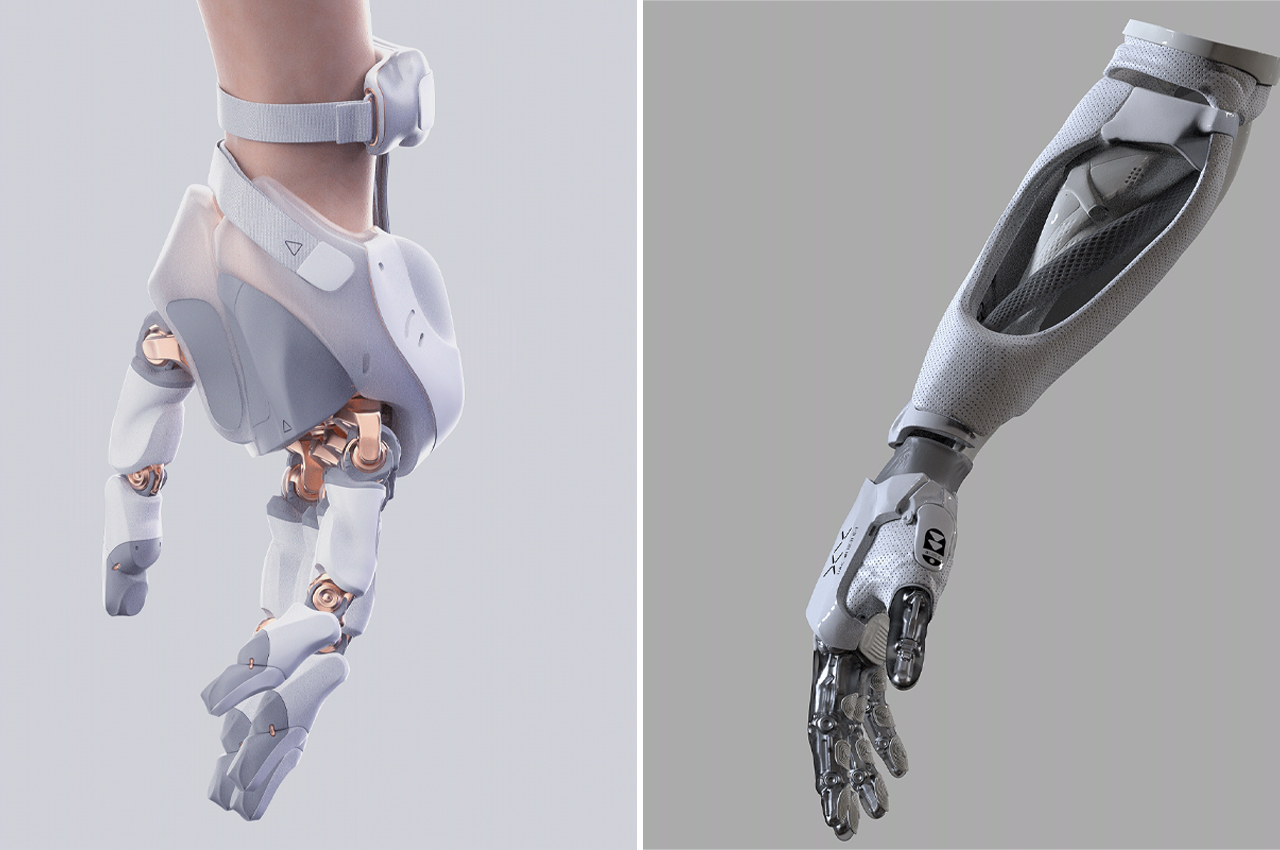

One of the most exciting aspects of DL is its potential to augment human creativity and problem-solving. From enhancing artistic endeavors to solving complex scientific problems, DL can be a partner in the creative process. Nevertheless, it’s important to recognize that DL models lack the intuitive understanding of context and ethics that humans inherently possess. Thus, while DL can replicate or even surpass human performance in specific tasks, it cannot replace the nuanced understanding and ethical judgment that humans bring to the table.

Path Forward

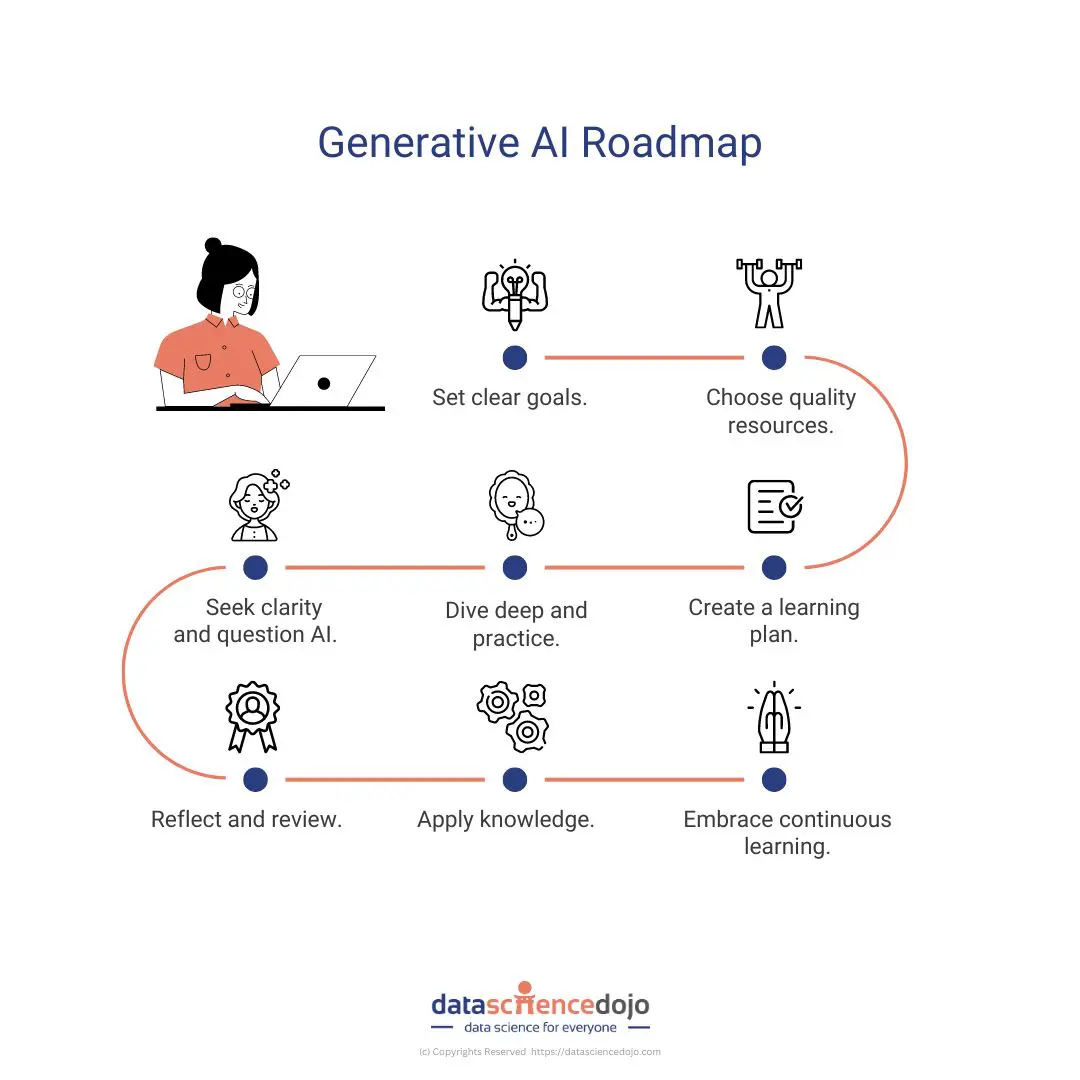

Looking ahead, the path forward for DL in AI and ML is one of cautious optimism. As we refine DL models and techniques, their integration into daily life will become increasingly seamless and indistinguishable from traditional computing methods. However, this progress must be coupled with vigilant oversight and an unwavering commitment to ethical principles. My journey from my studies at Harvard to my professional endeavors has solidified my belief in the transformative potential of technology when guided by a moral compass.

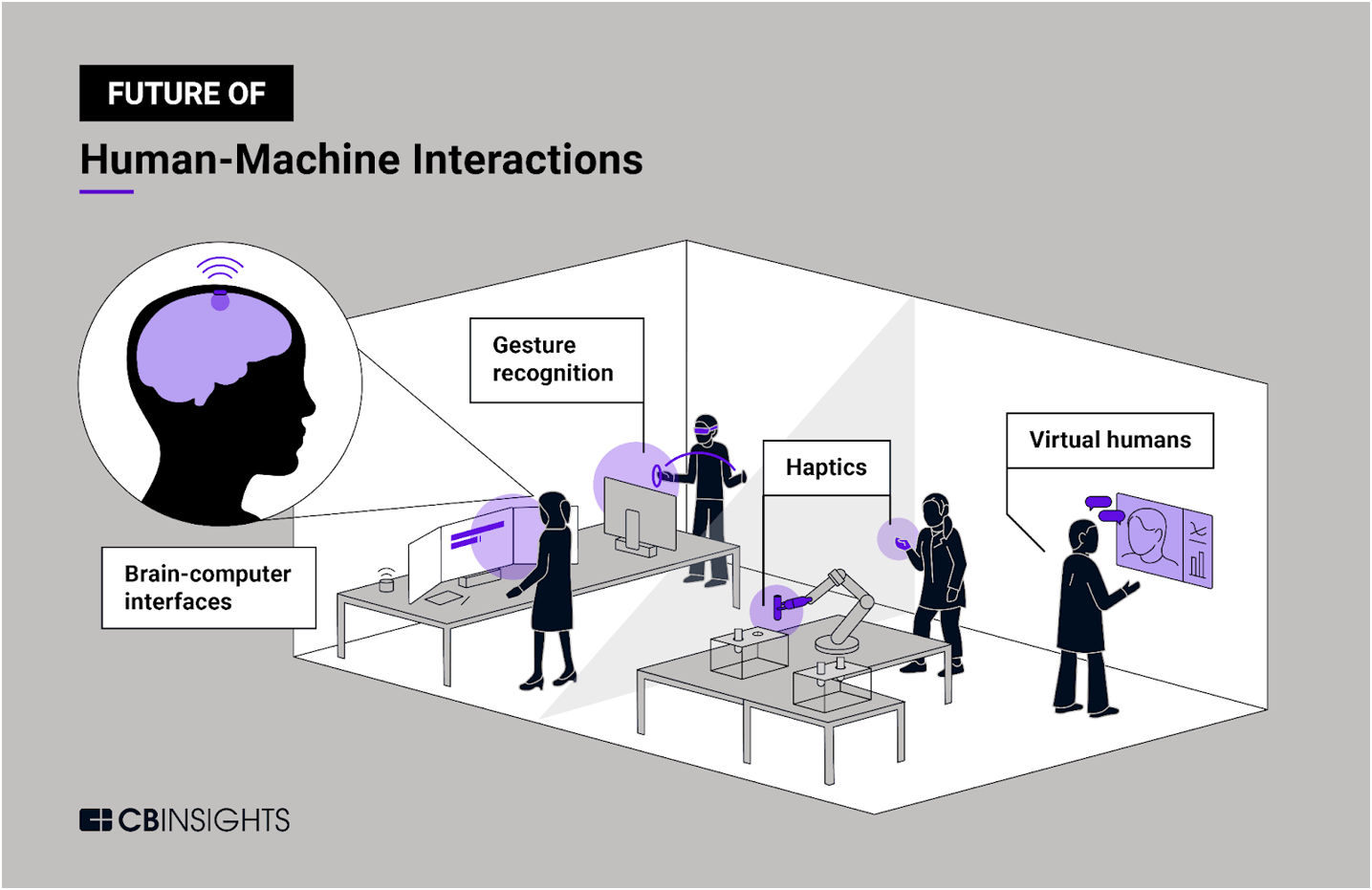

Convergence of Deep Learning and Emerging Technologies

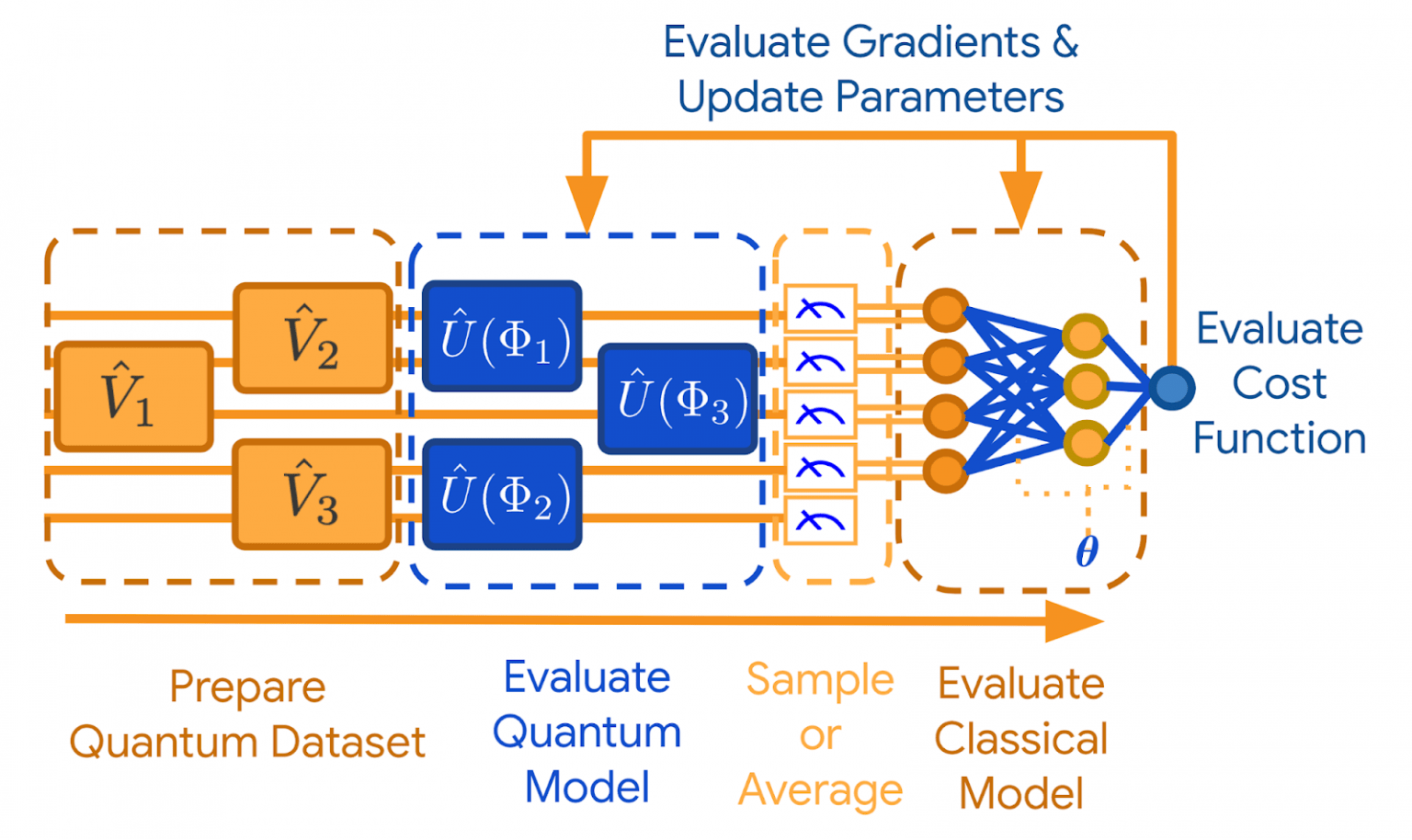

The convergence of DL with quantum computing, edge computing, and the Internet of Things (IoT) opens promising avenues for easing current limits on processing power and efficiency. Edge computing, for example, runs models on or near the devices that generate the data, reducing round-trips to the cloud, while quantum computing remains largely experimental for ML workloads. Pursued with scientific rigor and an eye to real-world applicability, this synergy could help overcome the existing barriers to DL’s scalability and environmental impact.
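One common way to fit a trained model onto constrained edge or IoT hardware is quantization, which stores weights as small integers instead of 32-bit floats. Quantization is my illustrative choice here, not a technique named above. The sketch below shows symmetric per-tensor int8 quantization in plain Python, under simplifying assumptions: real toolchains add calibration data, per-channel scales, and integer arithmetic kernels, and the example weights are made up.

```python
# Symmetric per-tensor int8 quantization: a simplified sketch, not a production toolchain.

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the int8 range we allow.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]

if __name__ == "__main__":
    w = [0.82, -1.27, 0.05, 0.33, -0.6]  # made-up example weights
    q, s = quantize_int8(w)
    w_hat = dequantize(q, s)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print(q, f"scale={s:.4f}", f"max error={max_err:.4f}")
    # Each weight now needs 1 byte instead of 4 (float32): about a 4x memory saving.
```

Rounding bounds the per-weight error by half the scale, which is why an accuracy loss this small is often acceptable in exchange for a model a quarter of the size.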

In conclusion, Deep Learning continues to be at the forefront of AI and ML advancements. As we navigate its potential and pitfalls, it’s imperative to balance enthusiasm for its capabilities with caution about its ethical and practical challenges. The journey of AI, much like my personal and professional experiences, is one of continuous learning and adaptation, always with an eye towards a better, more informed future.
