Unraveling the Intricacies of Machine Learning Problems with a Deep Dive into Large Language Models

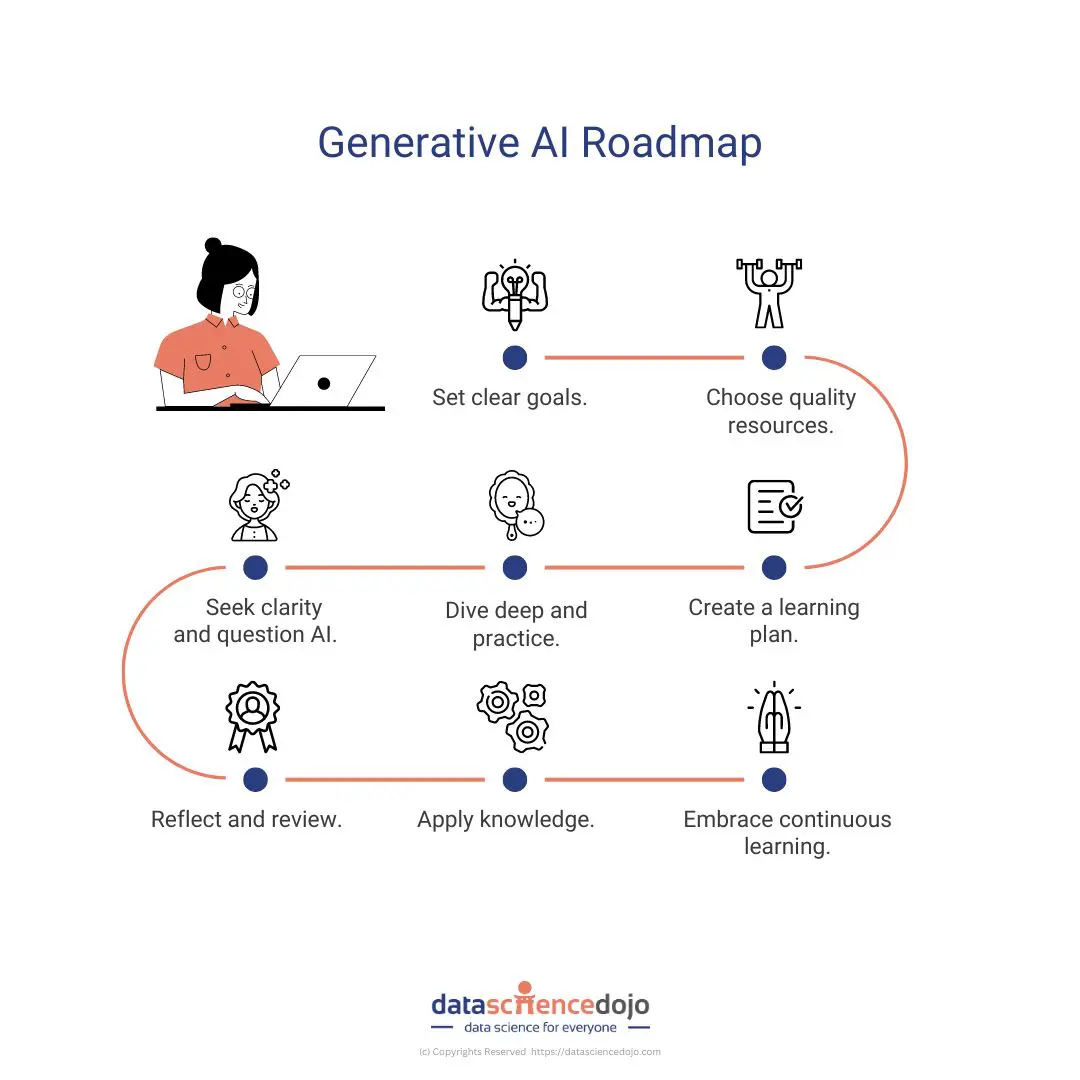

In our continuous exploration of Machine Learning (ML) and its vast landscape, we’ve previously touched upon various dimensions including the mathematical foundations and significant contributions such as large language models (LLMs). Building upon those discussions, it’s essential to delve deeper into the problems facing machine learning today, particularly when examining the complexities and future directions of LLMs. This article aims to explore the nuanced challenges within ML and how LLMs, with their transformative potential, are both a part of the solution and a source of new hurdles to overcome.

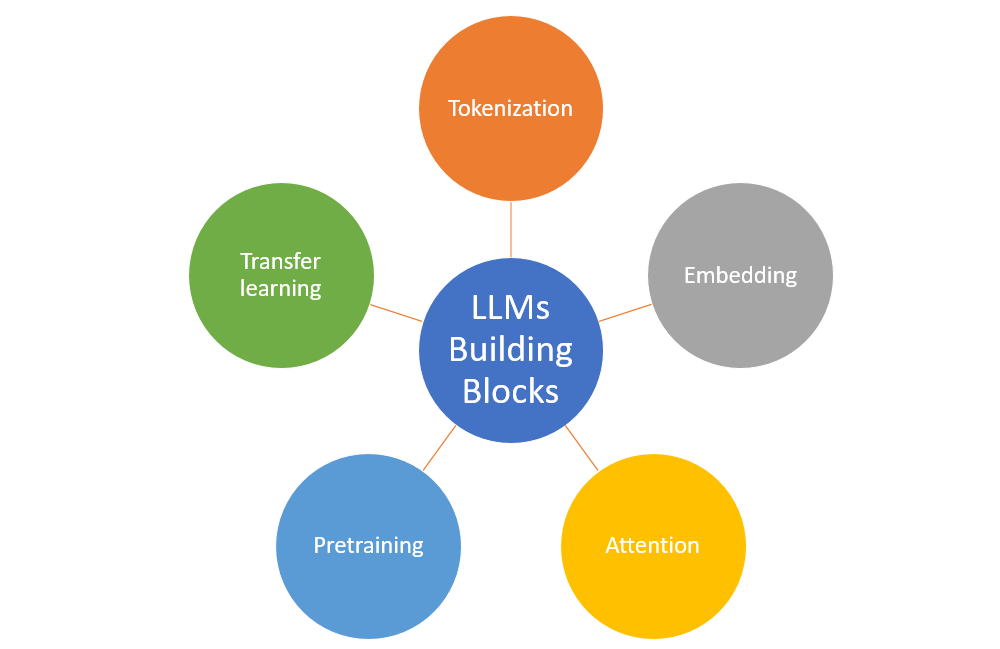

Understanding Large Language Models (LLMs): An Overview

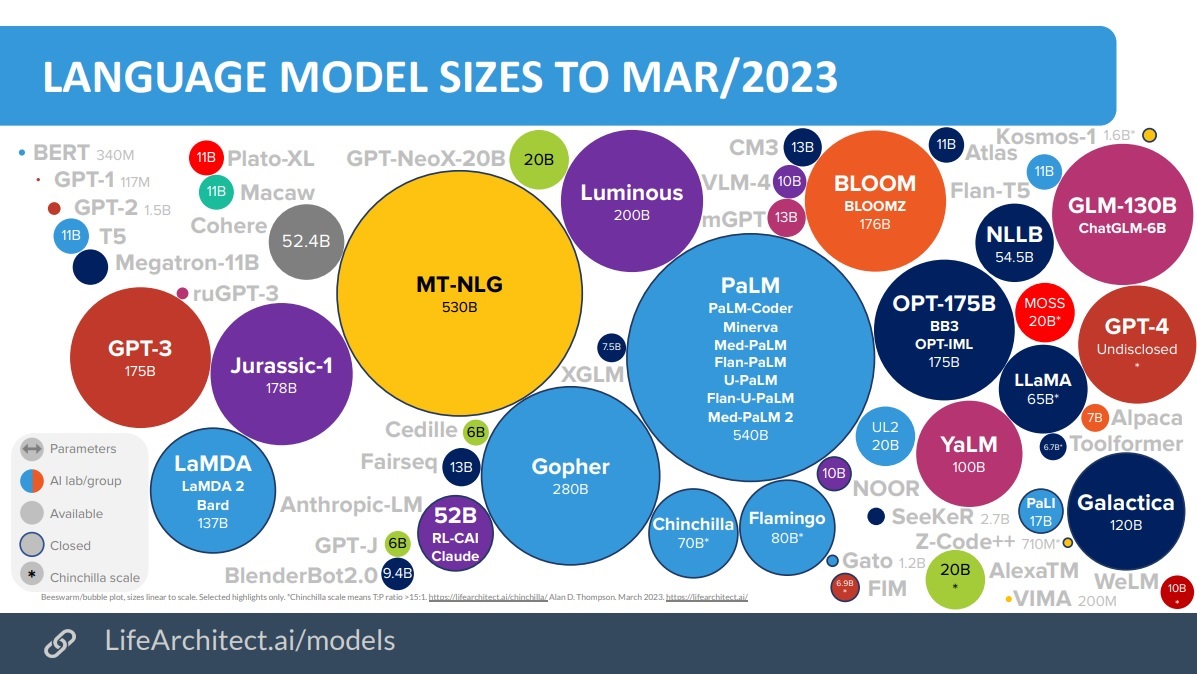

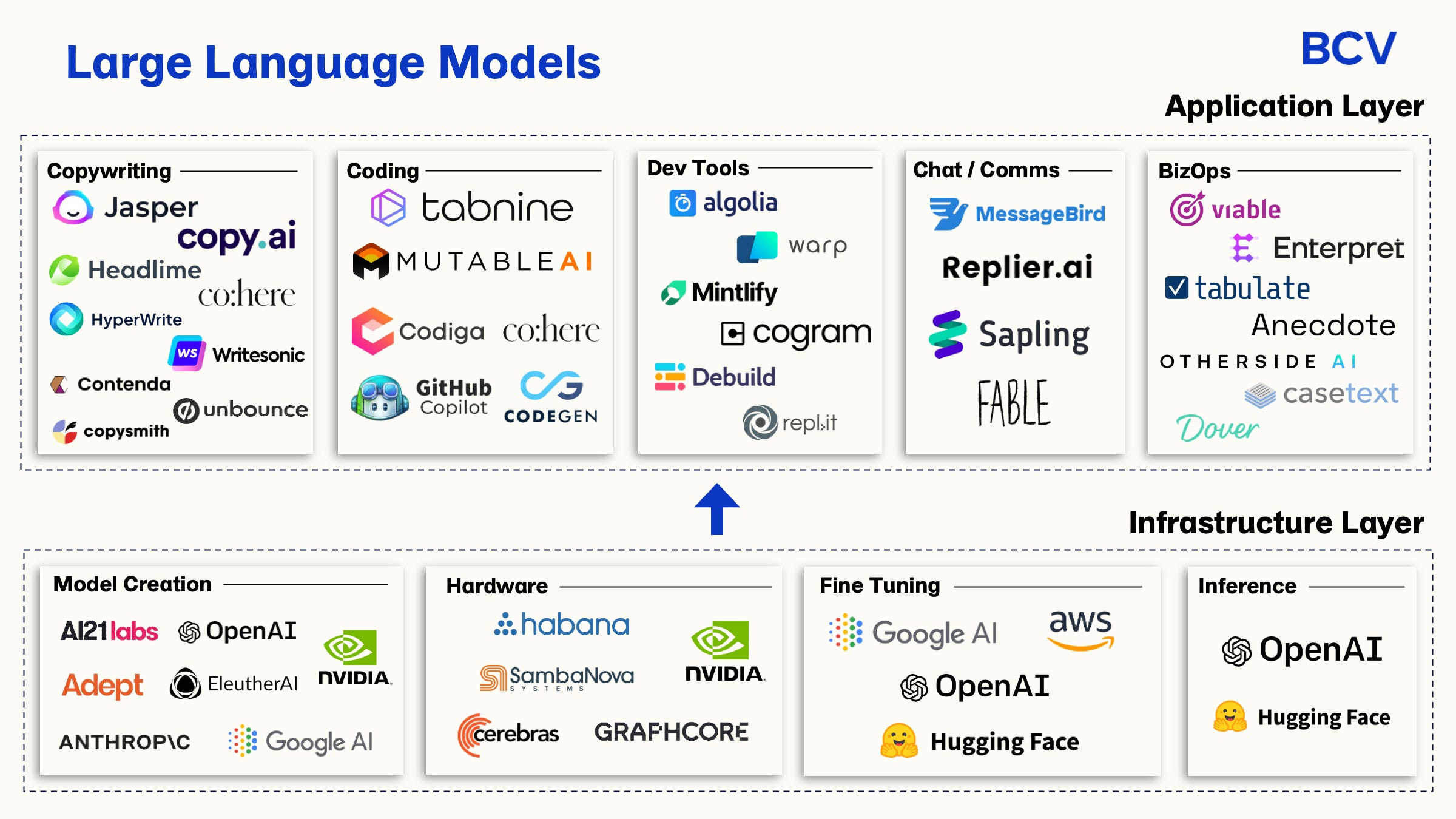

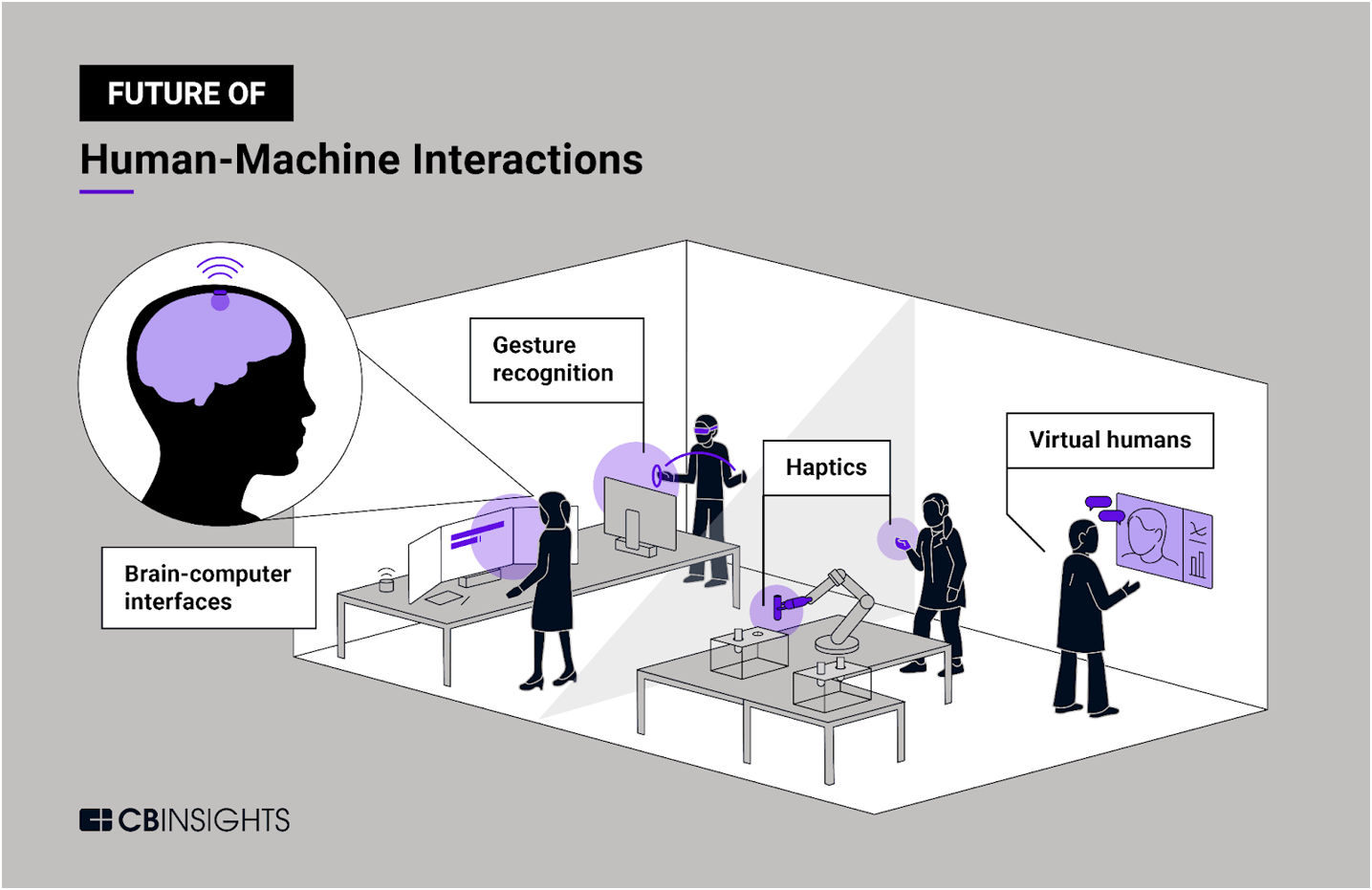

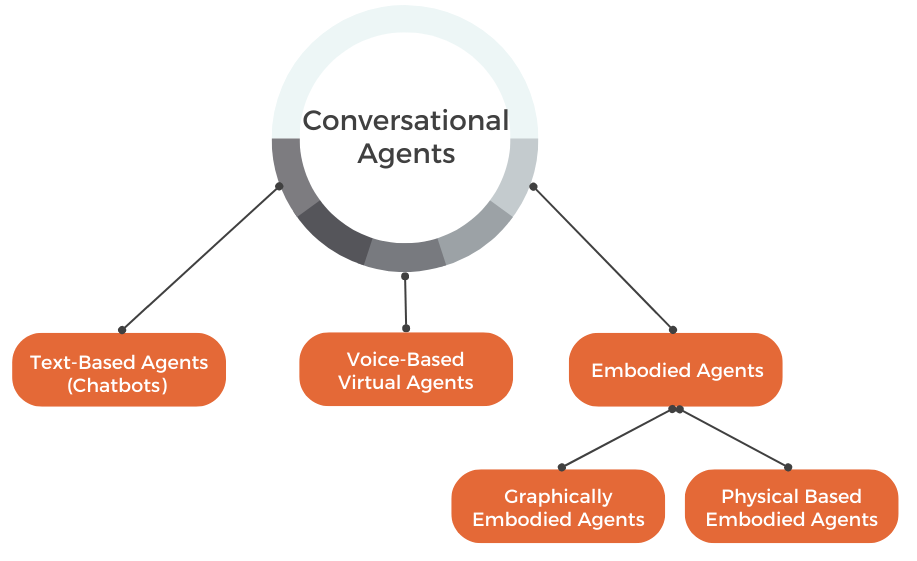

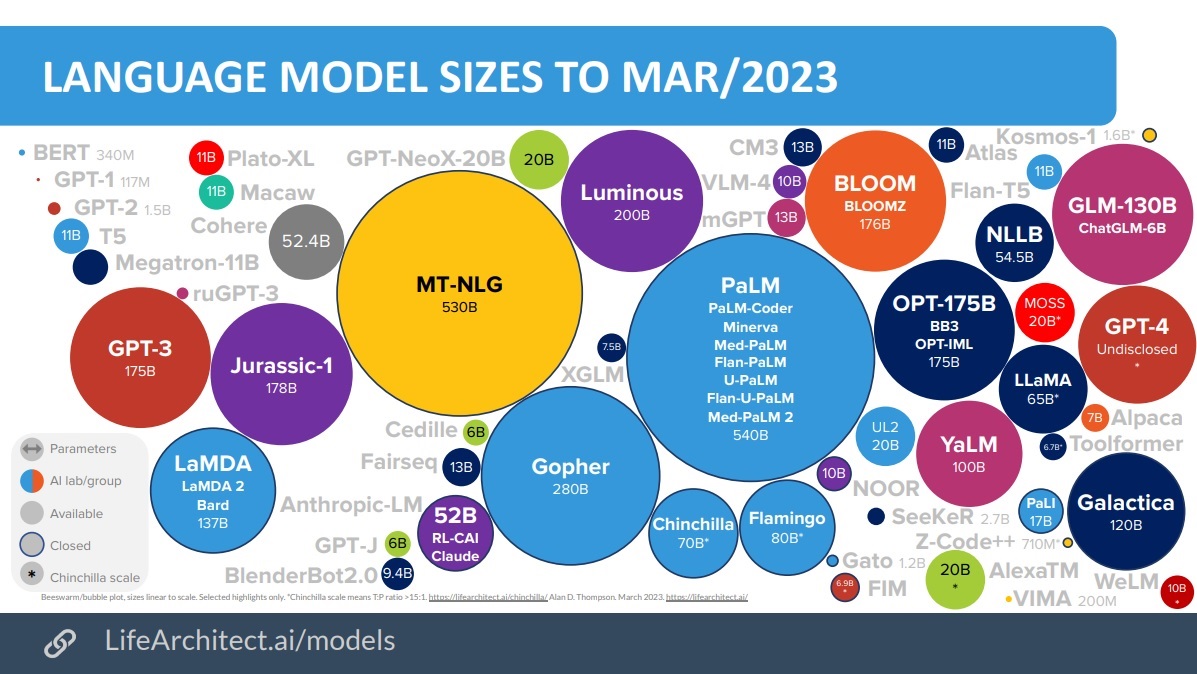

Large Language Models have undeniably shifted the paradigm of what artificial intelligence (AI) can achieve. They process and generate human-like text, allowing for more intuitive human-computer interactions, and have shown promising capabilities across various applications from content creation to complex problem solving. However, their advancement brings forth significant technical and ethical challenges that need addressing.

One central problem with LLMs is their energy consumption and environmental impact. Training models of this magnitude requires substantial computational resources, which in turn demand a considerable amount of energy, a cost that is often criticized for its environmental implications.
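To make the energy argument concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the common approximation that energy is hardware power multiplied by run time and by the data center's power usage effectiveness (PUE). The function names and every number in the example are hypothetical placeholders, not measurements of any real model.

```python
def training_energy_kwh(num_accelerators, watts_each, hours, pue=1.0):
    """Estimate the energy of a training run in kilowatt-hours.

    pue (power usage effectiveness) scales the hardware draw to include
    data center overhead such as cooling; 1.0 means no overhead.
    """
    it_power_kw = num_accelerators * watts_each / 1000
    return it_power_kw * hours * pue


def emissions_kg(energy_kwh, kg_co2_per_kwh):
    """Convert energy into CO2 emissions using a grid carbon intensity."""
    return energy_kwh * kg_co2_per_kwh


# Hypothetical run: all values below are illustrative assumptions.
energy = training_energy_kwh(num_accelerators=64, watts_each=300, hours=240, pue=1.2)
co2 = emissions_kg(energy, kg_co2_per_kwh=0.4)
print(f"Estimated energy: {energy:.1f} kWh, estimated emissions: {co2:.1f} kg CO2")
```

Even a crude estimate like this shows which levers matter: fewer accelerator-hours, more efficient hardware, lower data center overhead, and a cleaner electricity grid all reduce the footprint.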

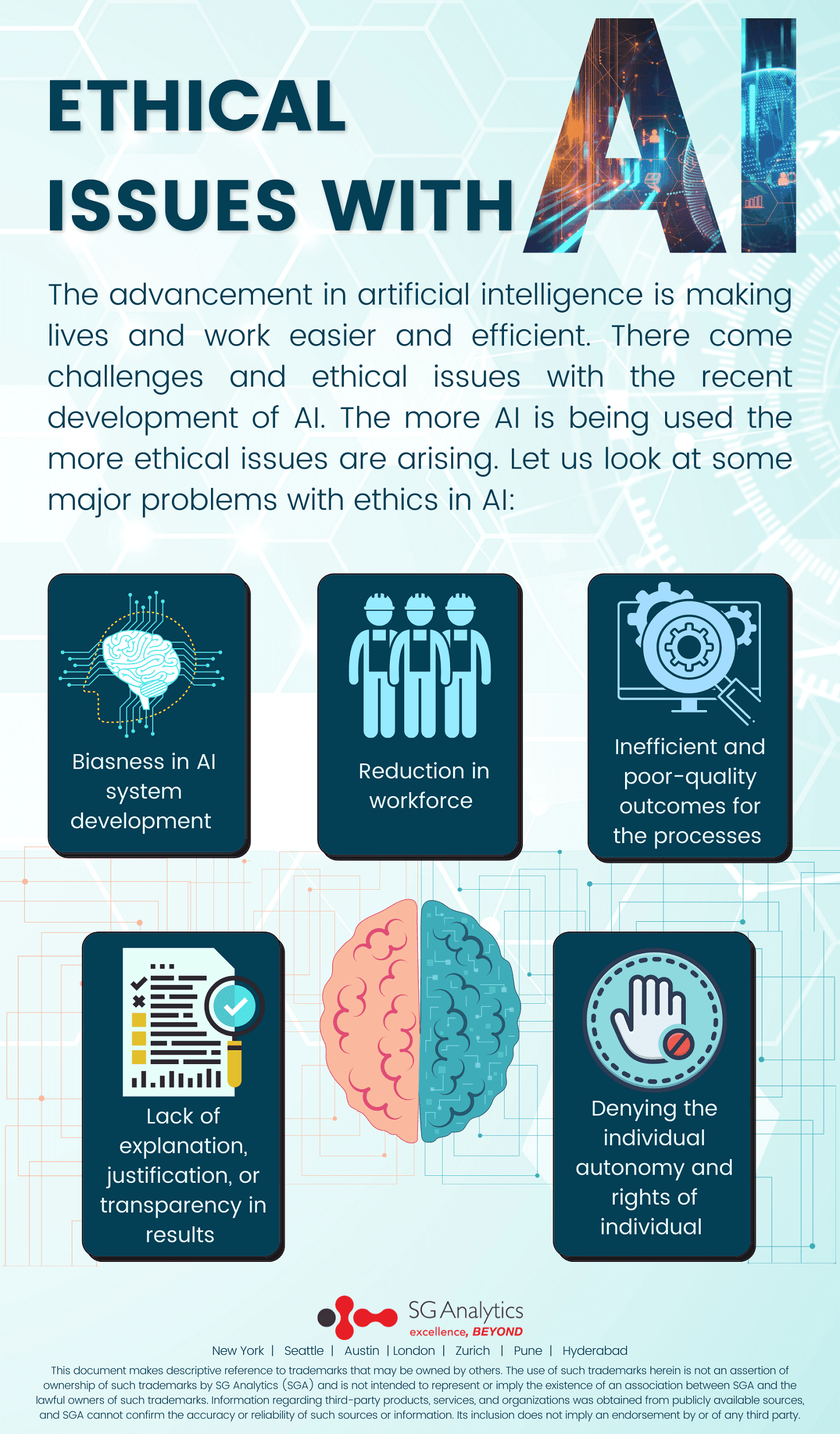

Tackling Bias and Fairness

LLMs are also not immune to the biases present in their training data. Ensuring the fairness and neutrality of these models is pivotal, as their outputs can influence public opinion and decision-making processes. Diversifying data sources and designing algorithms carefully are steps toward mitigating these biases, but bias remains a pressing issue in the development and deployment of LLMs.
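One way to make bias measurable is to compare how often a model produces a positive outcome for different groups. The sketch below computes a simple demographic parity gap in plain Python. It is only one of several fairness metrics, and the predictions, group labels, and function names are hypothetical toy values chosen for illustration.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Return the share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group positive rates."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: binary model decisions for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(positive_rates(preds, groups))          # {'a': 0.75, 'b': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A gap of zero means both groups receive positive outcomes at the same rate. A large gap is a signal to investigate, not proof of unfairness on its own.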

Technical Challenges in LLM Development

From a technical standpoint, the complexity of LLMs often leads to a lack of transparency and explainability. Understanding why a model generates a particular output is crucial for trust and efficacy, especially in critical applications. Furthermore, model robustness and security against adversarial attacks remain areas of ongoing research, with the goal of ensuring that models behave predictably in unforeseen situations.

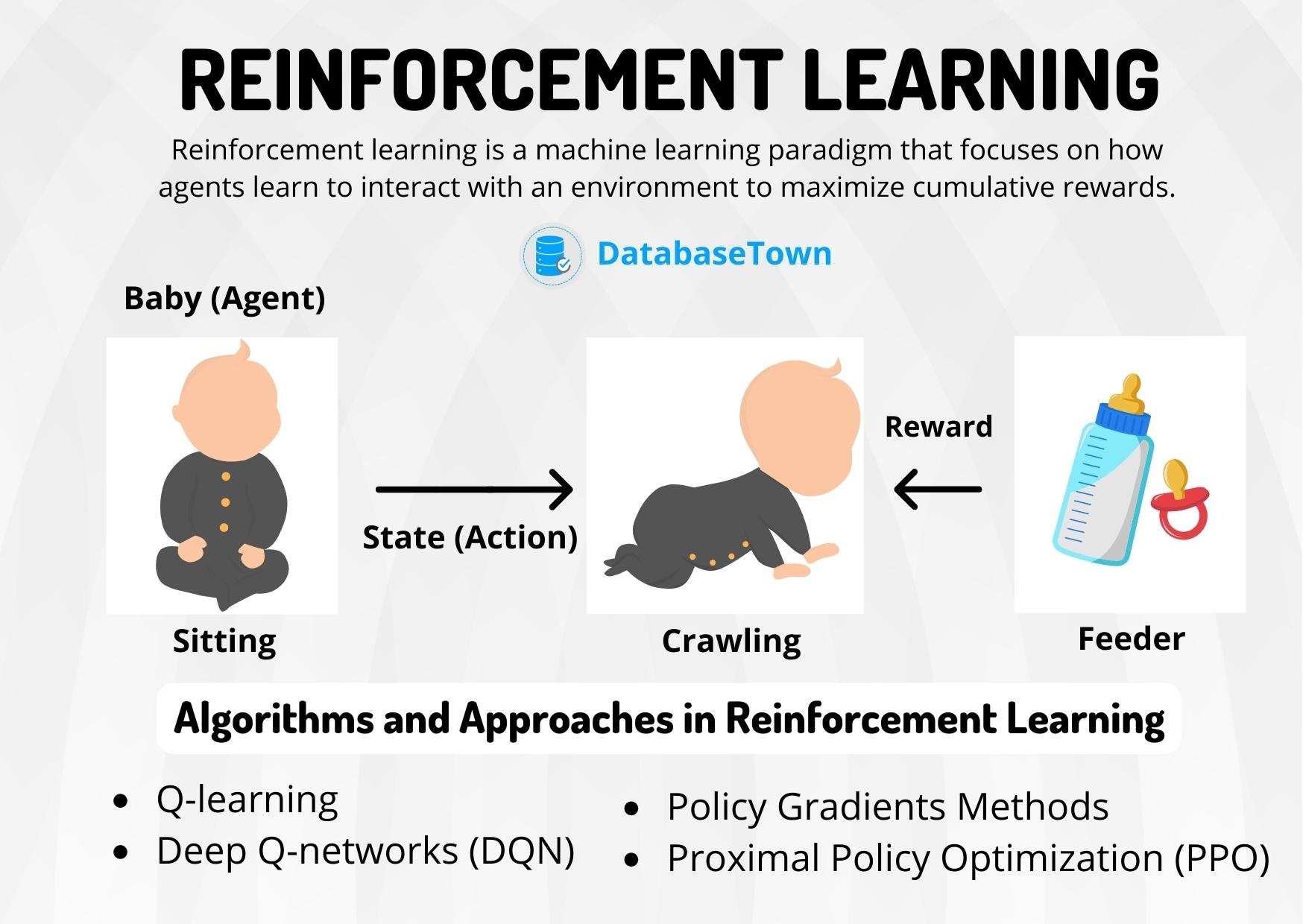

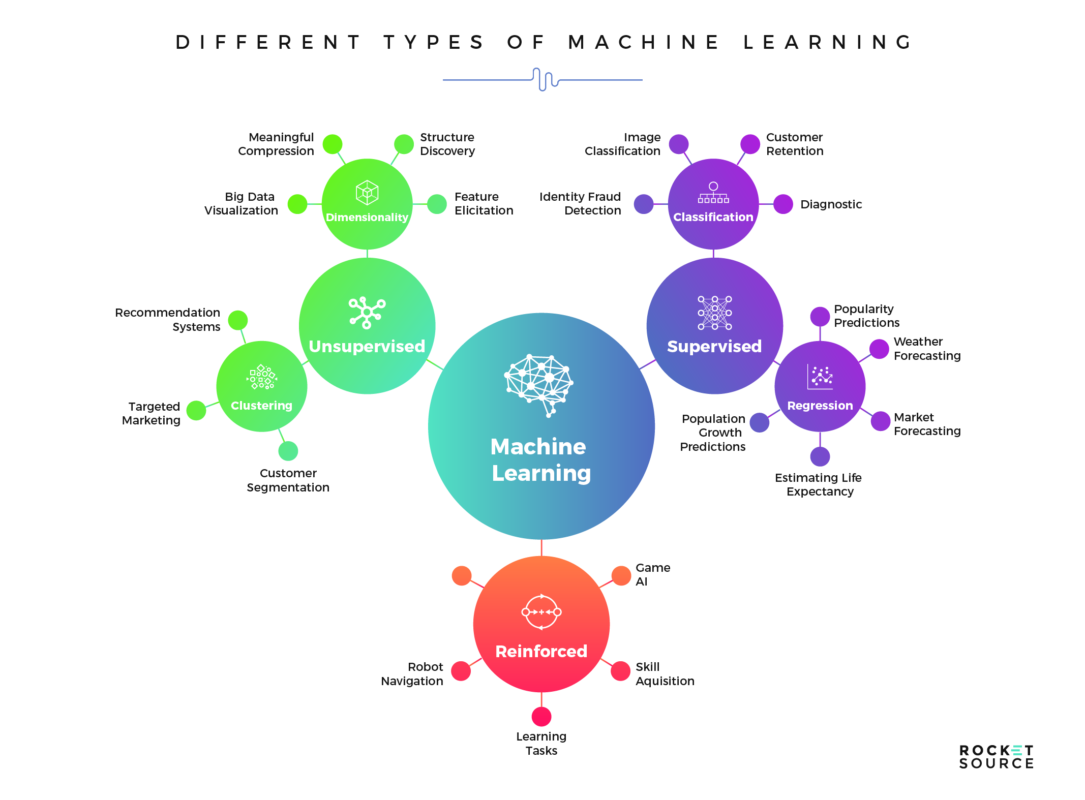

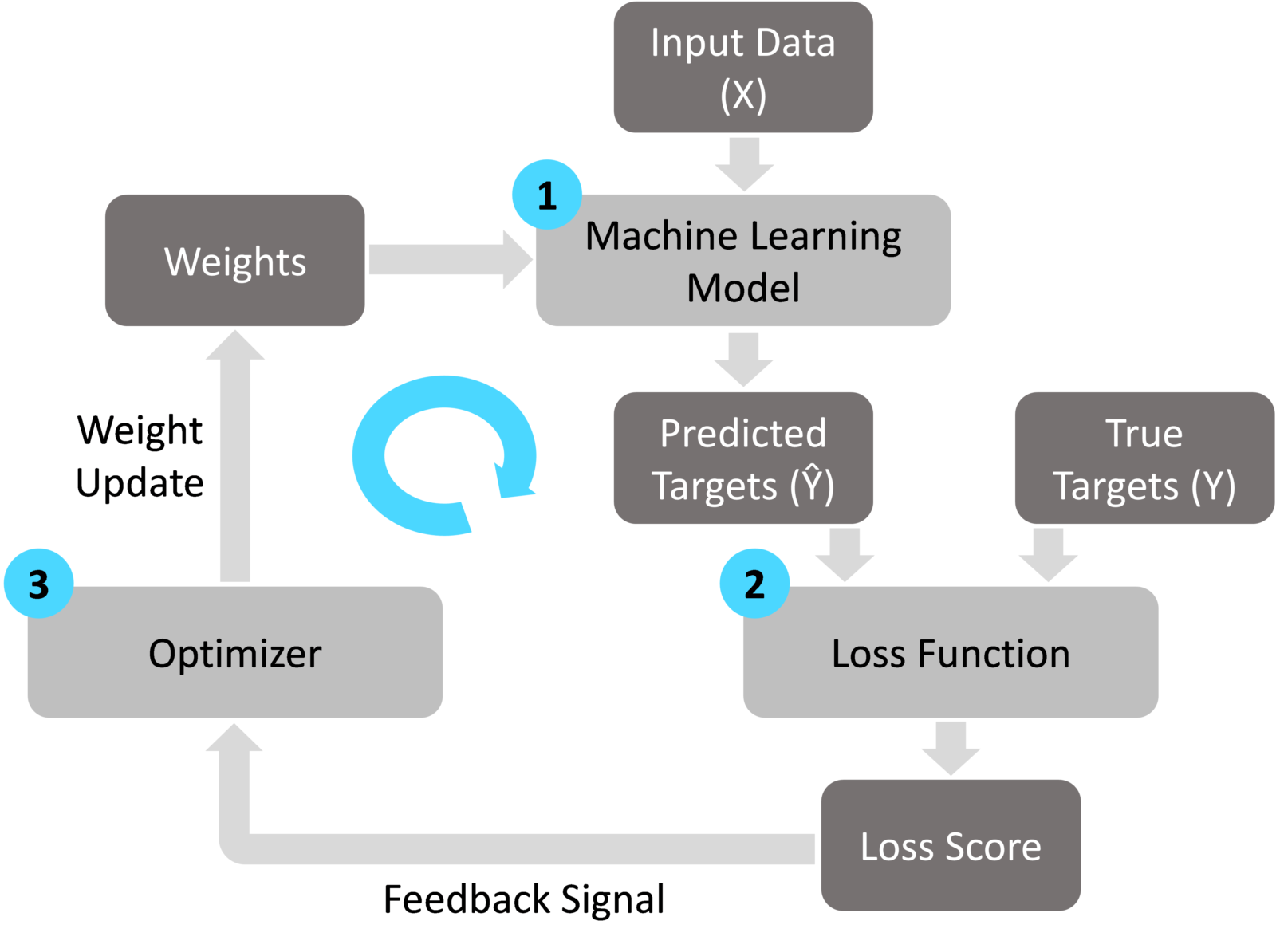

Deeper into Machine Learning Problems

Beyond LLMs, the broader field of Machine Learning faces its own array of problems. Data scarcity and data quality pose significant hurdles to training effective models. In many domains, collecting sufficient, high-quality data that is representative of every scenario a model may encounter is impractical. Techniques like data augmentation and transfer learning offer some respite, but the challenge persists.
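As a minimal illustration of data augmentation, the sketch below expands a tiny image dataset by adding a mirrored copy and a slightly noisy copy of each example. Images are represented as plain nested lists, and the helper names, noise scale, and sample data are assumptions made for this example. Real pipelines would typically use a dedicated library.

```python
import random


def flip_horizontal(image):
    """Mirror a 2D image (a list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]


def add_noise(image, rng, scale=0.05):
    """Add small Gaussian noise to every pixel; scale is an arbitrary choice."""
    return [[pixel + rng.gauss(0, scale) for pixel in row] for row in image]


def augment(dataset, rng):
    """Return each (image, label) pair plus a flipped and a noisy copy of it."""
    augmented = []
    for image, label in dataset:
        augmented.append((image, label))
        augmented.append((flip_horizontal(image), label))
        augmented.append((add_noise(image, rng), label))
    return augmented


rng = random.Random(0)  # fixed seed so the run is repeatable
dataset = [([[0.0, 0.5, 1.0], [1.0, 0.5, 0.0]], "edge")]  # hypothetical sample
bigger = augment(dataset, rng)
print(len(dataset), "->", len(bigger))  # 1 -> 3
```

Augmentation only helps when the transformation preserves the label. Mirroring is safe for many object photos but would be wrong for, say, recognizing handwritten letters where orientation carries meaning.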

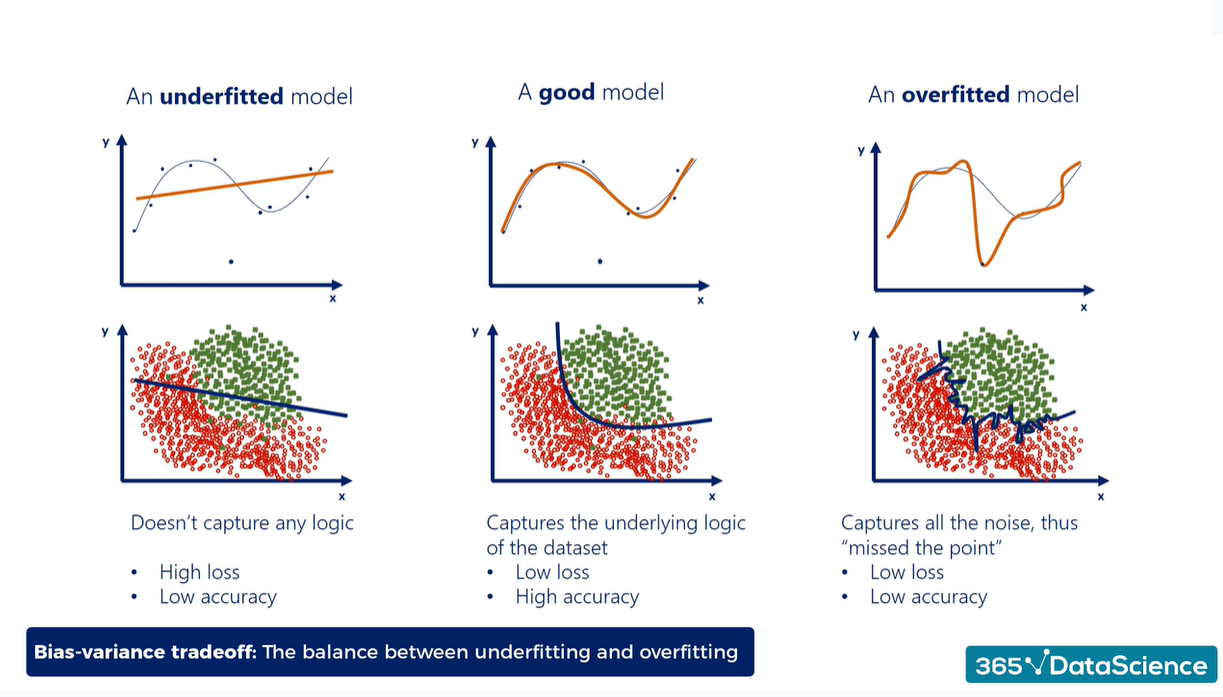

Additionally, generalization, meaning a model's ability to perform well on unseen data, remains a fundamental issue in ML. Overfitting occurs when a model learns the training data too well, including its noise, to the detriment of its performance on new data. Underfitting is the opposite failure, where the model is too simple to capture the underlying trends adequately.
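The contrast between overfitting and underfitting can be sketched with a small k-nearest-neighbor regressor in plain Python. With k=1 the model memorizes the training set, including its noise. With k equal to the training set size it predicts the same average everywhere. The synthetic sine data, noise level, and sample sizes are arbitrary assumptions for illustration.

```python
import math
import random


def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def mse(train, data, k):
    """Mean squared error of the k-NN predictor on data."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)


def make_data(n, rng):
    """Noisy samples of sin(x) on [0, 2*pi]; the noise level is arbitrary."""
    xs = [rng.uniform(0, 2 * math.pi) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0, 0.3)) for x in xs]


rng = random.Random(0)  # fixed seed so the run is repeatable
train = make_data(40, rng)
test = make_data(200, rng)

# k=1 memorizes the data, k=len(train) ignores x entirely, k=5 sits in between.
for k in (1, 5, len(train)):
    print(f"k={k:2d}  train MSE={mse(train, train, k):.3f}  "
          f"test MSE={mse(train, test, k):.3f}")
```

The k=1 model reaches zero training error by construction, which says nothing about how it handles new points. Comparing the training and test columns side by side is the practical way to spot either failure mode.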

Where We Are Heading: ML’s Evolution

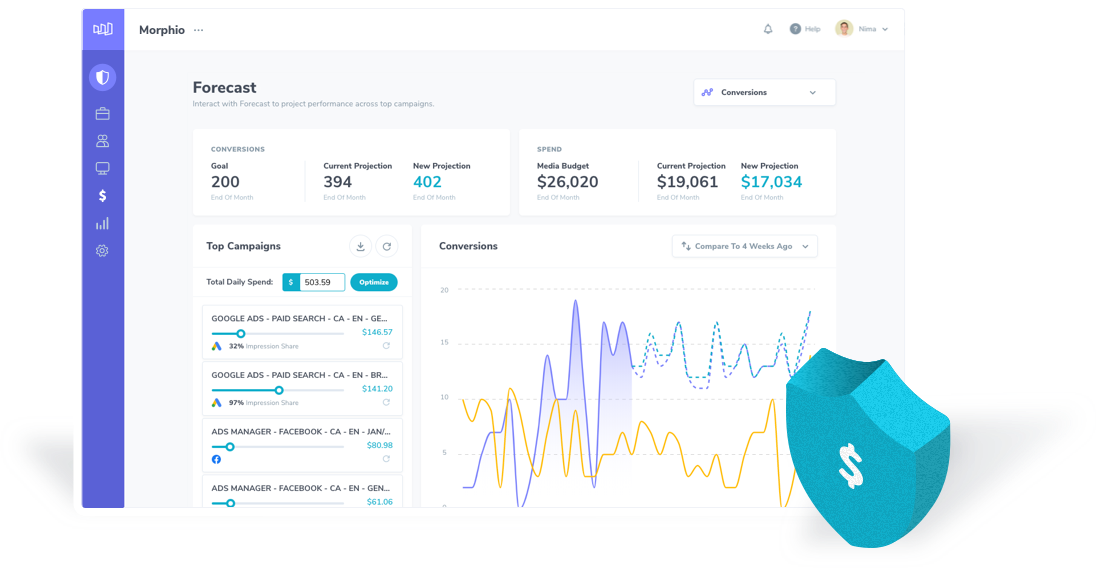

The evolution of machine learning and LLMs is intertwined with the progression of computational capabilities and the refinement of algorithms. Emerging technologies such as quantum computing, along with other hardware and algorithmic advances, may eventually help overcome existing limitations and unlock new applications.

In my experience, both at DBGM Consulting, Inc., and through academic pursuits at Harvard University, I’ve seen firsthand the power of advanced AI and machine learning models in driving innovation and solving complex problems. As we advance, a critical examination of ethical implications, responsible AI utilization, and the pursuit of sustainable AI development will be paramount.

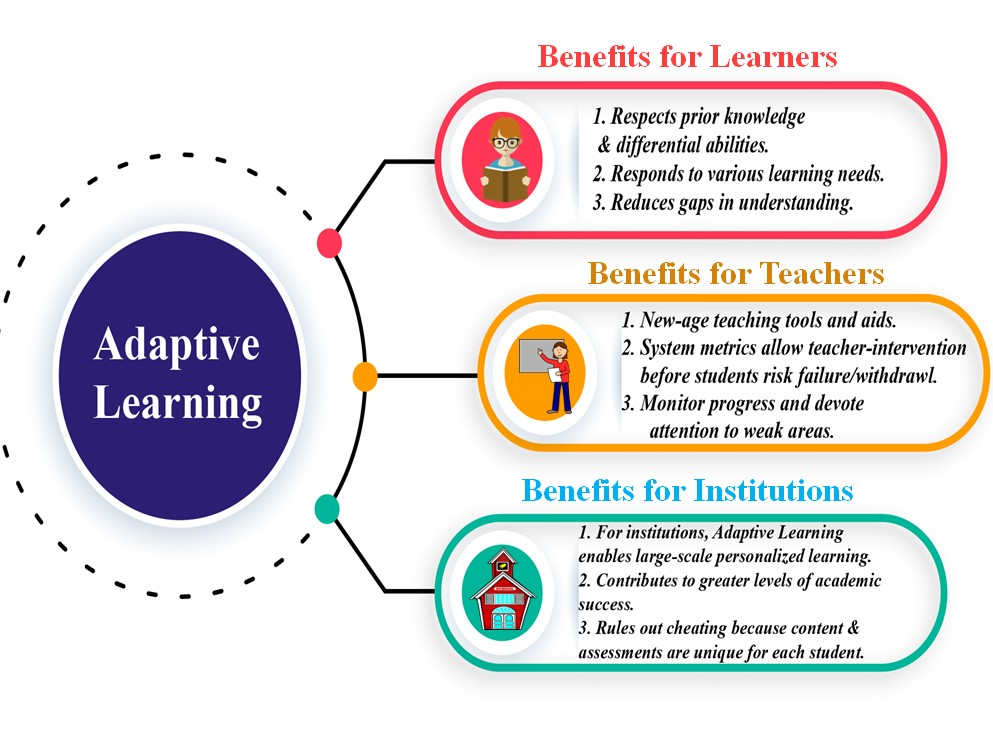

If we adopt a methodical and conscientious approach to overcoming these challenges, machine learning, and LLMs in particular, can make substantial contributions across various sectors. The potential for these technologies to transform industries, enhance decision-making, and create more personalized and intuitive digital experiences is immense, but it comes with a responsibility to navigate the intrinsic challenges judiciously.

In conclusion, as we delve deeper into the intricacies of machine learning problems, understanding and addressing the complexities of large language models is critical. Through continuous research, thoughtful ethical considerations, and technological innovation, the future of ML is poised for groundbreaking advancements that could redefine our interaction with technology.
