Enhancing Machine Learning Through Human Collaboration: A Deep Dive

As the boundaries of artificial intelligence (AI) and machine learning (ML) continue to expand, the integration of human expertise with algorithmic efficiency has become increasingly important. Building on our last discussion of the potential of large language models in ML, this article looks more closely at the role that humans play in training, refining, and advancing these models. Drawing on my experience in AI and ML, including my work on machine learning algorithms for self-driving robots, I explore how collaboration between humans and machines can drive the next wave of technological innovation.

Understanding the Human Input in Machine Learning

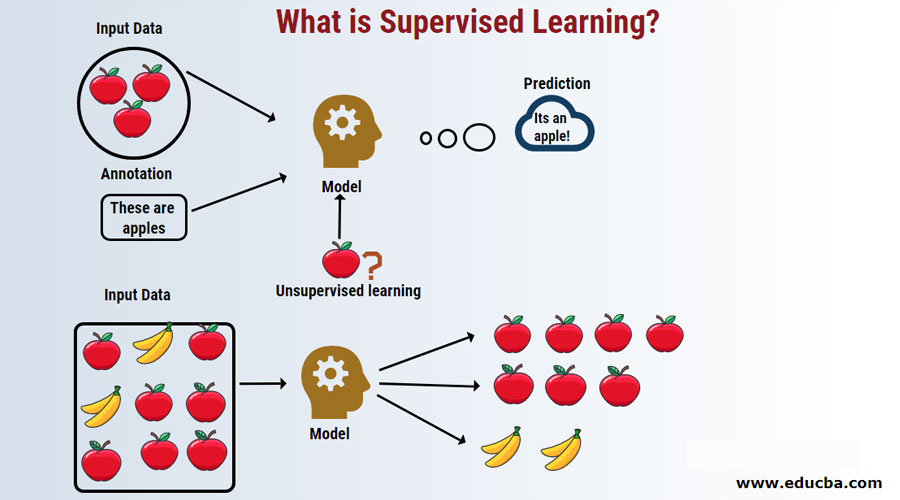

At its core, machine learning is about teaching computers to learn patterns from data, loosely analogous to the way humans learn from examples. However, despite significant advances, machines still lack the nuanced understanding and flexible problem-solving capabilities that humans have. This is where human collaboration becomes indispensable. In supervised learning, for example, humans guide algorithms by labeling data, setting rules, and making adjustments based on outcomes.

Case Study: Collaborative Machine Learning in Action

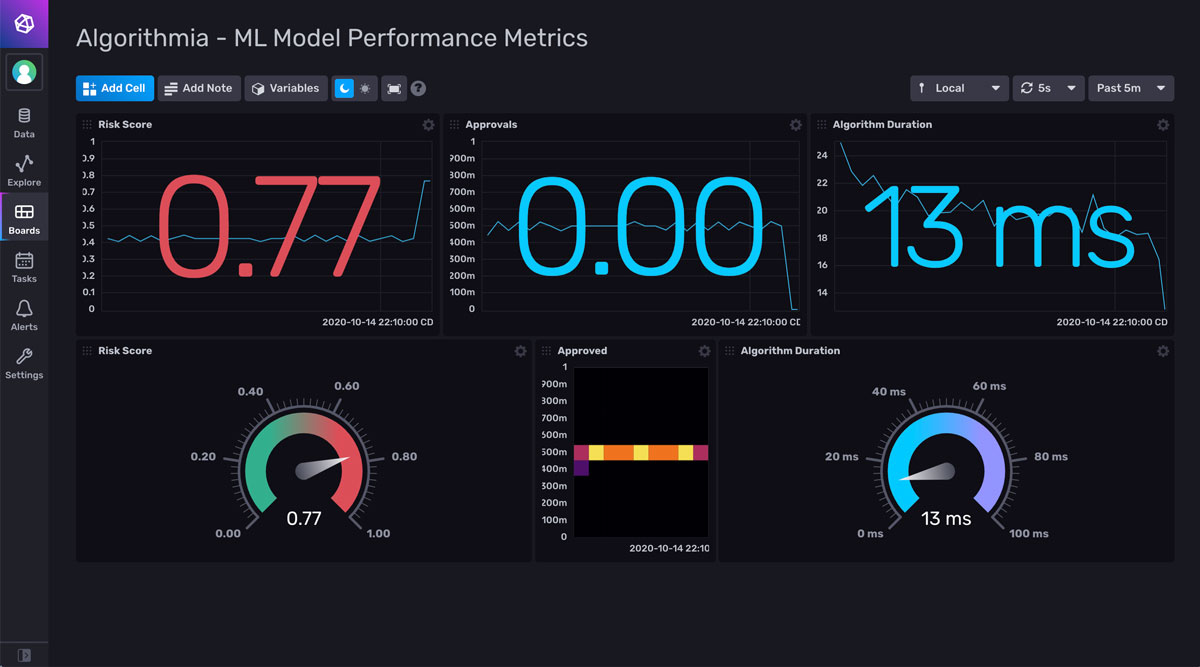

During my tenure at Microsoft, I observed firsthand the power of combining human intuition with algorithmic precision. In one project, we worked on enhancing Intune and MECM solutions by incorporating feedback loops where system administrators could annotate system misclassifications. This collaborative approach not only improved the system’s accuracy but also significantly reduced the time needed to adapt to new threats and configurations.

Addressing AI Bias and Ethical Considerations

One of the most critical areas where human collaboration is essential is in addressing bias and ethical concerns in AI systems. Despite their capabilities, ML models can perpetuate or even exacerbate biases if trained on skewed datasets. Human oversight, therefore, plays a crucial role in identifying, correcting, and preventing these biases. Drawing inspiration from philosophers like Alan Watts, I believe in approaching AI development with mindfulness and respect for diversity, ensuring that our technological advancements are inclusive and equitable.
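One simple way to give human reviewers something concrete to look at is to compare a model's accuracy across groups in the evaluation data. The sketch below is only an illustration under stated assumptions: the function names `accuracy_by_group` and `flag_for_review`, the record layout, and the `max_gap` tolerance are all hypothetical, and an accuracy gap is just one of many possible signals of bias.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, List, Tuple

# Assumed record layout: (group, true_label, predicted_label).
Record = Tuple[Hashable, int, int]


def accuracy_by_group(records: Iterable[Record]) -> Dict[Hashable, float]:
    """Compute the fraction of correct predictions separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


def flag_for_review(accuracy: Dict[Hashable, float], max_gap: float) -> List[Hashable]:
    """Return groups whose accuracy trails the best group by more than max_gap.

    The flagged groups are candidates for human inspection, not proof of bias.
    """
    best = max(accuracy.values())
    return sorted(g for g, acc in accuracy.items() if best - acc > max_gap)


if __name__ == "__main__":
    # Made-up evaluation records for two illustrative groups.
    records = [
        ("a", 1, 1), ("a", 0, 0), ("a", 1, 1), ("a", 0, 0),
        ("b", 1, 0), ("b", 0, 0), ("b", 1, 0), ("b", 0, 0),
    ]
    scores = accuracy_by_group(records)
    print(scores, flag_for_review(scores, max_gap=0.2))
```

The numbers only point to where a person should look; deciding whether a gap reflects a skewed dataset, and what to do about it, remains a human judgment.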

Techniques for Enhancing Human-AI Collaboration

To harness the full potential of human-AI collaboration, several strategies can be adopted:

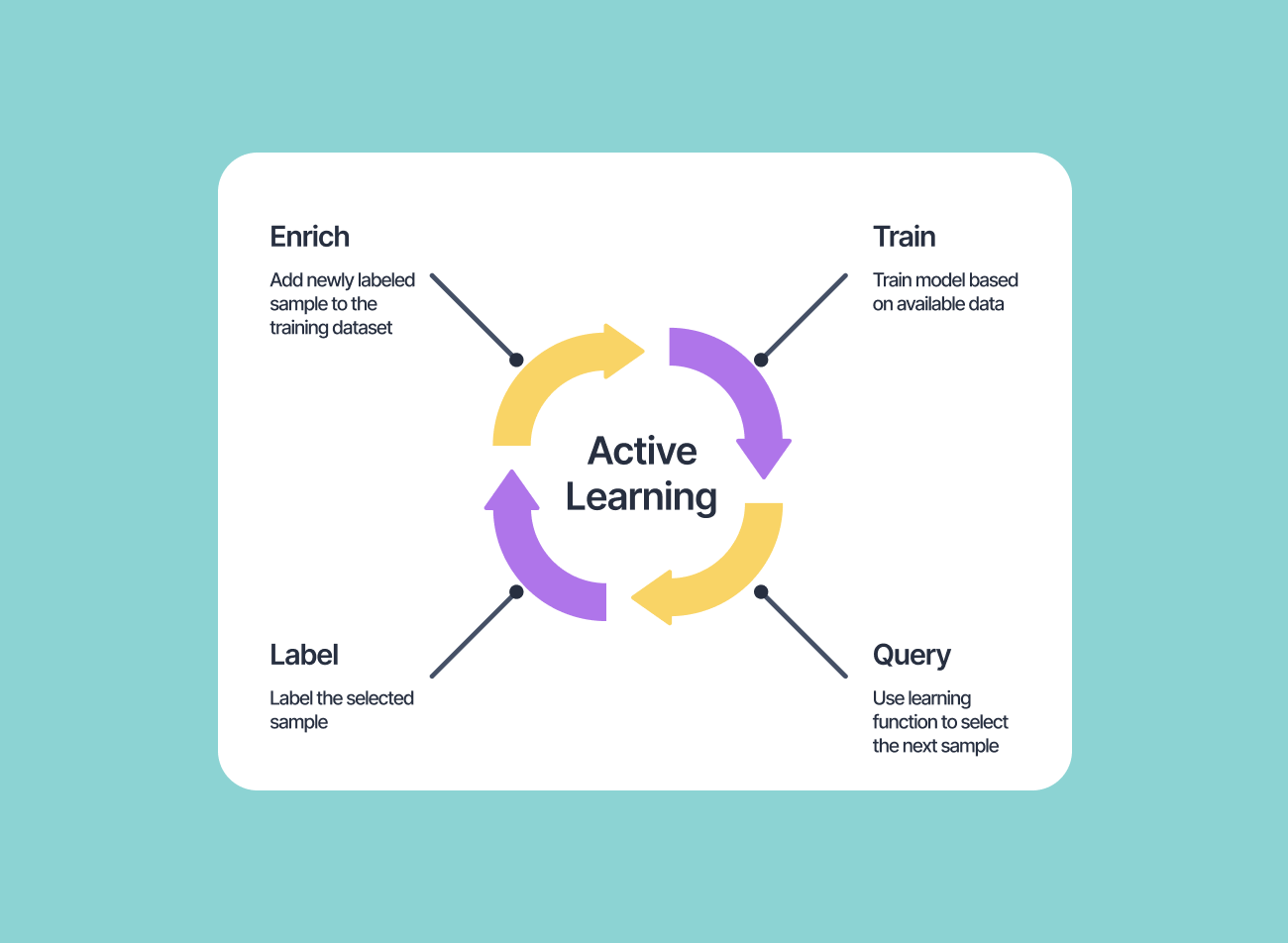

- Active Learning: The algorithm selects the most informative data points, typically those it is least certain about, for human annotation, so that limited labeling effort improves the model as much as possible.

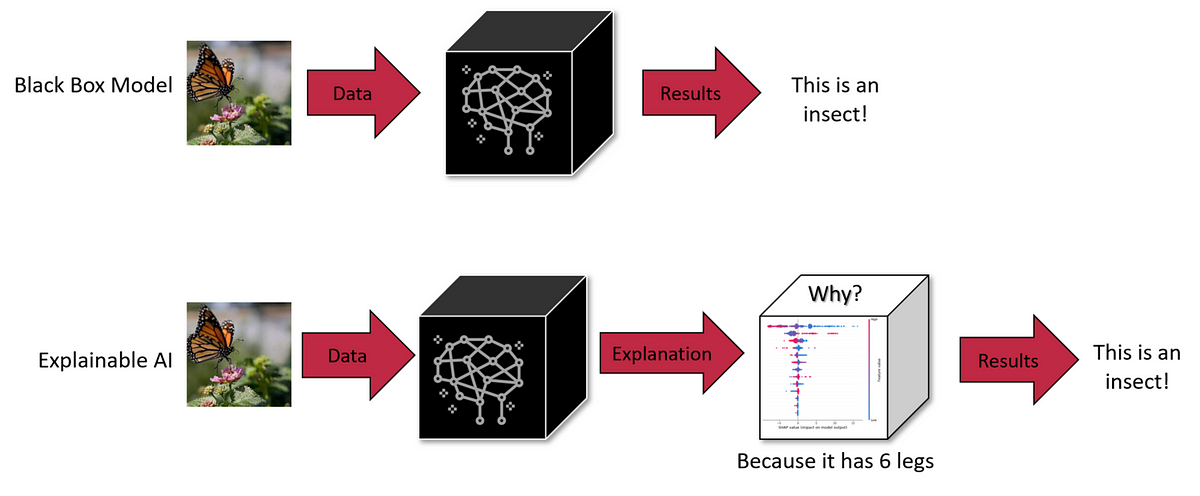

- Explainable AI (XAI): Developing models that provide insights into their decision-making processes makes it easier for humans to trust and manage AI systems.

- Human-in-the-loop (HITL): A framework in which humans take part in the iterative cycle of AI training, and models are fine-tuned based on their feedback and corrections.
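To make the active learning and human-in-the-loop ideas above more concrete, here is a minimal sketch that combines them on a toy one-dimensional problem. Everything in it is an assumption made for illustration: the model is a simple threshold with a logistic score, the selection rule is uncertainty sampling (one common active learning strategy among several), and `ask_human` is a hypothetical stand-in for a real annotator.

```python
import math
from typing import Callable, List, Sequence, Tuple


def predict_proba(x: float, threshold: float) -> float:
    """Toy model: probability that x belongs to the positive class."""
    return 1.0 / (1.0 + math.exp(-(x - threshold)))


def most_uncertain(pool: Sequence[float], threshold: float, budget: int) -> List[float]:
    """Uncertainty sampling: pick the points whose probability is closest to 0.5."""
    return sorted(pool, key=lambda x: abs(predict_proba(x, threshold) - 0.5))[:budget]


def refit_threshold(labeled: Sequence[Tuple[float, int]], default: float) -> float:
    """Place the threshold midway between the largest negative and smallest positive.

    Keeps the previous threshold until both classes have been seen.
    """
    negatives = [x for x, y in labeled if y == 0]
    positives = [x for x, y in labeled if y == 1]
    if not negatives or not positives:
        return default
    return (max(negatives) + min(positives)) / 2.0


def human_in_the_loop(
    pool: Sequence[float],
    ask_human: Callable[[float], int],  # hypothetical annotator: returns 0 or 1
    threshold: float,
    rounds: int,
    budget: int,
) -> Tuple[float, List[Tuple[float, int]]]:
    """Alternate between querying a human for labels and refitting the model."""
    remaining = list(pool)
    labeled: List[Tuple[float, int]] = []
    for _ in range(rounds):
        if not remaining:
            break
        for x in most_uncertain(remaining, threshold, budget):
            labeled.append((x, ask_human(x)))  # human supplies the label
            remaining.remove(x)
        threshold = refit_threshold(labeled, threshold)
    return threshold, labeled


if __name__ == "__main__":
    pool = [float(i) for i in range(11)]      # unlabeled points 0.0 .. 10.0
    simulated_expert = lambda x: int(x > 6.5)  # stands in for a person
    final, labels = human_in_the_loop(pool, simulated_expert, 5.4, rounds=2, budget=2)
    print(final, len(labels))
```

In this toy run, the loop starts from a threshold of 5.4 and settles on 6.5 after asking for only 4 of the 11 possible labels, because each query targets the region where the model is least sure. Real systems replace the threshold model with a trained classifier and the simulated expert with an annotation interface, but the cycle of select, label, and refit is the same.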

Future Directions: The Convergence of Human Creativity and Machine Efficiency

The integration of human intelligence and machine learning holds immense promise for solving complex, multidimensional problems. From enhancing creative processes in design and music to addressing crucial challenges in healthcare and environmental conservation, the synergy between humans and AI can lead to groundbreaking innovations. As a practitioner deeply involved in AI, cloud solutions, and security, I see a future where this collaboration not only achieves technological breakthroughs but also fosters a more inclusive, thoughtful, and ethical approach to innovation.

Conclusion

As we continue to explore machine learning and its implications for the future, the role of human collaboration is hard to overstate. By combining the complementary strengths of human intuition and machine efficiency, we can overcome current limitations, address ethical concerns, and work toward a future in which AI enhances many aspects of human life. As this field develops, let us remain committed to fostering an environment where humans and machines learn from and with each other, driving innovation forward in harmony.