Artificial Intelligence: The Current Reality and Challenges for the Future

In recent years, Artificial Intelligence (AI) has generated both significant excitement and significant concern. As someone deeply invested in AI through both my consulting firm, DBGM Consulting, Inc., and my academic endeavors, I have seen firsthand the potential AI holds for transforming many industries. Alongside these possibilities, however, come challenges that we must consider if we are to integrate AI responsibly into everyday life.

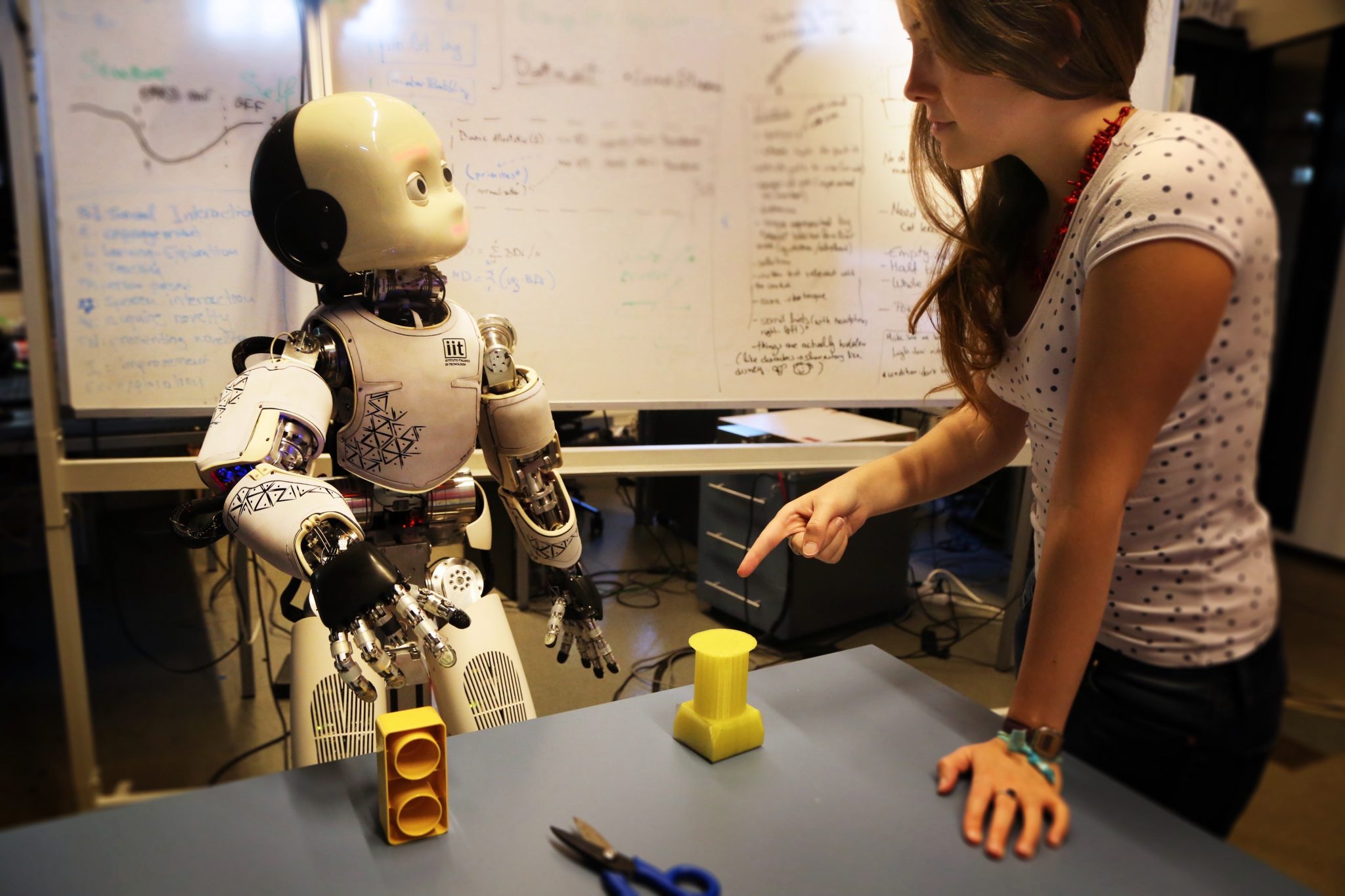

AI, in its current state, is highly specialized. While many people envision AI as a human-like entity that can learn and adapt to any task, the reality is that we still rely chiefly on narrow AI: systems designed to perform specific, well-defined tasks, sometimes better than humans can. At DBGM Consulting, we implement AI-driven process automation and machine learning models, but these solutions are limited to the outcomes they were designed for; they do not exhibit general intelligence.

The ongoing development of AI presents both opportunities and obstacles. In cloud solutions, for instance, AI can substantially improve the efficiency of infrastructure management, optimize complex networks, and streamline large-scale cloud migrations. However, I have also seen the limitations of current AI firsthand, especially in client projects where the environment becomes unpredictable or complex.

Understanding the Hype vs. Reality

One of the challenges in AI today is managing expectations about what the technology can do. In the commercial world, much of the hype around AI is driven by ambitious marketing claims and media coverage. Many people imagine AI achieving general, human-level intelligence, making sound ethical decisions, or even displaying human-like empathy. The reality is quite different.

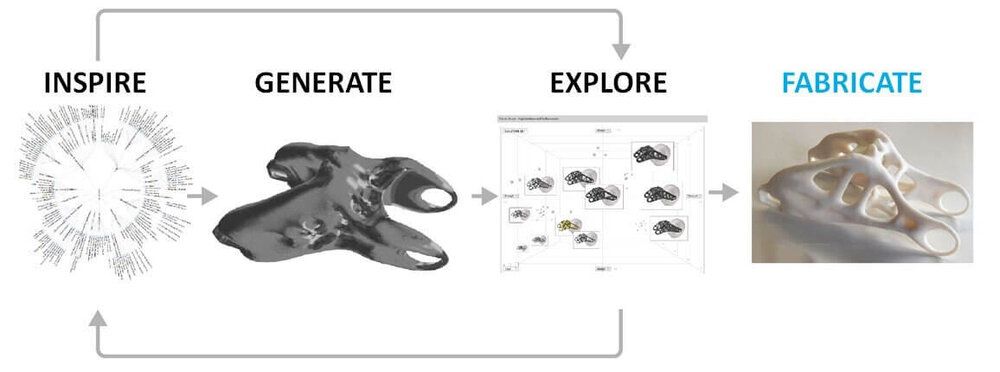

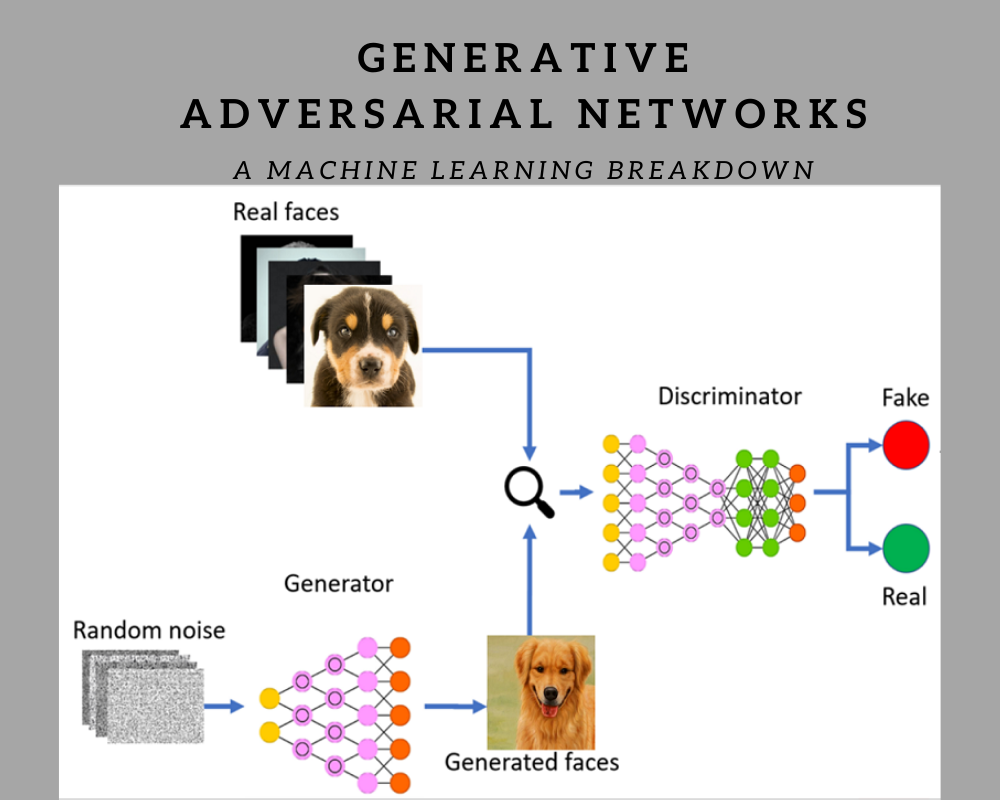

To bridge the gap between these hopes and current capabilities, it’s essential to understand the science behind AI. Much of the work being done relies on powerful algorithms that identify patterns within massive datasets. While these algorithms perform very well in areas like image recognition, language translation, and recommendation engines, they do not yet come close to understanding or reasoning like a human brain. For example, recent AI advances in elastic body simulation have produced highly accurate models for physics and computer graphics, but the systems behind these simulations are still far from true “intelligence.”

Machine Learning: The Core of Today’s AI

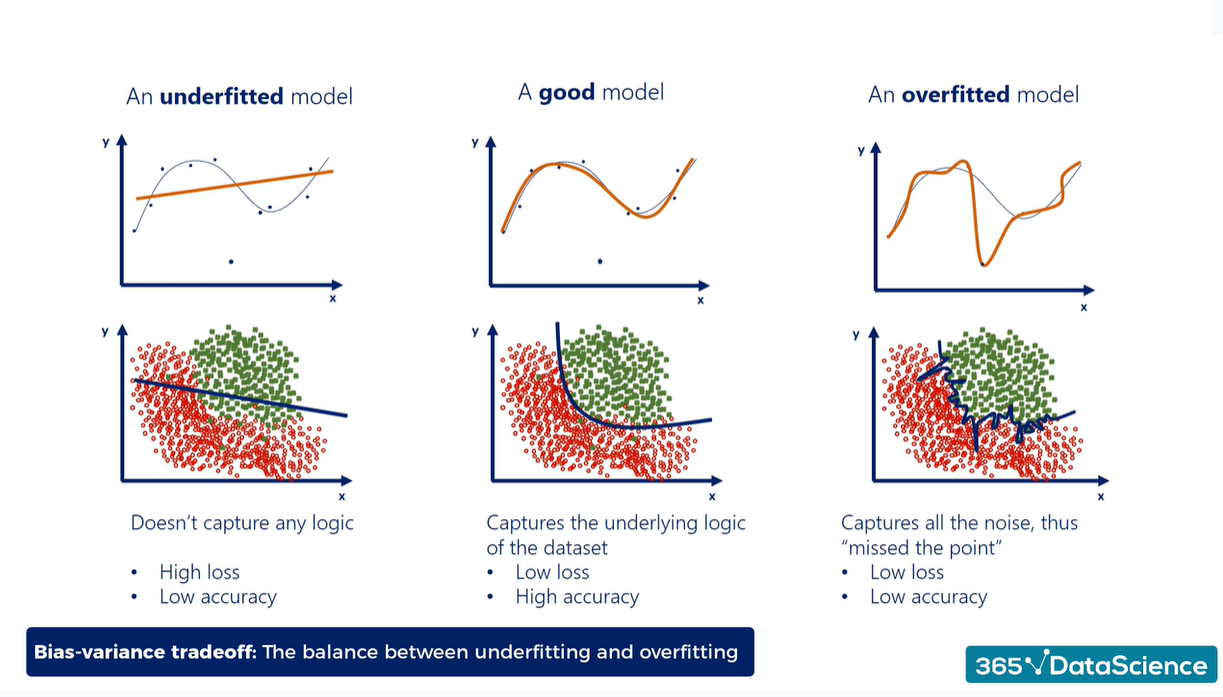

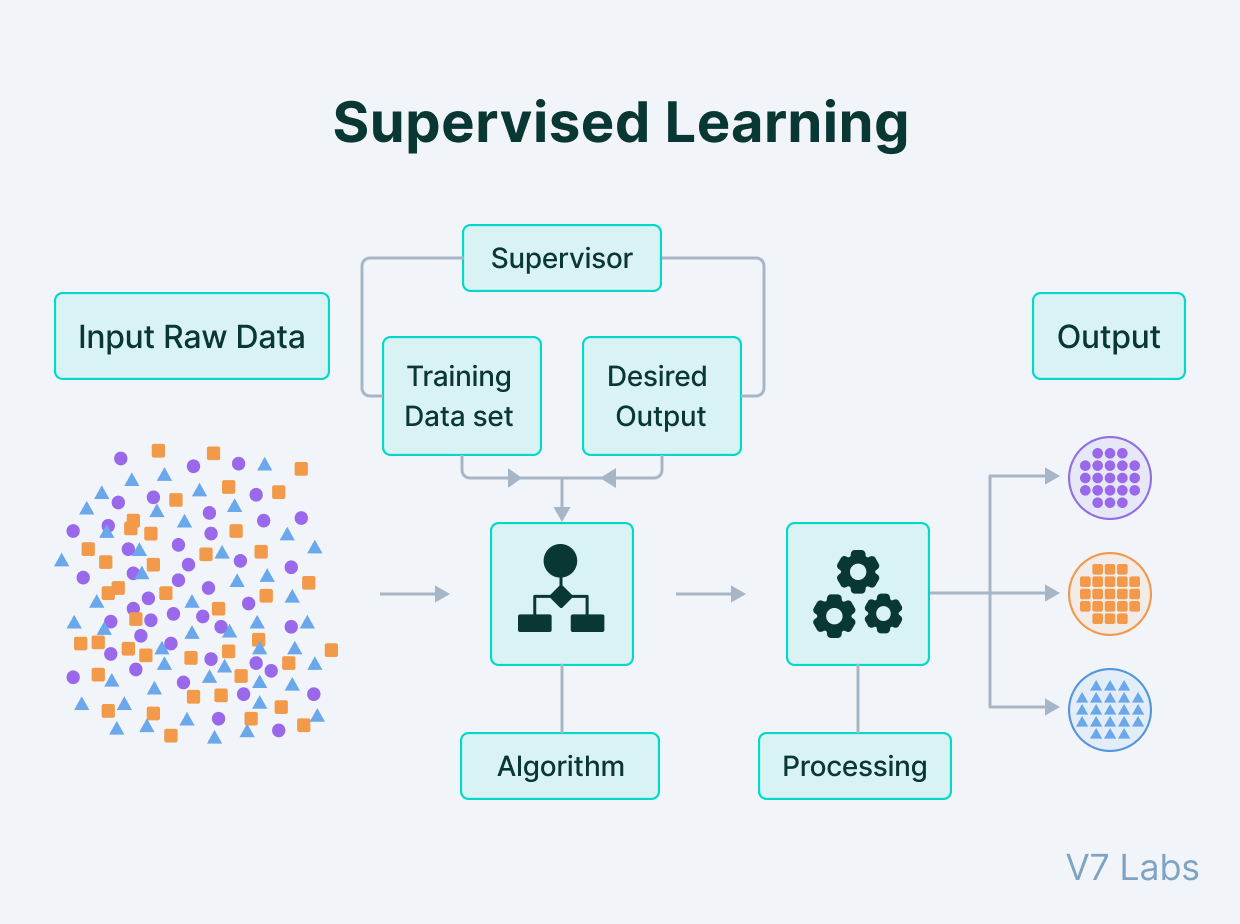

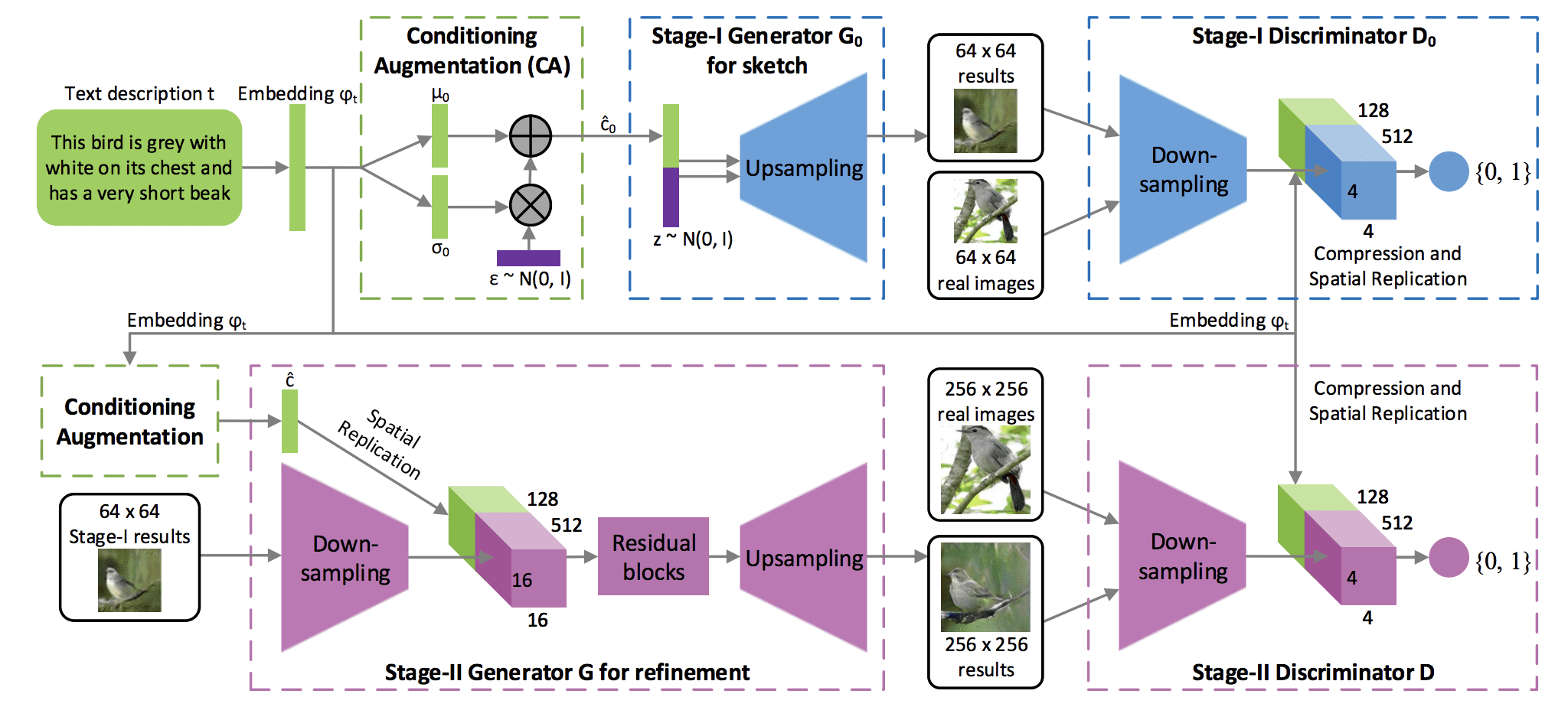

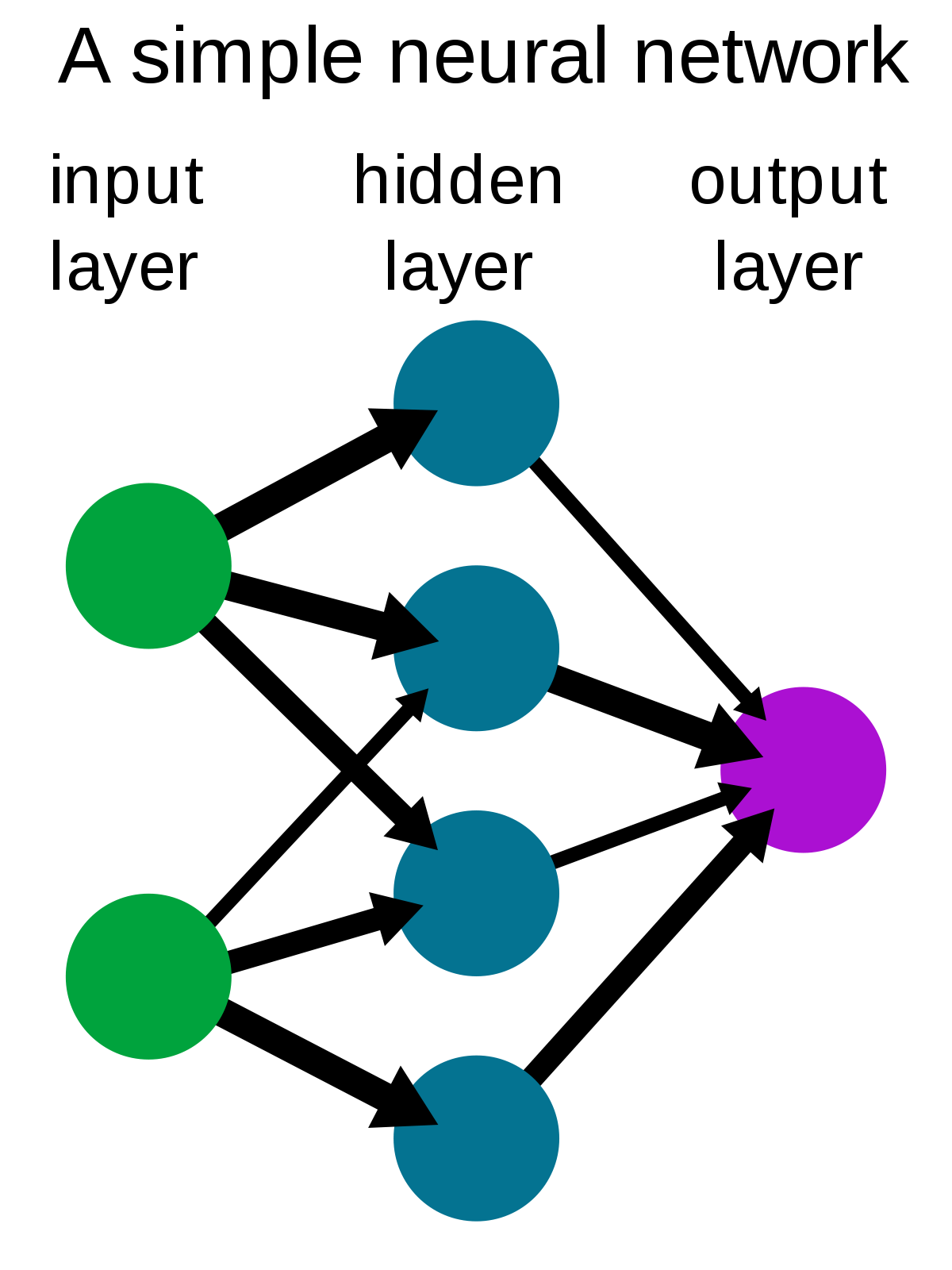

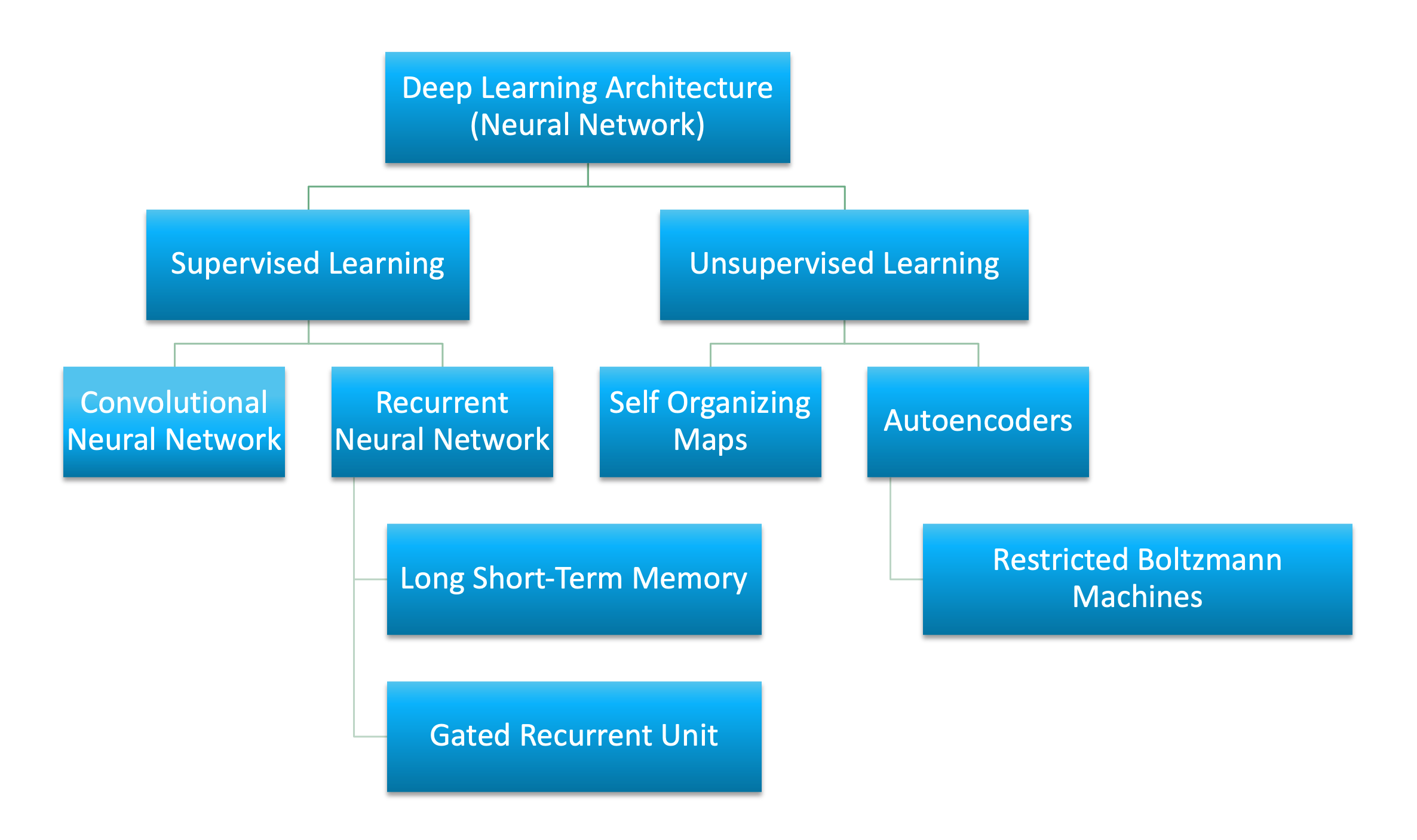

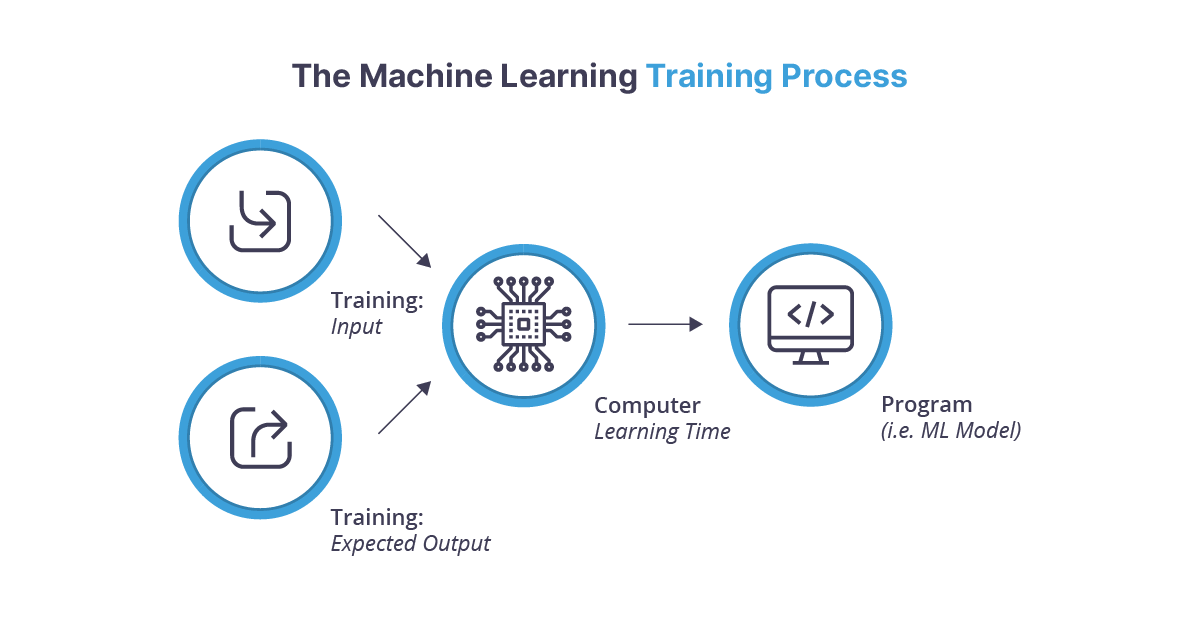

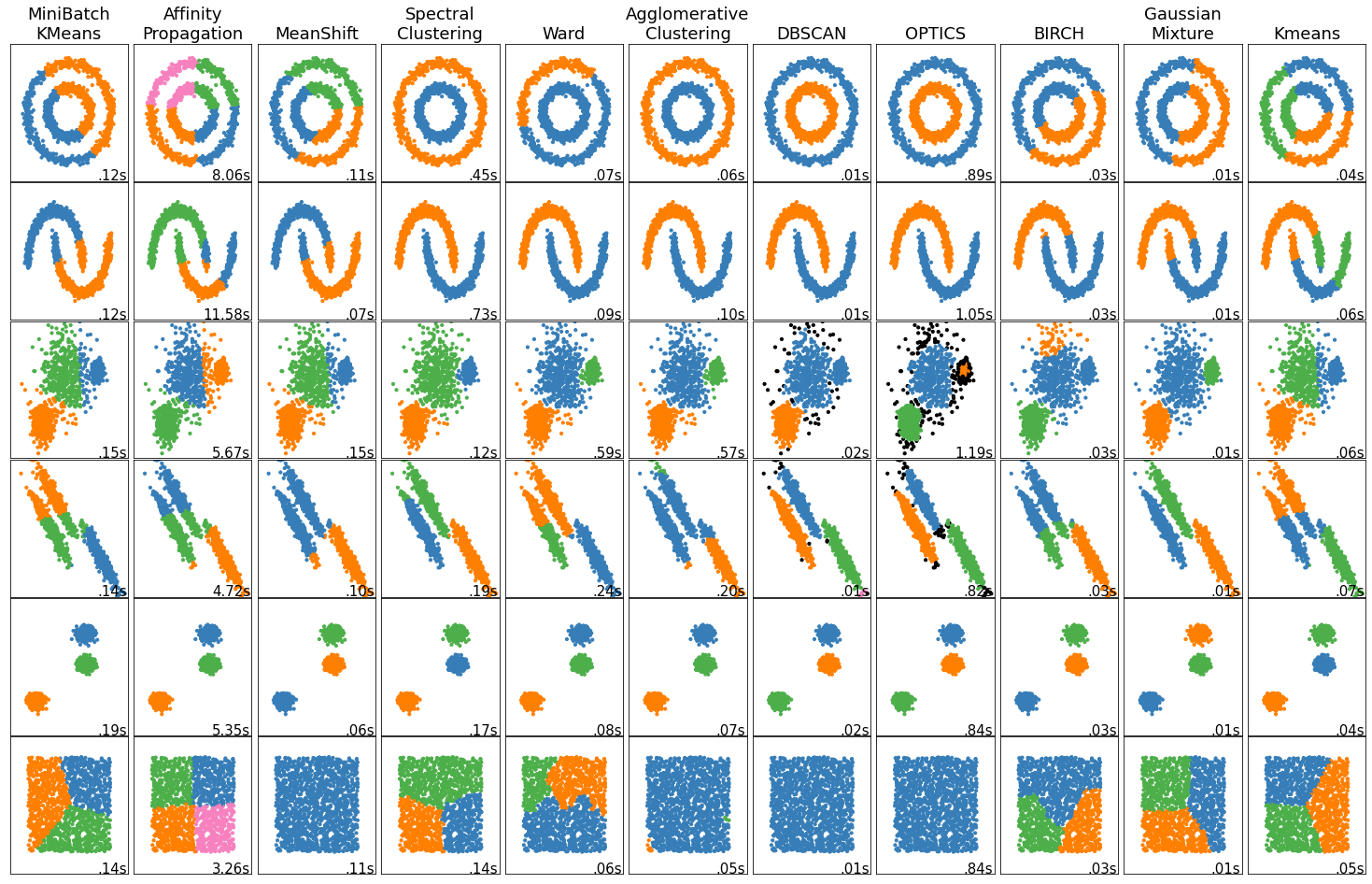

If you follow my work or have read my previous articles on AI development, you already know that machine learning (ML) lies at the heart of today’s AI advancements. Machine learning, a subset of AI, builds models whose behavior is learned from data and can be updated as new data is gathered. At DBGM Consulting, many of our AI projects use machine learning to automate processes, predict outcomes, or support data-driven decisions. However, one crucial point I often emphasize to clients is that ML systems are only as good as the data they are trained on. A model trained on biased or poor-quality data can do more harm than good.
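Because so much depends on the training data, a simple audit before training can catch obvious problems early. The sketch below is a hypothetical illustration, not a DBGM tool: `audit_dataset`, the toy rows, and the 20% `min_share` threshold are all assumptions chosen for the example. It flags under-represented labels or groups and counts missing values per field.

```python
from collections import Counter
from typing import Any


def audit_dataset(rows: list[dict[str, Any]], label_key: str, group_key: str,
                  min_share: float = 0.2) -> list[str]:
    """Return human-readable warnings about imbalance and missing values.

    Hypothetical helper; min_share is an arbitrary illustrative threshold.
    """
    n = len(rows)
    labels = Counter(row[label_key] for row in rows)
    groups = Counter(row[group_key] for row in rows)
    # Count, per field, how many rows have no value recorded (None).
    missing = Counter(field for row in rows for field, value in row.items() if value is None)

    issues = []
    # Flag any label value or group that makes up less than min_share of the rows.
    for kind, counts in (("label", labels), ("group", groups)):
        for value, count in counts.items():
            if count / n < min_share:
                issues.append(f"{kind} {value!r} is only {count / n:.0%} of rows")
    for field, count in missing.items():
        issues.append(f"field {field!r} is missing in {count} of {n} rows")
    return issues


# Hypothetical toy dataset: one region is barely represented and one age is missing.
rows = [
    {"age": 34, "region": "north", "label": 1},
    {"age": 51, "region": "north", "label": 0},
    {"age": None, "region": "north", "label": 1},
    {"age": 29, "region": "north", "label": 1},
    {"age": 42, "region": "north", "label": 0},
    {"age": 38, "region": "south", "label": 1},
]
issues = audit_dataset(rows, label_key="label", group_key="region")
for issue in issues:
    print(issue)
```

An audit like this cannot prove a dataset is unbiased; it only surfaces gaps that a person then has to judge.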

ML offers tremendous advantages when the task is well understood and the data is plentiful and well curated. Problems emerge, however, when the data is chaotic or when the system is pushed beyond the conditions it was trained on. This is why, even in domains where AI shines, such as neural-network text prediction or self-driving algorithms, lingering edge cases and unpredictable outcomes still require human oversight.
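What "pushed beyond its training limits" means can be seen even in a toy model. In this minimal sketch, an assumption for illustration only, a straight line is fitted by ordinary least squares to samples of a hypothetical quadratic process observed only on the interval [0, 1]. The fit looks reasonable inside that range, but its error grows sharply when it is asked about x = 3, a region it never saw. Real ML models are far more complex, but the failure mode is similar in kind.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def true_process(x: float) -> float:
    # Hypothetical "real world" relationship the model only sees through samples.
    return x ** 2


train_x = [0.0, 0.25, 0.5, 0.75, 1.0]  # training data only covers [0, 1]
train_y = [true_process(x) for x in train_x]
slope, intercept = fit_line(train_x, train_y)


def abs_error(x: float) -> float:
    """Absolute difference between the model's prediction and the true value."""
    return abs((slope * x + intercept) - true_process(x))


in_range_error = abs_error(0.5)      # inside the training range
out_of_range_error = abs_error(3.0)  # far outside it
print(f"error inside training range: {in_range_error:.3f}")
print(f"error far outside it:        {out_of_range_error:.3f}")
```

With these inputs the fitted line is y = x - 0.125, so the error is 0.125 at x = 0.5 but 6.125 at x = 3: nothing in the training data warned the model that the relationship curves upward.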

Moreover, as I often discuss with my clients, ethical concerns must be factored into the deployment of AI and ML systems. AI models, whether focused on cybersecurity, medical diagnosis, or customer service automation, can perpetuate harmful biases if they are not designed and trained responsibly. Today’s algorithms largely learn statistical patterns from their training data, which means they cannot fully understand context or check their own outputs for fairness without human intervention.
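Because a model will not check itself for fairness, people have to measure it. One common, simple metric is the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The sketch below computes it in plain Python; the predictions, group labels, and the 0.1 tolerance are hypothetical, and demographic parity is only one of several fairness definitions, so a small gap does not by itself make a model fair.

```python
from collections import defaultdict


def positive_rate_by_group(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Fraction of positive (1) predictions the model made for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates: dict[str, float]) -> float:
    """Demographic parity difference: highest minus lowest positive rate."""
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) for applicants from two groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

rates = positive_rate_by_group(predictions, groups)
gap = parity_gap(rates)
MAX_GAP = 0.1  # hypothetical tolerance; the right value is a policy decision, not a technical one
status = "needs human review" if gap > MAX_GAP else "within tolerance"
print(rates, f"gap={gap:.2f}", status)
```

Here group "a" is approved 80% of the time and group "b" 20%, so the gap of 0.60 is flagged for a person to investigate.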

Looking Toward the Future of AI

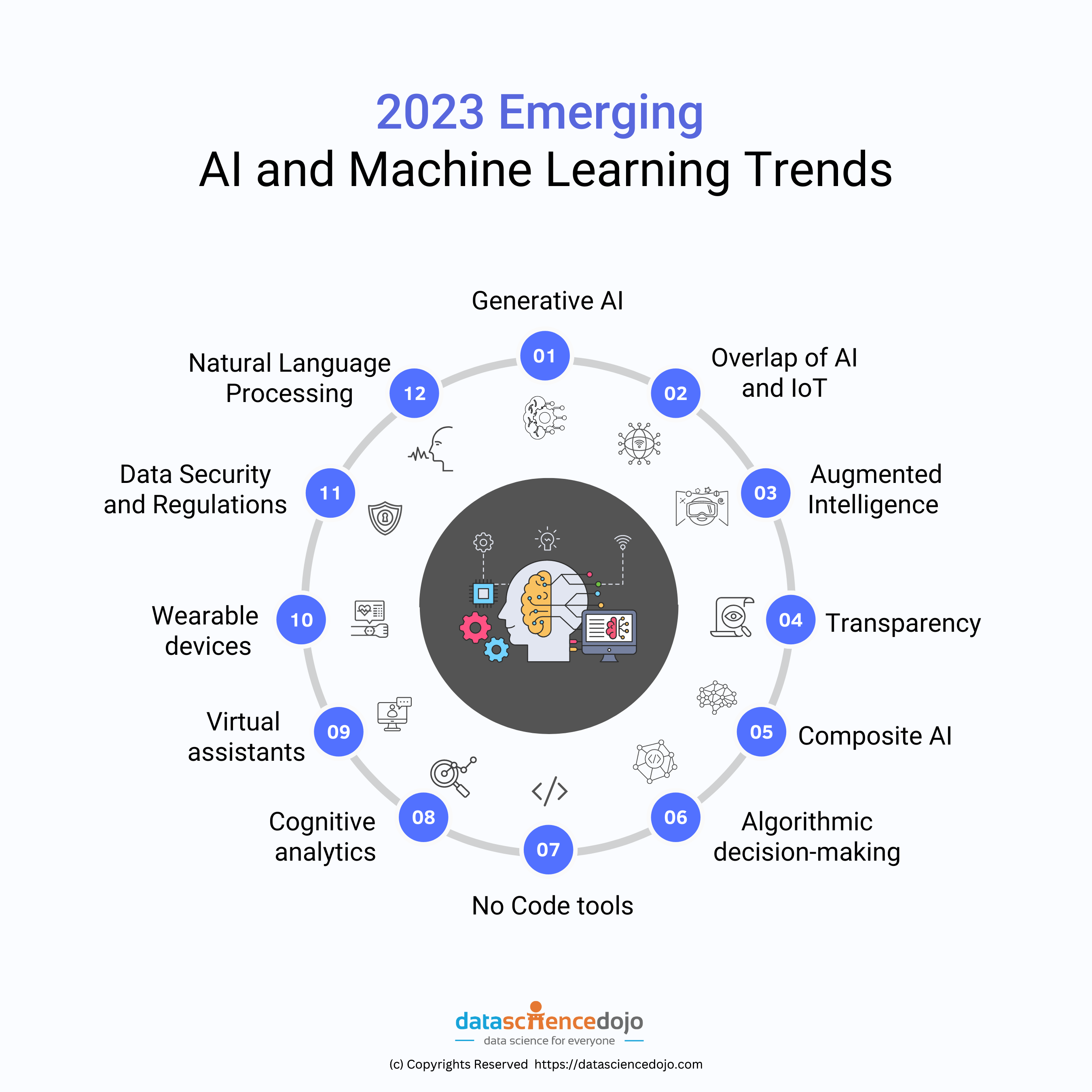

As a technologist and consultant, my work on AI projects keeps me optimistic about the future, but it also keeps me aware of the many challenges still in play. One area that particularly fascinates me is the growing intersection of AI with fields like quantum computing and advanced simulation. From elastic body simulation reshaping industries like gaming and animation to AI-driven research helping unlock the mysteries of the universe, the range of possibilities is vast. Nevertheless, the road ahead is not without obstacles.

Consider, for instance, my experience in the automotive industry—a field I have been passionate about since my teenage years. AI is playing a more prominent role in self-driving technologies as well as in predictive maintenance analytics for vehicles. But I continue to see AI limitations in real-world applications, especially in complex environments where human intuition and judgment are crucial for decision-making.

Challenges We Must Address

Before we can unlock the full potential of artificial intelligence, several critical challenges must be addressed:

- Data Quality and Bias: AI models require vast amounts of data to train effectively. Biased or incomplete datasets can lead to harmful or incorrect predictions.

- Ethical Concerns: We must put in place regulations and guidelines to ensure AI is built and trained ethically and that its decision-making processes are transparent.

- Limitations of Narrow AI: Current AI systems are highly specialized and lack the broad, generalized knowledge that many people expect from AI in popular media portrayals.

- Human Oversight: No matter how advanced AI may become, keeping humans in the loop will remain vital to preventing unforeseen problems and ethical issues.
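Keeping humans in the loop can be built directly into a system's design. A common pattern is confidence-based routing: predictions above a threshold are accepted automatically, and everything else goes to a human reviewer. The sketch below is a minimal illustration under assumptions: the `route` function, the `Decision` record, the claim IDs, and the 0.9 threshold are all hypothetical, and it presumes the model's confidence scores are reasonably well calibrated, which is not guaranteed in practice.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    item_id: str
    label: str
    confidence: float
    needs_human_review: bool


def route(item_id: str, label: str, confidence: float, threshold: float = 0.9) -> Decision:
    """Accept high-confidence predictions; send the rest to a human reviewer.

    Hypothetical helper; the threshold is an illustrative choice, not a recommendation.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return Decision(item_id, label, confidence, needs_human_review=confidence < threshold)


# Hypothetical batch of (item_id, predicted_label, model_confidence) tuples.
batch = [
    ("claim-001", "approve", 0.97),
    ("claim-002", "deny", 0.62),
    ("claim-003", "approve", 0.91),
]
decisions = [route(*item) for item in batch]
review_queue = [d.item_id for d in decisions if d.needs_human_review]
print(review_queue)
```

Raising the threshold sends more cases to people and fewer mistakes through automatically; where to set it depends on how costly an unreviewed error would be.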

These challenges, though significant, are not insurmountable. I believe a balanced approach, one that acknowledges AI’s limitations while still pushing innovation forward, is how we will build systems that both enhance our societal structures and coexist healthily with them.

Conclusion

As AI continues to evolve, I remain cautiously optimistic. With the right practices, ethical considerations, and continued human oversight, I believe AI will enhance various industries—from cloud solutions to autonomous vehicles—while also opening up new avenues that we haven’t yet dreamed of. However, for AI to integrate fully and responsibly into our society, we must remain mindful of its limitations and the real-world challenges it faces.

It’s crucial that as we move toward this AI-driven future, we also maintain an open dialogue. Whether through hands-on work implementing enterprise-level AI systems or personal exploration of machine learning in scientific domains, I’ve always approached AI with both enthusiasm and caution. I encourage you to follow along as I continue to unpack these developments, finding the balance between hype and reality.
