The Art of Debugging Machine Learning Algorithms: Insights and Best Practices

One of the greatest challenges in machine learning (ML) is debugging. In my work in artificial intelligence at DBGM Consulting, I often see engineers invest extensive time and resources in a particular approach without checking early enough whether it works. Let’s look at why effective debugging is crucial and how it can significantly shorten project timelines.

Understanding why models fail and how to troubleshoot them efficiently is critical for successful machine learning projects. Debugging machine learning algorithms is not just about identifying the problem. It also means systematically applying fixes and verifying that they work as intended. In my experience, this iterative process, although time-consuming, can make engineers 10x, if not 100x, more productive, because it stops them from sinking weeks into approaches that were never going to work.

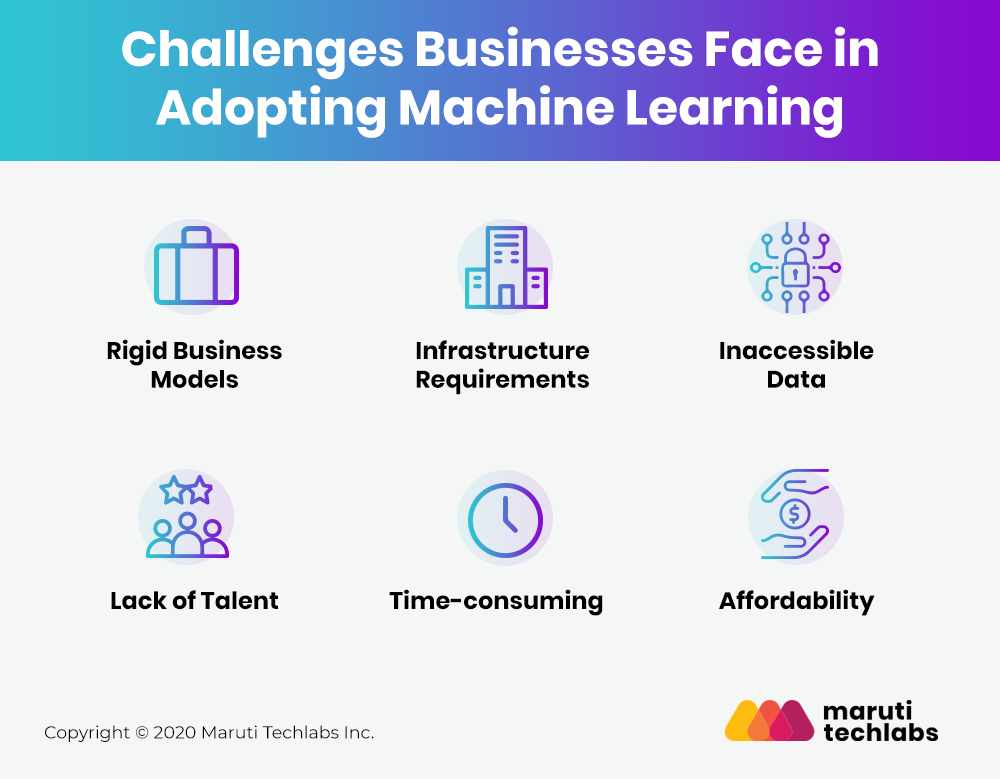

Common Missteps in Machine Learning Projects

Engineers often fall into the trap of collecting more data on the assumption that it will solve their problems. Data is a valuable asset in machine learning, but it is not a cure-all. Running a few quick diagnostic tests up front can save months of futile data collection by revealing early whether more data will help or whether architectural changes are needed.
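One quick test of this kind is a learning curve: train on growing slices of the data and watch how the validation error responds. The sketch below is a minimal, stdlib-only illustration under stated assumptions: a one-feature linear model, synthetic data (y = 3x + 1 plus Gaussian noise), and hypothetical helper names (`fit_line`, `learning_curve`, `more_data_likely_helps`). The 5% threshold in the plateau check is an arbitrary choice for illustration, not a standard value.

```python
import random

def fit_line(points):
    """Ordinary least squares for y = a*x + b with a single feature."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / sxx
    return a, mean_y - a * mean_x

def mse(model, points):
    """Mean squared error of the line `model` on `points`."""
    a, b = model
    return sum((a * x + b - y) ** 2 for x, y in points) / len(points)

def learning_curve(train, val, sizes):
    """Train on growing prefixes of `train`; record (size, train_error, val_error)."""
    curve = []
    for n in sizes:
        subset = train[:n]
        model = fit_line(subset)
        curve.append((n, mse(model, subset), mse(model, val)))
    return curve

def more_data_likely_helps(curve, rel_tol=0.05):
    """Hypothetical heuristic: if validation error improved by less than rel_tol
    over the last size step, the curve has flattened and more data of the same
    kind is unlikely to pay off."""
    prev_val, last_val = curve[-2][2], curve[-1][2]
    return (prev_val - last_val) / prev_val >= rel_tol

def make_data(rng, n):
    """Assumed synthetic data: y = 3x + 1 plus Gaussian noise with sigma = 1."""
    points = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        points.append((x, 3.0 * x + 1.0 + rng.gauss(0.0, 1.0)))
    return points

rng = random.Random(0)
train, val = make_data(rng, 2000), make_data(rng, 500)
curve = learning_curve(train, val, [10, 100, 1000, 2000])
for n, train_err, val_err in curve:
    print(f"n={n:5d}  train MSE={train_err:.3f}  val MSE={val_err:.3f}")
print("more data likely helps:", more_data_likely_helps(curve))
```

Reading the curve: if validation error is still falling as the training set grows, more data may help. If it has flattened, and training and validation error have converged at a level you consider too high, the bottleneck is more likely the model or the features than the amount of data. In this synthetic example the noise variance is 1.0, so a validation MSE near 1.0 is already the floor.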

Strategies for Effective Debugging

The art of debugging involves several strategies:

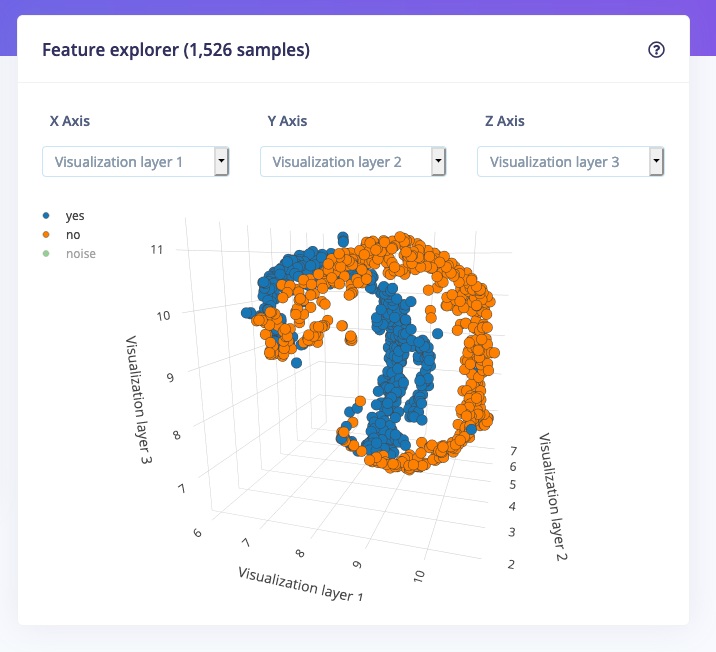

- Evaluating Data Quality and Quantity: Ensure the dataset is rich and varied enough to train the model adequately.

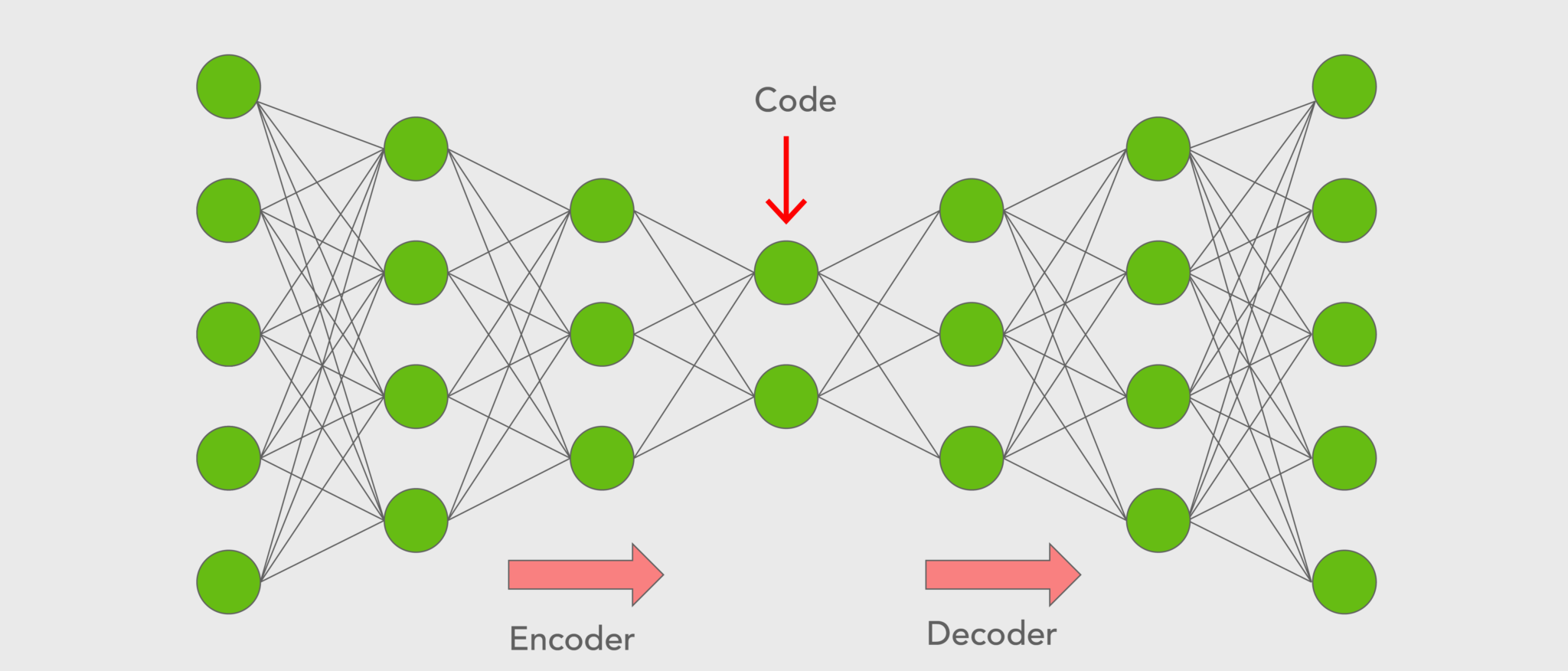

- Model Architecture: Experiment with different architectures. What works for one problem may not work for another.

- Regularization Techniques: Techniques such as dropout or weight decay can help prevent overfitting.

- Optimization Algorithms: Choose the optimizer deliberately. Sometimes, switching from SGD to Adam can make a significant difference.

- Cross-Validation: Thorough cross-validation gives a more reliable estimate of model performance than a single train/test split.
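To make the SGD-versus-Adam point from the list concrete, here is a minimal sketch that runs both update rules on a toy, deliberately ill-conditioned quadratic loss. Assumptions: the loss, learning rates, and step count are illustrative picks; gradients are exact (no minibatches), so the "SGD" here is plain gradient descent (`run_gd`); and the Adam update follows the standard formulation with bias correction (beta1 = 0.9, beta2 = 0.999). The function names are hypothetical; in practice you would use your framework's built-in optimizers rather than hand-rolled ones.

```python
import math

def loss(w):
    """Toy loss f(w) = 0.5 * (100 * w[0]**2 + w[1]**2): steep in w[0], shallow in w[1]."""
    return 0.5 * (100.0 * w[0] ** 2 + w[1] ** 2)

def grad(w):
    """Exact gradient of the toy loss."""
    return [100.0 * w[0], w[1]]

def run_gd(w, lr=0.015, steps=200):
    """Plain gradient descent: w <- w - lr * grad(w)."""
    w = list(w)
    for _ in range(steps):
        w = [wi - lr * gi for wi, gi in zip(w, grad(w))]
    return w

def run_adam(w, lr=0.1, steps=200, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam with bias-corrected first and second moment estimates."""
    w = list(w)
    m = [0.0] * len(w)  # running mean of gradients
    v = [0.0] * len(w)  # running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad(w)
        m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
        m_hat = [mi / (1 - beta1 ** t) for mi in m]  # correct the bias toward zero
        v_hat = [vi / (1 - beta2 ** t) for vi in v]
        w = [wi - lr * mh / (math.sqrt(vh) + eps)
             for wi, mh, vh in zip(w, m_hat, v_hat)]
    return w

start = [1.0, 1.0]
print(f"start loss:      {loss(start):.4f}")
print(f"GD final loss:   {loss(run_gd(start)):.6f}")
print(f"Adam final loss: {loss(run_adam(start)):.6f}")
```

The design point: plain gradient descent must keep its learning rate below 2/100 to stay stable along the steep w[0] direction, which makes progress along the shallow w[1] direction slow. Adam rescales each coordinate by a running estimate of its gradient magnitude, so both directions move at a comparable pace. Which optimizer ends up ahead depends on the problem and the learning rates, so compare them on your own loss curves.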

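Cross-validation from the list above can be sketched in the same style. The block below is a minimal k-fold illustration, assuming a one-feature linear model, synthetic data, and hypothetical helper names (`k_fold_splits`, `cross_validate`); libraries such as scikit-learn provide tested equivalents. Each sample appears in exactly one validation fold, and the spread of fold scores is reported alongside the mean.

```python
import random
import statistics

def k_fold_splits(n, k, seed=0):
    """Yield (train_idx, val_idx) pairs; every index is validated exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # shuffle once so folds are not ordered by collection time
    start = 0
    for fold in range(k):
        size = n // k + (1 if fold < n % k else 0)  # spread the remainder over the first folds
        val_idx = idx[start:start + size]
        train_idx = idx[:start] + idx[start + size:]
        yield train_idx, val_idx
        start += size

def fit_line(points):
    """Ordinary least squares for y = a*x + b with a single feature."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / sxx
    return a, mean_y - a * mean_x

def mse(model, points):
    """Mean squared error of the line `model` on `points`."""
    a, b = model
    return sum((a * x + b - y) ** 2 for x, y in points) / len(points)

def cross_validate(points, k=5, seed=0):
    """Return mean and standard deviation of validation MSE across the k folds."""
    scores = []
    for train_idx, val_idx in k_fold_splits(len(points), k, seed):
        model = fit_line([points[i] for i in train_idx])
        scores.append(mse(model, [points[i] for i in val_idx]))
    return statistics.mean(scores), statistics.stdev(scores)

# Assumed synthetic data: y = 2x - 0.5 plus Gaussian noise with sigma = 0.3.
rng = random.Random(1)
points = []
for _ in range(200):
    x = rng.uniform(-2.0, 2.0)
    points.append((x, 2.0 * x - 0.5 + rng.gauss(0.0, 0.3)))

mean_mse, std_mse = cross_validate(points, k=5)
print(f"5-fold validation MSE: {mean_mse:.3f} +/- {std_mse:.3f}")
```

A large standard deviation across folds is itself a debugging signal: it suggests the performance estimate is unstable, whether from too little data or from folds that differ in composition. For time-ordered data, shuffle-based folds leak future information into training, so a time-based split is the safer choice.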
Getting Your Hands Dirty: The Path to Mastery

An essential element of mastering machine learning is practical experience. Theoretical knowledge is vital, but direct hands-on practice teaches the nuances that textbooks and courses might not cover. Spend dedicated hours dissecting why a neural network isn’t converging instead of immediately turning to online resources for answers. This deep exploration leads to better understanding and, ultimately, better problem-solving skills.

The 10,000-Hour Rule

The idea that it takes 10,000 hours to master a skill is highly relevant to machine learning and AI. By working on projects consistently and persisting with troubleshooting even when the going gets tough, you build a unique body of expertise. During my time at Harvard University focusing on AI and information systems, I realized that persistent effort, often involving long hours of debugging, was the key to significant breakthroughs.

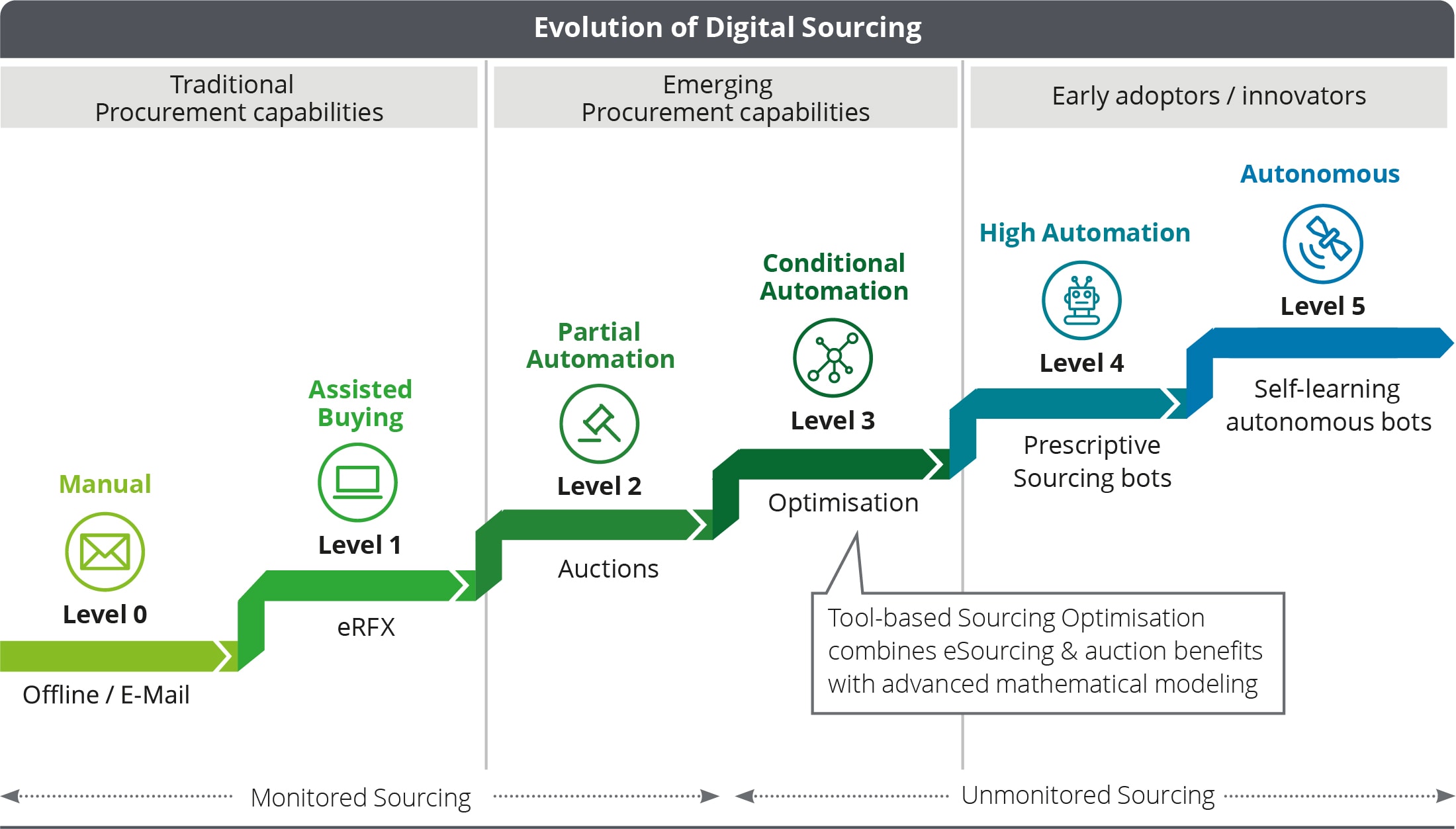

The Power of Conviction and Adaptability

One concept often underestimated in the field is the power of conviction. Conviction that your model can work, given the right mix of data, computational power, and architecture, often separates successful projects from abandoned ones. However, conviction must be balanced with adaptability: if an initial approach doesn’t work, change course promptly and experiment with other strategies. This balancing act was a crucial lesson from my tenure at Microsoft, where rapid shifts in strategy were often necessary to meet client needs efficiently.

Engaging with the Community and Continuous Learning

Lastly, engaging with the broader machine learning community can provide insights and inspiration for overcoming stubborn problems. My amateur astronomy group, where we developed a custom CCD control board for a Kodak sensor, is a testament to the power of community-driven innovation. Participating in forums, attending conferences, and collaborating with peers can reveal solutions to challenges you would otherwise face alone.

Key Takeaways

In summary, debugging machine learning algorithms is an evolving discipline that requires a blend of practical experience, adaptability, and a systematic approach. By focusing on data quality, experimenting with model architecture, and engaging deeply in hands-on troubleshooting, engineers can streamline their projects significantly. Drawing on past lessons, including my work with self-driving robots and machine learning models at Harvard, and collaborating with like-minded people can pave the way for successful AI implementations.
