The Evolution of Ray Tracing: From its Origins to Modern-Day Real-Time Algorithms

Ray tracing, a rendering technique that has captivated the computer graphics world for decades, has recently gained mainstream attention thanks to advances in real-time gaming graphics. But the core algorithm behind ray tracing has surprisingly deep roots in computing history. Although modern GPUs have only recently become able to ray trace in real time, the technique was conceived decades earlier, and it’s worth exploring how this sophisticated light simulation technique has evolved.

The Birth of Ray Tracing

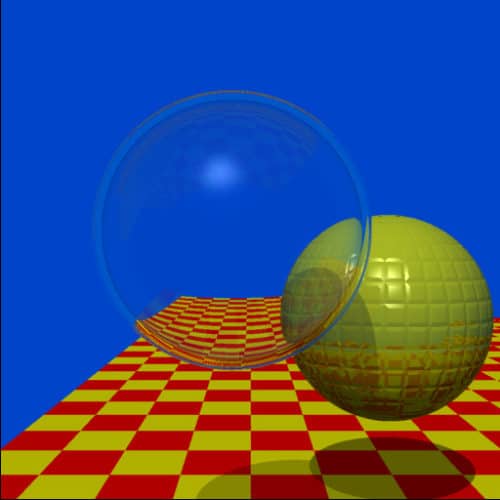

Recursive ray tracing dates back to 1979, when computer scientist Turner Whitted introduced the first recursive ray tracer. Today we’re used to seeing ray-traced visuals in film and even in real-time video games, but the computational power needed to produce them in the late 1970s was immense. Back then, generating a single image took not seconds but hours of computation. The resulting images were simple by today’s standards but groundbreaking, because they were the first to simulate light-matter interactions like reflections, shadows, and refractions this realistically.

What made Whitted’s approach special was its ability to simulate advanced light behaviors, such as reflection and refraction, by “tracing” the paths of individual light rays backward from the viewer through the scene. This wasn’t just a technical advance; it was a paradigm shift in how we think about simulating light.

Ray Casting vs Ray Tracing: Improving Light Simulation

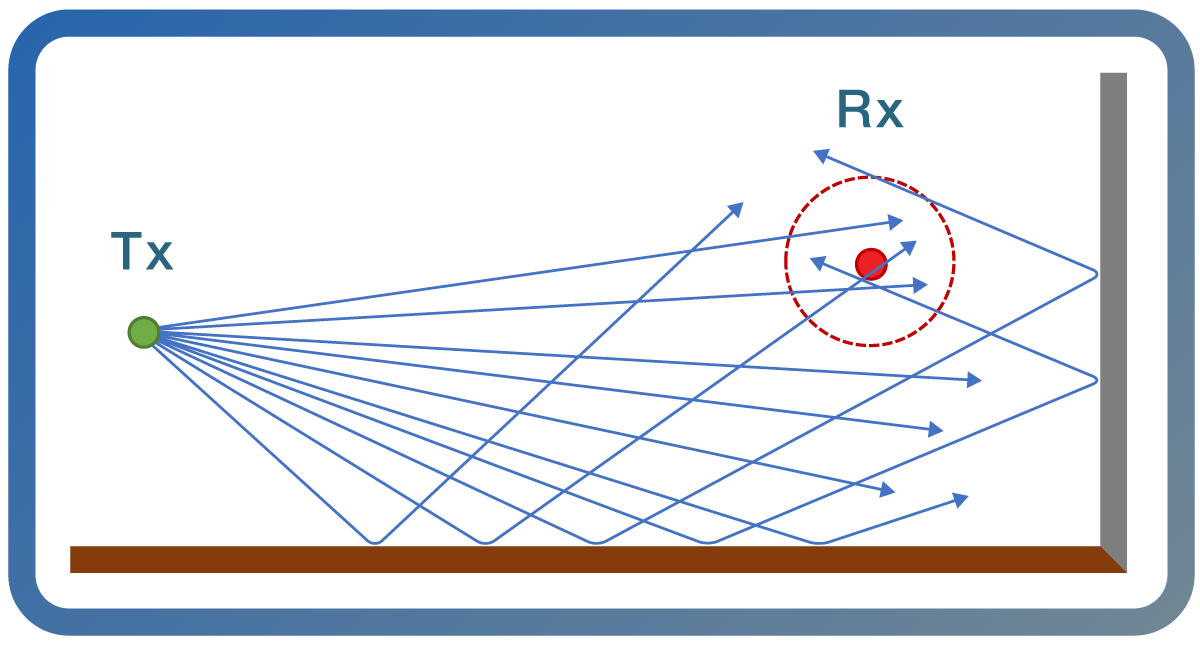

Before recursive ray tracing took off, simpler methods such as ray casting were used. Ray casting works by shooting a ray from the viewpoint through each pixel, finding the closest object the ray intersects, and then calculating the light at that point. This worked fine for simple, direct lighting but fell short for more complex phenomena like reflections and refractions.
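To make the idea concrete, here is a minimal ray caster in Python, written as a sketch rather than production code. It assumes a scene made only of spheres, a single directional light, and grayscale brightness; the helper names (`cast_ray`, `render`, and so on) are illustrative. Each pixel gets exactly one ray, and shading uses only the direct light at the first hit, so there are no reflections, refractions, or shadows.

```python
import math

# Minimal ray caster sketch: one primary ray per pixel, nearest hit, direct light only.
# Spheres are (center, radius) pairs; light_dir is a unit vector pointing toward the light.

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def intersect_sphere(origin, direction, center, radius):
    """Distance t to the nearest hit in front of the ray, or None (direction must be unit length)."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None

def cast_ray(origin, direction, spheres, light_dir):
    """Brightness at the closest hit (Lambert term), or 0.0 for the background."""
    closest_t, closest = None, None
    for center, radius in spheres:
        t = intersect_sphere(origin, direction, center, radius)
        if t is not None and (closest_t is None or t < closest_t):
            closest_t, closest = t, (center, radius)
    if closest is None:
        return 0.0  # the ray escaped to the background
    center, _ = closest
    hit = tuple(o + closest_t * d for o, d in zip(origin, direction))
    normal = normalize(tuple(h - c for h, c in zip(hit, center)))
    # No secondary rays are spawned: no reflection, refraction, or shadow test.
    return max(0.0, dot(normal, light_dir))

def render(width, height, spheres, light_dir):
    """Pinhole camera at the origin looking down -z through an image plane at z = -1."""
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            row.append(cast_ray((0.0, 0.0, 0.0), normalize((x, y, -1.0)), spheres, light_dir))
        image.append(row)
    return image
```

For example, `cast_ray((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), [((0.0, 0.0, -3.0), 1.0)], (0.0, 0.0, 1.0))` hits the front of the sphere head-on and returns `1.0`.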

- Ray Casting: Basic light simulation technique that returns color based on a single ray hitting an object.

- Ray Tracing: Recursive approach allowing simulations of reflections, refractions, and shadows by tracing multiple rays as they bounce around the scene.

The limitation of ray casting lies in its inability to handle effects where light bounces from one surface to another (reflection) or bends as it passes through a transparent material (refraction). Handling these interactions required a more complex method: recursive ray tracing.

Understanding Recursive Ray Tracing

So how does recursive ray tracing work in practice? In simple terms, rays are shot into the scene, and when a ray’s closest hit is a reflective or refractive surface, the tracer does not stop there: it spawns additional “secondary rays” from that point. The colors these secondary rays bring back are added into the original ray’s color, allowing for more realistic results.
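Secondary rays start at the hit point and travel in directions given by the law of reflection and by Snell’s law of refraction. A minimal Python sketch of those two direction calculations follows. It assumes unit-length vectors stored as tuples, a surface normal that faces the incoming ray, and `eta` as the ratio of refractive indices (incoming side over outgoing side); the function names are illustrative.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect(d, n):
    """Mirror the unit direction d about the unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

def refract(d, n, eta):
    """Bend the unit direction d through a surface with unit normal n (facing the ray).

    eta = n1 / n2 is the ratio of refractive indices (Snell's law: n1 sin i = n2 sin t).
    Returns None on total internal reflection, when no refracted ray exists.
    """
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # total internal reflection: only the reflected ray survives
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + (eta * cos_i - cos_t) * ni for di, ni in zip(d, n))
```

A ray entering glass (`eta = 1 / 1.5`) head-on passes straight through, while a ray leaving glass at a grazing angle (`eta = 1.5`) gets `None`, meaning only a reflected secondary ray should be spawned.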

“It’s fascinating to think that the recursive algorithm used today is fundamentally the same as the one introduced in 1979. While optimizations have been made, the core logic is still the same.”

How Recursive Ray Tracing Works:

- Step 1: The camera shoots a ray through each pixel into the scene. When a ray hits an object, it may be reflected, refracted, or simply absorbed, depending on the material it encounters.

- Step 2: If the ray hits a reflective surface, a new ray is fired in the direction of reflection. If it hits a transparent surface, refraction occurs, and both a reflected and a refracted ray are generated.

- Step 3: These secondary rays are traced the same way, possibly spawning further rays, until they hit a non-reflective surface, escape to the background, or reach a maximum recursion depth. At each hit, a shadow ray is also sent toward each light source to check whether the point is lit or in shadow.

- Step 4: The local shading at each hit, computed with a lighting model such as the Blinn–Phong model, is combined with the colors returned by the secondary rays to form the final color of the pixel.
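Step 4 can be sketched in Python as follows. This is a simplification under stated assumptions: grayscale intensities, a single light, and a Whitted-style sum in which the local Blinn–Phong term is added to the reflected and transmitted colors, weighted by hypothetical coefficients `kr` and `kt`. Real renderers work in RGB and often use more careful (for example, Fresnel-based) weights.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def blinn_phong(n, l, v, kd, ks, shininess, light=1.0, ambient=0.0):
    """Local shading at one hit point.

    n, l, v are unit vectors: surface normal, direction to the light, direction to the viewer.
    kd, ks are diffuse and specular weights; shininess sharpens the highlight.
    """
    n_dot_l = dot(n, l)
    if n_dot_l <= 0.0:
        return ambient  # light is behind the surface: no diffuse or specular term
    half = tuple(a + b for a, b in zip(l, v))  # half-vector between light and view directions
    length = math.sqrt(dot(half, half))
    n_dot_h = dot(n, half) / length if length > 0.0 else 0.0
    diffuse = kd * n_dot_l
    specular = ks * max(0.0, n_dot_h) ** shininess
    return ambient + light * (diffuse + specular)

def combine(local, reflected, transmitted, kr, kt):
    """Whitted-style combination: local shading plus weighted secondary-ray colors."""
    return local + kr * reflected + kt * transmitted
```

With the light, viewer, and normal all aligned, `blinn_phong` returns `kd + ks` (plus any ambient term), the brightest possible highlight.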

As a result, recursive ray tracing is capable of creating visually stunning effects like those seen in glass or mirrored surfaces, even in scenes with complex lighting setups.
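Putting the steps together, a recursive tracer can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not Whitted’s original program: spheres only, grayscale, one directional light, Lambertian local shading, and mirror reflection only (a refracted ray would be a second recursive branch built the same way). The `max_depth` parameter caps the recursion, and the shadow ray checks whether a hit point can see the light; all names are illustrative.

```python
import math
from dataclasses import dataclass

@dataclass
class Sphere:
    center: tuple
    radius: float
    diffuse: float       # weight of the local (Lambertian) shading
    reflectivity: float  # weight of the mirror-reflected secondary ray

def dot(a, b): return sum(x * y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(v, s): return tuple(x * s for x in v)
def normalize(v): return scale(v, 1.0 / math.sqrt(dot(v, v)))

def nearest_hit(origin, direction, spheres, eps=1e-4):
    """Closest (t, sphere) along a unit-direction ray, or None.
    eps skips hits at the ray origin so a surface does not shadow or reflect itself."""
    best = None
    for s in spheres:
        oc = sub(origin, s.center)
        b = dot(oc, direction)
        c = dot(oc, oc) - s.radius * s.radius
        disc = b * b - c
        if disc < 0.0:
            continue
        root = math.sqrt(disc)
        for t in (-b - root, -b + root):
            if t > eps:
                if best is None or t < best[0]:
                    best = (t, s)
                break
    return best

def trace(origin, direction, spheres, light_dir, depth=0, max_depth=4, background=0.0):
    hit = nearest_hit(origin, direction, spheres)
    if hit is None:
        return background  # the ray escaped to the background
    t, s = hit
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, s.center))
    # Shadow ray: the point is lit only if nothing blocks the path toward the light.
    lit = nearest_hit(point, light_dir, spheres) is None
    local = (s.diffuse * max(0.0, dot(normal, light_dir))) if lit else 0.0
    if depth >= max_depth or s.reflectivity == 0.0:
        return local  # recursion limit reached, or nothing to reflect
    # Secondary ray in the mirror direction, traced recursively one level deeper.
    r = sub(direction, scale(normal, 2.0 * dot(direction, normal)))
    bounced = trace(point, r, spheres, light_dir, depth + 1, max_depth, background)
    return local + s.reflectivity * bounced
```

For a sphere with no diffuse term and `reflectivity=0.5` in front of a white background (`background=1.0`), the central ray returns `0.5`; with `max_depth=0` the reflected ray is never traced and the result drops to `0.0`.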

Overcoming the Challenges of Recursive Ray Tracing

While ray tracing produces breathtaking imagery, recursion can lead to computational problems, particularly in real-time scenarios. Rays can in principle bounce forever (for example, between two facing mirrors), and because a hit on a transparent surface spawns two new rays, the number of rays can grow exponentially with each bounce. To avoid this, renderers limit how deep the recursion may go. In practical applications such as real-time gaming or interactive media, this keeps the process from consuming too much time or memory.

- Maximum Recursion Depth: A cutoff that prevents infinite recursion by stopping secondary ray calculations after a given depth (often only 3 to 5 bounces).

- Ray Count Culling: A technique where only a subset of the possible rays is traced (for example, fewer rays per pixel, or secondary rays only for surfaces that need them) to save resources in real-time applications.
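The exponential growth is easy to quantify. If every hit spawned both a reflected and a refracted ray, each pixel’s rays would form a binary tree, so a depth limit of d allows up to 2^(d+1) - 1 rays per pixel, not counting shadow rays. A small Python helper (the name is illustrative) makes the worst-case numbers concrete:

```python
def max_rays_per_pixel(max_depth, branching=2):
    """Worst-case size of one pixel's ray tree when every hit spawns `branching`
    secondary rays and recursion stops at `max_depth` (primary ray = depth 0).
    Shadow rays are not counted."""
    return sum(branching ** d for d in range(max_depth + 1))

for depth in (1, 3, 5, 10):
    print(f"depth {depth}: up to {max_rays_per_pixel(depth)} rays per pixel")
# depth 1: up to 3 rays per pixel
# depth 3: up to 15 rays per pixel
# depth 5: up to 63 rays per pixel
# depth 10: up to 2047 rays per pixel

# Worst case for one 1920x1080 frame at depth 5:
print(f"{1920 * 1080 * max_rays_per_pixel(5):,} rays")  # 130,636,800 rays
```

With `branching=1` (mirrors only, no refraction) the count grows only linearly, which is why a depth limit of a few bounces is usually enough to keep the cost bounded.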

Balancing Ray Tracing and Rasterization in Modern Graphics

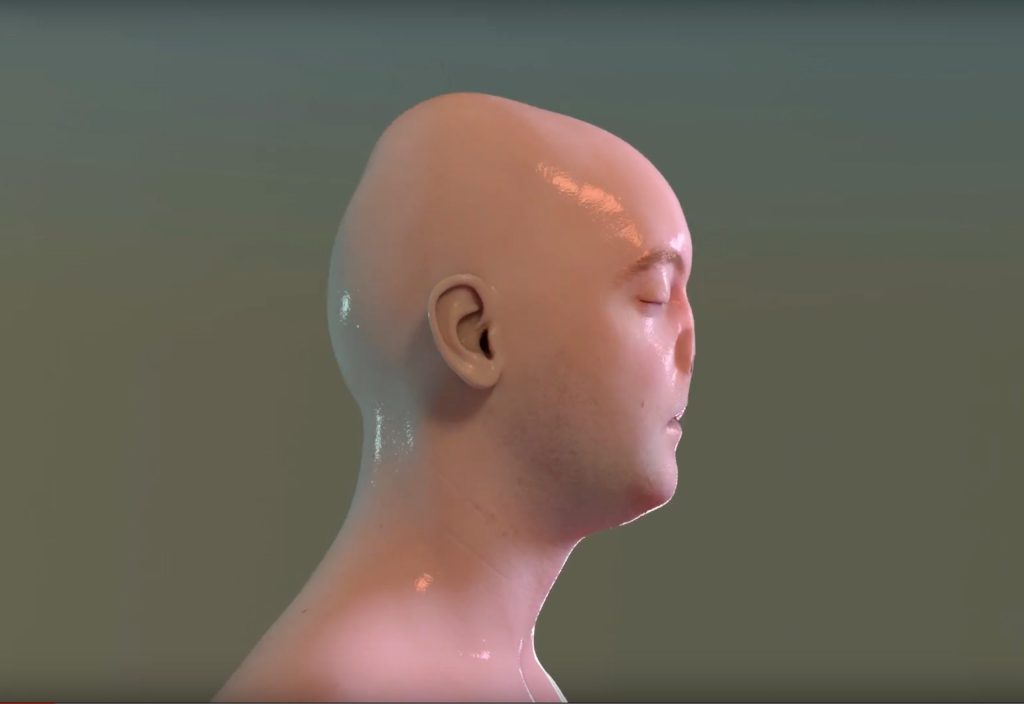

While recursive ray tracing offers unparalleled realism, it remains resource-intensive. In real-time applications such as video games, traditional rasterization and ray tracing are often combined. Rasterization, which efficiently determines which surfaces are visible but struggles with complex light interactions, is still used for most of the scene, while ray tracing is reserved for specific effects such as reflections or global illumination.
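The hybrid split can be sketched as a per-pixel decision. The Python below assumes a deferred-style rasterization pass that has already stored, for each pixel, a cheaply shaded color and a reflectivity value. `GBufferTexel`, `hybrid_resolve`, `trace_reflection`, and the `threshold` cutoff are all hypothetical names for illustration, and the blend is a simple linear mix rather than any particular engine’s method.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class GBufferTexel:
    """What a (hypothetical) rasterization pass stores for one pixel."""
    raster_color: float  # shading computed cheaply by the rasterizer
    reflectivity: float  # material property that decides whether a ray is needed

def hybrid_resolve(gbuffer: List[List[GBufferTexel]],
                   trace_reflection: Callable[[int, int], float],
                   threshold: float = 0.1) -> List[List[float]]:
    """Keep the rasterized color everywhere; trace reflection rays only where needed."""
    out = []
    for y, row in enumerate(gbuffer):
        out_row = []
        for x, texel in enumerate(row):
            color = texel.raster_color
            if texel.reflectivity > threshold:  # only reflective pixels pay for a ray
                reflected = trace_reflection(x, y)
                color = (1.0 - texel.reflectivity) * color + texel.reflectivity * reflected
            out_row.append(color)
        out.append(out_row)
    return out
```

The design point is that the expensive `trace_reflection` callback runs only for the pixels that need it, so a mostly matte scene costs little more than plain rasterization.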

This high computational load is why GPUs capable of real-time ray tracing in games have only recently appeared. Graphics cards from companies like NVIDIA (starting with its “RTX” line in 2018) include dedicated ray-tracing cores that accelerate ray–scene intersection tests, and combined with the hybrid approach, this hardware has made real-time ray tracing in games a reality.

If you’re aiming for maximum fidelity and can afford long render times, full recursive ray tracing is the way to go. But for practical, real-time applications, developers often use hybrid methods: rasterization for the bulk of the scene and ray tracing for specific effects like reflections and shadows.

The Future: Path Tracing and Beyond

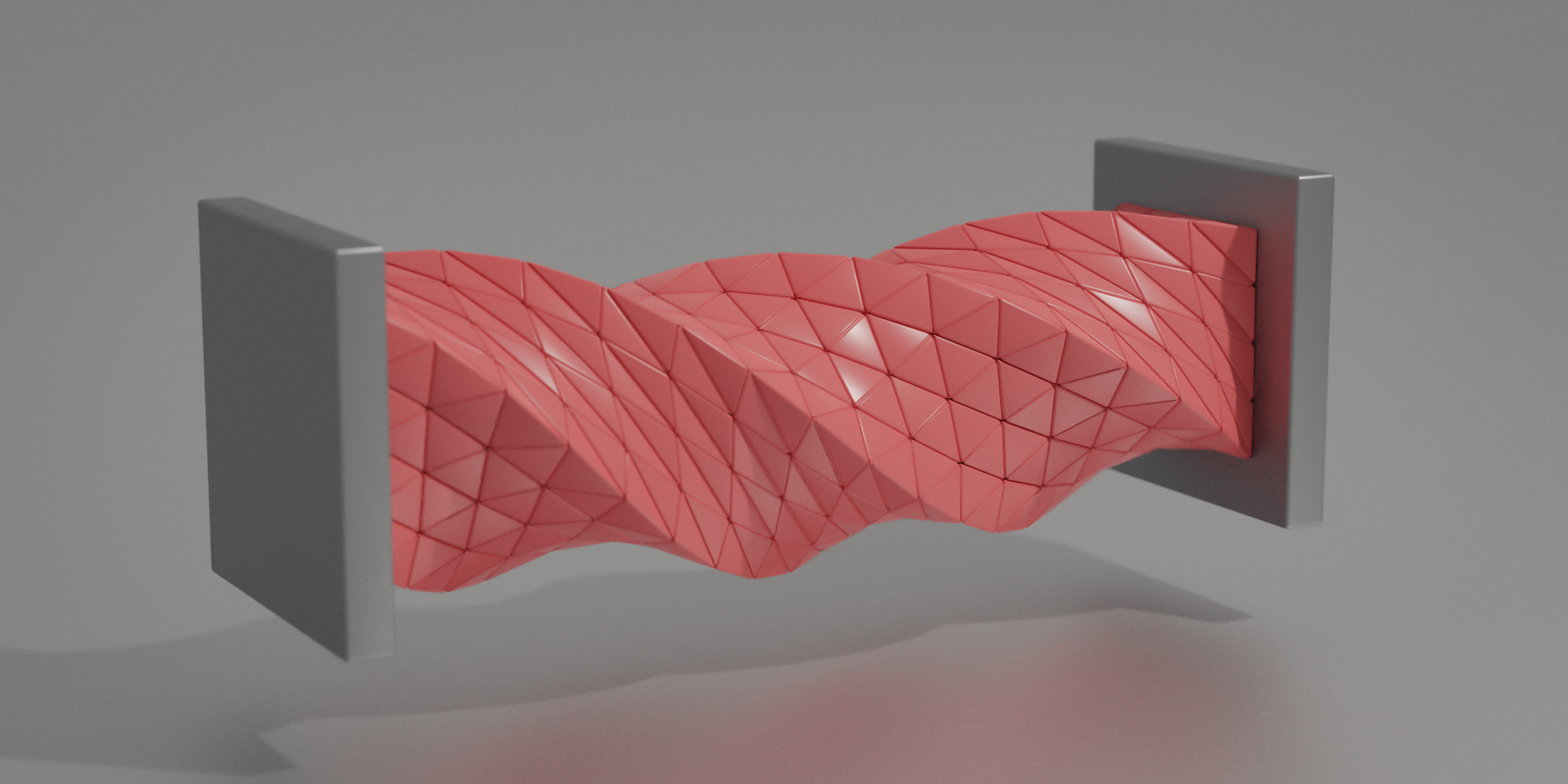

Even so, Whitted-style recursive ray tracing has limits: it cannot properly represent indirect lighting, diffuse inter-reflection, or caustics, because it only follows secondary rays in the exact mirror and refraction directions. For this reason, more advanced methods like path tracing are increasingly used in settings where ultimate realism is needed.

- Path Tracing: A global illumination algorithm that, at every surface (including diffuse ones), continues each ray in a randomly sampled direction and averages many such light paths per pixel, gathering complex lighting information for even more realistic results, especially with indirect light.

Path tracing computes far more light paths than traditional ray tracing, which can produce near-photorealistic images, albeit at the cost of even more processing time.
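At the heart of path tracing is Monte Carlo estimation: the light arriving at a point is estimated by averaging contributions from randomly sampled directions. The Python sketch below shows only that estimator, not a full path tracer, for the simplest case that can be checked by hand: a surface facing +z under a uniform sky of radiance `sky_radiance`, where the exact answer is π times the radiance. In a real path tracer, each sampled direction would be traced into the scene and could bounce again. Function names and parameters are illustrative.

```python
import math
import random

def sample_hemisphere(rng):
    """Uniformly sample a unit direction on the hemisphere around +z (pdf = 1 / (2*pi))."""
    z = rng.random()  # for uniform hemisphere sampling, cos(theta) is uniform on [0, 1)
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * rng.random()
    return (r * math.cos(phi), r * math.sin(phi), z)

def estimate_irradiance(num_samples, sky_radiance=1.0, seed=0):
    """Monte Carlo estimate of light arriving at a +z-facing surface under a uniform sky.
    Averages radiance * cos(theta) / pdf over random directions; converges to pi * radiance."""
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(num_samples):
        direction = sample_hemisphere(rng)
        cos_theta = direction[2]
        total += sky_radiance * cos_theta / pdf
    return total / num_samples
```

With a handful of samples the estimate scatters widely around π, while with 100,000 samples it lands very close. That trade-off between sample count and noise is exactly why path-traced images take so long to converge.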

Conclusion: The Importance of Ray Tracing in Modern Computing

Looking back at where ray tracing began in the 1970s, it’s truly awe-inspiring to see how far the technology has come. Generating a single ray-traced image once took hours of computation, yet today’s GPUs deliver real-time results. While the main limitation was once raw computational power, today’s challenge is finding the right balance between fidelity and performance in cutting-edge graphics.

Whether we’re watching the interplay of light through a glass sphere or exploring dynamic lighting environments in video games, ray tracing and its recursive variants remain a fundamental technique shaping modern digital imagery.

For those interested in more about how light and computational algorithms affect our understanding of the universe, I’ve previously written about neutron stars and other astronomical phenomena. There’s a fascinating link between the precision required in rendering visual data and the precision of measurements in astrophysics.
