Simulating Elastic Bodies: The Wonders and Challenges of Modern Computer Graphics

In the world of computer graphics and artificial intelligence, one of the most fascinating yet complex areas is the simulation of elastic or deformable bodies. Imagine trying to simulate an environment where millions of soft objects like balls, octopi, or armadillos are interacting with one another, with collisions happening at every instant. As someone with deep experience in artificial intelligence and process automation, I constantly find myself awestruck at how modern techniques have pushed the boundaries of what’s computationally possible. In the realm of elastic body simulations, the breakthroughs are nothing short of miraculous.

Elastic Body Simulations: Nature’s Dance in the Digital World

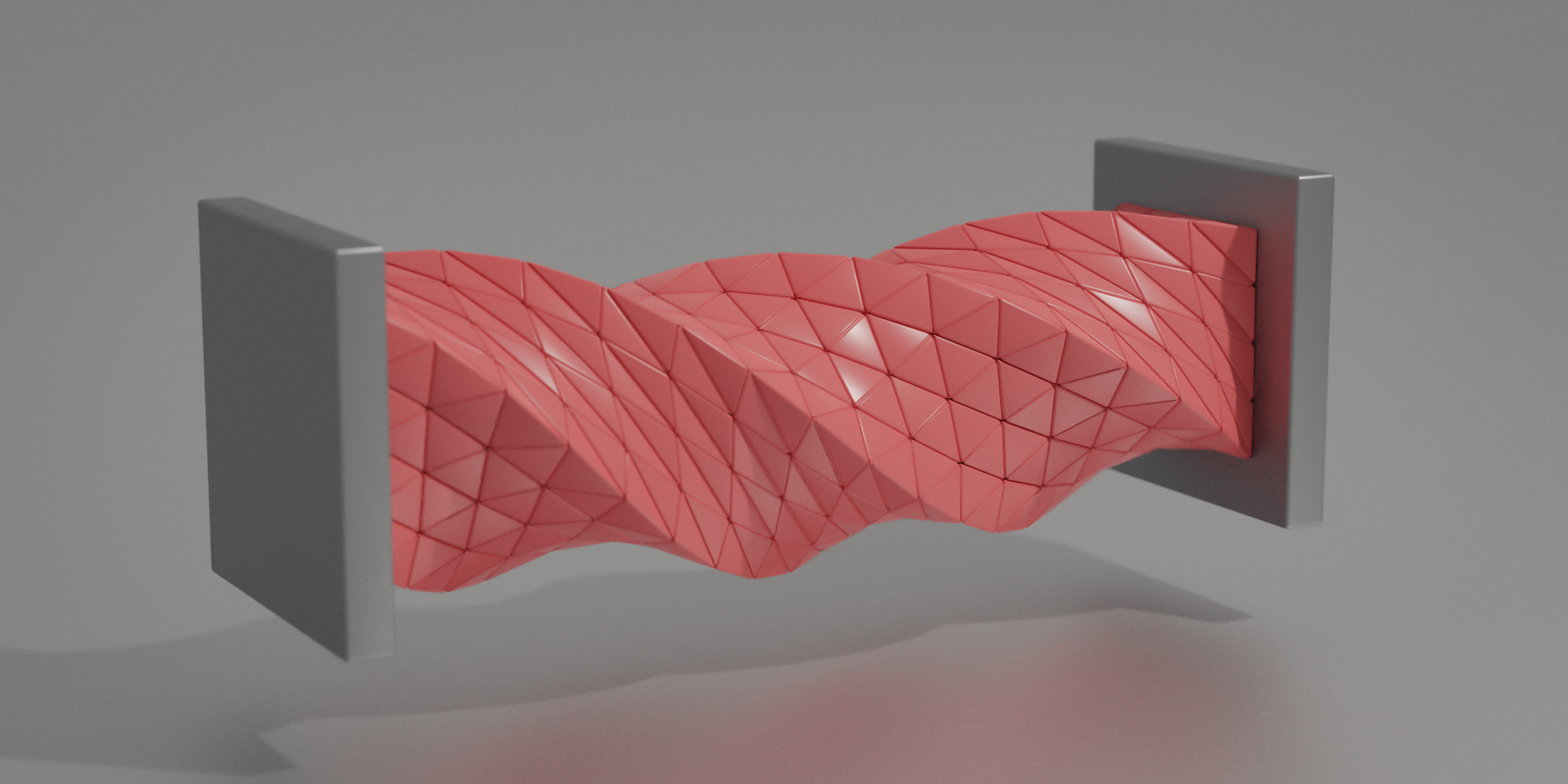

Elastic body simulation revolves around modeling soft objects that collide, stretch, compress, and deform according to physical laws. These simulations are fascinating not only for their visual beauty but also for the sheer computational complexity involved. Picture an airport bustling with a million people, each a soft body colliding with others, or rain pouring over flexible, deforming surfaces. Modeling the flex and finesse of real-world soft objects digitally requires careful consideration of physics, mechanical properties, and substantial computational power.
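
To make "stretch and compress according to physical laws" concrete, here is a minimal sketch of the simplest elastic element I can think of: two point masses in one dimension joined by a damped Hooke's-law spring, stepped with semi-implicit Euler. This is an illustrative toy under my own assumptions, not the method used in the research discussed here; the function name `simulate_spring` and all parameter values are hypothetical.

```python
def simulate_spring(x0=0.0, x1=1.5, rest_length=1.0, stiffness=50.0,
                    damping=2.0, mass=1.0, dt=0.001, steps=10000):
    """Two equal point masses in 1D joined by a damped Hookean spring.

    All names and default values are illustrative assumptions.
    Returns the final positions (x0, x1).
    """
    v0 = 0.0
    v1 = 0.0
    for _ in range(steps):
        # Positive stretch means the spring is longer than its rest length.
        stretch = (x1 - x0) - rest_length
        # Spring force plus damping on the relative velocity.
        # This is the force on particle 0; particle 1 feels the opposite.
        force = stiffness * stretch + damping * (v1 - v0)
        # Semi-implicit Euler: update velocities first, then positions.
        v0 += (force / mass) * dt
        v1 -= (force / mass) * dt
        x0 += v0 * dt
        x1 += v1 * dt
    return x0, x1


if __name__ == "__main__":
    left, right = simulate_spring()
    print("final separation:", right - left)
```

Starting stretched, the pair oscillates and settles back toward the rest length while its center of mass stays put. A real soft body couples many thousands of such elements in three dimensions, which is where the computational cost comes from.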

During my own academic journey and professional work at DBGM Consulting, Inc., I have time and again seen these challenges in vivid detail, whether working on machine learning models for autonomous robots or building complex AI processes. What really caught my eye recently is how sophisticated algorithms and techniques have made it possible to simulate millions of collisions or interactions—computational feats that would have been unthinkable not too long ago.

The Complexity of Collision Calculations

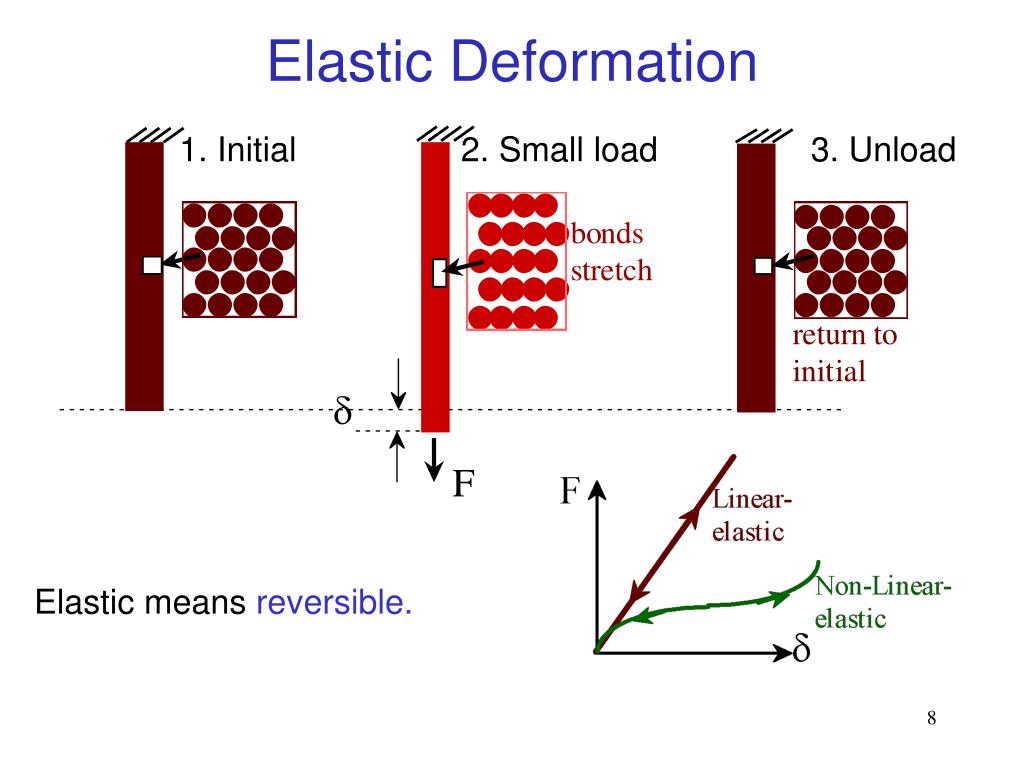

One crucial part of elastic body simulation is calculating where and how collisions occur. When we think of soft materials bumping into hard ones or each other (say, squishy balls in a teapot), we must calculate the source, duration, and intensity of each collision. With millions of points of interaction or more, the hard part is keeping the simulation stable.
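
As a rough illustration of what a single collision query involves, the sketch below tests two spheres for overlap and, if they touch, reports a penetration depth (a simple proxy for intensity), a contact normal, and a contact point (the source). Treating the bodies as spheres is my simplifying assumption; real soft-body simulators test deforming meshes, and the function name `sphere_contact` is hypothetical.

```python
import math


def sphere_contact(center_a, radius_a, center_b, radius_b):
    """Return (depth, normal, point) if two spheres overlap, else None.

    Illustrative helper, not taken from any specific simulator.
    The normal points from sphere A toward sphere B.
    """
    delta = [b - a for a, b in zip(center_a, center_b)]
    distance = math.sqrt(sum(d * d for d in delta))
    depth = radius_a + radius_b - distance
    if depth <= 0.0:
        return None  # separated or just touching: no contact to resolve
    if distance == 0.0:
        # Coincident centers: the direction is undefined, so pick one.
        normal = [1.0, 0.0, 0.0]
    else:
        normal = [d / distance for d in delta]
    # Place the contact point halfway through the overlapping region.
    offset = radius_a - depth / 2.0
    point = [a + n * offset for a, n in zip(center_a, normal)]
    return depth, normal, point


if __name__ == "__main__":
    print(sphere_contact((0.0, 0.0, 0.0), 1.0, (1.5, 0.0, 0.0), 1.0))
    print(sphere_contact((0.0, 0.0, 0.0), 1.0, (3.0, 0.0, 0.0), 1.0))
```

Running a check like this for every pair of objects scales quadratically with their number, which is one reason large scenes need smarter ways of deciding which pairs to test at all.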

An excellent example of this can be seen in simulation experiments involving glass enclosures filled with elastic objects. As soft bodies fall on top of each other, they compress and apply weight upon one another, creating a “wave-like behavior” in the material. This is difficult to solve computationally because you can’t compromise by ignoring the deformation of objects at the bottom. Every part of the model remains active and influential, ensuring that the whole system behaves as expected, no matter how complex the interactions.

The implications of these simulations stretch far beyond entertainment or visual effects. Accurate elastic body simulations have significant applications in various fields such as biomedical engineering, automotive crash testing, robotics, and even quantum physics simulations—fields I’ve been passionate about for much of my life, especially as referenced in previous articles such as Exploring the Challenges with Loop Quantum Gravity.

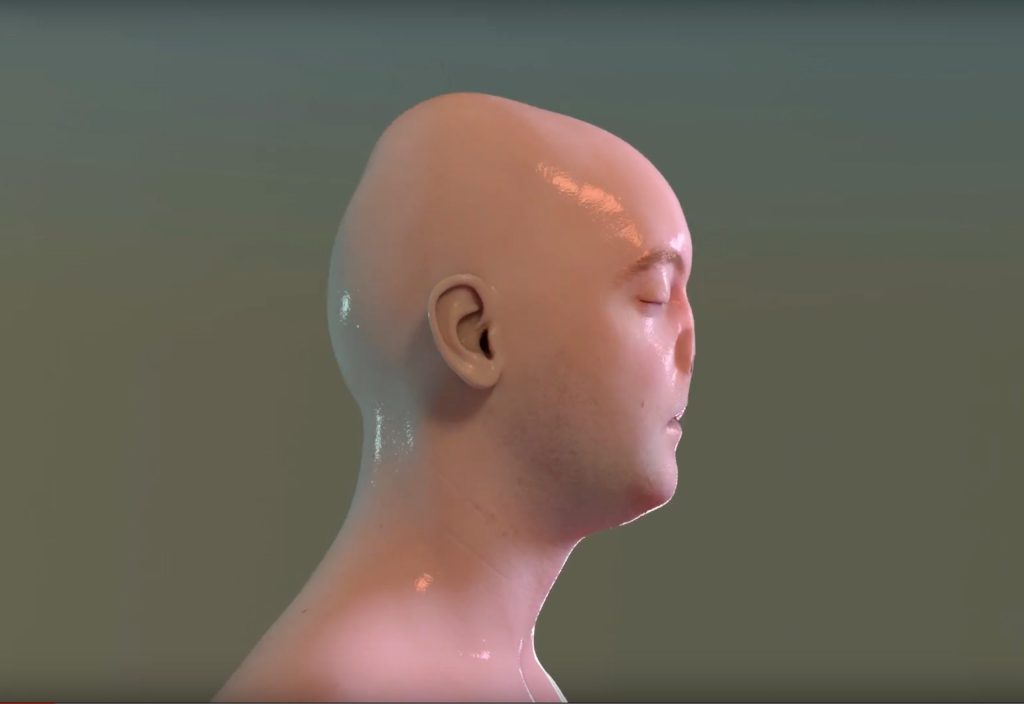

From Octopi to Armadillos: A Torture Test for Simulators

One of the more amusing and exciting types of experiments in elastic body simulation involves creatures like octopi or armadillos. In these setups, researchers and developers run “torture tests” on their simulators to expose their limitations. When I saw an armadillo being flattened and then watched it ‘breathe’ back to its original form, I was in awe. It reminded me of the intricate AWS machine-learning models I’ve worked on, where simulating unexpected or extreme conditions is paramount to testing system stability.

In another experiment, dropping elastic octopi into glass enclosures demonstrated how multiple materials interact in a detailed environment. This kind of simulation isn’t just fun to watch; it’s deeply informative. Understanding how materials interact—compressing, stretching, and re-aligning under stress—provides valuable insights into how to design better systems or products, from safer vehicles to more durable fabrics. It’s another reason why simulation technology has become such a cornerstone in modern engineering and design.

Unbelievable Computational Efficiency: A Giant Leap Forward

As if creating stable soft-body simulations weren’t challenging enough, modern research has managed to push these technologies to extreme levels of efficiency. These simulations, which might once have taken hours or days, now run in mere seconds per frame. It’s an extraordinary achievement, especially given the scale. We’re not just talking about twice as fast here; we’re looking at speedups of 100 to 1,000 times over older techniques!

Why is this important? Imagine simulating surgery dynamics in real-time for a robotic-assist platform, or evaluating how materials bend and break during a crash test. The time savings don’t just lead to faster results—they allow for real-time interactivity, greater detail, and significantly more accurate simulations. These kinds of improvements unlock opportunities where the real and digital worlds overlap more freely—autonomous systems, predictive modeling, and even AI-focused research such as the machine learning models I’ve detailed in previous posts like Understanding the Differences Between Artificial Intelligence and Machine Learning.

Future Applications of Elastic Body Simulations

With these advancements, the flexibility of elastic body simulations opens up new horizons. For instance, the ability to modify material properties such as friction, and to handle topological changes like tearing, makes this technology valuable across various industries. Whether it’s creating lifelike graphics for films, developing robots capable of mimicking human or animal behaviors, or helping architects and engineers with structural design, simulations of this kind are foundational to the creation of lifelike, dynamic environments.

In fact, in my travels and photography experiences when working for Stony Studio, I’ve often found inspiration from natural forms and movements that can now be replicated by computer simulations. This blending of art, science, and technology, in many ways, encapsulates the kind of interdisciplinary thinking that drives innovation forward.

The Human Ingenuity Behind Simulation Technology

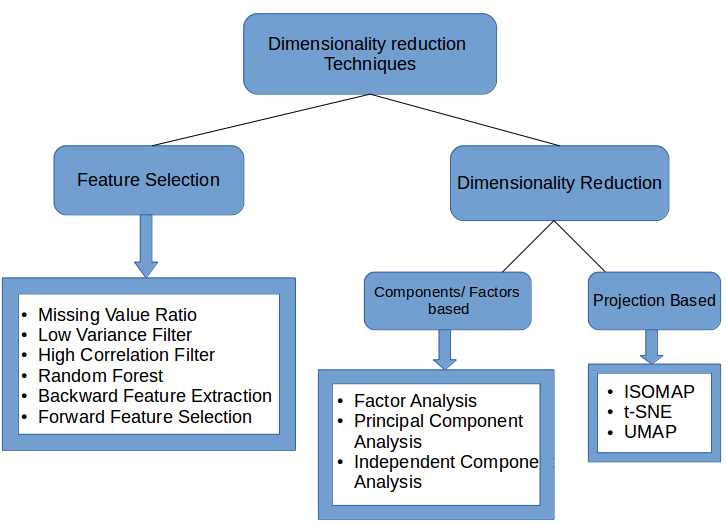

What I find most exciting about these developments is that they reflect the best of human ingenuity. Programmers, scientists, and engineers are constantly pushing what’s possible. Techniques involving the subdivision of large problems into smaller, more manageable ones, alongside the use of Gauss-Seidel iterations (which I’m all too familiar with from my AI work), allow for nearly magical results in simulation.
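
For readers who have not met Gauss-Seidel before, here is a minimal textbook sketch of the iteration on a small linear system Ax = b. It sweeps through the unknowns one at a time, and each update immediately reuses the values already refreshed in the same sweep. The solvers inside actual simulators are far more elaborate; the function name `gauss_seidel` and the example matrix are my own illustrative choices, and convergence is assumed here because the matrix is diagonally dominant.

```python
def gauss_seidel(A, b, iterations=50):
    """Approximate the solution of A x = b with Gauss-Seidel sweeps.

    Illustrative sketch: A is a list of rows with nonzero diagonal entries.
    Convergence is only guaranteed for suitable matrices, for example
    strictly diagonally dominant ones.
    """
    n = len(b)
    x = [0.0] * n  # initial guess
    for _ in range(iterations):
        for i in range(n):
            # Sum over the other unknowns, using the newest values available.
            others = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (b[i] - others) / A[i][i]
    return x


if __name__ == "__main__":
    A = [[4.0, 1.0],
         [2.0, 3.0]]
    b = [1.0, 2.0]
    # The exact solution of this system is x = 0.1, y = 0.6.
    print(gauss_seidel(A, b))
```

Each unknown only needs the few values it is coupled to, which is why this style of iteration pairs well with splitting a large problem into smaller pieces.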

Even more breathtaking is how much faster these methods have become over the past decade. These developments remind me of the efficiency gains I’ve seen from the automation software I’ve implemented in my consulting work. Faster, smarter, and more dynamic optimizations in AI and simulation translate into real-world impact. It’s like reprogramming reality itself: an astonishing achievement that transforms our understanding of the physical world and digital simulations alike.

As we continue progressing in this extraordinary field, the possible applications for elastic body simulation will expand further into areas such as autonomous driving, medical robotics, and smart wearables. Truly, what a time to be alive!
