Understanding High-Scale AI Systems in Autonomous Driving

In recent years, Artificial Intelligence has advanced significantly, particularly in autonomous driving, which relies heavily on neural networks, real-time data processing, and machine learning algorithms. The field is becoming one of the most complex and exciting applications of AI, merging data science, machine learning, and engineering. Having worked directly on machine learning algorithms for robotics, I find this subject both technically fascinating and critical for the future of intelligent systems.

Autonomous driving technology works at the intersection of multiple disciplines: mapping, sensor integration, decision-making algorithms, and reinforcement learning models. In this article, we’ll take a closer look at these components and examine how they come together to create an AI-driven ecosystem.

Core Components of Autonomous Driving

Autonomous vehicles rely on a variety of inputs to navigate safely and efficiently. These systems can be loosely divided into three major categories:

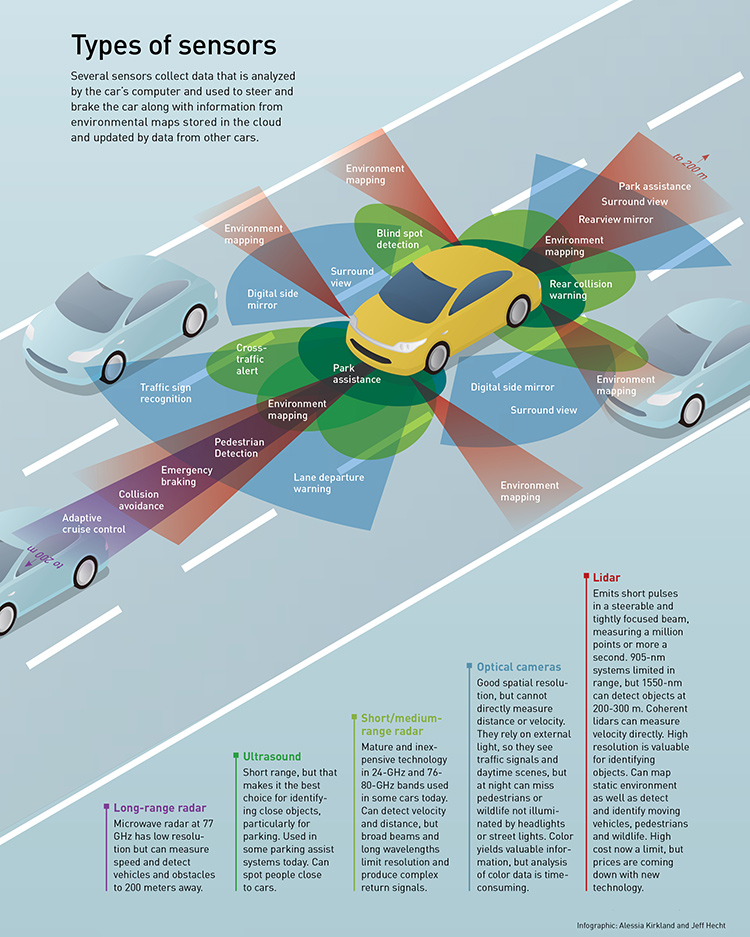

- Sensors: Vehicles are equipped with LIDAR, radar, cameras, and other sensors to capture real-time data about their environment. These data streams are crucial for the vehicle to interpret the world around it.

- Mapping Systems: High-definition mapping data aids the vehicle in understanding static road features, such as lane markings, traffic signals, and other essential infrastructure.

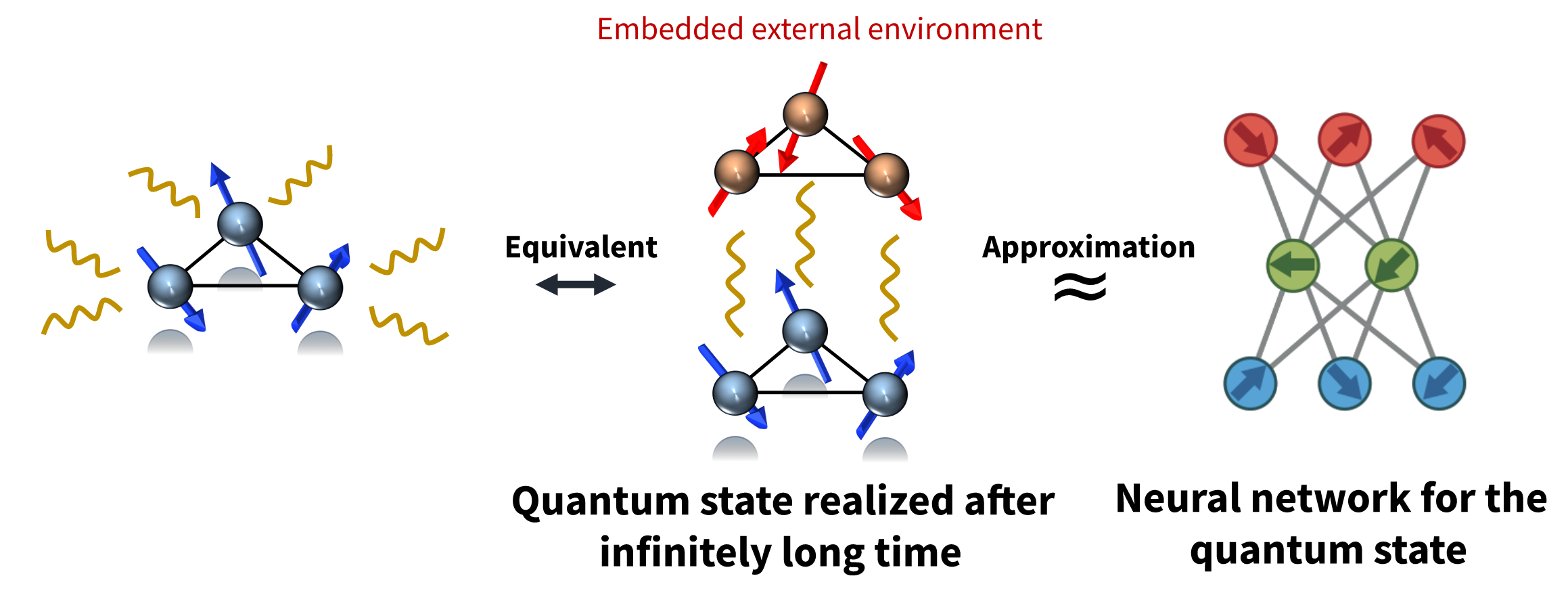

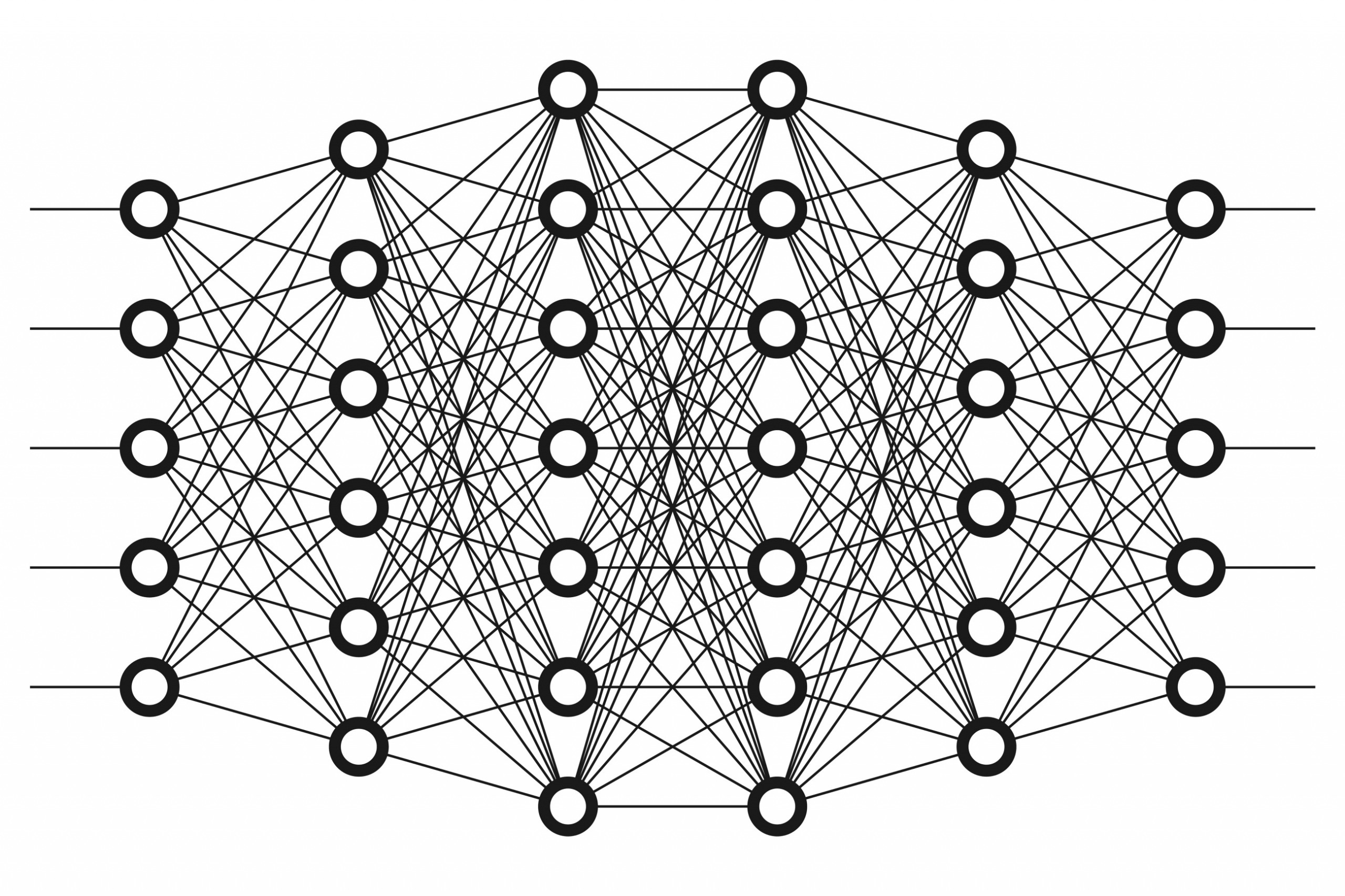

- Algorithms: The vehicle needs sophisticated AI to process data, learn from its environment, and make decisions based on real-time inputs. Neural networks and reinforcement learning models are central to this task.

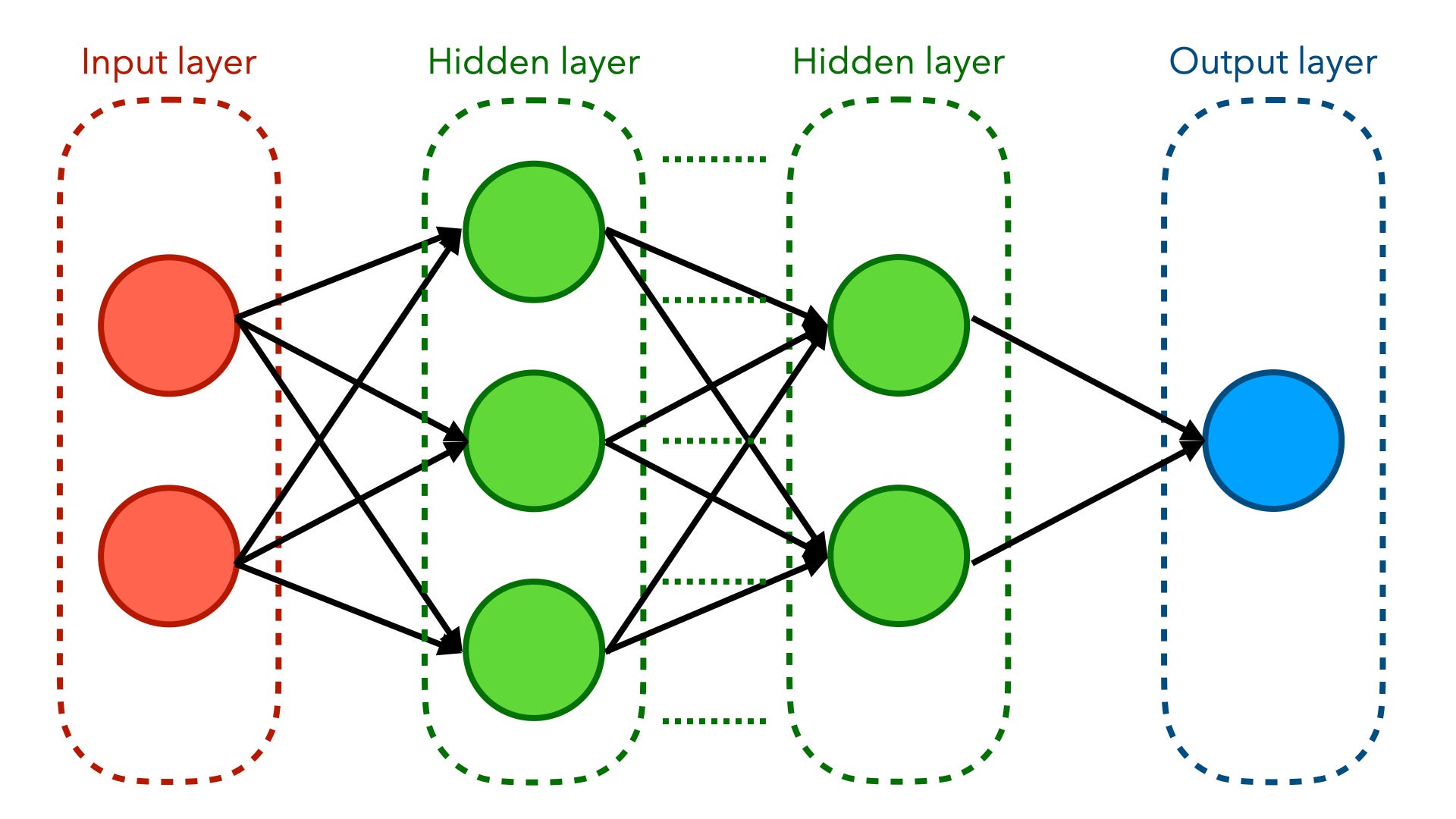

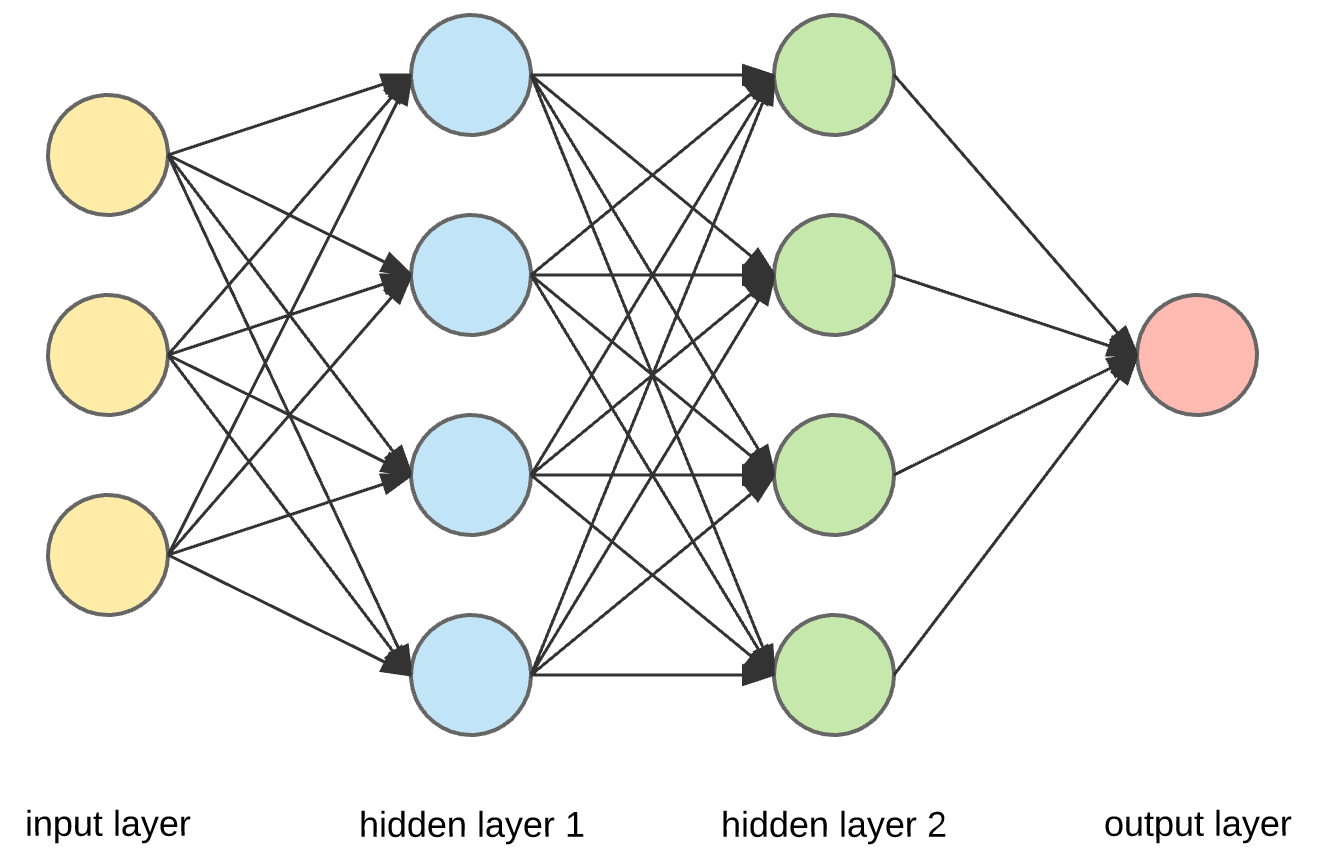

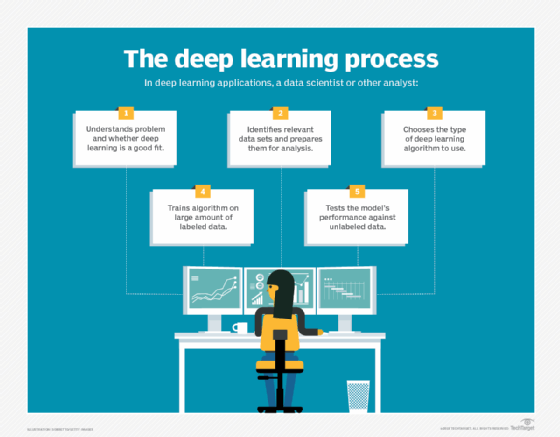

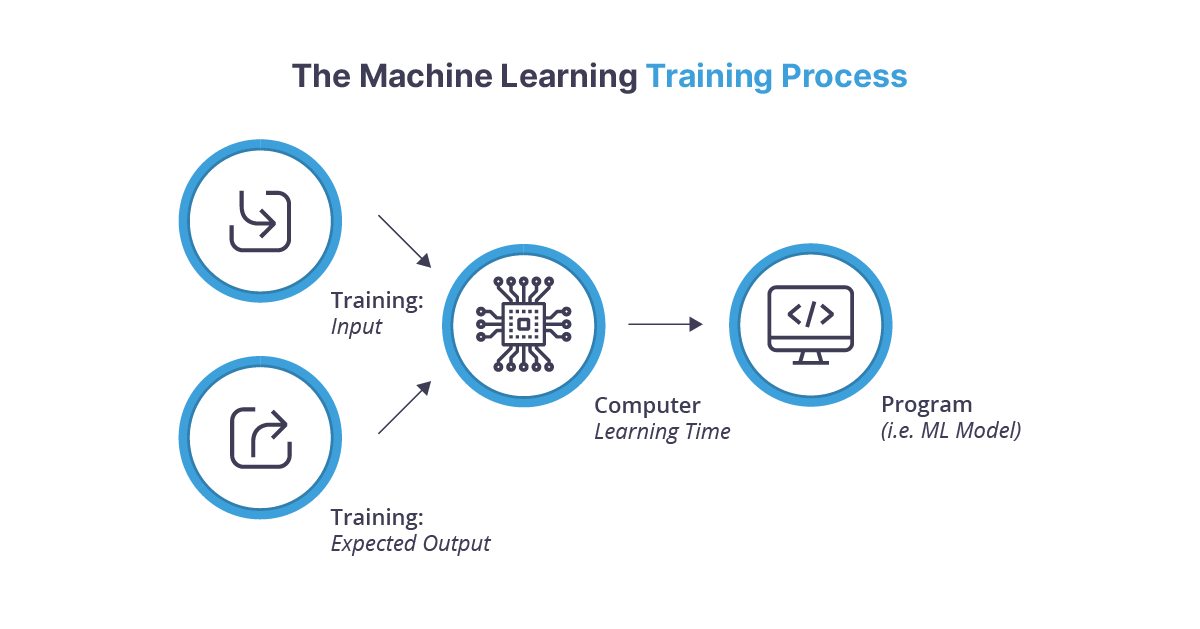

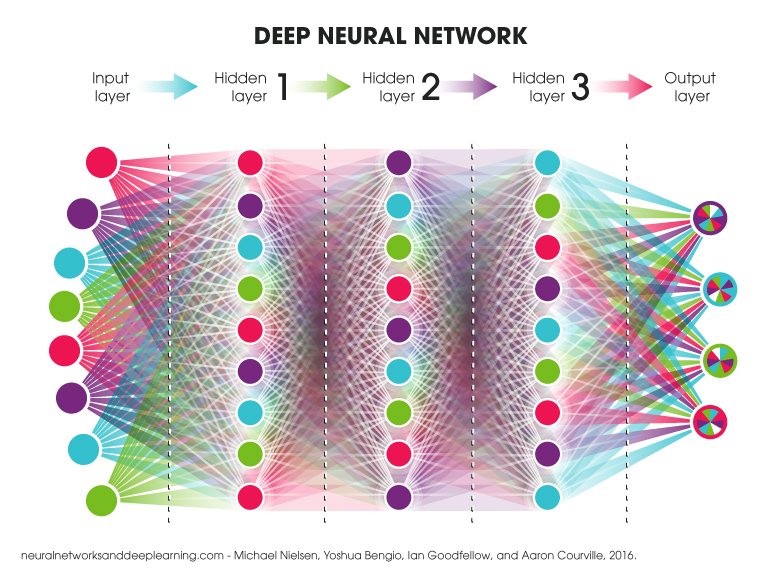

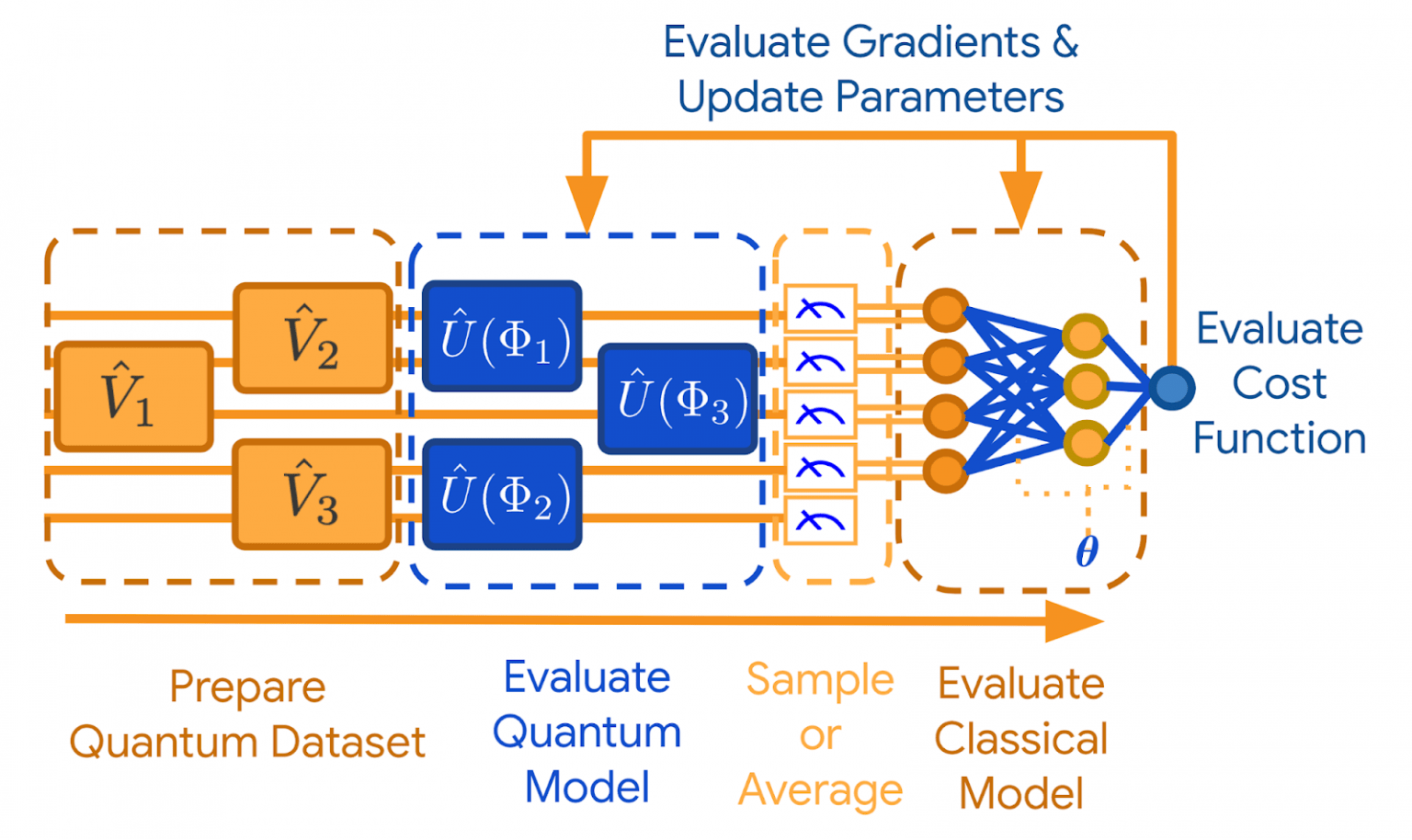
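To make the sensor category more concrete, here is a minimal sketch of one common way to combine overlapping measurements: an inverse-variance weighted average, in which noisier sensors receive less weight. The `RangeReading` type, the `fuse` function, and the variance values are illustrative assumptions, not figures from any real vehicle.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    """One distance estimate to the same obstacle, in metres."""
    sensor: str
    distance_m: float
    variance: float  # assumed noise variance for this sensor (illustrative)


def fuse(readings):
    """Inverse-variance weighted average of several range readings.

    Returns the fused distance and the variance of the fused estimate.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return fused, 1.0 / total


# Hypothetical readings of one obstacle from three sensors.
readings = [
    RangeReading("lidar", 20.0, 0.04),
    RangeReading("radar", 21.0, 0.25),
    RangeReading("camera", 19.0, 1.0),
]
distance, variance = fuse(readings)
print(f"fused distance: {distance:.2f} m, variance: {variance:.4f}")
```

The fused estimate stays close to the most precise sensor, and its variance is lower than that of any single input. That is the basic argument for carrying several sensor types at once.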

For anyone familiar with AI paradigms, the architecture behind autonomous driving systems is a layered, multi-model approach. Various deep learning techniques, including convolutional neural networks (CNNs) and reinforcement learning, handle different tasks, from lane detection to collision avoidance. Detecting specific elements like pedestrians or road signs is not enough; the system also needs decision-making capabilities. This brings us into the realm of reinforcement learning, where an agent (the car) continually refines its decisions based on both positive and negative feedback from its simulated environment.
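The feedback loop described above can be sketched with tabular Q-learning on a toy lane-keeping task. Everything here is an assumption made for illustration: the five lateral positions, the reward values, the hyperparameters, and the `step` simulator. A production system would use far richer state and function approximation rather than a lookup table.

```python
import random

N_POSITIONS = 5        # lateral positions 0..4; position 2 is the lane centre
ACTIONS = (-1, 0, 1)   # steer left, hold, steer right
ALPHA, GAMMA, EPSILON = 0.1, 0.9, 0.2  # learning rate, discount, exploration


def step(pos, action):
    """Toy simulator: move, clamp to the road, reward closeness to the centre."""
    new_pos = min(N_POSITIONS - 1, max(0, pos + action))
    reward = 1.0 if new_pos == 2 else -float(abs(new_pos - 2))
    return new_pos, reward


def train(episodes=2000, steps=20, seed=0):
    """Learn a Q-table with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_POSITIONS) for a in ACTIONS}
    for _ in range(episodes):
        pos = rng.randrange(N_POSITIONS)
        for _ in range(steps):
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(pos, a)])  # exploit
            new_pos, reward = step(pos, action)
            best_next = max(q[(new_pos, a)] for a in ACTIONS)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            q[(pos, action)] += ALPHA * (reward + GAMMA * best_next - q[(pos, action)])
            pos = new_pos
    return q


def greedy_policy(q):
    """Best known action for each position."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_POSITIONS)}


policy = greedy_policy(train())
print(policy)
```

After training, the greedy policy steers toward the centre from either side and holds once it is there. The agent was never told that rule; it emerged from positive and negative rewards alone.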

Machine Learning and Real-Time Decision Making

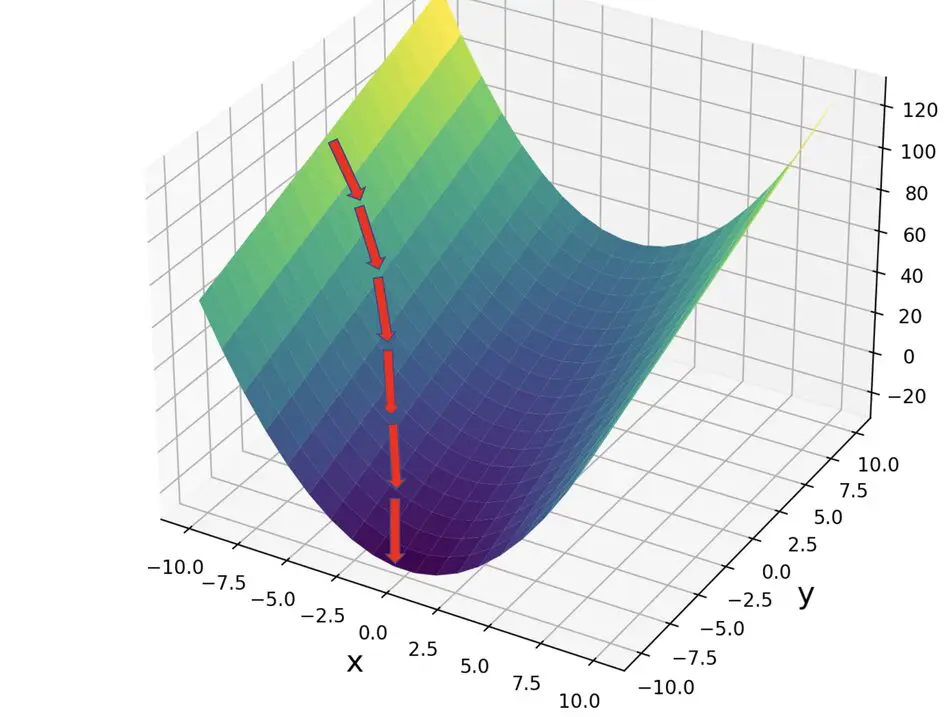

One of the chief challenges of autonomous driving is the need for real-time decision-making under unpredictable conditions. Whether it is a change in weather or a sudden road anomaly, the AI needs to react within a fraction of a second. This is where models trained through reinforcement learning are well suited: they learn policies that weigh immediate reactions against long-term outcomes, balancing short-term safe behavior with long-term travel efficiency.
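One way to see how reinforcement learning trades short-term against long-term outcomes is the discounted return, the sum of future rewards weighted by a discount factor `gamma`. The sketch below compares two hypothetical plans. The reward numbers are invented for illustration and do not come from any real driving system.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards, each weighted by gamma raised to its time step."""
    return sum((gamma ** k) * r for k, r in enumerate(rewards))


# Hypothetical reward sequences for two plans.
brake_now = [-1.0, 0.0, 0.0]    # small comfort penalty now, nothing bad later
keep_speed = [1.0, 1.0, -10.0]  # progress now, large near-miss penalty later

for gamma in (0.1, 0.9):
    brake = discounted_return(brake_now, gamma)
    keep = discounted_return(keep_speed, gamma)
    choice = "brake_now" if brake > keep else "keep_speed"
    print(f"gamma={gamma}: brake={brake:.2f}, keep={keep:.2f} -> {choice}")
```

With a small `gamma` the agent is short-sighted and prefers to keep its speed. With a `gamma` close to 1 the later penalty dominates and braking wins. Choosing the discount factor is therefore part of deciding how far ahead the vehicle should care.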

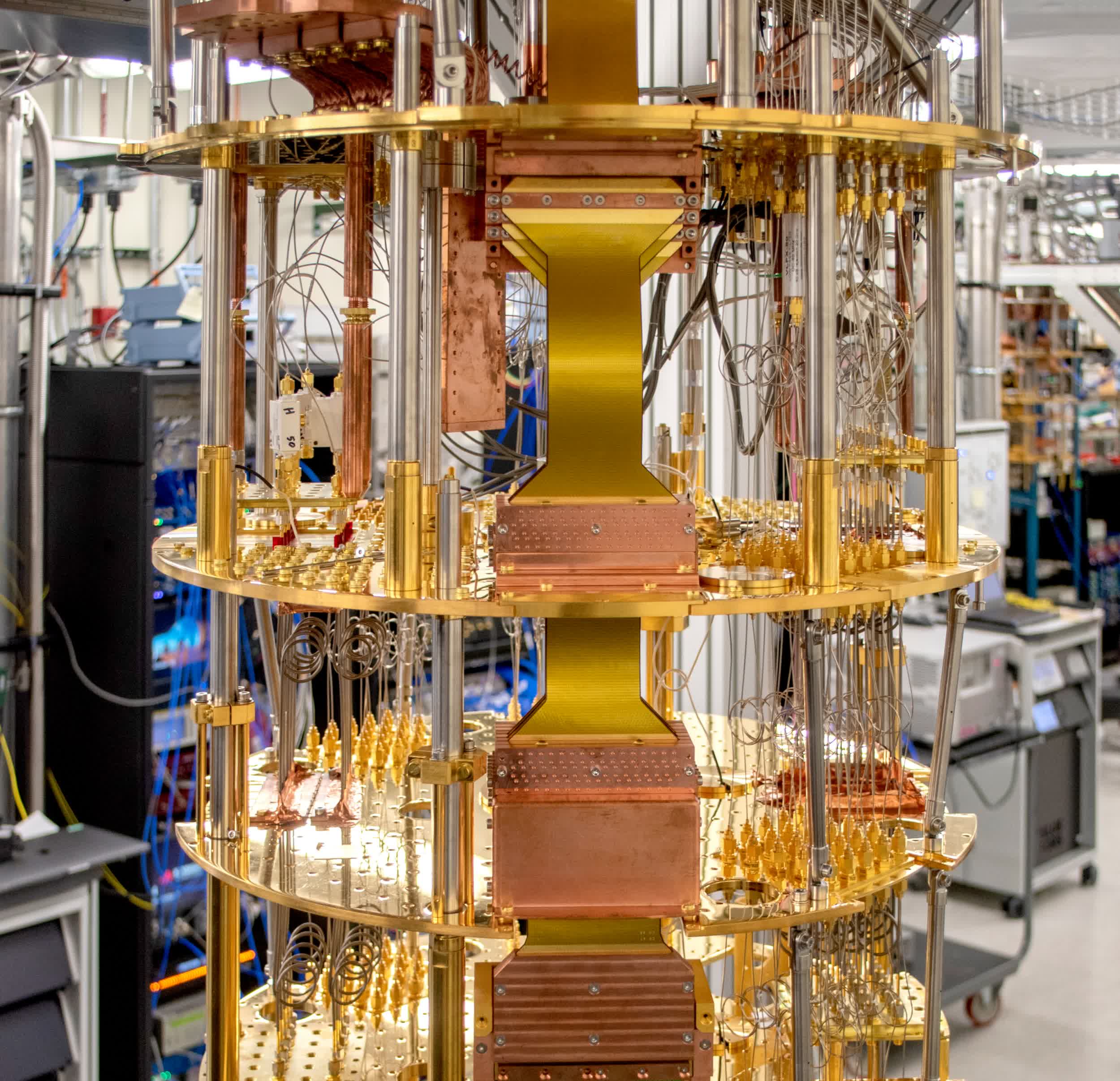

Let me draw a connection here to some of my past work on machine learning models for self-driving robots. The parallels are significant, especially in edge computing, where machine learning tasks must run in real time without relying on cloud infrastructure. My experience working with AWS in these environments has taught me that computational efficiency, battery life, and the ability to scale these models to higher-level transportation systems are crucial considerations.

Ethical and Safety Considerations

Another critical aspect of autonomous driving is ensuring safety and ethical decision-making within these systems. Unlike human drivers, autonomous vehicles need to be programmed with explicit moral choices, particularly in no-win situations—such as choosing between two imminent collisions. Companies like Tesla and Waymo have been grappling with these questions, which also bring up legal and societal concerns. For example, should these AI systems prioritize the car’s passengers or pedestrians on the street?

These considerations come alongside the rigorous testing and certification processes that autonomous vehicles must go through before being deployed on public roads. The coupling of artificial intelligence with the legal framework designed to protect pedestrians and passengers alike introduces a situational complexity rarely seen in other AI-driven industries.

Moreover, as we’ve discussed in a previous article on AI fine-tuning (“The Future of AI Fine-Tuning: Metrics, Challenges, and Real-World Applications”), implementing fine-tuning techniques can significantly reduce errors and improve reinforcement learning models. Platforms breaking new ground in the transportation industry need to continue focusing on these aspects to ensure AI doesn’t just act fast, but acts correctly and with certainty.

Networking and Multi-Vehicle Systems

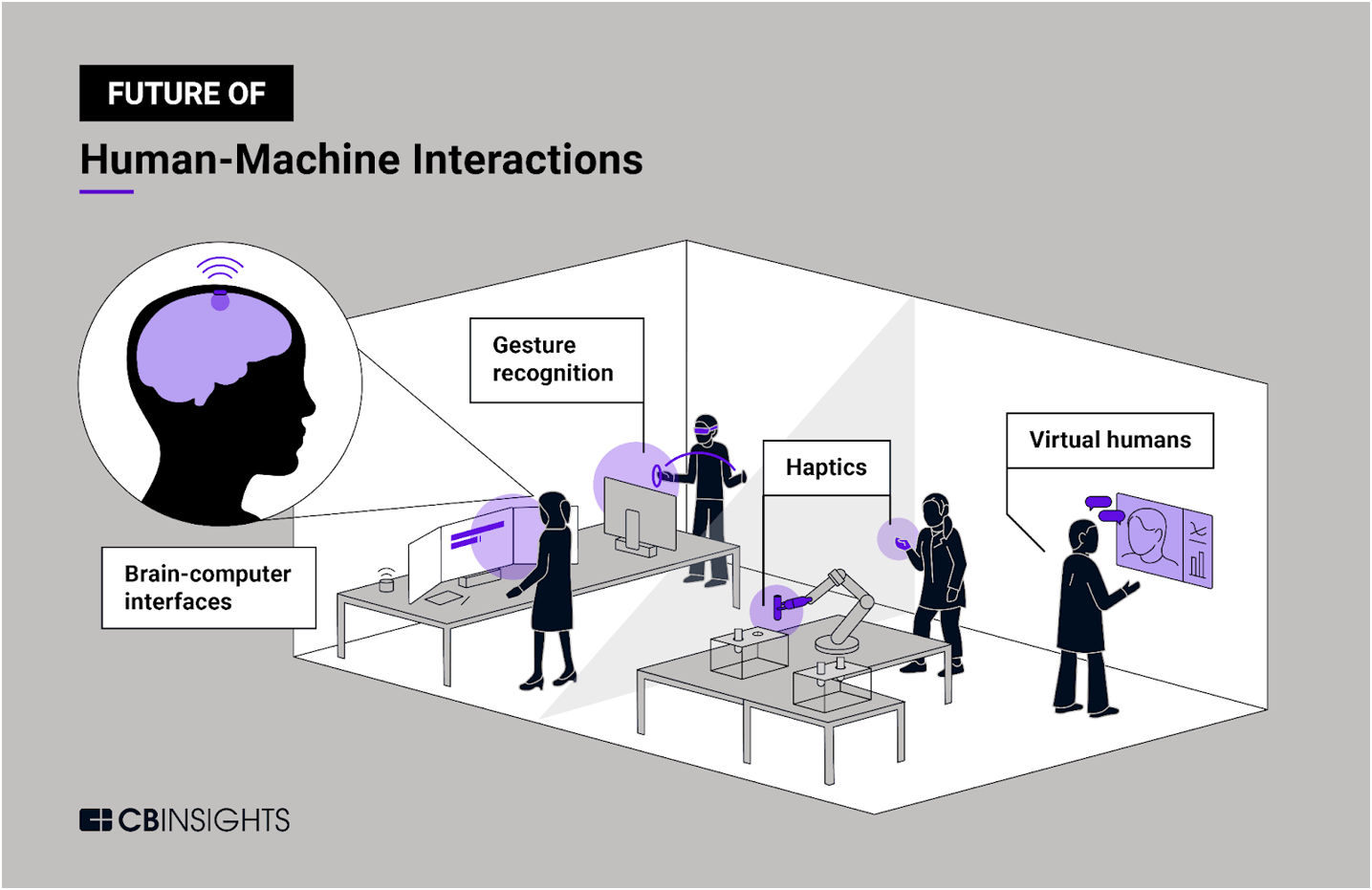

The future of autonomous driving lies not just in individual car intelligence but in inter-vehicle communication. Much of the efficiency gain from autonomous systems can come when vehicles anticipate each other’s movements and coordinate among themselves to optimize traffic flow. Consider Tesla’s Full Self-Driving (FSD) system: it improves through data collected across the fleet, which is a step toward this kind of “swarm intelligence”, even though direct vehicle-to-vehicle coordination is not yet part of it.

These interconnected systems closely resemble the multi-cloud strategies I’ve implemented in cloud migration consulting, particularly when dealing with communication and data processing across distributed systems. Autonomous “networks” of vehicles will need to adopt a similar approach, balancing bandwidth limitations, security requirements, and fault tolerance to ensure optimal performance.
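As a rough illustration of vehicles anticipating each other’s movements, the sketch below assumes each vehicle broadcasts a small message with its position and velocity, and that receivers extrapolate both paths at constant velocity to find the closest approach. The `IntentMessage` format and the `closest_approach` helper are hypothetical and do not correspond to any real vehicle-to-vehicle protocol.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class IntentMessage:
    """Hypothetical broadcast payload: position in metres, velocity in m/s."""
    vehicle_id: str
    x: float
    y: float
    vx: float
    vy: float


def closest_approach(a, b, horizon_s=5.0):
    """Time and distance of closest approach within the horizon.

    Assumes both vehicles keep a constant velocity.
    """
    px, py = b.x - a.x, b.y - a.y      # relative position
    vx, vy = b.vx - a.vx, b.vy - a.vy  # relative velocity
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        t = 0.0  # same velocity: the gap never changes
    else:
        t = max(0.0, min(horizon_s, -(px * vx + py * vy) / speed_sq))
    return t, math.hypot(px + vx * t, py + vy * t)


# Two vehicles heading for the same intersection point (30, 0).
east = IntentMessage("car-A", 0.0, 0.0, 10.0, 0.0)
north = IntentMessage("car-B", 30.0, -30.0, 0.0, 10.0)
t, gap = closest_approach(east, north)
print(f"closest approach in {t:.1f} s at {gap:.1f} m")
```

A message this small suits the bandwidth limits mentioned above. A real deployment would also need authentication and a fallback for lost or stale messages, which is where the security and fault-tolerance trade-offs come in.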

Challenges and Future Developments

While autonomy is progressing rapidly, complex challenges remain:

- Weather and Terrain Adaptations: Self-driving systems often struggle in adverse weather, on roads where lane markings are not visible, or when sensor data becomes degraded or corrupted.

- Legal Frameworks: Countries are still working to establish consistent regulations for driverless vehicles, and each region’s laws will affect how companies launch their products.

- AI Bias Mitigation: As in any data-driven system, biases can creep into the AI’s decision-making if the training data is not sufficiently diverse or accurately labeled.

- Ethical Considerations: What should the car do in rare, unavoidable accident scenarios? The public and insurers alike want to know, and so far there are no easy answers.
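For the data-diversity point in the list above, a first check is simply to measure how each driving condition is represented in the training set. The sketch below is a minimal version of that idea. The condition names, the sample counts, and the 10% threshold are illustrative assumptions.

```python
from collections import Counter


def underrepresented(conditions, min_share=0.10):
    """Return each condition whose share of the samples falls below min_share."""
    counts = Counter(conditions)
    total = sum(counts.values())
    return {c: n / total for c, n in counts.items() if n / total < min_share}


# Hypothetical per-sample condition tags for a training set of 100 clips.
samples = ["clear_day"] * 80 + ["rain"] * 12 + ["night"] * 6 + ["snow"] * 2
flagged = underrepresented(samples)
print(flagged)
```

This only flags conditions that appear at least once. Conditions missing from the data entirely have to be caught by comparing against an explicit list of the scenarios the vehicle is expected to handle.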

We also need to look beyond individual autonomy toward how cities themselves will fit into this new ecosystem. Will our urban planning adapt to self-driving vehicles, with AI systems communicating directly with smart roadways and traffic signals? These are questions that, in the next decade, will gain importance as autonomous and AI-powered systems become a vital part of transportation infrastructures worldwide.

Conclusion

The marriage of artificial intelligence and transportation has the potential to radically transform our lives. Autonomous driving brings together countless areas—from machine learning and deep learning to cloud computing and real-time decision-making. However, the challenges are equally daunting, ranging from ethical dilemmas to technical hurdles in multi-sensor integration.

In previous discussions we’ve touched on AI paradigms and their role in developing fine-tuned systems (“The Future of AI Fine-Tuning: Metrics, Challenges, and Real-World Applications”). As we push the boundaries toward more advanced autonomous vehicles, refining those algorithms will only become more critical. Will an autonomous future usher in fewer accidents on the roads, more efficient traffic systems, and reduced emissions? Quite possibly. But we need to ensure that these systems are carefully regulated, exceptionally trained, and adaptable to the diverse environments they’ll navigate.

The future is bright, but as always with AI, it’s crucial to proceed with a clear head and evidence-based strategies.

