Neural Networks: The Pillars of Modern AI

The field of Artificial Intelligence (AI) has taken a transformative leap forward with the advent and application of neural networks. These computational models have established themselves as foundational components of intelligent machines that can understand, learn from, and interact with the world in ways that were once the preserve of science fiction. Drawing on my background in AI, cloud computing, and security, my hands-on work with these technologies at DBGM Consulting, Inc., and my academic grounding from Harvard, I’ve come to appreciate the scientific rigor and engineering behind neural networks.

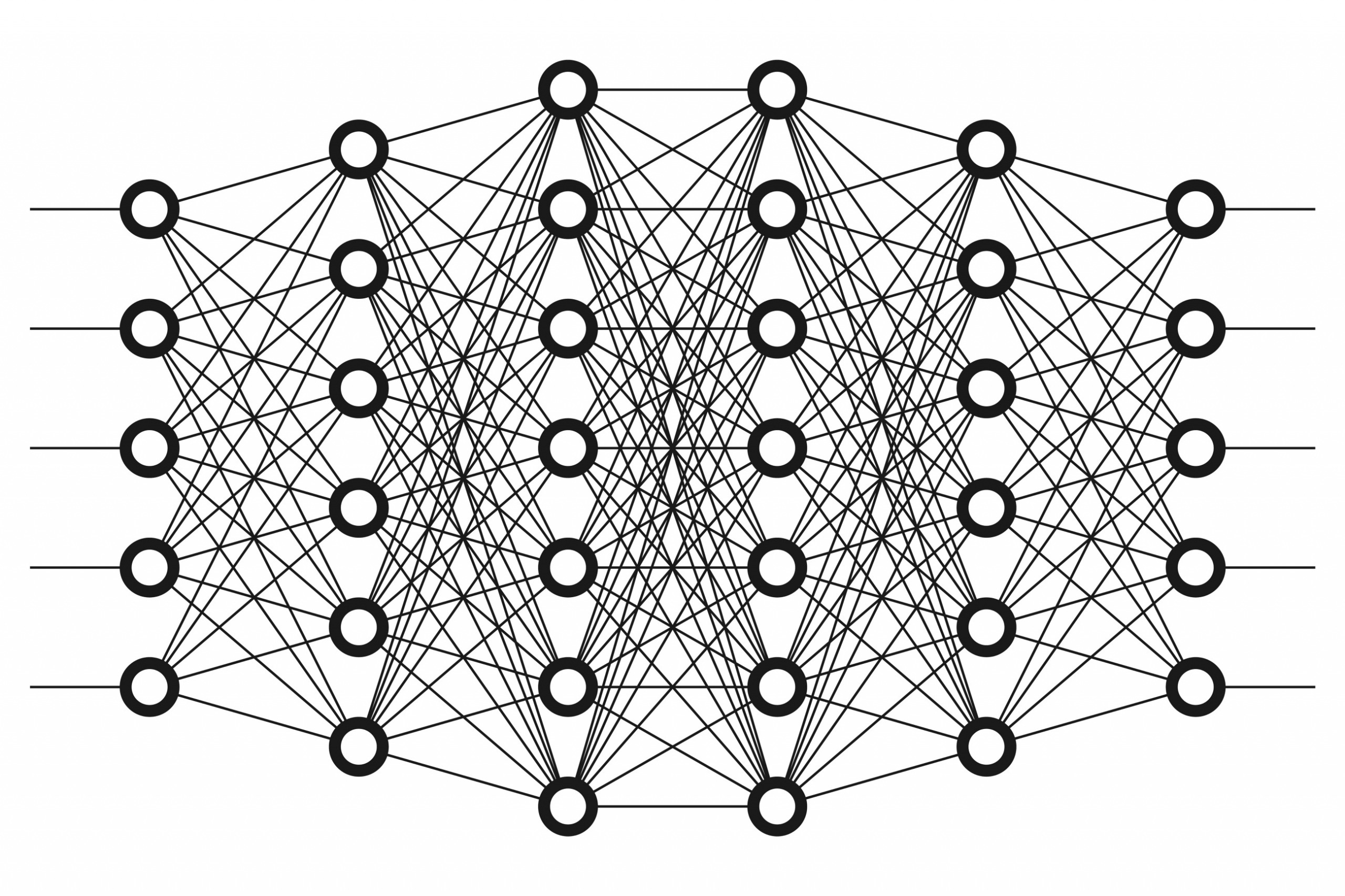

Understanding the Crux of Neural Networks

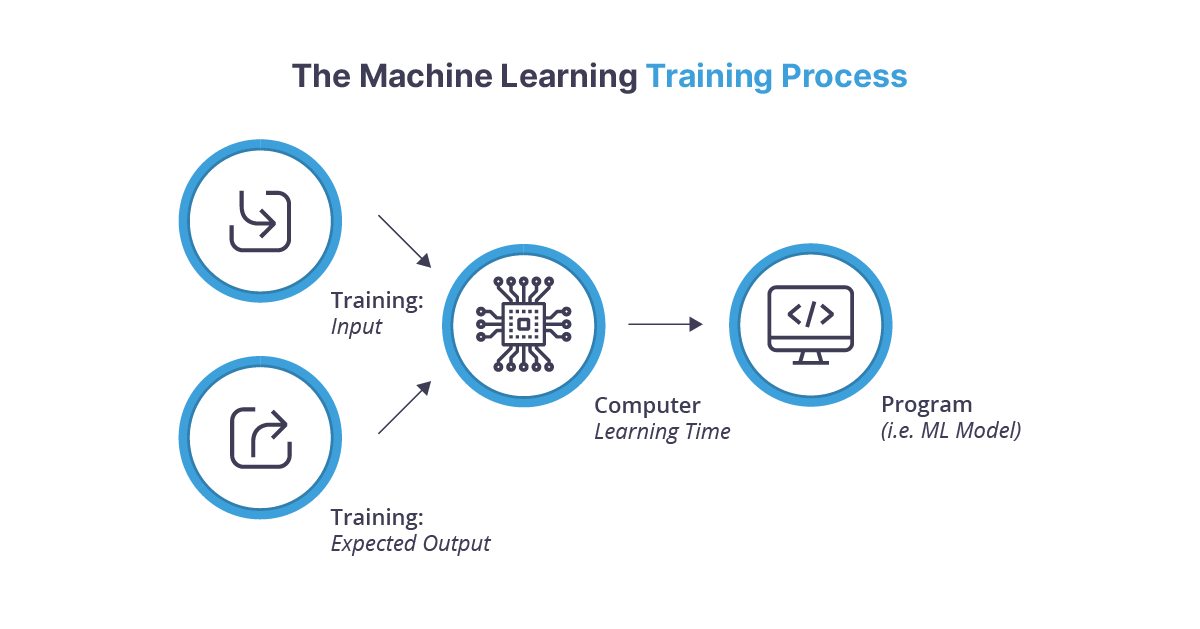

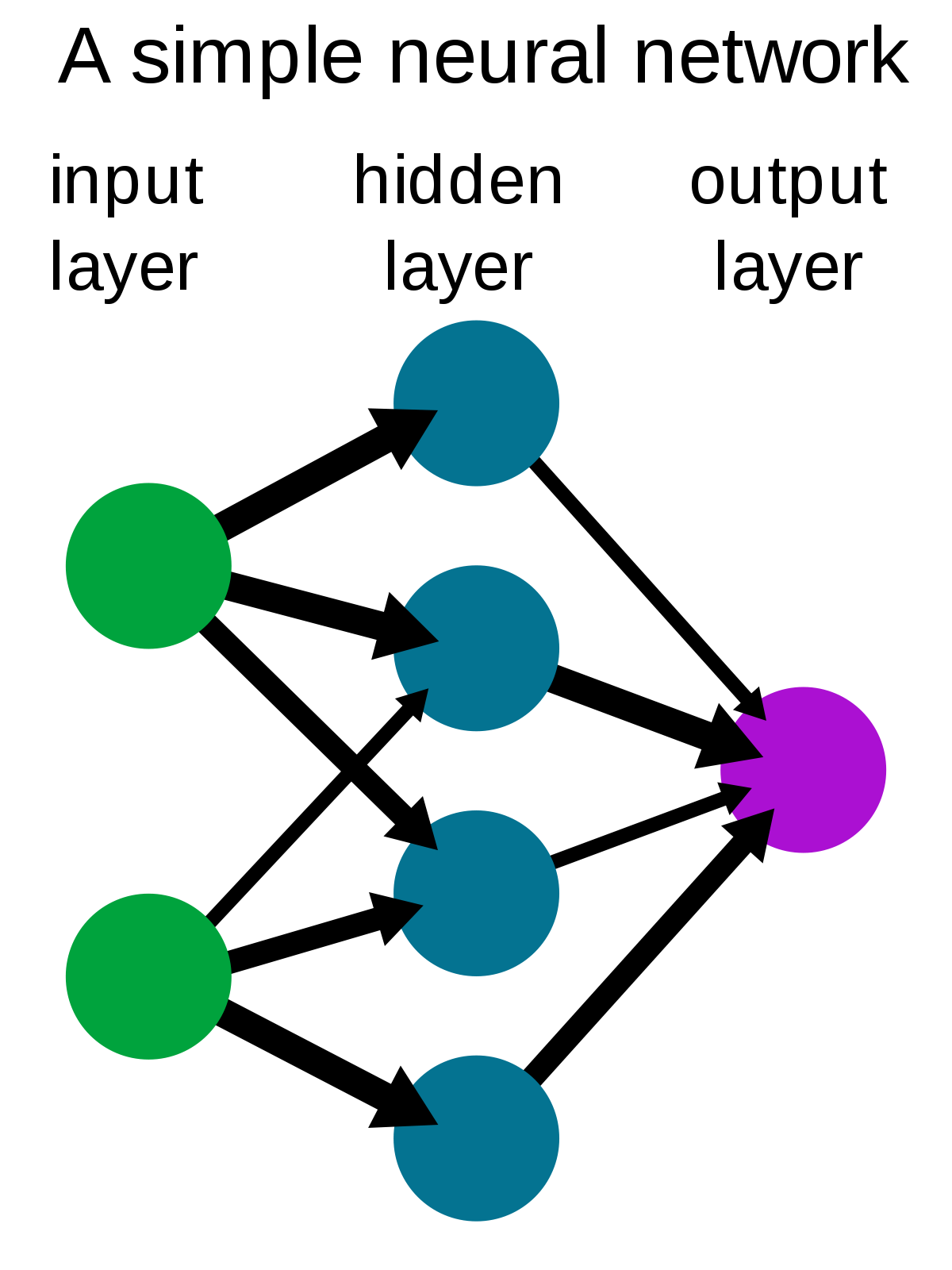

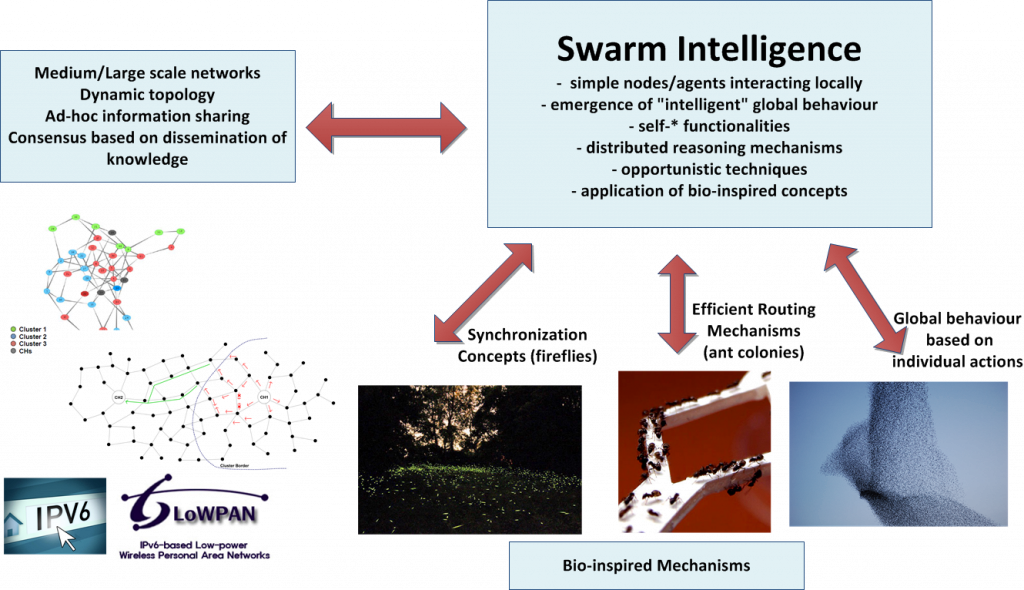

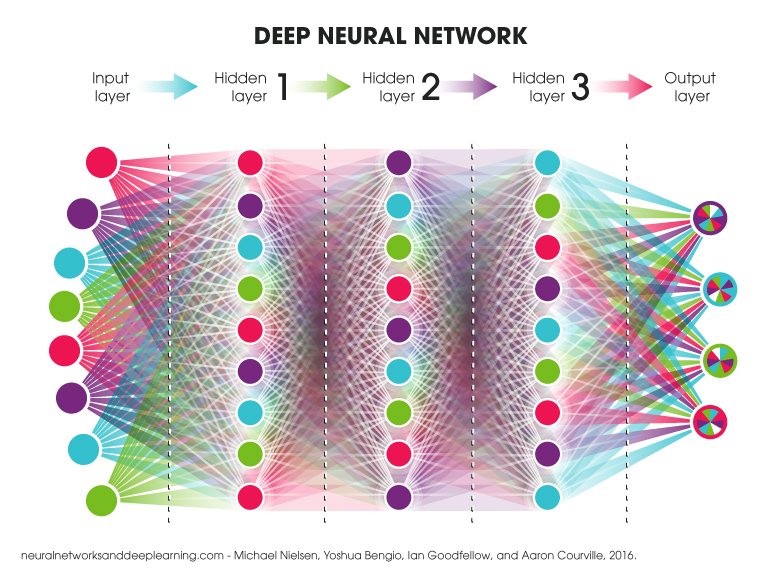

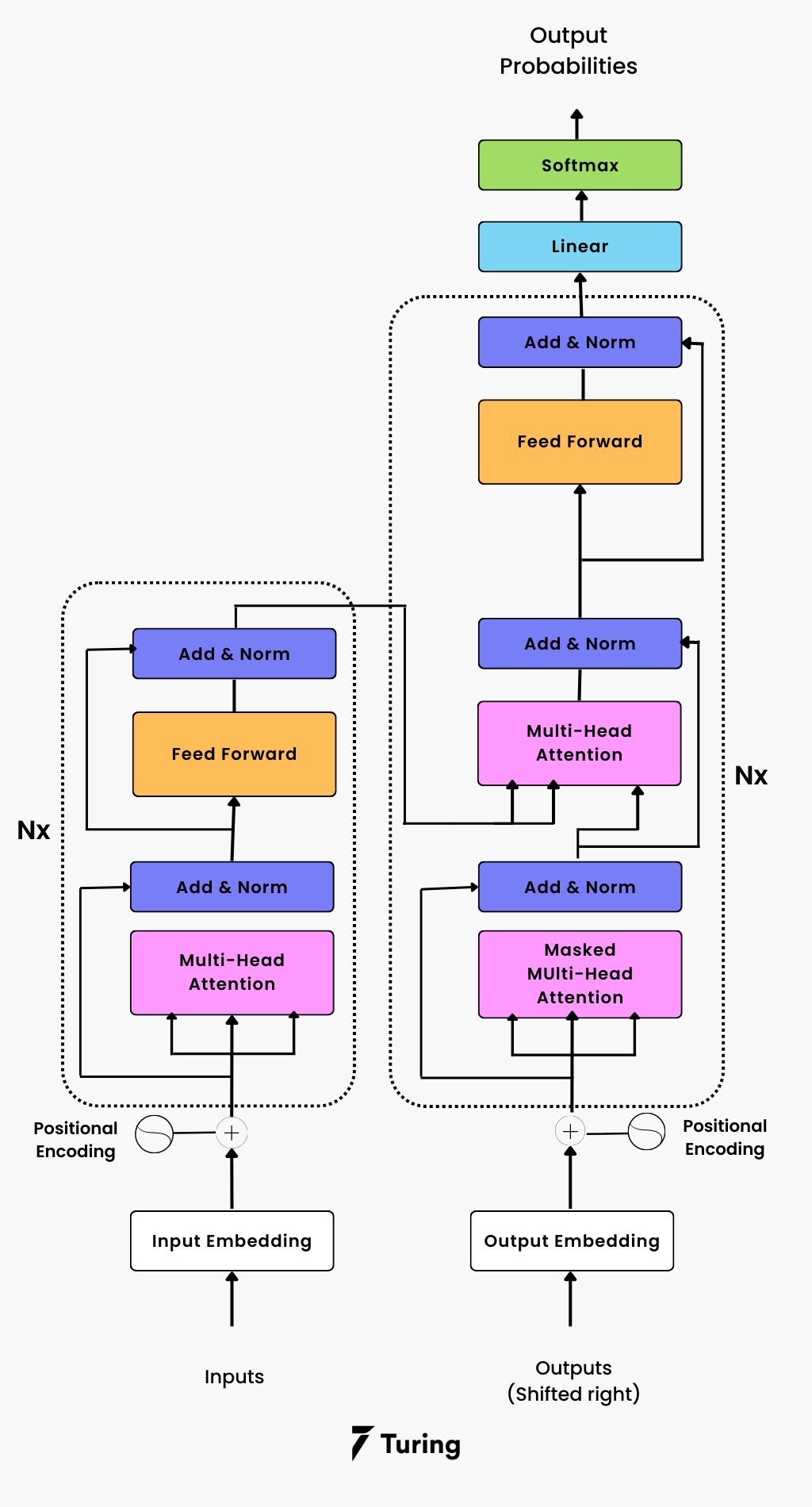

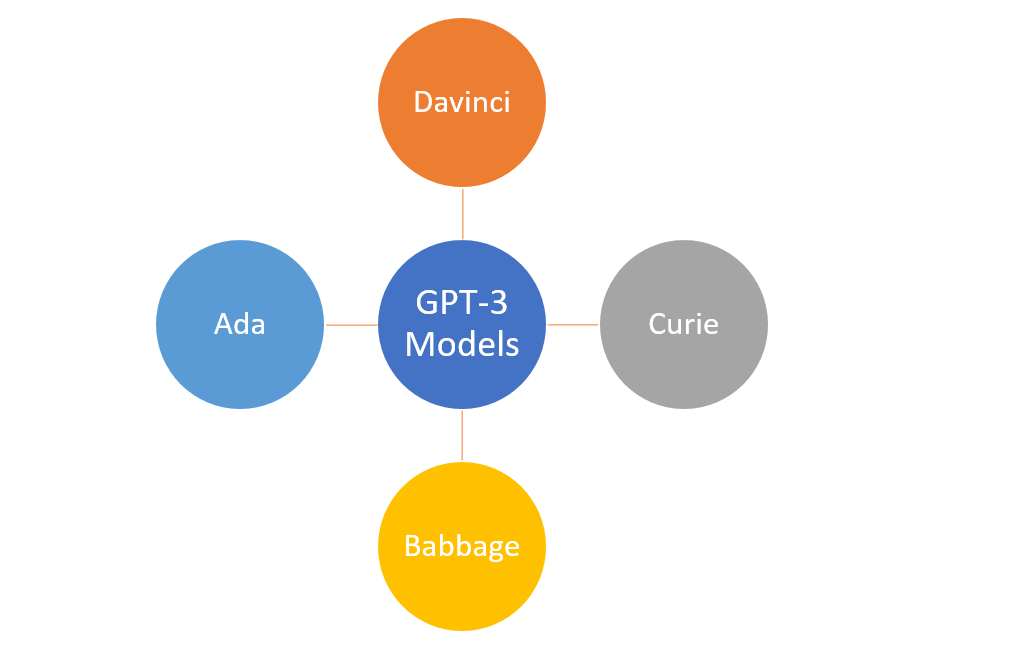

At their core, neural networks are inspired by the structure and function of the human brain. They are composed of nodes, or “neurons”, interconnected to form a network. Much as the brain processes information through synaptic connections, a neural network passes input data through successive layers of nodes, with each layer deriving higher-level features from the output of the one before it. This ability to learn iteratively from data, rather than from hand-written rules, makes them well suited to a wide range of applications, from speech recognition to predictive analytics.
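
To make the layer-by-layer idea concrete, here is a minimal sketch of a forward pass through a tiny network with one hidden layer. The weights, biases, and function names (`dense`, `forward`, and so on) are made up for illustration and are not taken from any particular library; a real network would learn its weights from data rather than hard-code them.

```python
import math

def relu(values):
    """Rectified linear unit: negative values become zero."""
    return [max(0.0, v) for v in values]

def sigmoid_all(values):
    """Squash each value into the range (0, 1)."""
    return [1.0 / (1.0 + math.exp(-v)) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: each node is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the input through each layer in turn; every layer sees the previous layer's output."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = activation(dense(activations, weights, biases))
    return activations

# Hypothetical, hand-picked parameters: 2 inputs -> 3 hidden nodes -> 1 output.
hidden_layer = ([[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]], [0.0, 0.1, -0.1], relu)
output_layer = ([[0.7, -0.5, 0.2]], [0.05], sigmoid_all)

prediction = forward([1.0, 2.0], [hidden_layer, output_layer])
print(prediction)  # a single value between 0 and 1
```

Each call to `dense` plays the role of one layer of neurons, and the activation function applied afterwards is what lets stacked layers represent more than a single weighted sum could.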

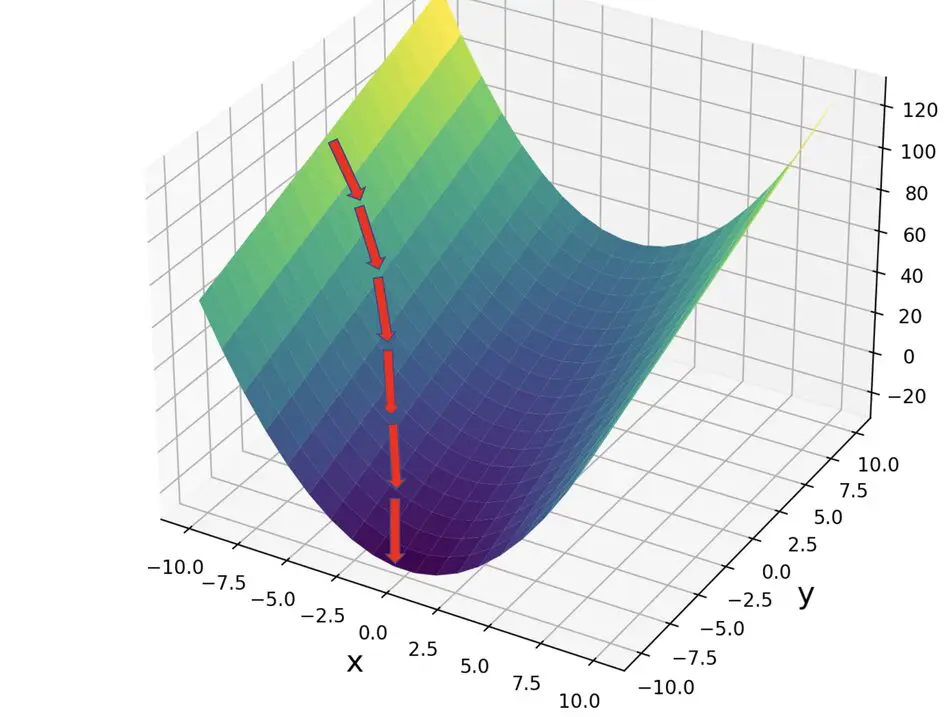

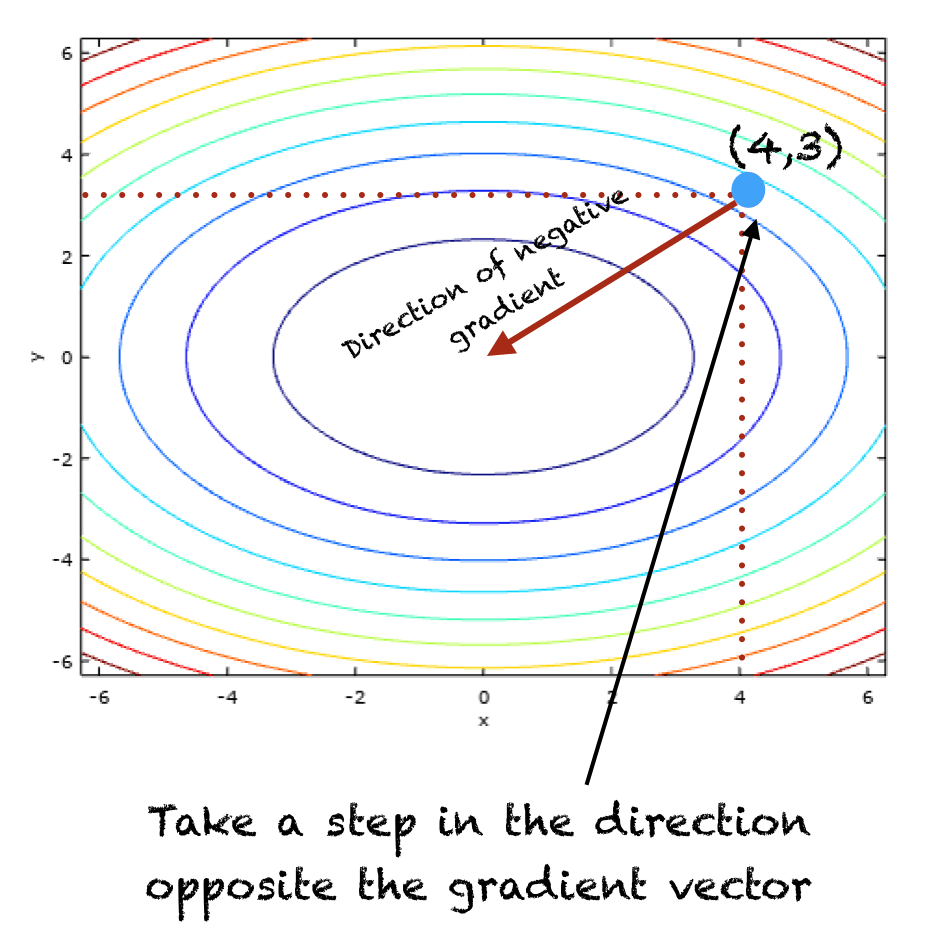

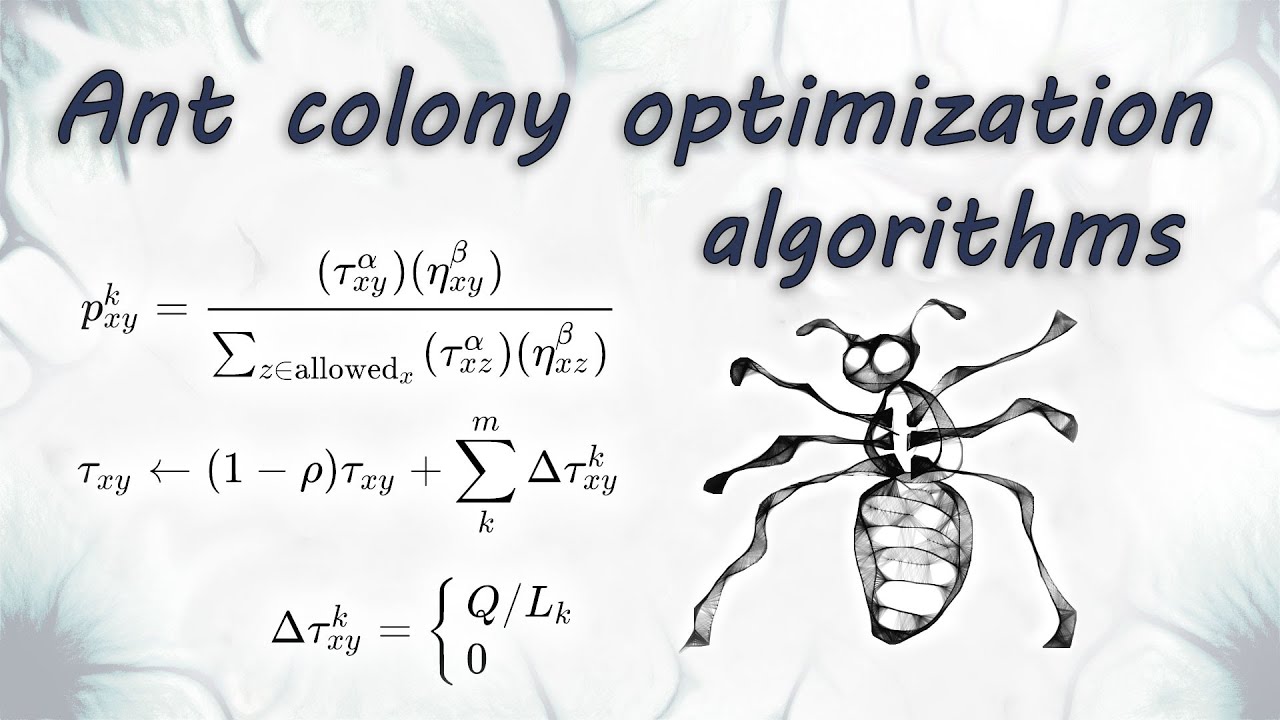

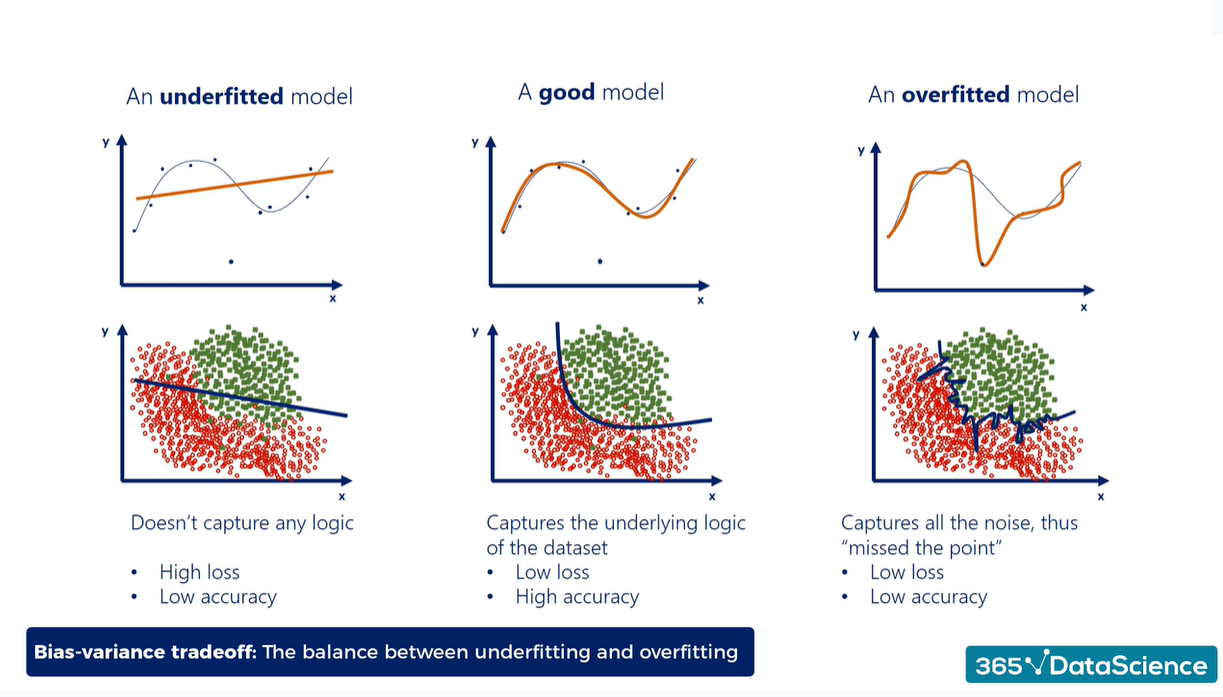

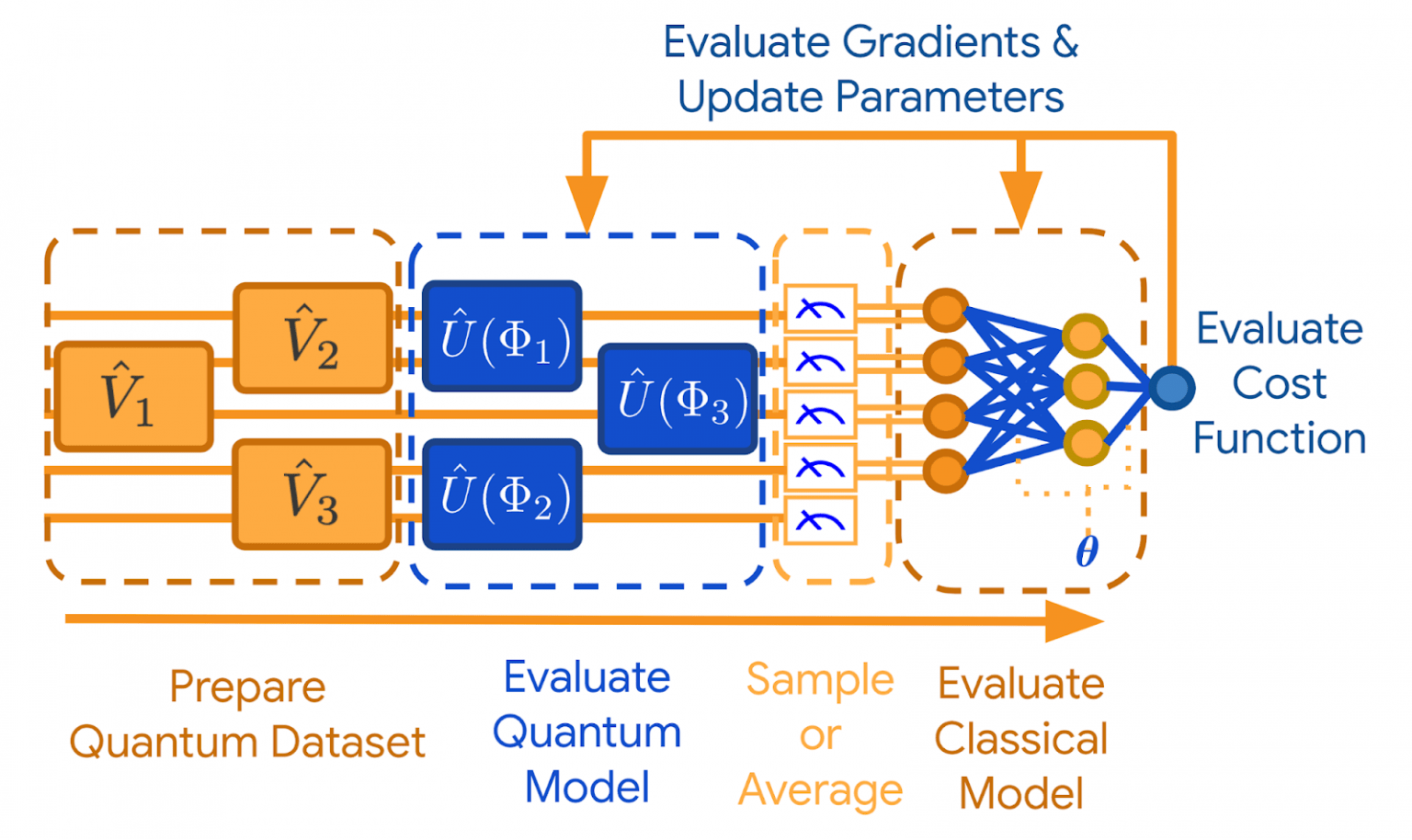

My interest in physics and mathematics, particularly calculus and probability theory, has given me a deep appreciation for the inner workings of neural networks. These mathematical foundations are what allow neural networks to learn intricate patterns through optimization techniques such as gradient descent, which repeatedly adjusts a model’s weights in the direction that reduces its prediction error. We have explored this concept in depth in Impact of Gradient Descent in AI and ML.
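
As a rough illustration of gradient descent, the sketch below fits a straight line to a handful of points by repeatedly stepping the parameters against the gradient of the mean squared error. The function name `fit_line`, the learning rate, the step count, and the toy data are all assumptions chosen for the example; training a real neural network applies the same update rule to many more parameters, with gradients computed by backpropagation.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every data point under the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (an assumption for this example).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_line(xs, ys)
print(w, b)  # approaches w = 2, b = 1
```

The learning rate controls the step size: too large and the updates overshoot and diverge, too small and convergence becomes needlessly slow.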

Applications and Impact

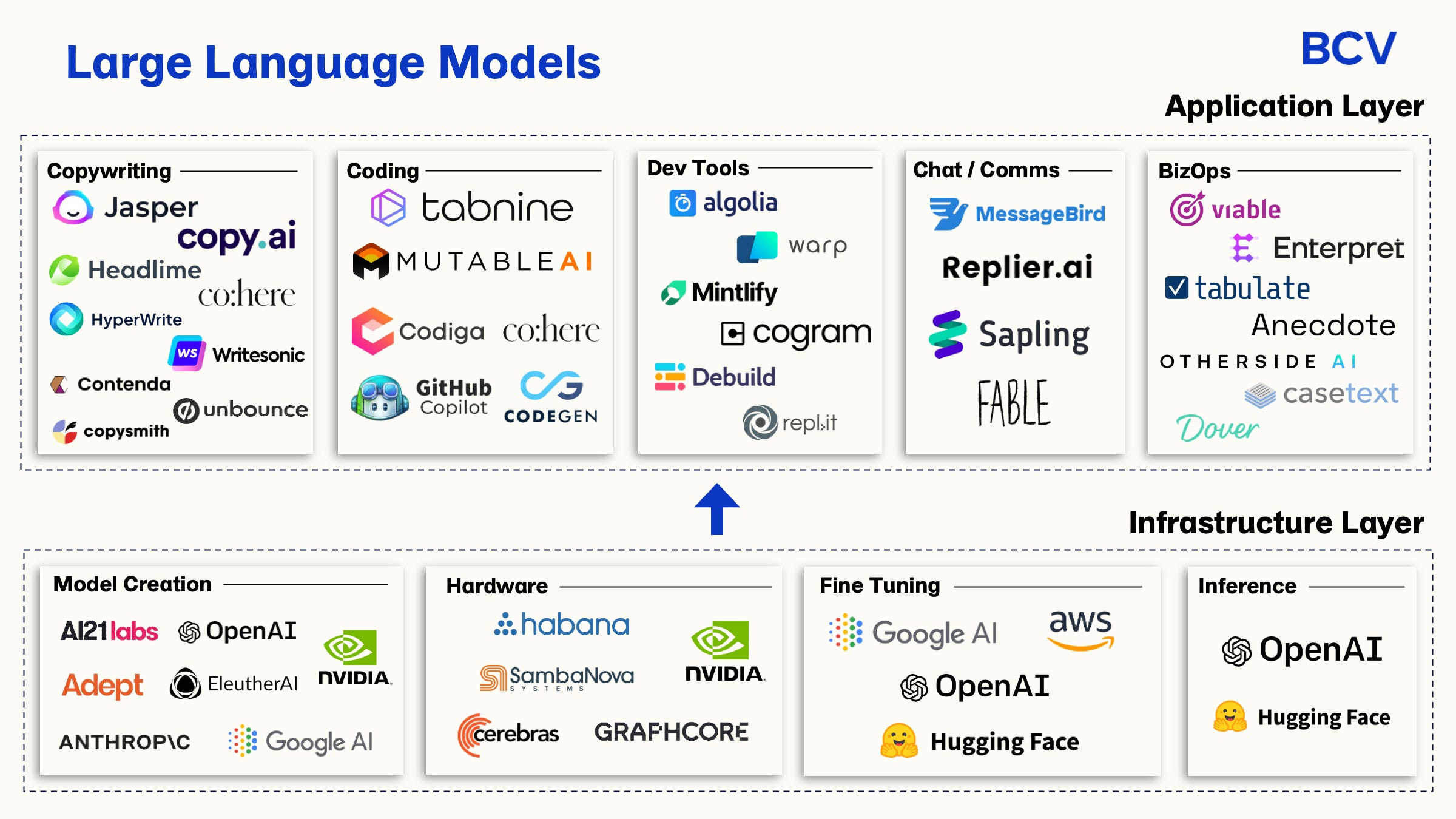

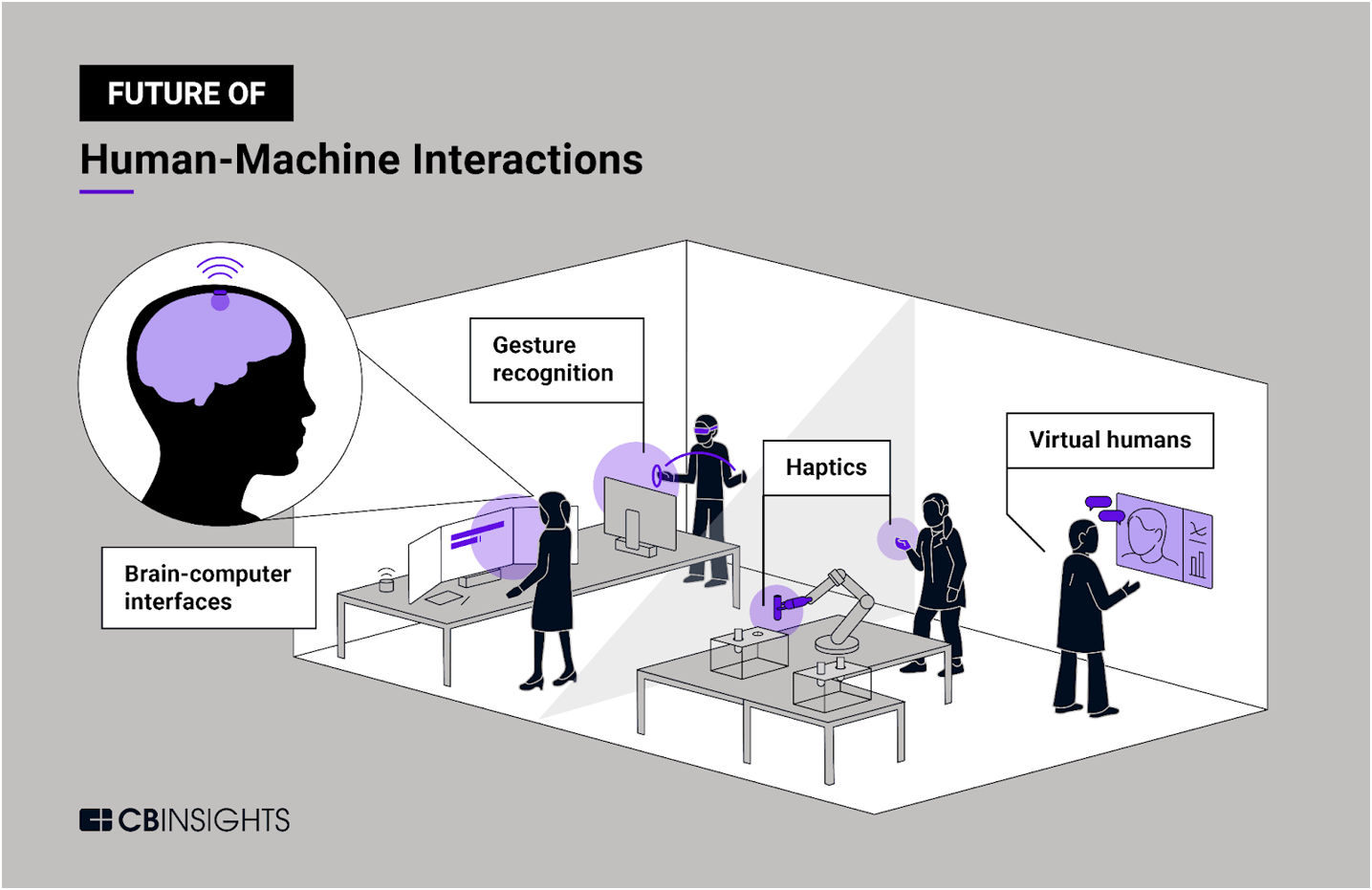

The applications of neural networks in today’s society are both broad and impactful. In my work at Microsoft and with my current firm, I have seen firsthand how these models can drive efficiency, innovation, and transformation across sectors. Their versatility ranges from automating customer service interactions with intelligent chatbots to strengthening security protocols through anomaly detection.

Moreover, my academic research on machine learning algorithms for self-driving robots highlights the critical role of neural networks in enabling machines to navigate and interact with their environment in real time. This interplay of theory and application underscores the transformative power of AI, as evidenced by the evolution of deep learning outlined in Pragmatic Evolution of Deep Learning: From Theory to Impact.

Potential and Caution

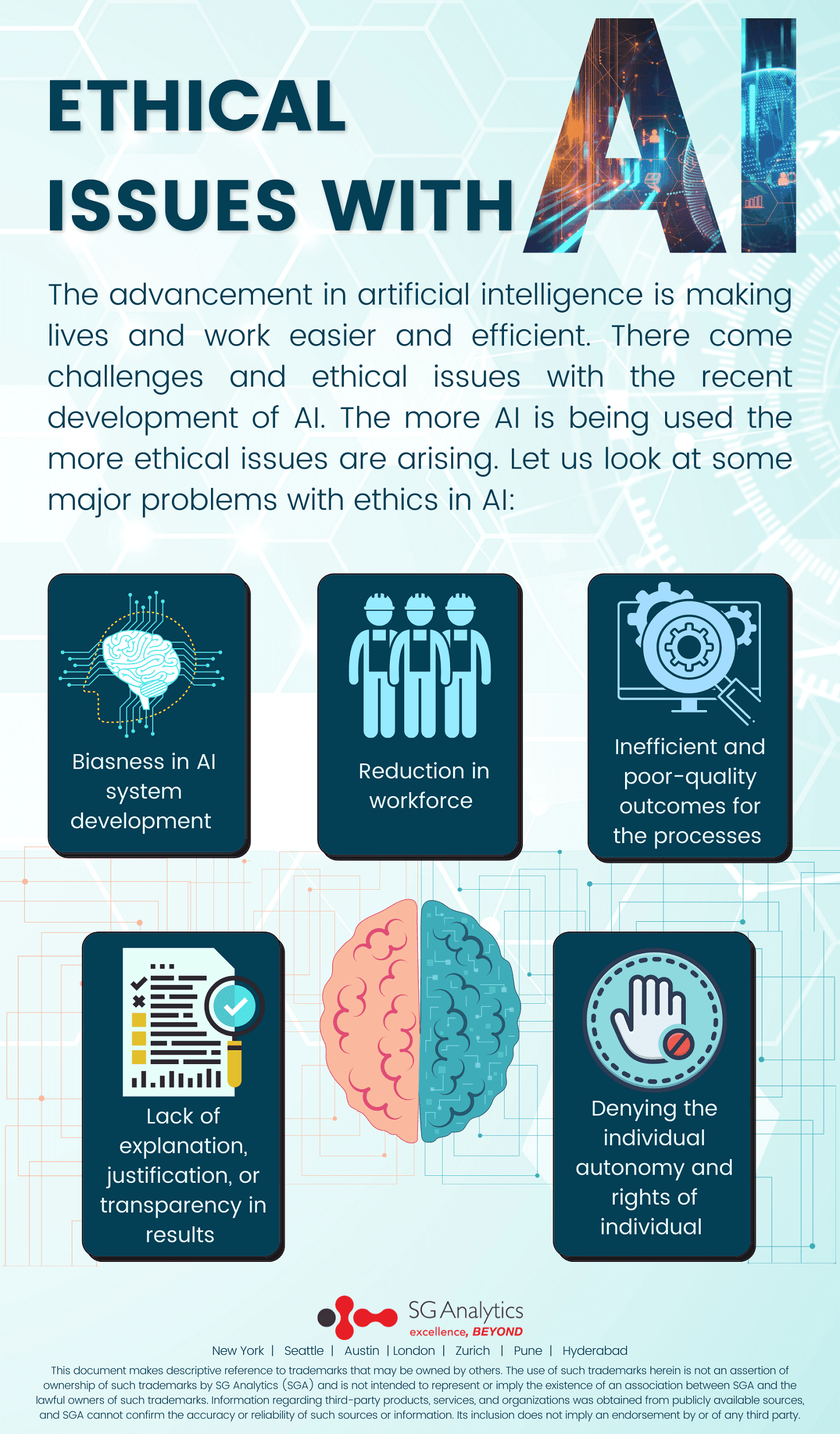

While the potential of neural networks and AI at large is immense, my approach to the technology is marked by both optimism and caution. The ethical implications of AI, particularly concerning privacy, bias, and autonomy, require careful consideration. It is here that my skeptical, evidence-based outlook matters most: I advocate a balanced approach to AI development that prioritizes ethical considerations alongside technological advancement.

The balance between innovation and ethics in AI is a theme I have explored in previous discussions, such as the ethical considerations surrounding Generative Adversarial Networks (GANs) in Revolutionizing Creativity with GANs. As we venture further into this new era of cognitive computing, it’s imperative that we do so with a mindset that values responsible innovation and the sustainable development of AI technologies.

Conclusion

The journey through the development and application of neural networks in AI is a testament to human ingenuity and our relentless pursuit of knowledge. Through my professional experiences and personal interests, I have witnessed the power of neural networks to drive forward the frontiers of technology and improve countless aspects of our lives. However, as we continue to push the boundaries of what’s possible, let us also remain mindful of the ethical implications of our advancements. The future of AI, built on the foundation of neural networks, promises a world of possibilities—but it is a future that we must approach with both ambition and caution.

As we reflect on the evolution of AI and its profound impact on society, let’s continue to bridge the gap between technical innovation and ethical responsibility, fostering a future where technology amplifies human potential without compromising our values or well-being.
