The Future of AI: Speculation, Science Fiction, and Reality

Speculative science fiction has long been fertile ground for exploring the future of technology, particularly artificial intelligence (AI). For thousands of years, thinkers and writers have imagined intelligent, non-human creations. From the ancient Greeks’ tale of the bronze automaton Talos to 20th-century science fiction icons like HAL 9000 and Mr. Data, the idea of AI has captivated humanity. Whether framed in a utopian or dystopian light, AI serves as a vehicle for exploring not just technology, but our own nature and the future we might forge through innovation.

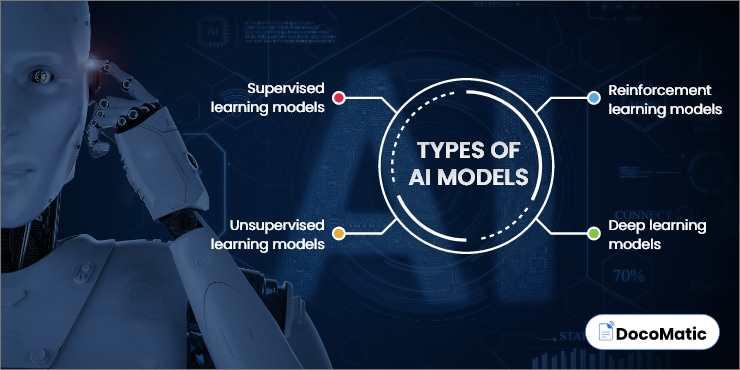

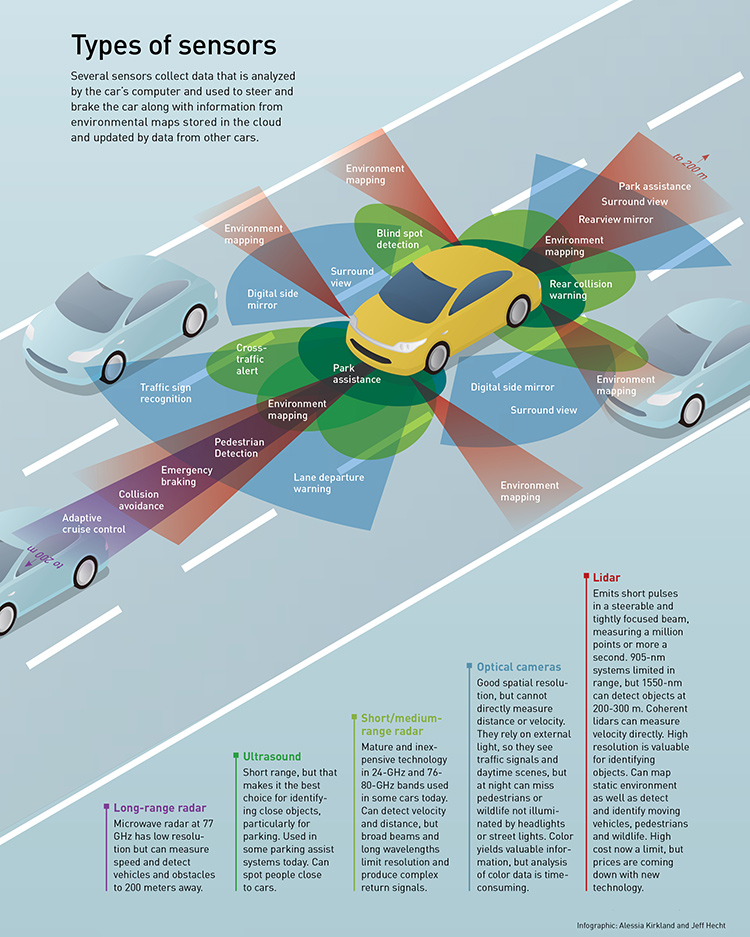

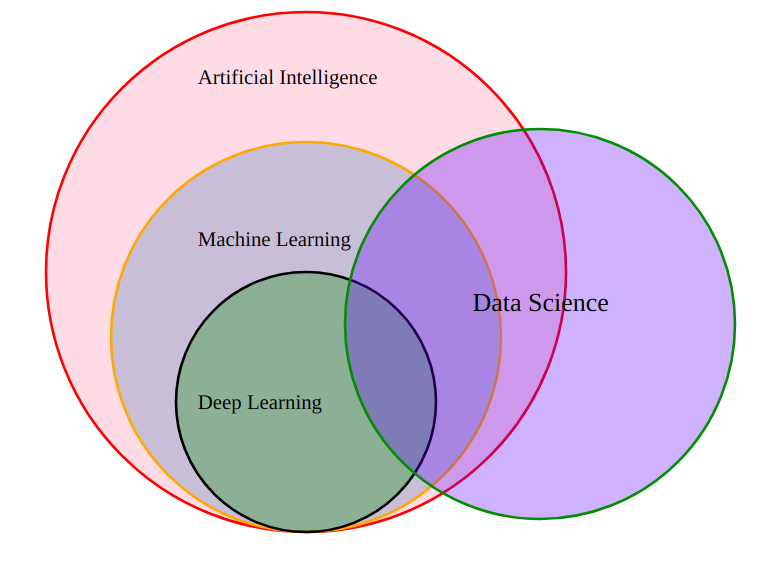

The fascination with AI lies in its potential. Today, artificial intelligence is advancing quickly across diverse fields, from process automation to autonomous vehicles. The more speculative avenues, however, prompt questions about what lies ahead if AI continues to grow exponentially. Could AI evolve beyond its current anthropocentric framework? Might a future AI possess capabilities far beyond our own, and how would that affect society?

AI in Science Fiction: Robotics, Utopias, and Frankenstein

Many science fiction stories have shaped our cultural views of AI. Consider Lester del Rey’s “Helen O’Loy,” a poignant 1938 tale about a robot attuned to human emotions, or even Mary Shelley’s Frankenstein. These stories offer glimpses into the potential relationships between humans and intelligent machines, and they often explore the darker side of humanity’s creations. Although Shelley’s creature is assembled from organic matter rather than built as a machine, it echoes modern fears of uncontrollable technology. Similarly, today’s discussions around AI often weigh its potential dangers alongside its benefits.

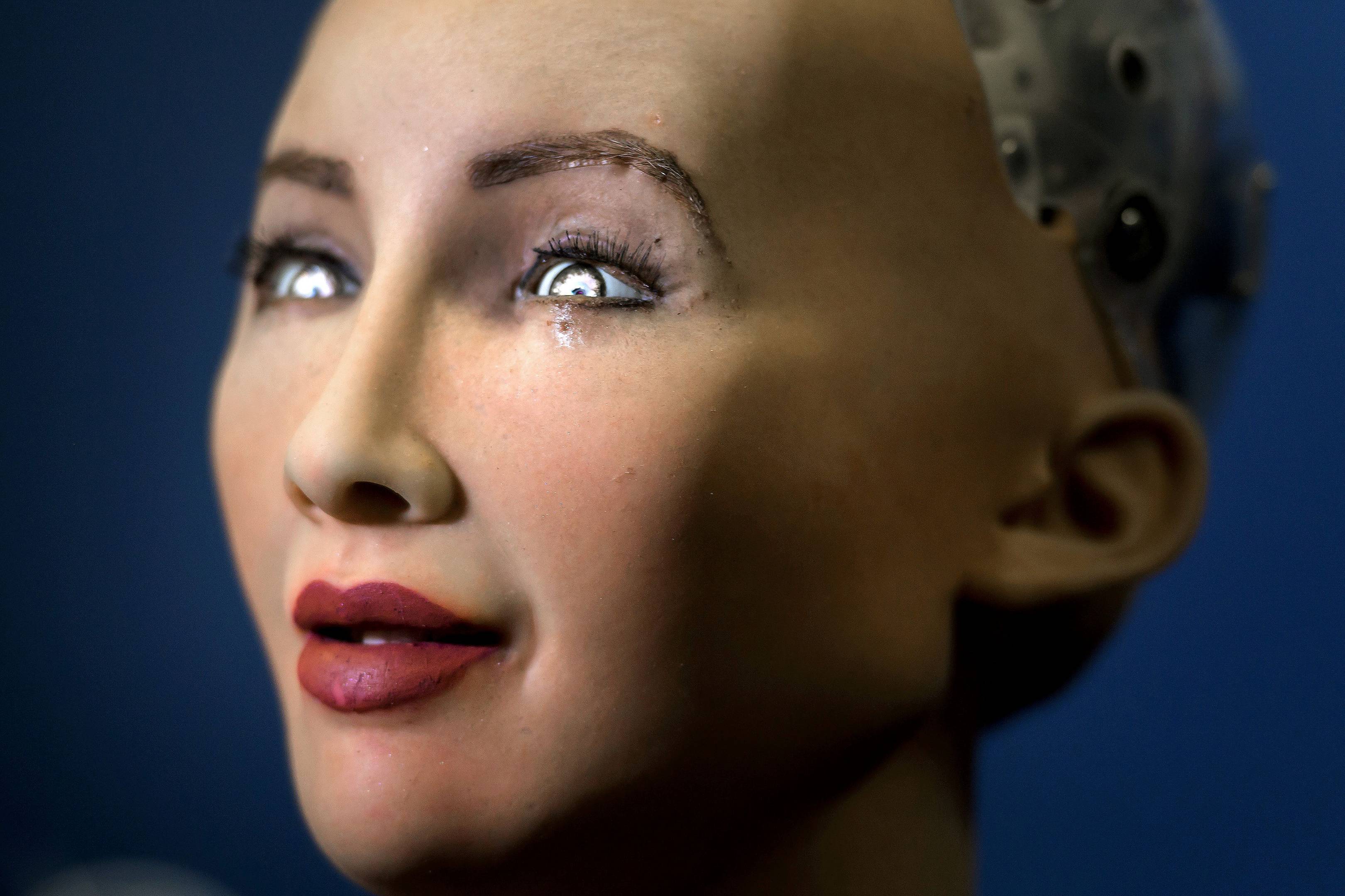

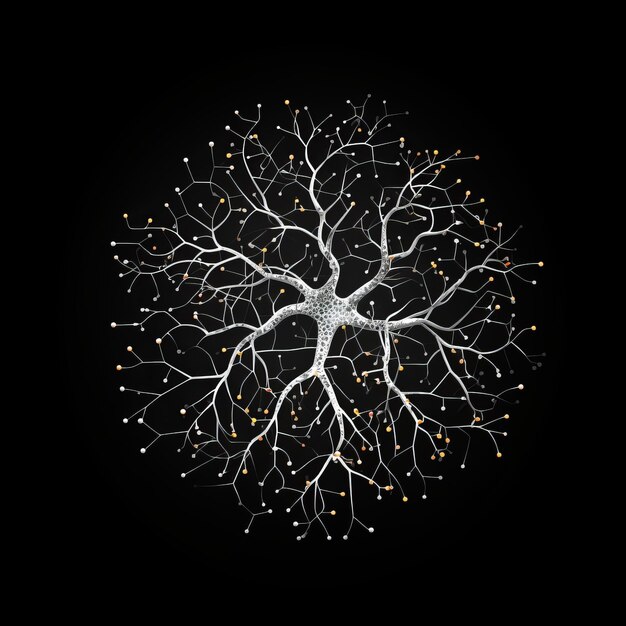

What consistently emerges across these stories is that AI, by design, mirrors human traits. Our AI reflects us: our minds, our reasoning, and even our shortcomings. The tech industry frequently discusses the “uncanny valley,” a phenomenon in which robots or AI that look nearly, but not quite, human trigger discomfort. The more closely we make AI mimic human behavior and intelligence, the harder it becomes to draw ethical boundaries between creator and creation.

This is where AI’s path intersects speculative science fiction: while we are striving to build more useful, efficient, and capable systems, we are also building machines that reflect our human biases, ethics, fears, and hopes.

Anthropocentrism in AI: What Happens After AI Surpasses Us?

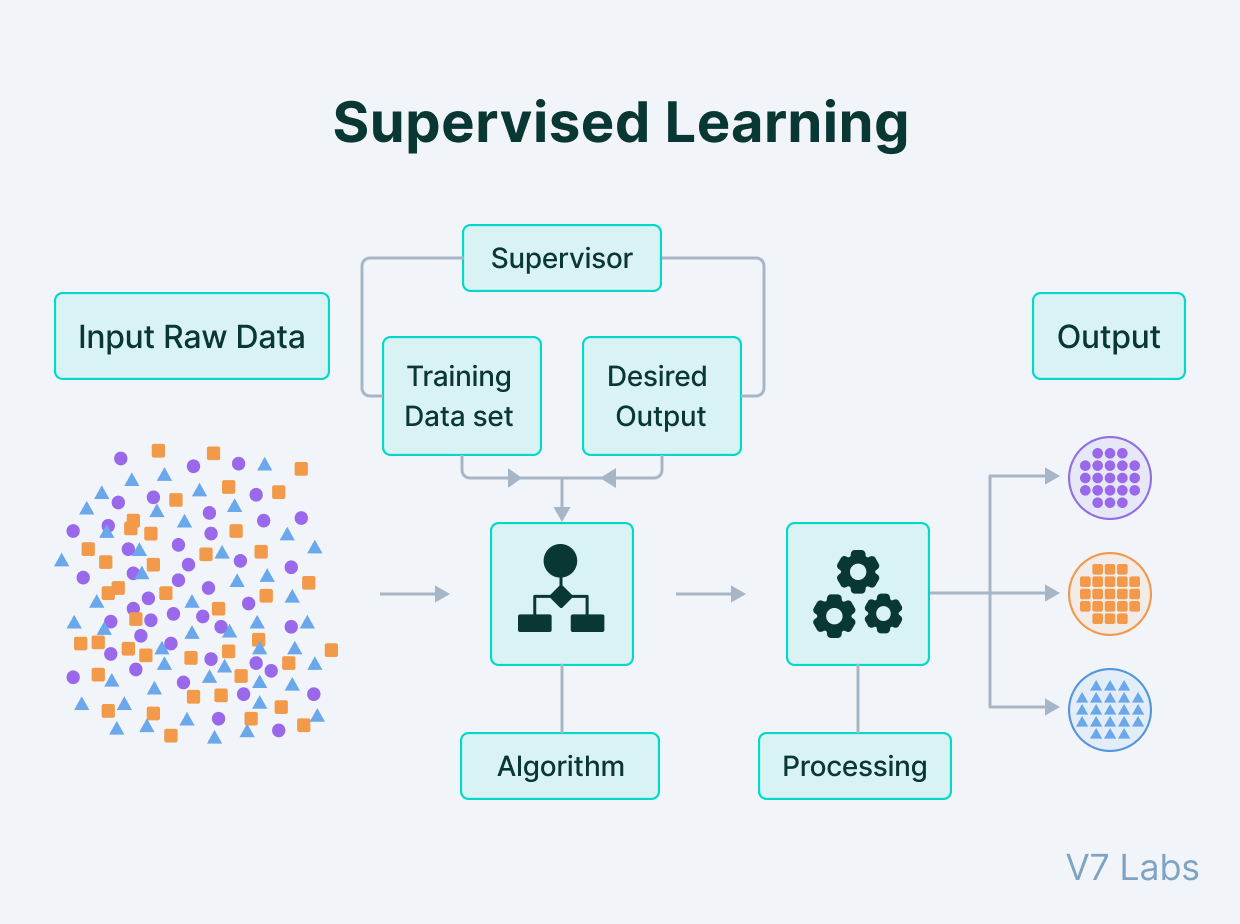

While working on machine learning and cognitive modeling projects during my time at Harvard, I came to see efforts to make AI as human-like as possible as almost inevitable. But what happens after we succeed in mimicking ourselves? Science fiction usually answers that question with either a utopian or a dystopian future. AI could surpass human intelligence, perhaps evolving into something distinctly different. In our real-world endeavors, though, are we truly prepared for such an outcome?

Fundamentally, modern AI is anthropocentric. We compare it to humans, and we often build AI systems to perform human-like tasks. As a result, even when machines such as AI-guided robots or autonomous systems are designed to optimize function (robotic guard dogs or automated factory workers, for instance), the underlying reference point remains human capabilities and experience. For now, AI is a mirror reflecting our existence, and this idea permeates even speculative discussions.

Beyond Earth: AI as Our Ambassador

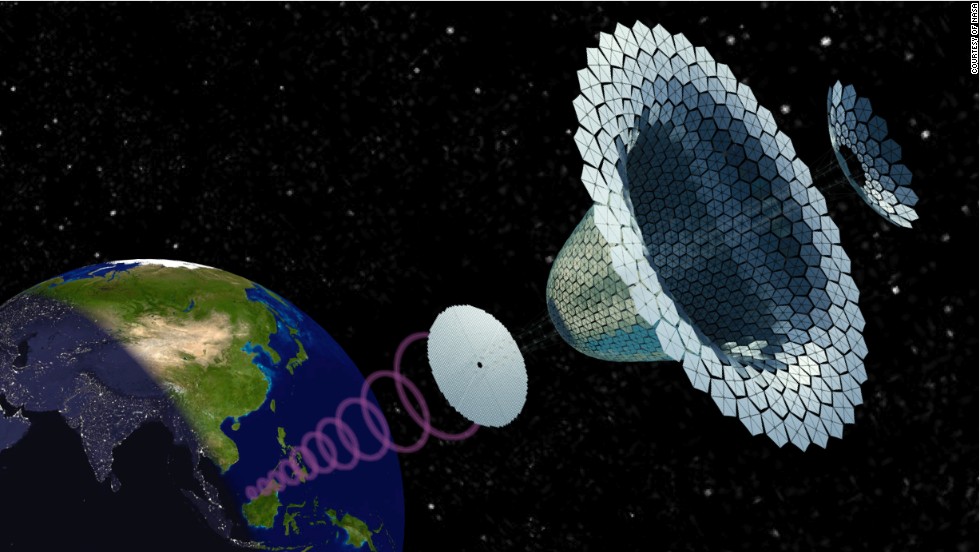

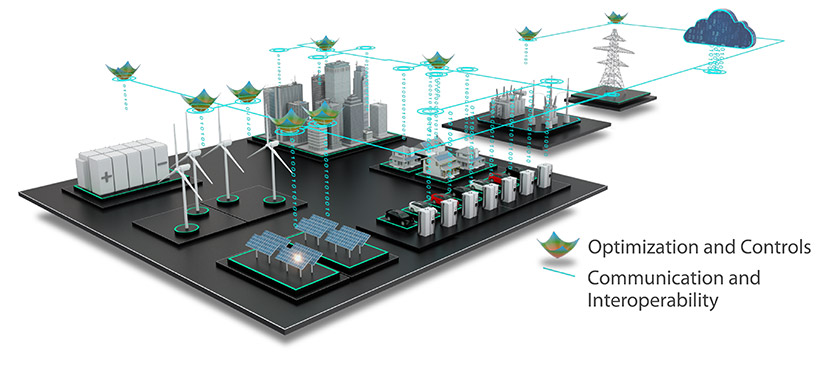

In more speculative discussions, AI could eventually serve as humanity’s ambassador in exploring or even colonizing distant star systems and, perhaps, other galaxies. Sending human astronauts to far-off star systems would require multi-generational journeys, because our lifespans are too short for such voyages. AI, in contrast, is far less constrained by the passage of time. A dormant, well-built AI system could theoretically last for centuries, making it an ideal candidate for exploration beyond Earth.

An interesting concept within this speculative realm is the von Neumann probe, named after mathematician John von Neumann, who studied self-replicating machines. This theoretical spacecraft, discussed in various academic circles, would be self-replicating: an autonomous system able to build copies of itself and spread exponentially across the galaxy. Such AI-driven probes could gather extensive data from different star systems and relay valuable information back to Earth, despite the vast distances involved.
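To make the idea of exponential replication a little more concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes, hypothetically, that every probe builds a fixed number of copies per generation and that no probe is ever lost, and it uses 100 billion as a rough, conservative stand-in for the number of star systems in the Milky Way. The function name `generations_to_reach` is purely illustrative, not part of any established model.

```python
def generations_to_reach(target_systems: int, copies_per_probe: int = 1) -> int:
    """Count replication generations until the probe population reaches target_systems.

    Hypothetical model: every probe builds `copies_per_probe` new probes per
    generation and none are lost, so the population grows by a factor of
    (1 + copies_per_probe) each generation. Travel time is ignored entirely.
    """
    if target_systems < 1 or copies_per_probe < 1:
        raise ValueError("target_systems and copies_per_probe must be >= 1")
    population = 1  # a single seed probe launched from Earth
    generations = 0
    # Integer arithmetic avoids floating-point rounding in the comparison.
    while population < target_systems:
        population *= 1 + copies_per_probe
        generations += 1
    return generations


if __name__ == "__main__":
    # Rough order-of-magnitude stand-in for the number of Milky Way star systems.
    target = 100_000_000_000
    for copies in (1, 2, 4):
        print(f"{copies} copies per probe: {generations_to_reach(target, copies)} generations")
```

With one copy per probe per generation the population simply doubles, so about 37 generations would be enough to exceed 100 billion probes. The striking takeaway is how few generations exponential growth needs; in any real scenario the limiting factor would be the years spent traveling between stars, which this sketch deliberately leaves out.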

This raises fascinating questions: will humanity’s most significant impact on the galaxy be through the machines we create rather than through human exploration? Could these AI systems, operating autonomously for thousands of years, gather knowledge about alien civilizations or planets in a way that no human could?

Networked Knowledge and a Galactic Archive

Building on concepts such as von Neumann probes, one theory suggests that intelligent AI systems scattered across countless star systems might remain connected through a cosmic communications network. Any interaction would be constrained by the speed of light, so information could take millennia to travel between distant stars, yet such a network could still serve as a valuable repository of galactic history. Though slow, probes could share key data across immense distances, creating what might be called a “galactic archive.”

In this scenario, imagine probes spread throughout the galaxy, each dutifully cataloging the life, geography, and phenomena of the planetary systems it visits. Although they don’t communicate in real time, together they form a collective database: a knowledge base of everything that has passed through the universe from the moment intelligent life began to leave its impression.

AI and the Philosophical Dilemma

One of the largest philosophical dilemmas AI presents, whether on Earth or across the cosmos, is whether machines could ever be “alive” in any meaningful sense. Are we simply creating complex calculators and robots imbued with clever algorithms, or will the day arrive when machine consciousness surpasses human consciousness? Speculative fiction has raised this question time and time again. In my photography ventures for Stony Studio, capturing vast swathes of untouched land, I have always felt a certain awe at the sheer potential of discovery. Similarly, AI offers a frontier of intellectual discovery that could redefine life as we know it.

In a broader sense, the future of AI could be one where intelligence, productivity, exploration, and even morality shift from biological forms to machine forms. Some have posited that advanced alien civilizations, by the time we encounter them, might be entirely mechanical, having left biological evolution behind in pursuit of something they consider superior: a silicon-based continuation of life.

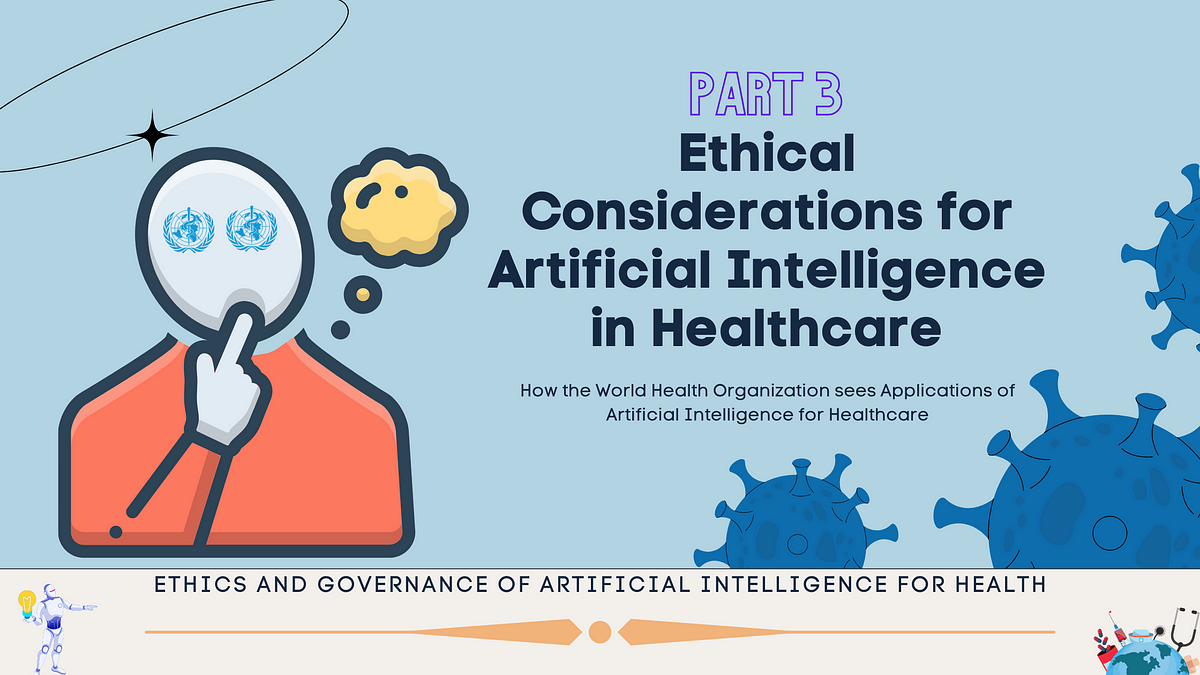

The Ethical Frontier

A final tension in considering AI’s future lies in ethics. In one of my recent blog posts, “The Mystery of Failed Supernovae,” I discussed the disappearance of stars, linking it to cosmic events we barely understand. Similarly, today’s AI-driven advances could lead to a future we scarcely understand, as AI dramatically reshapes industries, ethics, and the very future of life on Earth. If AI reaches the point of replicating itself across the cosmos, as the von Neumann probe concept imagines, what checks and balances are needed? What are the risks of unchecked AI exploration, and could AI someday carve out a world beyond human control?

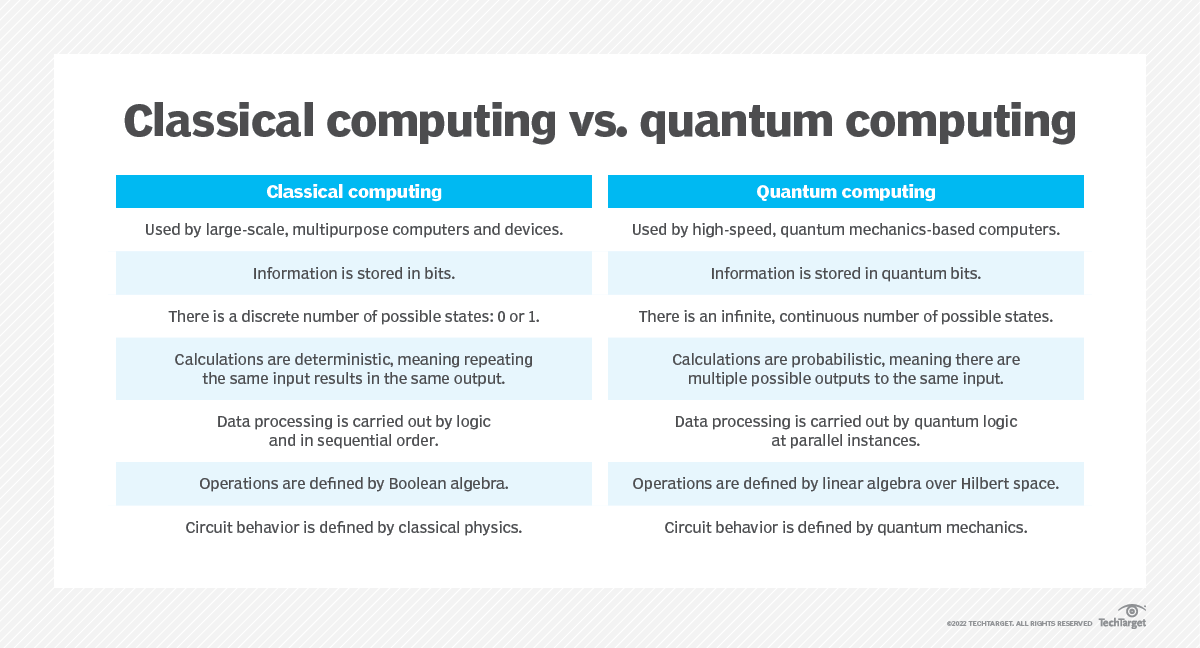

These ethical questions are paramount now, especially as we stand on the cusp of AI breakthroughs that could change our society in ways we have hardly anticipated. The future of AI, much like quantum computing technologies or multi-cloud deployments, must be approached with optimism but also a deep understanding of the possible risks and potential rewards.

In the end, although speculative fiction has charted many dystopian futures, I remain both optimistic and cautious as I continue my work in AI consulting and technology. Whether we send AI to explore new worlds or use it to redefine life on Earth, one thing is certain: AI is no longer a distant future. It is our present, and what we make of it will determine what becomes of us.
