The Future of Self-Driving Cars and AI Integration

Few areas of artificial intelligence (AI) generate as much interest and promise as self-driving cars. Machine learning algorithms, real-world data processing, and steady hardware advances have together brought us closer to a future where autonomous vehicles are commonplace. In this article, I explore the technological backbone of self-driving cars, the ethical considerations they raise, and the road ahead for AI in the automotive industry.

The Technological Backbone of Self-Driving Cars

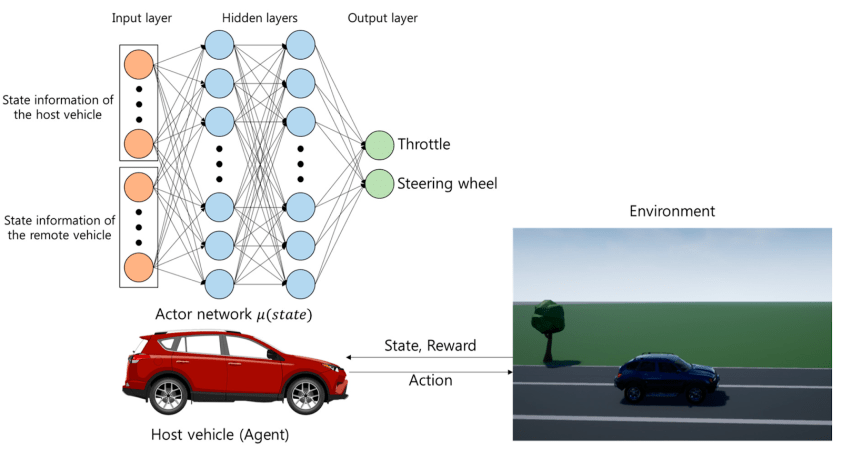

At the heart of any self-driving car system lies a sophisticated array of sensors, machine learning models, and real-time data processing units. These vehicles combine LiDAR, radar, cameras, and ultrasonic sensors to build a comprehensive picture of their surroundings.

- LiDAR: Produces high-resolution, three-dimensional maps of the environment.

- Cameras: Provide crucial visual information to recognize objects, traffic signals, and pedestrians.

- Radar: Measures the distance and speed of surrounding objects, even in adverse weather conditions.

- Ultrasonic Sensors: Detect close-range obstacles, particularly during parking maneuvers.
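
As a rough illustration of how readings from sensors like these might be combined, here is a minimal sketch of inverse-variance weighting, a textbook way to merge independent estimates of the same quantity. It is not how any particular vehicle does it: the `RangeReading` class, the `fuse_ranges` function, and the noise figures are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    """One distance estimate to the same obstacle (hypothetical structure)."""
    sensor: str        # e.g. "lidar", "radar"
    distance_m: float  # estimated distance in metres
    std_m: float       # assumed 1-sigma measurement noise in metres


def fuse_ranges(readings):
    """Combine independent range estimates by inverse-variance weighting.

    Returns (fused_distance_m, fused_std_m). Less noisy sensors get
    proportionally more weight in the result.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / (r.std_m ** 2) for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    fused_std = (1.0 / total) ** 0.5
    return fused, fused_std


# Illustrative numbers only: a precise LiDAR return and a noisier radar return.
readings = [
    RangeReading("lidar", 20.0, 0.1),
    RangeReading("radar", 20.6, 0.3),
]
fused_distance, fused_std = fuse_ranges(readings)
print(f"fused distance: {fused_distance:.2f} m (+/- {fused_std:.2f} m)")
```

The fused estimate sits closer to the LiDAR value because its assumed noise is lower, and the combined uncertainty is smaller than that of either sensor alone. Real systems typically go much further, for example by tracking objects over time, but the weighting idea is the same.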

These sensors work in harmony with advanced machine learning models. During my time at Harvard University, I focused on machine learning algorithms for self-driving robots, which gave me a solid foundation for understanding the intricacies of autonomous vehicle technology.

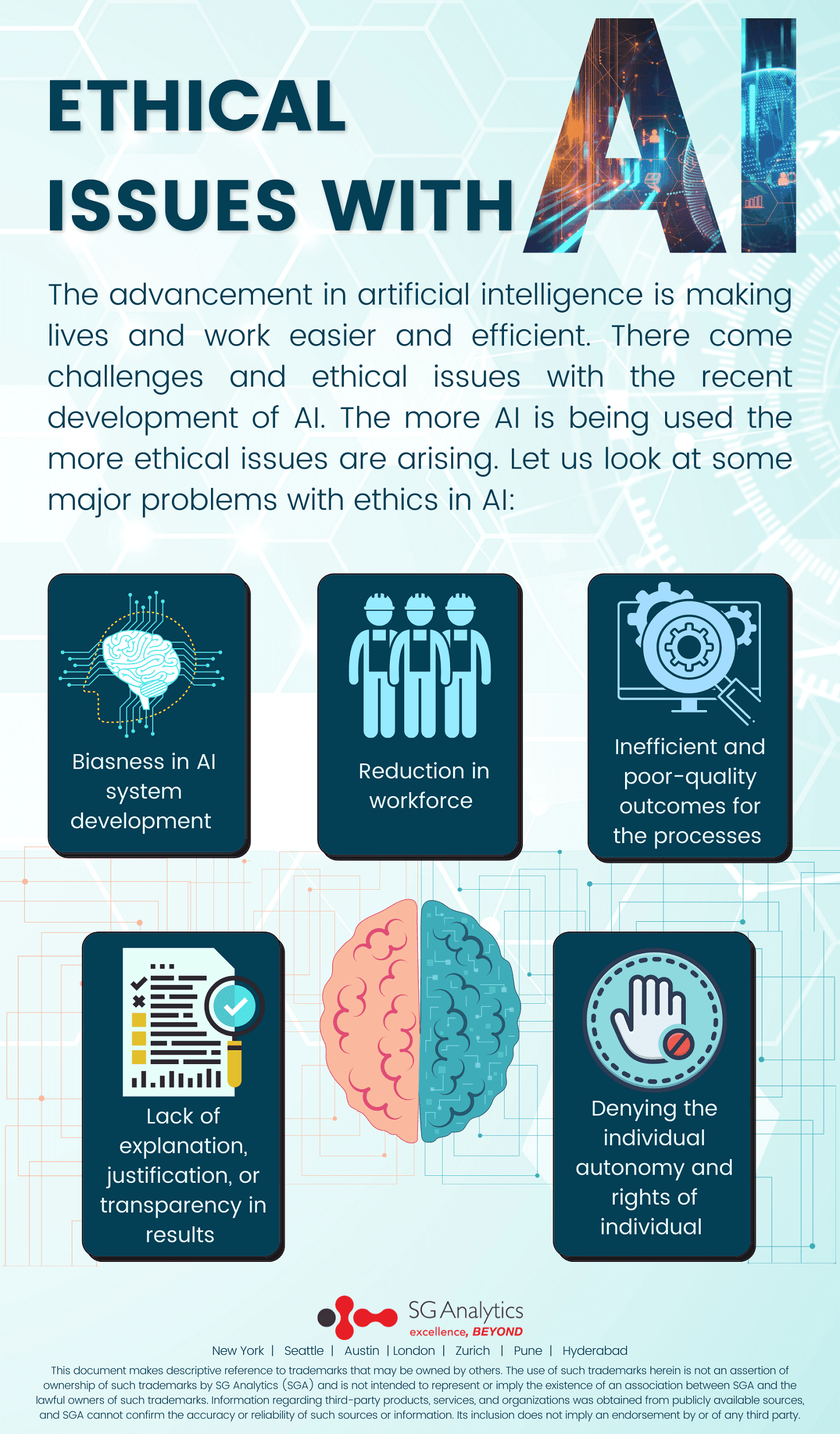

Ethical Considerations in Autonomous Driving

While the technical advancements in self-driving cars are remarkable, ethical considerations will play a major role in shaping their future. Autonomous vehicles must navigate complex moral decisions, such as choosing the lesser of two evils in unavoidable accident scenarios. The question of who bears responsibility in the event of a malfunction or accident also raises serious legal and ethical challenges.

As a lifelong learner and skeptic of dubious claims, I find it essential to scrutinize how AI is programmed to make these critical decisions. Ensuring transparency and accountability in AI algorithms is paramount for gaining public trust and fostering sustainable innovation in autonomous driving technologies.

The Road Ahead: Challenges and Opportunities

The journey towards fully autonomous vehicles is fraught with challenges but also presents numerous opportunities. As highlighted in my previous articles, "Powering AI: Navigating Energy Needs and Hiring Challenges" and "Challenges and Opportunities in Powering Artificial Intelligence", energy efficiency and a skilled workforce are critical to the successful deployment of AI-driven solutions, including self-driving cars.

- Energy Efficiency: Autonomous vehicles require enormous computational power, making energy-efficient models crucial for their scalability.

- Skilled Workforce: Developing and implementing AI systems necessitates a specialized skill set, highlighting the need for advanced training and education in AI and machine learning.
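
To make the energy efficiency point concrete, here is a back-of-the-envelope sketch of how a constant compute load could eat into an electric vehicle's range. The `range_with_compute` function is a hypothetical helper, and every number below is a placeholder chosen for easy arithmetic, not a measurement from any real vehicle.

```python
def range_with_compute(battery_kwh, drive_wh_per_km, compute_watts, avg_speed_kmh):
    """Estimate driving range in km when onboard compute draws constant power.

    Compute power (W) divided by average speed (km/h) gives the extra
    energy spent per kilometre (Wh/km), which is added to the drivetrain's
    own consumption.
    """
    if avg_speed_kmh <= 0:
        raise ValueError("average speed must be positive")
    compute_wh_per_km = compute_watts / avg_speed_kmh
    return battery_kwh * 1000.0 / (drive_wh_per_km + compute_wh_per_km)


# Hypothetical inputs: 60 kWh battery, 150 Wh/km drivetrain, 50 km/h average.
baseline_km = range_with_compute(60.0, 150.0, 0.0, 50.0)
loaded_km = range_with_compute(60.0, 150.0, 1500.0, 50.0)
print(f"no compute load: {baseline_km:.0f} km")
print(f"1.5 kW compute:  {loaded_km:.0f} km")
```

Under these assumed figures, the compute stack costs a noticeable share of range, and the penalty grows at lower average speeds because the same power draw is spread over fewer kilometres. That is the sense in which more efficient models matter for scaling.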

Regulatory frameworks and public acceptance are just as vital to the widespread adoption of self-driving cars. Governments and institutions must work together to create policies that ensure the safe and ethical deployment of these technologies.

Conclusion

The integration of AI into self-driving cars represents a significant milestone in technological evolution. Drawing on my own experience in both AI and automotive design, I see clear potential in autonomous vehicles, along with the hurdles that lie ahead. It is an exciting time for innovation, and with a collaborative approach, the dream of safe, efficient, and ethical self-driving cars can soon become a reality.

As always, staying informed and engaged with these developments is crucial. For more insights into the future of AI and its applications, continue following my blog.
