Is Superintelligence Humanity’s Greatest Tool or Its Greatest Threat?

As someone deeply involved in the AI space both professionally and academically, I’ve observed rapid developments in superintelligent systems that prompt an important question: Is superintelligence destined to be humanity’s greatest tool or its greatest existential threat? This has been a topic of intense debate among computer scientists, ethicists, and even philosophers. My own perspective aligns largely with a cautious optimism, though the nuanced realities demand a deeper look into both the potential benefits and risks associated with superintelligent AI.

What is Superintelligence?

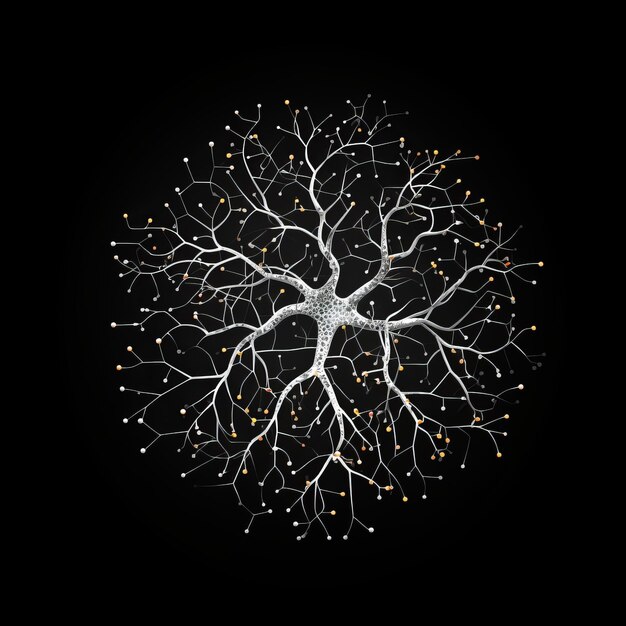

First, let’s define “superintelligence.” It refers to a form of artificial intelligence that surpasses human intelligence in every possible cognitive domain—ranging from mathematics and creativity to problem-solving and social interaction. Pioneered by thinkers like Nick Bostrom, superintelligence has been envisioned as a potential evolutionary leap, but it comes with heavy ethical and control dilemmas. Imagine an entity capable of calculating solutions to global issues such as climate change or economic inequality in seconds. The promise is alluring, but when we look at how AI is already reshaping systems, both in cloud computing and in autonomous decision-making models at my firm DBGM Consulting, Inc., we can also see reasons for concern.

Potential Benefits of Superintelligence

At its best, superintelligence could be the ultimate tool for addressing some of humanity’s deep-set challenges:

- Accelerated Scientific Discovery: AI has already proven its merit in projects like DeepMind’s AlphaFold that significantly advanced protein folding understanding, which has vast implications for medical research and drug discovery.

- Global Problem Solving: From optimizing resource allocation to creating climate change models, superintelligence could model complex systems in ways that no human brain or current technical team could ever hope to match.

- Enhanced Human Creativity: Imagine working alongside AI systems that enhance human creativity by offering instant advice in fields such as art, music, or engineering. Based on my experiences in AI workshops, I’ve seen how even today’s AI models are assisting humans in design and photography workflows, unlocking new possibilities.

It’s easy to see why a superintelligent entity could change everything. From a business standpoint, superintelligent systems could revolutionize sectors such as healthcare, finance, and environmental studies, offering profound advancements in operational efficiency and decision-making processes.

< >

>

Risks and Threats of Superintelligence

However, the spectrum of risk is equally broad. If left unchecked, superintelligence can present existential dangers that go beyond simple “AI going rogue” scenarios popularized by Hollywood. The very nature of superintelligence entails that its actions and understanding could rapidly evolve beyond human control or comprehension.

- Alignment Problem: One of the major challenges is what’s known as the “alignment problem” — ensuring that AI’s objectives sync harmoniously with human values. Misalignment, even in well-intentioned systems, could lead to catastrophic outcomes if AI interprets its objectives in unintended ways.

- Economic Displacement: While job automation is gradually shifting the workforce landscape today, a superintelligent entity could cause mass disruptions across industries, rendering human input obsolete in fields that once required expert decision-making.

- Concentration of Power: We’re already seeing the centralization of AI development in large tech companies and organizations. Imagine the competitive advantage that an organization or government could gain by monopolizing a superintelligent system. Such control could have devastating effects on global power dynamics.

These risks have been widely debated, notably in publications such as OpenAI’s explorations on industry safeguards. Additionally, my experience working with AI-driven process automation at DBGM Consulting, Inc. has shown me how unintended consequences, even on smaller scales, can have a ripple effect across systems, a point that only magnifies when we consider superintelligence.

The Tipping Point: Controlling Superintelligent Systems

Control mechanisms for superintelligence remain a billion-dollar question. Can we effectively harness a level of intelligence that, by definition, exceeds our own? Current discussions involve concepts such as:

- AI Alignment Research: Efforts are underway in technical fields to ensure that AI goals remain aligned with human ethics and survival. This branch of research seeks to solve not only simple utility tasks but complex judgment calls that require a moral understanding of human civilization.

- Regulation and Governance: Multiple tech leaders, including Elon Musk and organizations like the European Union, have called for stringent regulations on mass AI deployment. Ethical and legal standards are key to preventing an all-powerful AI from being weaponized.

- Control Architectures: Proposals, such as “oracle AI,” aim to build superintelligent systems that are capable of answering questions and making decisions but lack the agency to initiate actions outside of prescribed boundaries. This could be a safeguard in preventing an autonomous takeover of human systems.

Ethical discussions surrounding superintelligence also remind me of past philosophy topics we’ve touched on, including prior articles where we discussed quantum mechanics and string theory. The complexity of superintelligence regulation evokes similar questions about governing phenomena we barely understand—even as we push technology beyond human limitations.

< >

>

Learning from History: Technological Advances and Societal Impacts

Looking back, every technological leap—from the steam engine to the internet—came with both progress and unintended consequences. AI is no different. In probability theory, which we’ve discussed in earlier blogs, we can apply mathematical models to predict future outcomes of complex systems. However, when dealing with the unknowns of a superintelligence system, we move into a realm where probabilities become less certain. Famous astrophysicist Neil deGrasse Tyson once said, “Not only is the universe stranger than we imagine, it is stranger than we can imagine.” I would argue the same holds true for superintelligent AI.

<

>

Conclusion: A Balanced Approach to an uncertain Future

As we stand on the cusp of a potentially superintelligent future, we need to balance both opportunism and caution. Superintelligence has transformative potential, but it should not be pursued without ethical considerations or safeguards in place. I have worked hands-on with AI enough to understand both its brilliance and its limits, though superintelligence is a different playing field altogether.

Perhaps what we need most moving forward is limited autonomy for AI systems until we can ensure more robust control mechanisms. Task-driven superintelligence may become one of humanity’s most vital tools—if managed carefully. In the end, superintelligence represents not just a technological advancement but a philosophical challenge that forces us to redefine what it means to coexist with a superior intellect.

Focus Keyphrase: superintelligence risks