Can AI Ever Truly Reason? A Deep Dive Into Current Limitations and Future Potential

The debate about whether AI models can eventually develop the capability to reason like humans has been heating up in recent years. Many computer scientists believe that if we make AI models large enough and train them on ever-larger amounts of data, emergent abilities, such as reasoning, will appear. This hypothesis, while attractive, still faces significant challenges today, as I will explore in this article.

In this context, when I refer to “reasoning,” I mean the ability to follow basic laws of logic and perform simple math operations without error. Consider something as fundamental as “If pizza, then no pineapple.” Anyone can grasp a basic conditional like this, and yet AI systems still struggle to apply rules like it consistently.
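To pin down what “following basic laws of logic” means here, the pizza rule can be written as the implication P → ¬Q (pizza implies no pineapple) and checked against every combination of truth values. This is a minimal Python sketch; the function names are my own, chosen for illustration.

```python
from itertools import product

def implies(p: bool, q: bool) -> bool:
    """Material implication: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q

def rule(pizza: bool, pineapple: bool) -> bool:
    """The rule 'If pizza, then no pineapple', written as P -> not Q."""
    return implies(pizza, not pineapple)

# Enumerate every combination of truth values (a truth table).
for pizza, pineapple in product([True, False], repeat=2):
    print(f"pizza={pizza}, pineapple={pineapple}: rule holds = {rule(pizza, pineapple)}")

# Collect the situations that break the rule.
violations = [(p, q) for p, q in product([True, False], repeat=2) if not rule(p, q)]
print(violations)  # → [(True, True)]
```

Only the pizza-with-pineapple case breaks the rule. The same table also licenses the contrapositive: if there is pineapple, there is no pizza. A reasoning system has to draw inferences like that every time, not only when they resemble something it has seen before.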

Over my own career, I’ve worked extensively with artificial intelligence, machine learning algorithms, and neural networks, both at Microsoft and through my AI consultancy, DBGM Consulting, Inc. So, naturally, the question of AI’s ability to reason is something I’ve approached with both curiosity and skepticism. This skepticism has only increased in light of the recent research conducted by DeepMind and Apple, which I’ll elaborate on further in this article.

How AI Models Learn: Patterns, Not Logic

Modern AI models—such as large language models (LLMs)—are based on deep neural networks that are trained on enormous amounts of data. The most well-known examples of these neural networks include OpenAI’s GPT models. These AIs are highly adept at recognizing patterns within data and interpolating from that data to give the appearance of understanding things like language, and to some degree, mathematics.

However, this process should not be mistaken for reasoning. As researchers pointed out in a groundbreaking study from DeepMind and Apple, these AIs do not inherently understand mathematical structures, let alone logic. What’s worse is that they are prone to generating “plausible” but incorrect answers when presented with even slightly altered questions.
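A deliberately crude example makes the gap between pattern-matching and reasoning concrete. The sketch below is a bigram model: it predicts the next token purely from how often tokens followed each other in its training text. Real LLMs are transformer networks and vastly more capable, so treat this as an analogy for pattern-based prediction, not a description of how GPT models work internally. The tiny corpus is invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count, for each token, which tokens follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, prompt):
    """Return the most frequent successor of the prompt's last token, or None if unseen."""
    last = prompt.split()[-1]
    if last not in follows:
        return None
    return follows[last].most_common(1)[0][0]

# Invented training data: "4" follows "=" more often than anything else.
corpus = ["1 + 1 = 2", "2 + 2 = 4", "2 + 2 = 4", "3 + 1 = 4"]
model = train_bigram(corpus)
print(predict_next(model, "3 + 4 ="))  # → 4 (the correct sum is 7)
```

The model “answers” 4 because 4 is the most common token after “=” in its data, not because it added anything. Scale and architecture make modern models far better at this game, but better pattern completion is still not the same thing as carrying out the arithmetic.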

For example, take a simple mathematical problem asking for “the smallest integer whose square is larger than five but smaller than 17.” When I posed this question to one such large language model, its responses were garbled, suggesting numbers that didn’t meet the criteria. This happened because the AI was not using reasoning skills to reach its conclusion but instead drawing from language patterns that were close but not entirely accurate.
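The question is a good test precisely because it contains a trap. “Integer” includes negative numbers, and (-4)² = 16 falls inside the range, so the correct answer is -4, not the tempting 3. A brute-force check settles it. The search bound is an assumption of this sketch, and it is safe here because any qualifying integer must satisfy |n| < √17 < 5.

```python
def smallest_integer_with_square_between(low: int, high: int,
                                         search_bound: int = 100,
                                         allow_negative: bool = True):
    """Return the smallest integer n with low < n**2 < high, or None if none exists.

    Only integers in [-search_bound, search_bound] are checked, so search_bound
    must be at least sqrt(high) for the result to be complete.
    """
    start = -search_bound if allow_negative else 0
    candidates = [n for n in range(start, search_bound + 1) if low < n * n < high]
    return min(candidates) if candidates else None

print(smallest_integer_with_square_between(5, 17))                        # → -4
print(smallest_integer_with_square_between(5, 17, allow_negative=False))  # → 3
```

Restricting the search to non-negative numbers reproduces the intuitive but wrong answer, which shows how much the result depends on applying the definition of “integer” rather than the most familiar pattern.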

Emergent Abilities: The Promise and the Problem

There’s a strong belief in the AI field that as AI models grow larger, they begin to demonstrate what are called “emergent abilities”—capabilities the models weren’t explicitly taught but somehow develop once they reach a certain size. For instance, we have seen models learn to unscramble words or improve their geographic mapping abilities. Some computer scientists argue that logic and reasoning will also emerge if we keep scaling up the models.

However, the DeepMind and Apple study found that current models falter on simple grade-school math questions, particularly when the questions are altered by changing names, introducing distractions, or varying numerical values. This suggests that the models rely more on memorization than on true reasoning. They excel at spotting patterns but struggle when asked to apply those “rules” to fresh, unseen problems.
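The kind of perturbation described above can be sketched as a question template whose name and numbers are filled in at random, so every variant carries a computed ground-truth answer. The template, names, distractor sentence, and `naive_solver` below are hypothetical illustrations, not the study’s actual benchmark or code.

```python
import random
import re

# Hypothetical template: the name and numbers are placeholders, so each variant has a known answer.
TEMPLATE = ("{name} picks {a} apples on Monday and {b} apples on Tuesday. "
            "{distractor}How many apples does {name} have?")
NAMES = ["Sophie", "Liam", "Aisha", "Kenji"]  # illustrative names only
DISTRACTOR = "Of these, 5 are slightly smaller than average. "  # irrelevant to the answer

def make_variant(rng: random.Random, add_distractor: bool = False):
    """Return (question, correct_answer) for one randomized instance."""
    name = rng.choice(NAMES)
    a, b = rng.randint(2, 40), rng.randint(2, 40)
    question = TEMPLATE.format(name=name, a=a, b=b,
                               distractor=DISTRACTOR if add_distractor else "")
    return question, a + b  # ground truth is computed, not looked up

def accuracy(model, n: int = 100, add_distractor: bool = False, seed: int = 0) -> float:
    """Fraction of n variants that `model` (any callable str -> int) answers correctly."""
    rng = random.Random(seed)
    variants = [make_variant(rng, add_distractor) for _ in range(n)]
    return sum(model(q) == answer for q, answer in variants) / n

def naive_solver(question: str) -> int:
    """Hypothetical pattern-matcher: adds up every number that appears in the question."""
    return sum(int(x) for x in re.findall(r"\d+", question))

print(accuracy(naive_solver))                       # → 1.0
print(accuracy(naive_solver, add_distractor=True))  # → 0.0
```

The naive solver scores perfectly on the clean variants and fails every one once an irrelevant sentence is added. That is the signature of a method that matches surface patterns instead of modeling the problem.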

Where Do We Go From Here? The Future of AI and Reasoning

So, why do today’s AIs struggle with reasoning, especially when placed in contexts requiring logical or mathematical accuracy? A significant reason lies in the limitations of language as a tool for teaching logic. Human languages are incredibly nuanced, ambiguous, and fraught with exceptions—none of which are conducive to the sort of unambiguous conclusions logic demands.
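Everyday “or” is a small example of that ambiguity. “You can have soup or salad” might allow both (inclusive or) or exactly one (exclusive or). Logic forces a choice, and the two readings disagree in exactly one case. The soup-and-salad sentence is my own illustration.

```python
from itertools import product

def inclusive_or(p: bool, q: bool) -> bool:
    """True if either or both are true."""
    return p or q

def exclusive_or(p: bool, q: bool) -> bool:
    """True if exactly one is true."""
    return p != q

# Compare both readings of "soup or salad" across every combination.
for soup, salad in product([True, False], repeat=2):
    print(f"soup={soup}, salad={salad}: inclusive={inclusive_or(soup, salad)}, "
          f"exclusive={exclusive_or(soup, salad)}")

disagreements = [(p, q) for p, q in product([True, False], repeat=2)
                 if inclusive_or(p, q) != exclusive_or(p, q)]
print(disagreements)  # → [(True, True)]
```

A model that learns “or” from text can see both readings used interchangeably, so the statistics it absorbs do not by themselves settle which rule applies.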

If we want to build AI systems that genuinely understand reasoning, I believe that integrating structured environments like physics simulations and even fundamental mathematics could help. AI models need to get a better grasp of the physical world’s rules because reality itself obeys the principles of logic. In my experience, developing machine learning models for robotics and AI tends to tie in well with physics, engineering, and mathematical rule sets. The more exposure LLMs get to these structured forms of knowledge, the more likely they are to develop at least partial reasoning abilities.
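To make the idea of a structured environment concrete, here is a hypothetical sketch of how even a tiny physics simulation can supply problems whose answers are fixed by physical law rather than by phrasing. The free-fall setup, the 1% tolerance, and the function names are my own assumptions for illustration; air resistance is ignored.

```python
import math

G = 9.81  # m/s^2; standard gravity, air resistance ignored (simplifying assumption)

def simulate_fall(height_m: float, dt: float = 1e-4) -> float:
    """Step a dropped object forward in time until it reaches the ground; return elapsed seconds."""
    y, v, t = height_m, 0.0, 0.0
    while y > 0:
        v += G * dt  # gravity increases downward speed
        y -= v * dt  # position updated with the new speed (semi-implicit Euler)
        t += dt
    return t

def fall_time(height_m: float) -> float:
    """Closed-form answer from kinematics: t = sqrt(2h / g)."""
    return math.sqrt(2 * height_m / G)

def check_answer(model_answer: float, height_m: float, rel_tol: float = 0.01) -> bool:
    """Grade a (hypothetical) model's answer against the simulated ground truth."""
    return math.isclose(model_answer, simulate_fall(height_m), rel_tol=rel_tol)

question = "A ball is dropped from rest at 20 m. How many seconds until it lands?"
print(round(simulate_fall(20), 2), round(fall_time(20), 2))  # both ≈ 2.02
print(check_answer(2.02, 20), check_answer(3.0, 20))
```

Because the simulator and the closed-form formula agree, any answer a model produces can be graded exactly, however the question is reworded.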

At DBGM Consulting, my focus has long been on applying AI where it can improve automation processes, build smarter algorithms, and enhance productivity in cloud solutions. But this question of reasoning is crucial because AI without proper reasoning functions can pose real-world dangers. Consider examples like autonomous vehicles or AI systems controlling vital infrastructure—failure to make logical decisions could have catastrophic outcomes.

Real-World Applications that Require Reasoning

Beyond the hypothetical, there are several domains where reasoning AI could deliver great benefits, or where its absence could pose significant risks:

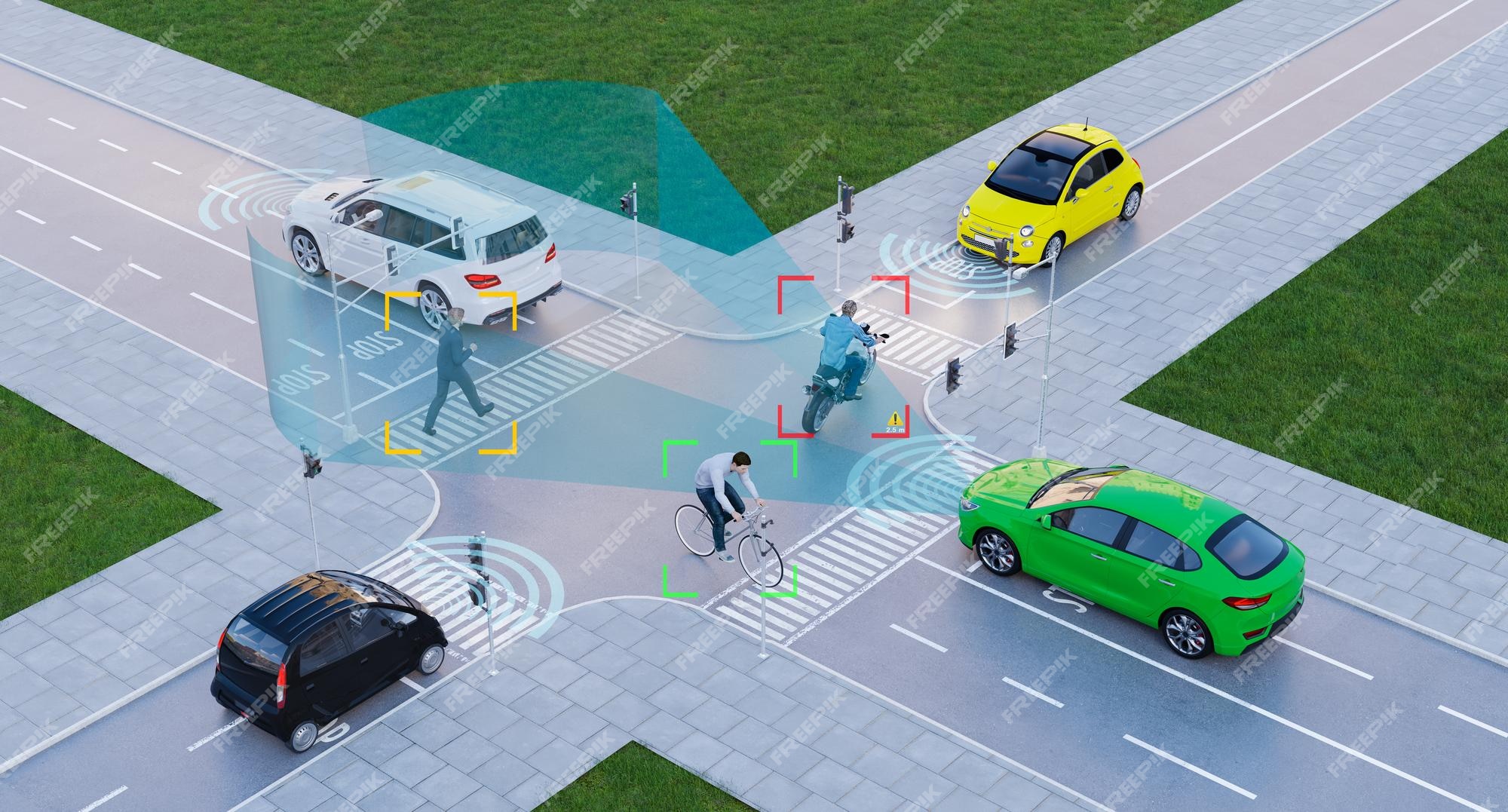

1. **Autonomous Vehicles**: As most AI enthusiasts know, vehicle autonomy relies heavily on AI making split-second decisions that obey logic, but current systems largely depend on pattern recognition rather than sound reasoning.

2. **AI in Governance and Military**: Imagine policymakers using AI systems to make decisions on diplomacy or warfare. A lack of reasoning here could escalate conflicts or lead to poor outcomes based on incorrect assumptions.

3. **Supply Chains and Automation**: If AI manages complex logistics or automation tasks, its calculations need to be precise. Today’s AI, by contrast, still struggles to get basic results right when the context of a problem changes.

While AI has seen successful applications, from chatbots to personalized services, it still cannot replace human reasoning, especially in complex, multi-variable environments.

Tying Back to Generative Models and GANs: Will They Help?

In a previous article on generative adversarial networks (GANs), I discussed their ability to generate outputs that are creatively compelling. However, GANs operate in a fundamentally different manner from systems rooted in logic and reason. While GANs provide the appearance of intelligence by mimicking complex patterns, they are far from being “thinking” entities. The current limitations of GANs highlight how pattern generation alone—no matter how advanced—cannot entirely capture the intricacies of logical reasoning. Therefore, while GAN technology continues to evolve, it will not solve the reasoning problem on its own.
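The standard GAN objective, from the original formulation by Goodfellow et al. (2014), shows why. A generator G maps random noise z to samples, a discriminator D estimates the probability that a sample came from the real data, and the two play a minimax game:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]$$

In the idealized analysis, this game reaches its optimum when the generator’s distribution matches $p_{\text{data}}$. Nothing in the objective rewards logical consistency beyond whatever patterns the training data already contains.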

Conclusion: What’s Next for AI and Human-Like Reasoning?

It’s clear that, as impressive as AI has become, we are a long way from AI systems that can reason as humans do. For those of us invested in the future of AI, like myself, there remains cautious optimism. Someday, we might build AI systems capable of more than what they can learn from patterns. But until then, whether advising governments or simply calculating how much pineapple to put on a pizza, AI models must develop a better understanding of fundamental logic and reasoning, a challenge that researchers will continue grappling with in the years to come.