The Vanishing Night: How Light Pollution is Erasing Our Stars

For millennia, humanity has looked to the night sky for guidance, inspiration, and scientific discovery. The constellations have been our maps, our myths, and our muses. But today, that sky is fading—not because the stars are disappearing, but because we are drowning them in artificial light.

The Changing Night Sky

Light pollution is an insidious and rapidly growing problem. Unlike other forms of pollution that require dedicated cleanup efforts, restoring a dark sky would take nothing more than turning off our lights. Yet, in most places, that remains an unthinkable act.

The 1994 Northridge earthquake in Los Angeles demonstrated this strikingly. When power outages temporarily plunged the city into darkness, many residents saw the Milky Way for the first time; some were so shocked they called observatories to ask about the “giant silver cloud” above them. This momentary glimpse of an unpolluted sky highlights how much we have already lost.

From 2011 to 2022, the brightness of the artificial night sky increased by an astonishing 10% or so per year, which compounds to more than doubling over that period. What you remember seeing as a child is no longer what you see today.
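Growth of about 10% a year compounds quickly. This is a simplified model: it assumes a steady rate $r = 0.10$, although the measured rate varied from year to year. Take $B_0$ as the 2011 sky brightness and $t$ as the number of years elapsed. The arithmetic then runs as follows:

$$
\begin{aligned}
B(t) &= B_0\,(1 + r)^t, \qquad r = 0.10 \\
\frac{B(11)}{B_0} &= 1.10^{11} \approx 2.85 \\
t_{\text{double}} &= \frac{\ln 2}{\ln 1.10} \approx \frac{0.693}{0.0953} \approx 7.3 \text{ years}
\end{aligned}
$$

At that pace the sky doubles in brightness roughly every seven years. Over the eleven years from 2011 to 2022 it ends up well over twice as bright.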

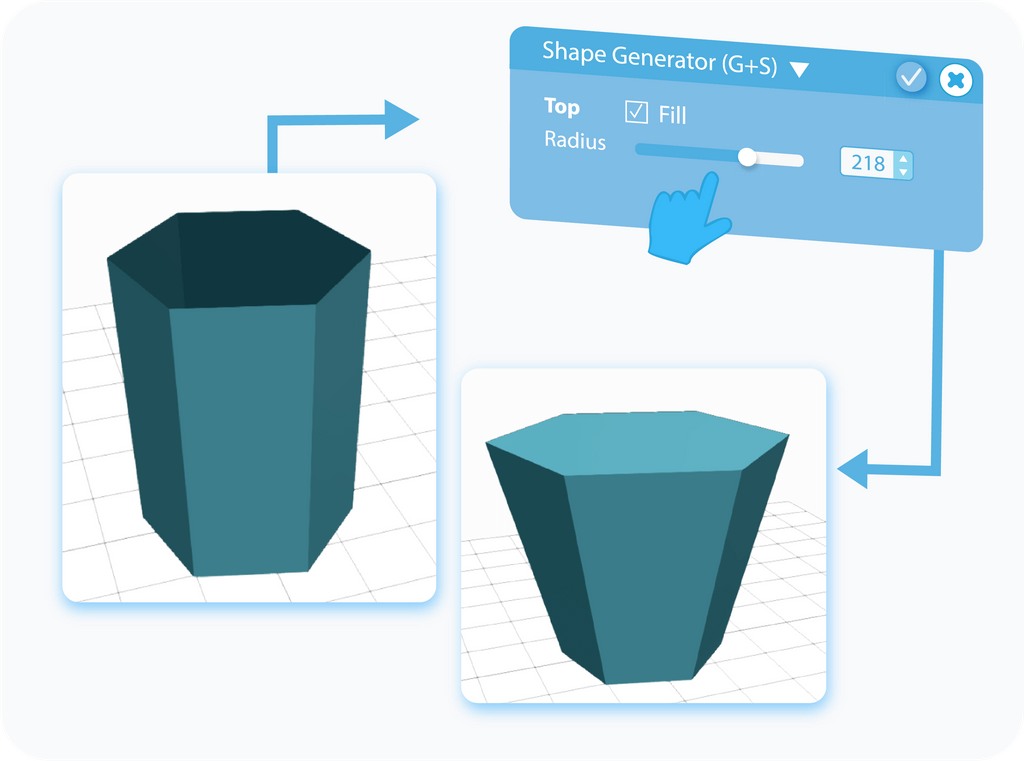

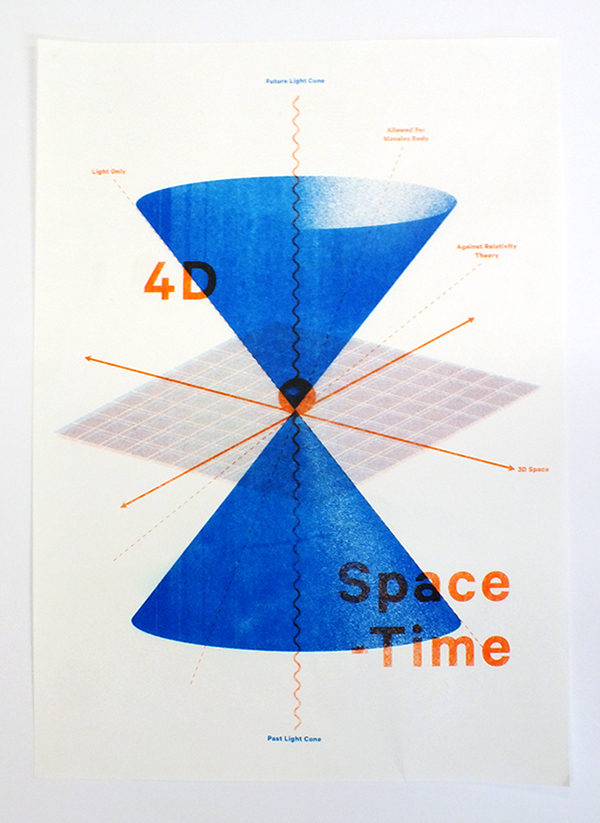

The Bortle Scale: Measuring the Loss

The quality of the night sky is rated on the Bortle Scale, which ranks darkness from 1 (the darkest skies) to 9 (the brightest, most light-polluted):

- Bortle 9 (Inner-city sky): Only about 100 of the brightest stars are visible.

- Bortle 7 (Urban areas): The Orion Nebula is barely discernible.

- Bortle 3 (Rural areas): The Andromeda Galaxy becomes visible, along with deeper star clusters.

- Bortle 1 (Truly dark skies): Thousands of stars appear, constellations seem buried within a sea of points, and even the zodiacal light (sunlight scattered by interplanetary dust) becomes visible.

For the majority of people living in urban areas, a truly dark sky is no longer something they will ever experience without traveling hours away from civilization.

Image: [1, Light pollution over a city at night]

The Harm Caused by Light Pollution

Scientific Research at Stake

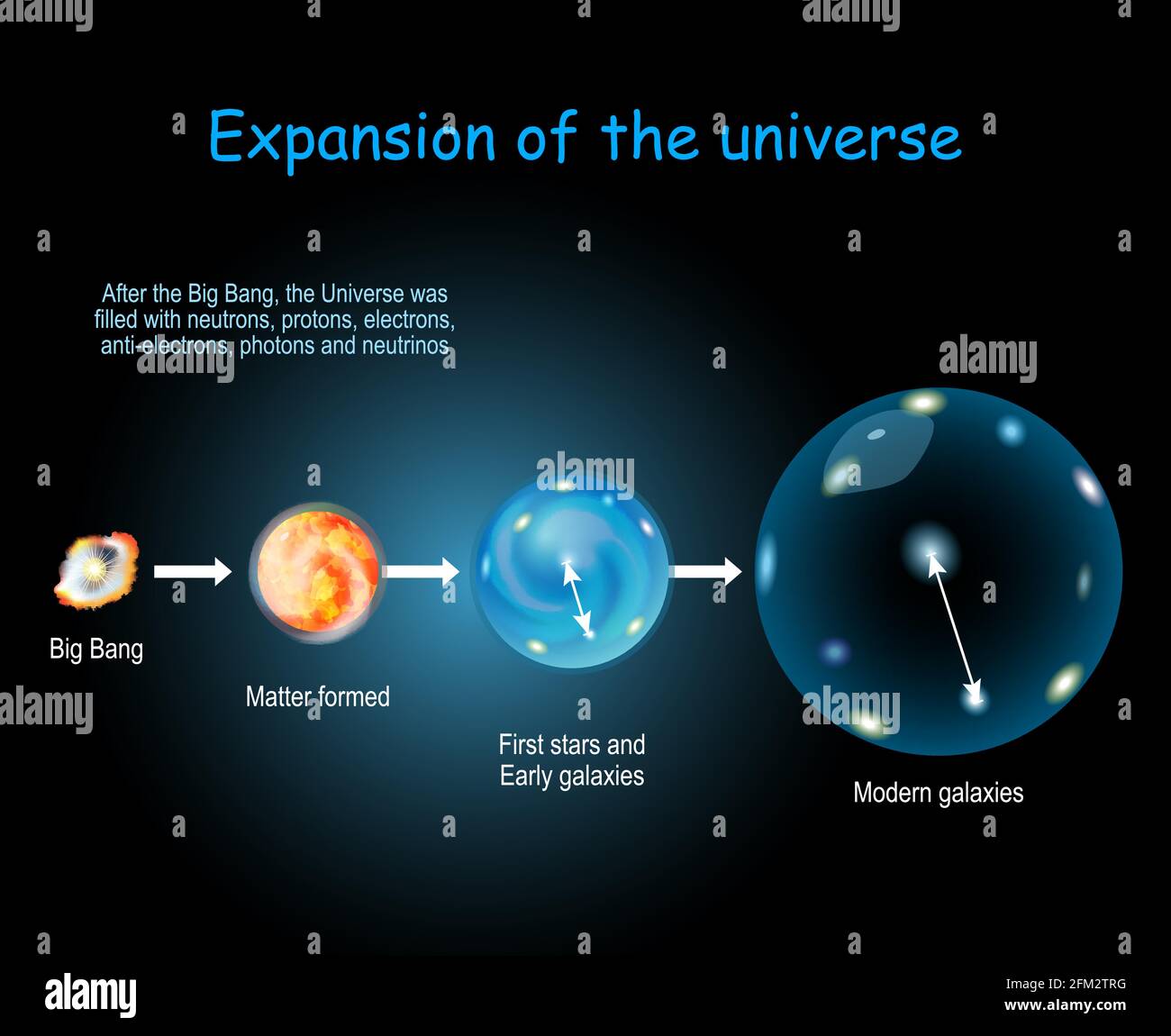

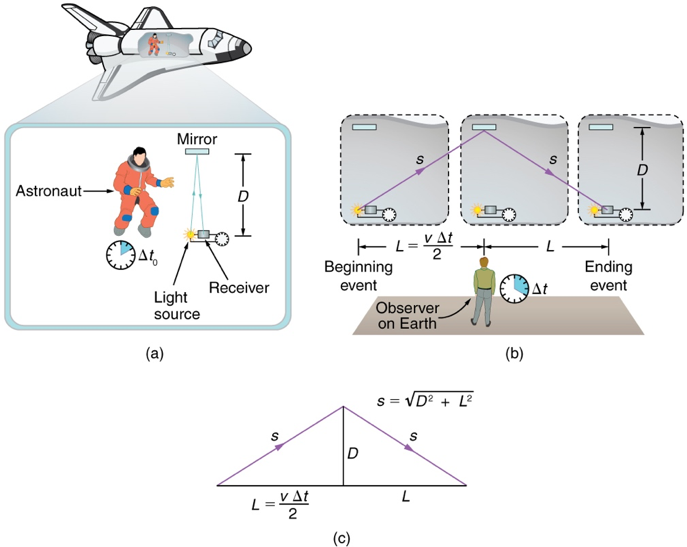

Light pollution is more than an aesthetic loss; it disrupts scientific discovery. Modern astronomers rely on dark skies to detect faint galaxies and exoplanets. They also rely on quiet radio environments to pick up subtle signals such as the cosmic microwave background, the remnant radiation of the Big Bang, which was itself discovered by accident as persistent, unexplained noise in a radio antenna.

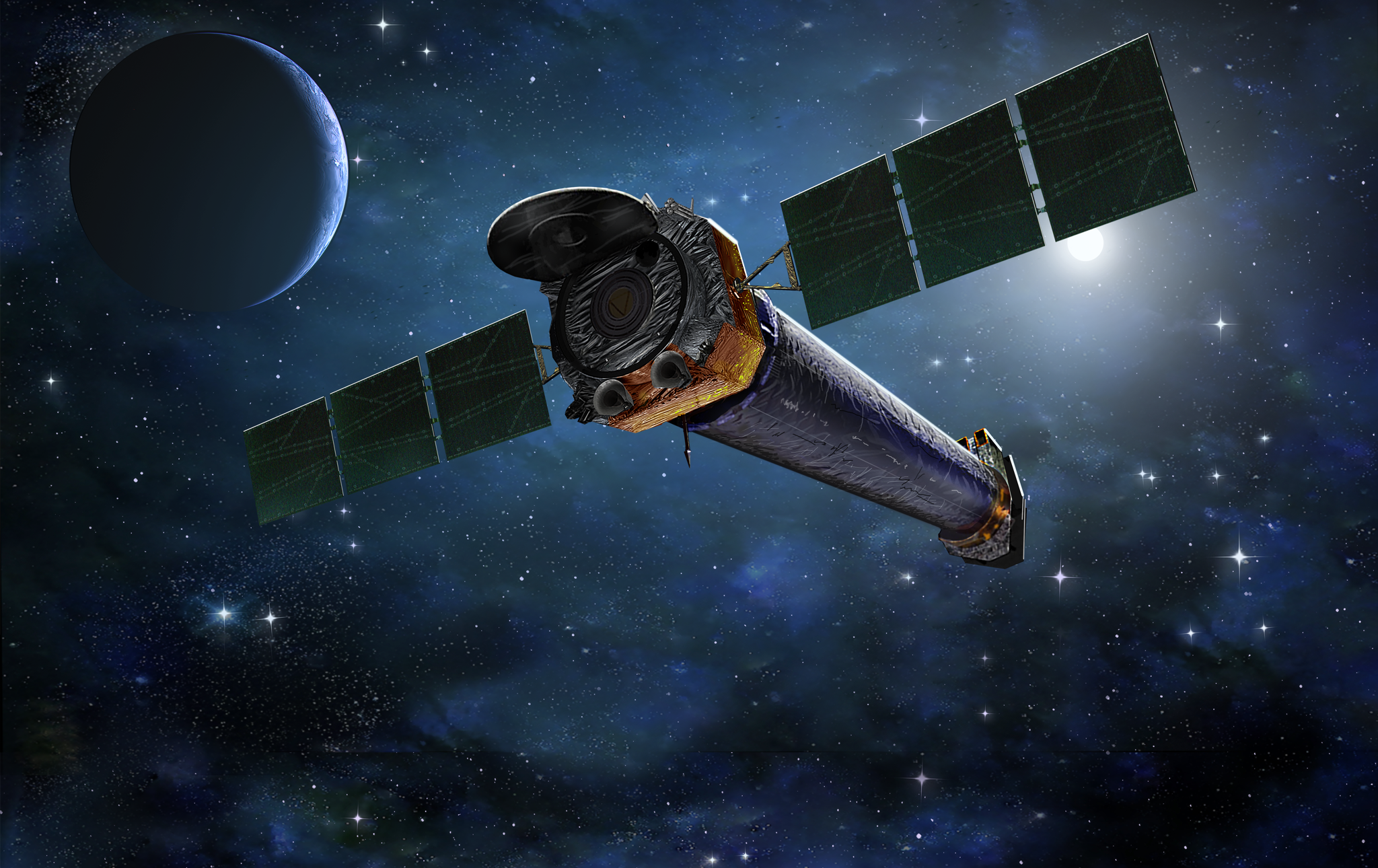

With the rise of bright LED lighting and an exponential increase in satellites cluttering low Earth orbit, telescopes worldwide are struggling to get clear readings. Astrophotographs are frequently ruined by bright streaks from passing satellites, and the soft glow of artificial light washing over telescope domes reduces the contrast necessary to detect distant celestial bodies.

The Impact on Human Health

The human body evolved under the natural cycle of day and night, and artificial lighting disrupts that balance. Exposure to bright light at night suppresses melatonin production, shifts sleep schedules, and disrupts circadian rhythms. The effect is strongest with the short-wavelength blue light that many white LEDs emit. These disruptions have been linked to higher risks of metabolic disorders, mood disturbances, and even some cancers.

Our eyes are affected as well. Humans possess two types of vision: photopic (daylight, color vision from cone cells) and scotopic (low-light, monochrome vision from rod cells). Surrounded by constant artificial brightness, our eyes seldom get the chance to fully adapt to the dark and rely on scotopic vision. As a result, a generation is growing up that has rarely, if ever, experienced true darkness.

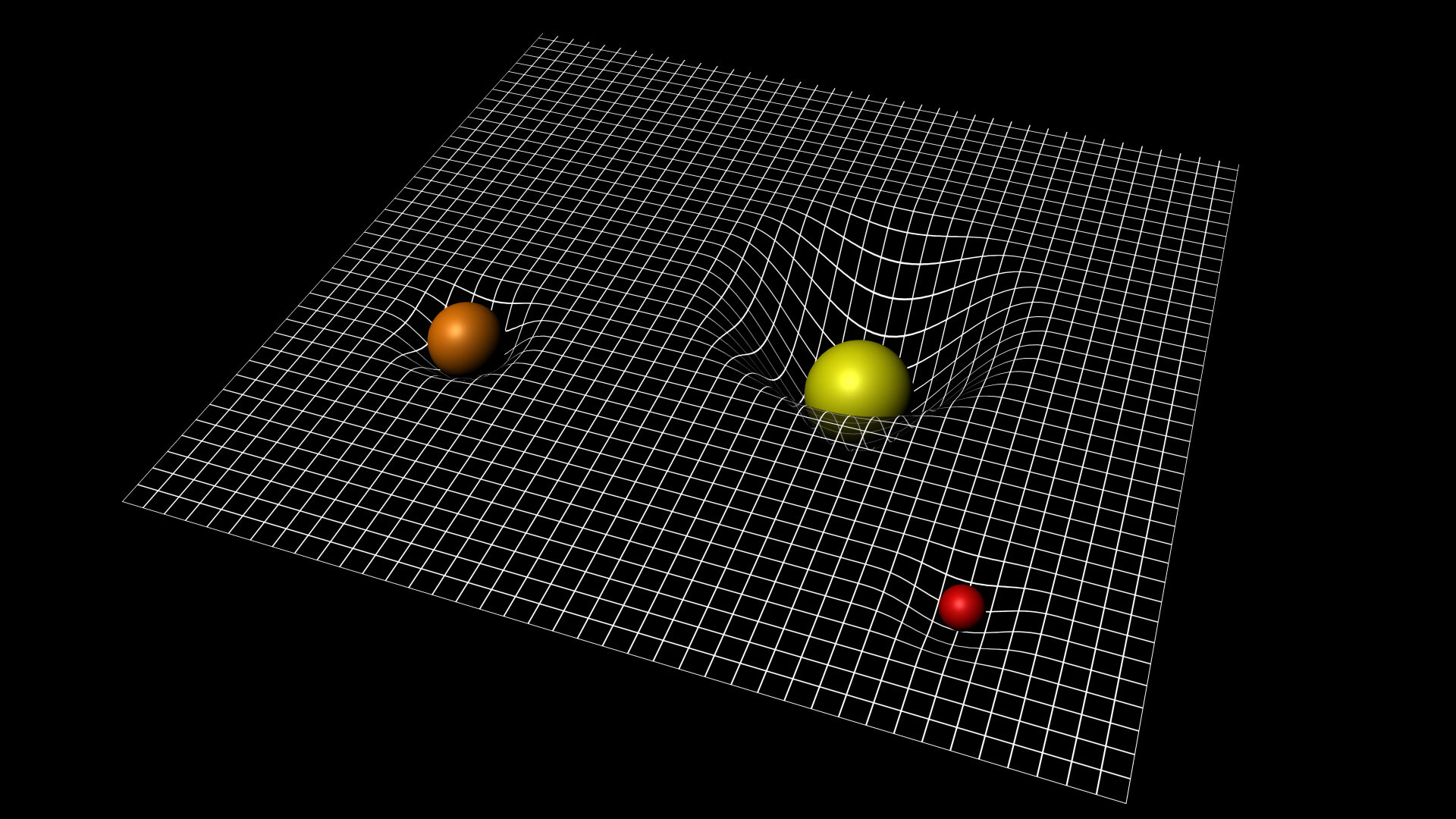

Ecological Consequences

Artificial light disrupts wildlife behavior, interfering with migration, hunting, and reproductive cycles.

- Sea turtle hatchlings find the ocean by heading toward the brighter horizon over the water. Bright urban lights now draw them the wrong way, and some crawl inland, leading to needless deaths.

- Birds that migrate at night become disoriented by brightly lit buildings and often collide with them.

- Fireflies—whose bioluminescent mating signals are drowned out by artificial lights—are facing dramatic population declines.

- Even trees are affected, with urban lights tricking them into keeping their leaves too long, preventing proper seasonal adaptation.

Simply put, life on Earth evolved under nights lit only by the moon and stars, and artificial lighting has abruptly altered that cycle.

Image: [2, Sea turtle hatchlings disoriented by city lights]

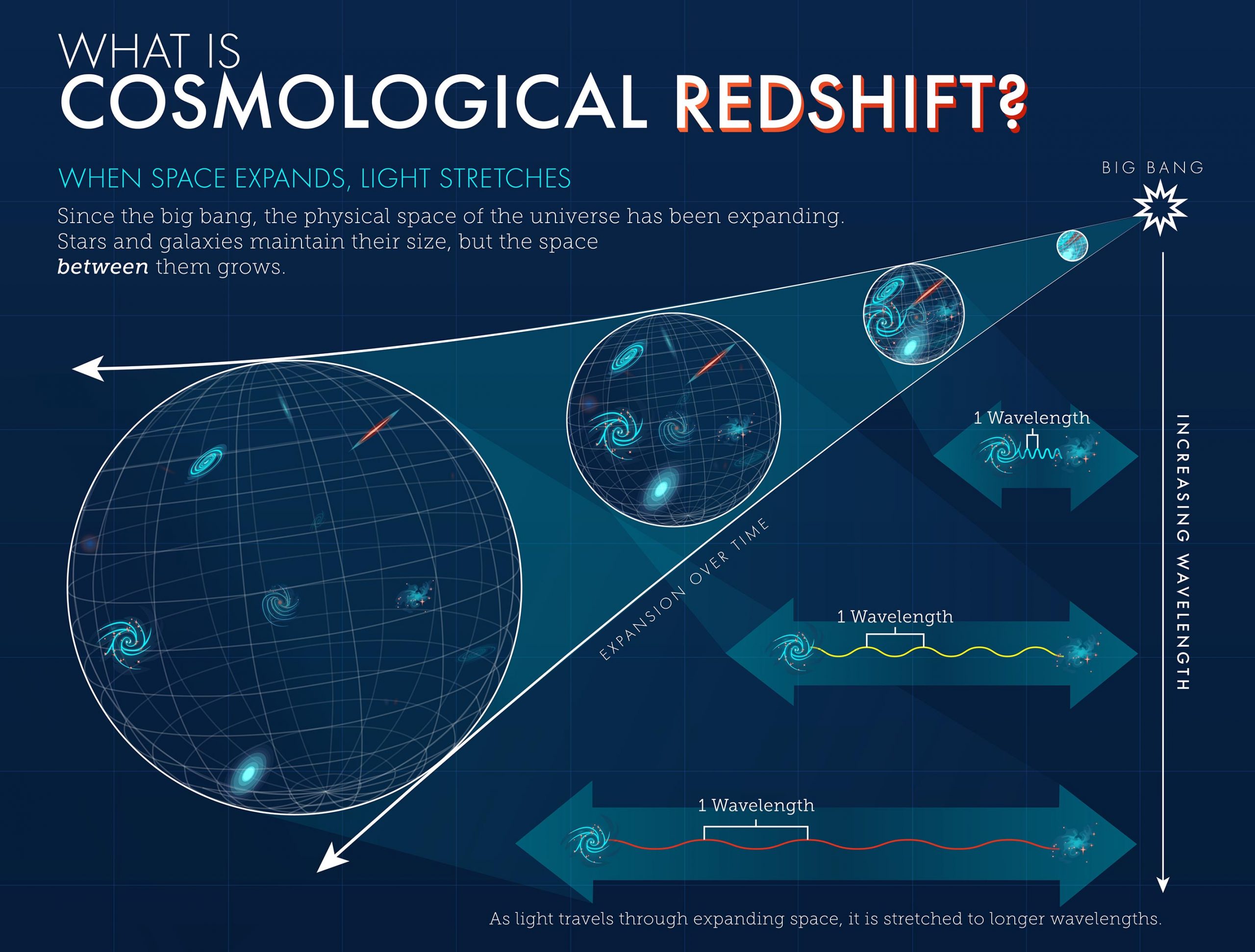

The New Enemy: Space-Based Light Pollution

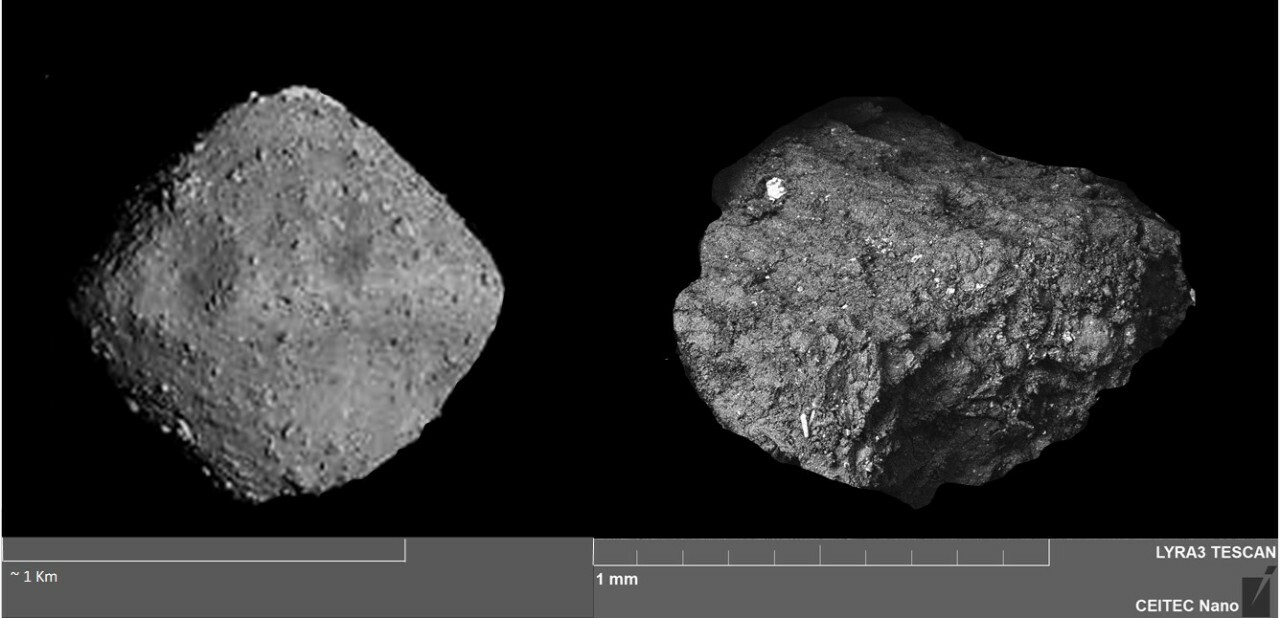

For decades, artificial lighting on the ground was the primary issue. But now a newer threat is rising over dark skies: satellites.

Since 2018, SpaceX alone has launched nearly 7,000 satellites, more than doubling all existing satellites in orbit. By some estimates, over 100,000 satellites could be launched in the next decade.

These objects pose multiple problems:

- They add to skyglow: sunlight reflecting off thousands of objects, most too faint to see individually, adds a diffuse brightness across the whole sky.

- They appear as streaks in telescope images, rendering many scientific observations useless.

- Their radio transmissions interfere with radio astronomy, making the search for faint cosmic signals more difficult.

Some of the most intriguing astronomical detections might go unnoticed in today’s crowded electromagnetic environment. One example is the Wow! signal, a mysterious radio burst detected in 1977 that some speculate could be extraterrestrial.

This is why radio observatories such as the Square Kilometre Array are being built in sparsely populated parts of South Africa and Australia to minimize interference. But as satellite constellations expand, even isolated locations may no longer offer escape from human-made signals.

Image: [3, Starlink satellites streaking across the night sky]

What Can Be Done?

Revisiting Our Relationship with Light

The good news? Light pollution is one of the easiest environmental issues to solve. The solutions are simple and cost-effective:

- Reduce unnecessary outdoor lighting by turning lights off when not absolutely needed.

- Use warm-colored LEDs (3000K or lower) instead of harsh bluish-white lights.

- Install shielded lighting that directs light downward, preventing excess scattering into the sky.

- Implement smart lighting policies in cities, where streetlights dim during off-peak hours.

Many regions have already begun adopting “Dark Sky” initiatives, enforcing responsible lighting ordinances to preserve views of the cosmos. But more aggressive global action is necessary to counteract the growing impact of artificial constellations in low Earth orbit.

The Night Sky of the Future

As we look ahead, the night sky will keep changing, in some ways naturally and in others through our own doing. Over the coming millennia and far beyond:

- In roughly 12,000 years, the slow wobble (precession) of Earth’s axis will make Vega, not Polaris, the approximate North Star.

- In 100,000 years, familiar constellations will be noticeably distorted as each star’s own motion through the galaxy carries it to a new position.

- In roughly 4 to 5 billion years, the Andromeda Galaxy may collide with the Milky Way, eventually merging the two into a single galaxy.

- In over 100 trillion years, star formation will cease and the last stars will fade. The universe will slide into a dark era of stellar remnants in which, far later still, only black holes will remain.

For now, we cannot stop the cosmic dance, but we can slow the artificial brightening of our skies. If we do nothing, the fading of the stars will be humanity’s first step toward disconnecting from the universe itself.

Final Thought

The loss of the night sky is not inevitable. It is a choice. If we wish to preserve our window to the past—and our inspiration for the future—it falls upon us to rekindle the darkness. The stars are waiting. We just have to let them shine.
