Blending Unreal Engine’s C++ and Blueprints for Optimal Game Development

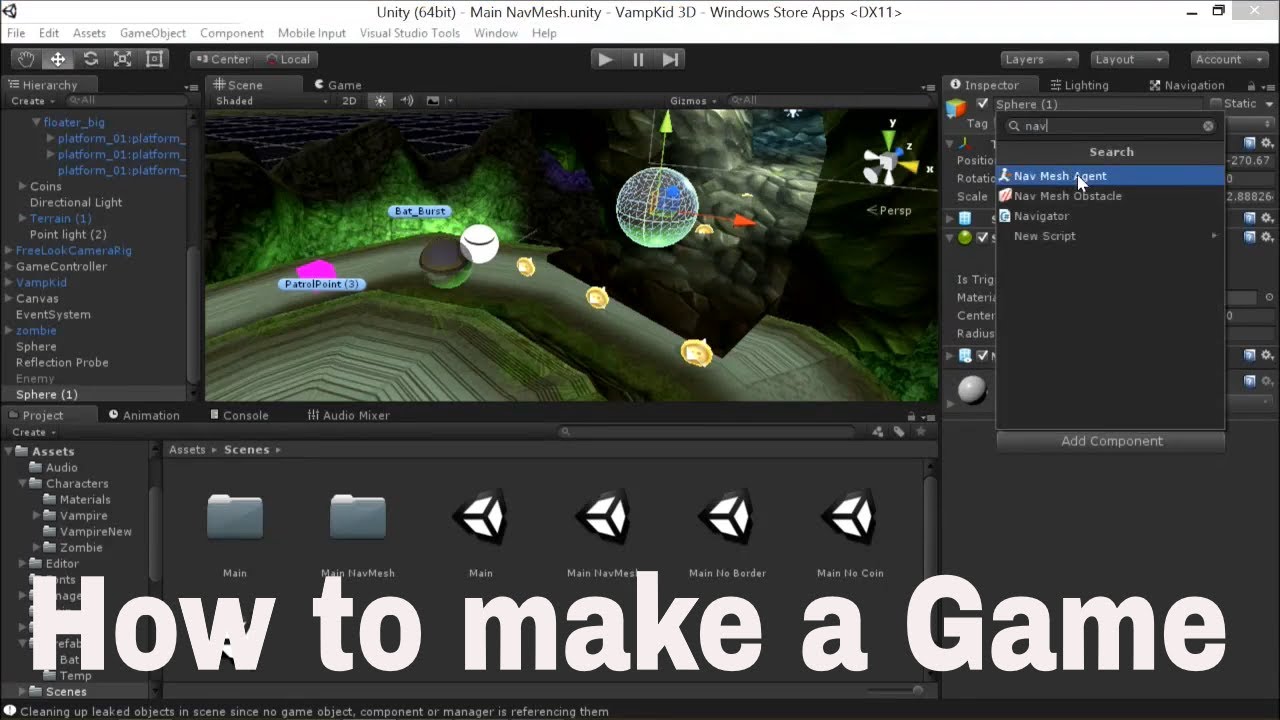

As a newcomer to game development, I have found working with Unreal Engine both exhilarating and challenging. At first I leaned heavily on Blueprints, Unreal’s visual scripting system, because it is accessible and easy to use. As my project grew, however, I ran into limitations, particularly when implementing complex mathematical functions such as damage calculations that account for armor or magic resistance. That prompted me to rethink the balance between Blueprints and traditional C++ code for performance-intensive tasks.

The Case for Blueprints

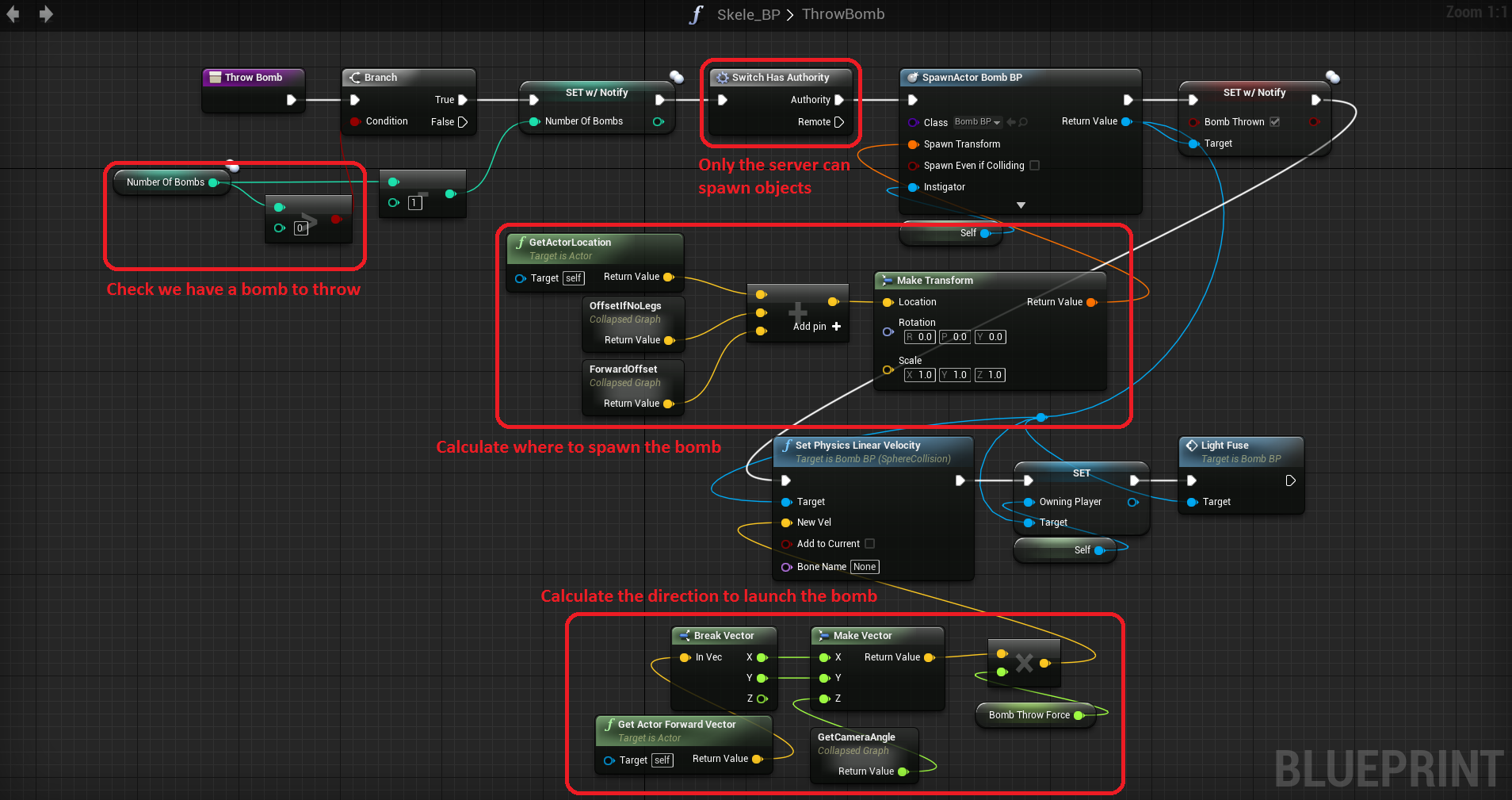

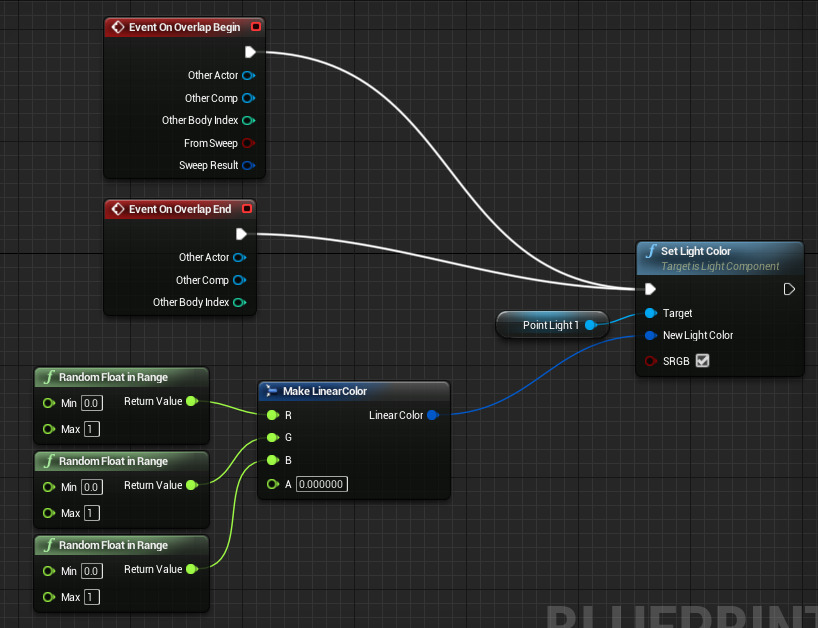

Blueprints stand out for their user-friendly design, allowing developers to visually script gameplay elements without deep programming knowledge. This accessibility accelerates the initial development phase, enabling rapid prototyping and iteration of game mechanics. For many scenarios, particularly those not heavily reliant on complex calculations, Blueprints provide sufficient power and flexibility.

When to Consider C++

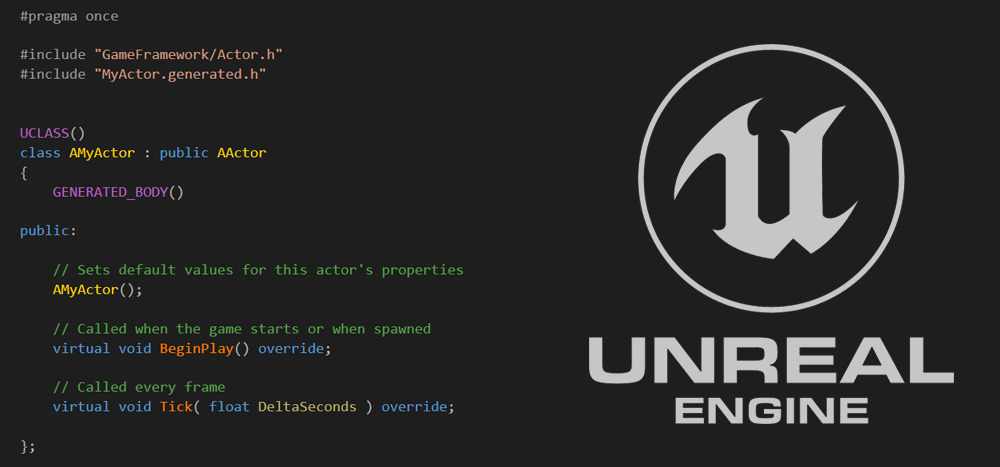

Despite the advantages of Blueprints, C++ is the better choice for optimization and resource-intensive processes. Blueprint graphs are compiled to bytecode that runs on Unreal’s Blueprint virtual machine, which adds overhead to every node executed, while C++ is compiled to native machine code. That difference matters most for work that runs frequently or involves heavy computation, such as intricate math functions and AI calculations. C++ also gives access to engine functionality that is not exposed to Blueprints, which means greater control over game mechanics.
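Damage calculations that account for armor or magic resistance are a good example of logic worth moving into C++. Below is a minimal, engine-independent sketch in standard C++ (so it compiles without Unreal). `EDamageType`, `FDefenderStats`, and `CalculateDamage` are hypothetical names, and the mitigation formula `Raw * 100 / (100 + Resistance)` is an assumption borrowed from a convention common in RPG-style games, not something Unreal provides.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical damage categories; each one is reduced by a different stat.
enum class EDamageType { Physical, Magical, True };

// Hypothetical defender stats consulted by the mitigation formula.
struct FDefenderStats {
    float Armor = 0.0f;           // reduces Physical damage
    float MagicResistance = 0.0f; // reduces Magical damage
};

// Assumed formula: final = raw * 100 / (100 + resistance).
// Negative resistance is clamped to 0 here to keep the sketch simple.
float CalculateDamage(float RawDamage, EDamageType Type, const FDefenderStats& Defender)
{
    assert(RawDamage >= 0.0f && "raw damage should be non-negative");

    float Resistance = 0.0f;
    switch (Type) {
        case EDamageType::Physical: Resistance = Defender.Armor; break;
        case EDamageType::Magical:  Resistance = Defender.MagicResistance; break;
        case EDamageType::True:     Resistance = 0.0f; break; // ignores defenses
    }
    Resistance = std::max(Resistance, 0.0f);
    return RawDamage * 100.0f / (100.0f + Resistance);
}
```

For a defender with 100 armor and 25 magic resistance, a 200-point physical hit comes out at 100, the same hit as magic damage comes out at 160, and true damage stays at 200.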

Combining the Best of Both Worlds

Combining Blueprints and C++ in a single Unreal Engine project lets each play to its strengths. Core gameplay mechanics, especially those involving complex mathematics or performance-critical systems, can be written in C++ for speed. Blueprints can then handle higher-level game logic and event handling, where rapid iteration and creative flexibility matter more than raw speed. This split also suits teams with varying levels of programming expertise: programmers maintain the C++ systems while designers build on top of them in Blueprints.

Practical Integration Strategy

1. Core Mechanics in C++: Implement foundational and performance-critical systems in C++, where native execution speed pays off most.

2. Blueprints for Game Logic: Use Blueprints for designing game rules, UI interactions, and non-critical game mechanics, taking advantage of their visual nature for quick adjustments.

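3. Data Communication: Expose C++ functions and data to Blueprints through Unreal Engine’s reflection system, using specifiers such as `UFUNCTION(BlueprintCallable)` and `UPROPERTY(BlueprintReadWrite)`, so Blueprints can call into C++ systems and read or modify their state.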
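The strategy above can be sketched as a small Blueprint function library. This is a hypothetical header that only compiles inside an Unreal Engine C++ module (the `*.generated.h` include is produced by Unreal Header Tool during the build), so treat it as an illustration rather than standalone code. `UDamageMathLibrary`, `FDefenderStats`, and `CalculatePhysicalDamage` are hypothetical names, and the formula `RawDamage * 100 / (100 + Armor)` is an assumed convention, not an engine feature.

```cpp
// DamageMathLibrary.h (hypothetical file in a hypothetical game module)
#pragma once

#include "CoreMinimal.h"
#include "Kismet/BlueprintFunctionLibrary.h"
#include "DamageMathLibrary.generated.h"

// BlueprintType lets Blueprints use this struct as a variable and pin type.
USTRUCT(BlueprintType)
struct FDefenderStats
{
    GENERATED_BODY()

    // Editable in the editor and readable/writable from Blueprint graphs.
    UPROPERTY(EditAnywhere, BlueprintReadWrite, Category = "Combat")
    float Armor = 0.0f;

    UPROPERTY(EditAnywhere, BlueprintReadWrite, Category = "Combat")
    float MagicResistance = 0.0f;
};

// Static UFUNCTIONs in a Blueprint function library appear as nodes
// that any Blueprint can call.
UCLASS()
class UDamageMathLibrary : public UBlueprintFunctionLibrary
{
    GENERATED_BODY()

public:
    // BlueprintPure: the node has no execution pins because it has no side effects.
    // Assumed formula: Raw * 100 / (100 + Armor), with negative armor clamped to 0.
    UFUNCTION(BlueprintPure, Category = "Combat")
    static float CalculatePhysicalDamage(float RawDamage, const FDefenderStats& Defender)
    {
        const float Armor = FMath::Max(Defender.Armor, 0.0f);
        return RawDamage * 100.0f / (100.0f + Armor);
    }
};
```

In a Blueprint graph, the function would then show up under the Combat category, so designers can wire it to their own variables while the arithmetic itself stays in C++.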

Learning from Previous Experiences

Revisiting the mathematical challenges from earlier discussions of number theory in gaming contexts makes it clear that mastering both Blueprints and C++ is invaluable. Whether fine-tuning damage calculations or exploring probabilistic outcomes in game environments, combining visual scripting with traditional programming can significantly improve the development process.
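Probabilistic outcomes are another place where the math is straightforward to express in C++. As a hypothetical example (the critical-hit mechanic, names, and parameters are assumptions, not taken from a specific game), a hit that crits with probability p for a multiplier m has expected damage Base * (1 + p * (m - 1)), and a seeded simulation should converge to that value.

```cpp
#include <cassert>
#include <random>

// Hypothetical crit model: with probability CritChance a hit deals
// BaseDamage * CritMultiplier, otherwise BaseDamage.
// Expected value: (1 - p) * Base + p * m * Base = Base * (1 + p * (m - 1)).
double ExpectedDamage(double BaseDamage, double CritChance, double CritMultiplier)
{
    assert(CritChance >= 0.0 && CritChance <= 1.0);
    return BaseDamage * (1.0 + CritChance * (CritMultiplier - 1.0));
}

// Rolls one hit with a caller-supplied engine, so a fixed seed gives reproducible results.
double RollDamage(double BaseDamage, double CritChance, double CritMultiplier, std::mt19937& Rng)
{
    std::bernoulli_distribution IsCrit(CritChance);
    return IsCrit(Rng) ? BaseDamage * CritMultiplier : BaseDamage;
}

// Monte Carlo estimate: the average of many rolls should approach ExpectedDamage.
double SimulateAverageDamage(double BaseDamage, double CritChance, double CritMultiplier,
                             int Trials, unsigned Seed)
{
    assert(Trials > 0);
    std::mt19937 Rng(Seed);
    double Total = 0.0;
    for (int i = 0; i < Trials; ++i) {
        Total += RollDamage(BaseDamage, CritChance, CritMultiplier, Rng);
    }
    return Total / Trials;
}
```

With a base of 100, a 25% crit chance, and a 2x multiplier, `ExpectedDamage` returns 125, and a simulation over a large number of trials lands close to that figure.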

Conclusion

The dynamic nature of game development in Unreal Engine calls for a flexible approach to scripting and programming. By balancing the intuitive design of Blueprints with the robust capabilities of C++, developers can harness the full potential of Unreal Engine. This hybrid method streamlines development and opens up new possibilities for innovation and creativity in game design. It also underscores the importance of a solid foundation in both programming logic and mathematical principles, echoing my own journey from number theory to applying those concepts in sophisticated game environments.

Through the practical combination of Blueprints and C++, I am now better positioned to tackle complex challenges, push the boundaries of game development, and bring my unique visions to life within the Unreal ecosystem.
