Samsung’s Remarkable 10-Fold Profit Surge: A Reflection of AI’s Growing Impact on Tech

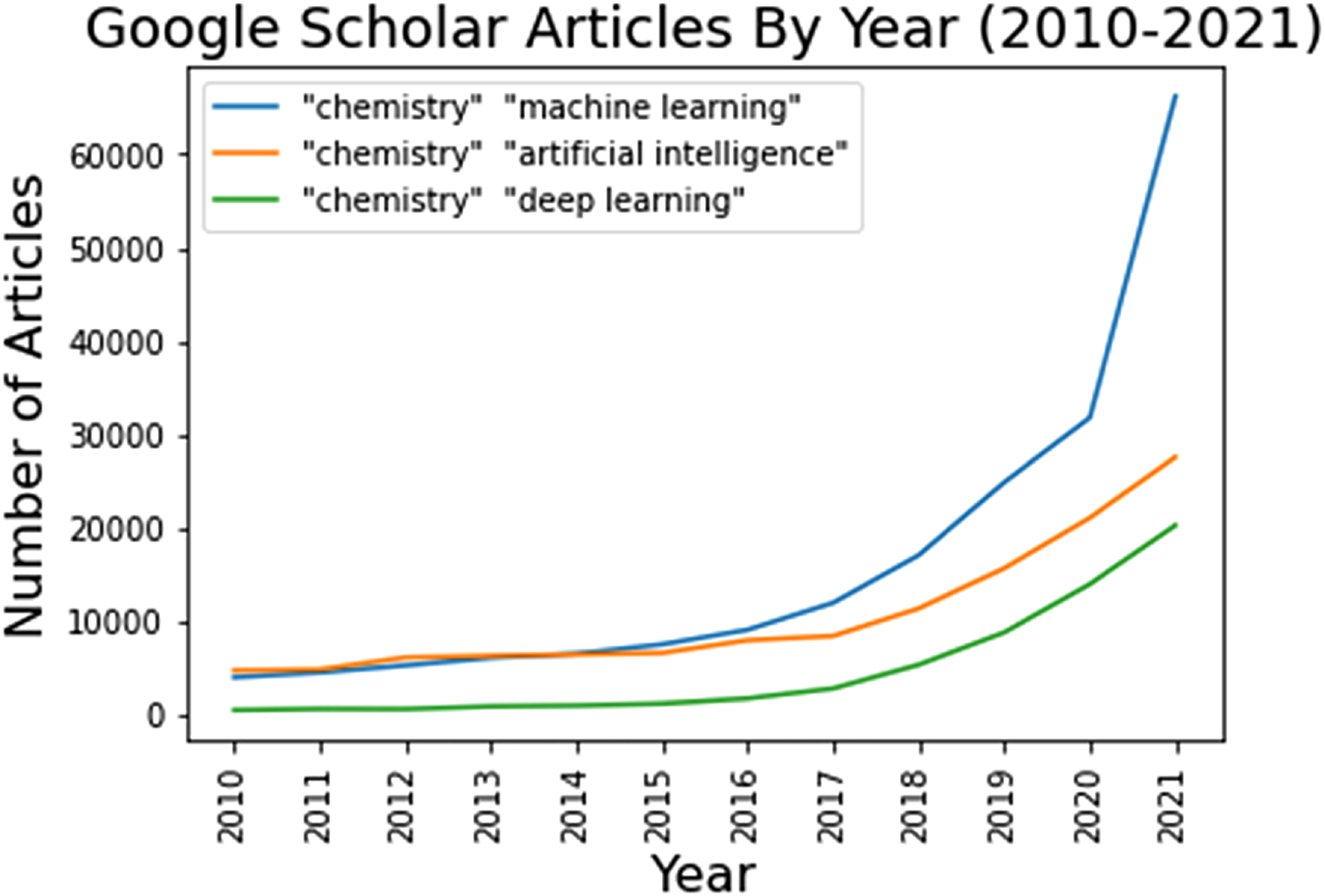

As someone deeply immersed in the world of Artificial Intelligence and technology, I find it fascinating to watch how AI's rapid expansion is reshaping industry landscapes. Samsung Electronics' recent financial forecast offers a compelling snapshot of this transformation. The company's expectation of a roughly 10-fold increase in first-quarter operating profit invites a conversation not just about the numbers, but about the forces behind them.

The Catalyst Behind the Surge

Samsung's preliminary earnings report points to a striking jump in operating profit to KRW6.60 trillion ($4.88 billion), up from KRW640 billion a year earlier. That is its strongest result in a year and a half, and it comfortably beats the FactSet-compiled consensus forecast of KRW5.406 trillion. The uptick matters beyond the headline figure: it suggests a turnaround in Samsung's flagship semiconductor business after four consecutive quarters in the red.
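For readers who want to sanity-check the "10-fold" label, here is a back-of-the-envelope calculation using only the three figures quoted in the report. It is a minimal sketch, not Samsung's or FactSet's methodology; the "implied exchange rate" is simply the won figure divided by the dollar figure as reported, not an official rate.

```python
# Figures as quoted in Samsung's preliminary Q1 report, in KRW billions
q1_operating_profit = 6_600.0   # KRW6.60 trillion
prior_year_profit = 640.0       # KRW640 billion a year earlier
factset_consensus = 5_406.0     # KRW5.406 trillion consensus
reported_usd_billions = 4.88    # dollar conversion given alongside the won figure

# How many times larger this quarter's profit is than a year earlier
growth_multiple = q1_operating_profit / prior_year_profit

# Percentage by which the result exceeded the analyst consensus
beat_pct = (q1_operating_profit / factset_consensus - 1) * 100

# Exchange rate implied by the two reported figures (KRW per USD)
implied_krw_per_usd = q1_operating_profit / reported_usd_billions

print(f"Year-over-year multiple: {growth_multiple:.1f}x")
print(f"Beat vs. consensus: {beat_pct:.1f}%")
print(f"Implied exchange rate: {implied_krw_per_usd:.0f} KRW/USD")
```

Run as-is, this shows a multiple of about 10.3x and a beat of roughly 22% over consensus, which is consistent with the "10-fold" framing.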

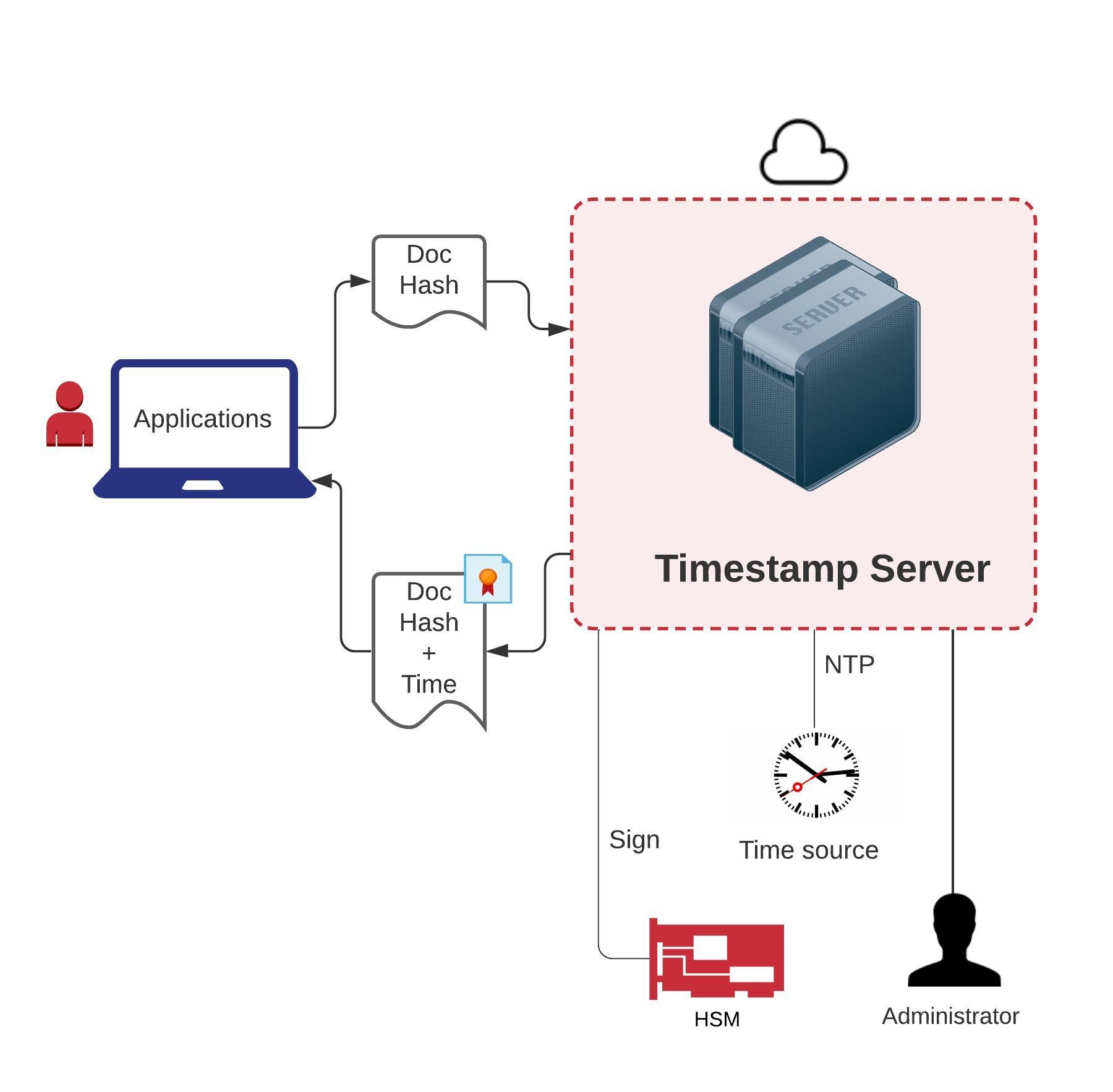

What's particularly noteworthy is the role the artificial intelligence boom has played in reviving demand for memory chips, pushing up prices and, in turn, Samsung's profit margins. This echoes points I've made in previous discussions on AI's pervasive influence, notably how technological advances trigger shifts in market dynamics and corporate fortunes.

AI: The Competitive Arena

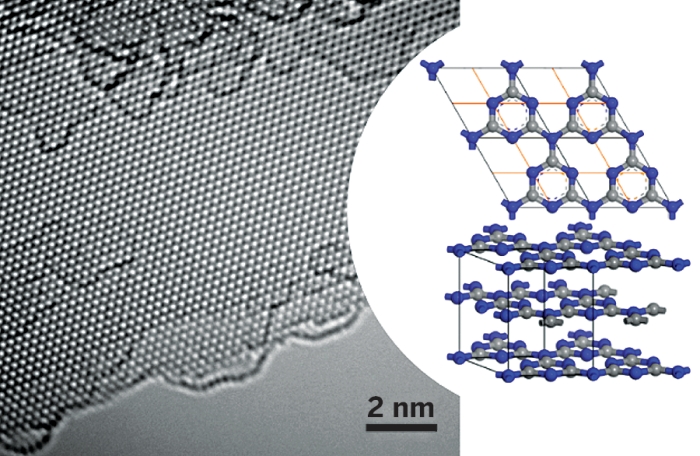

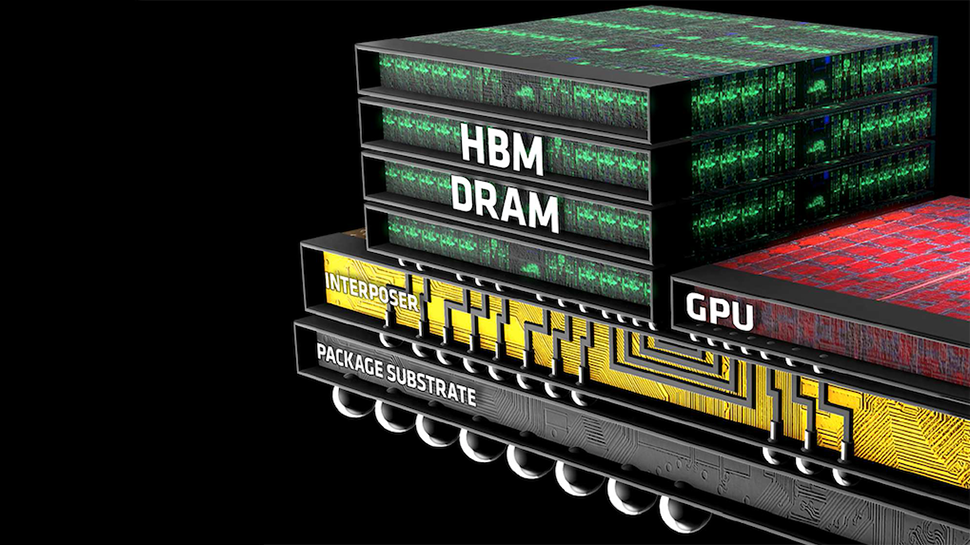

Samsung's semiconductor trajectory highlights a fierce contest among tech giants to lead in advanced high-bandwidth memory (HBM) chips, which are crucial for AI and high-performance computing systems. This is where industry collaboration and interdependence come into sharp relief. Reports that AI chip leader Nvidia is testing Samsung's next-generation HBM chips underscore how strategic partnerships are shaping the future technology landscape.

Implications for the Future

Such developments invite broader reflection on the future trajectory of AI and its societal impact. For someone who works at the intersection of AI, cloud solutions, and legacy infrastructure, the unfolding stories of tech giants like Samsung serve as valuable case studies. They highlight not only the economic and technological implications but also the ethical and strategic dimensions of AI's integration into our global ecosystem.

Merging Horizons: AI and Global Tech Leadership

The story of Samsung's financial forecast connects with broader themes explored in our discussions on AI, such as its role in space exploration and counterterrorism strategies. Samsung's push to lead in high-performance computing through advanced chip technology is emblematic of the ambitions driving tech giants worldwide. It reflects a collective effort to harness AI's potential to transform not just individual sectors but society as a whole.

Concluding Thoughts

As we consider Samsung's anticipated financial resurgence, it is important to place it within the AI-driven renaissance reshaping the technology sector. It illustrates the pivotal, if turbulent, journey that AI and related technologies are on, influencing everything from the semiconductor business to the dynamics of global tech leadership. For enthusiasts and professionals alike, staying attuned to these shifts is not just beneficial; it is essential.

Together, let’s continue to explore, challenge, and contribute to these conversations, fostering an environment where technology is not just about advancement but about creating a more informed, ethical, and interconnected world.

