The Story of BLC1: A Cautionary Tale for SETI and the Search for Alien Life

SETI, the Search for Extraterrestrial Intelligence, has long captivated the public’s imagination with the possibility of finding alien civilizations. The recent return of the BLC1 signal to public discussion, however, shows how difficult candidate signals are to interpret and why caution matters. Many may remember BLC1 as a potential “alien signal,” yet in-depth analysis points to a far more mundane explanation: interference from Earth-based technology.

Understanding the BLC1 Signal

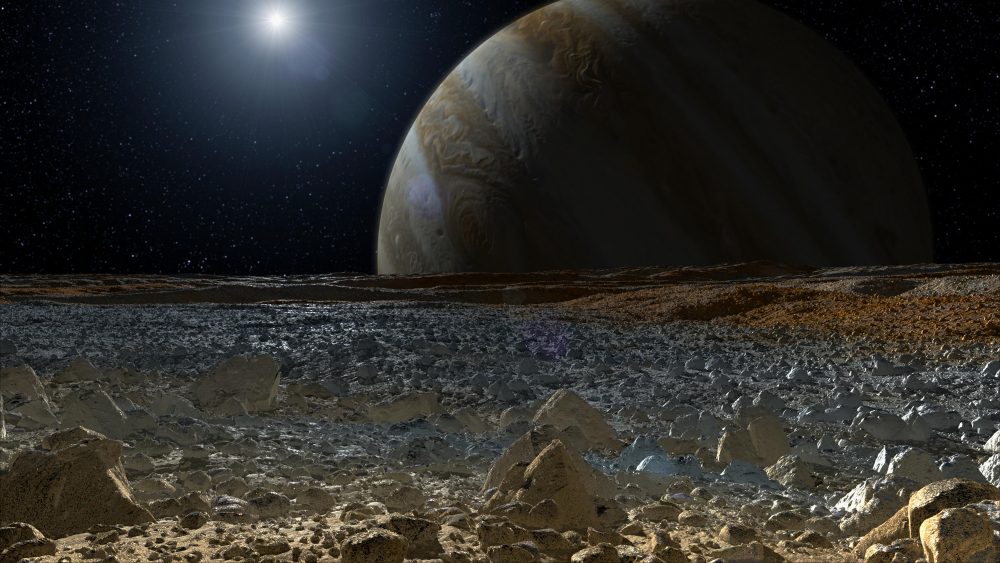

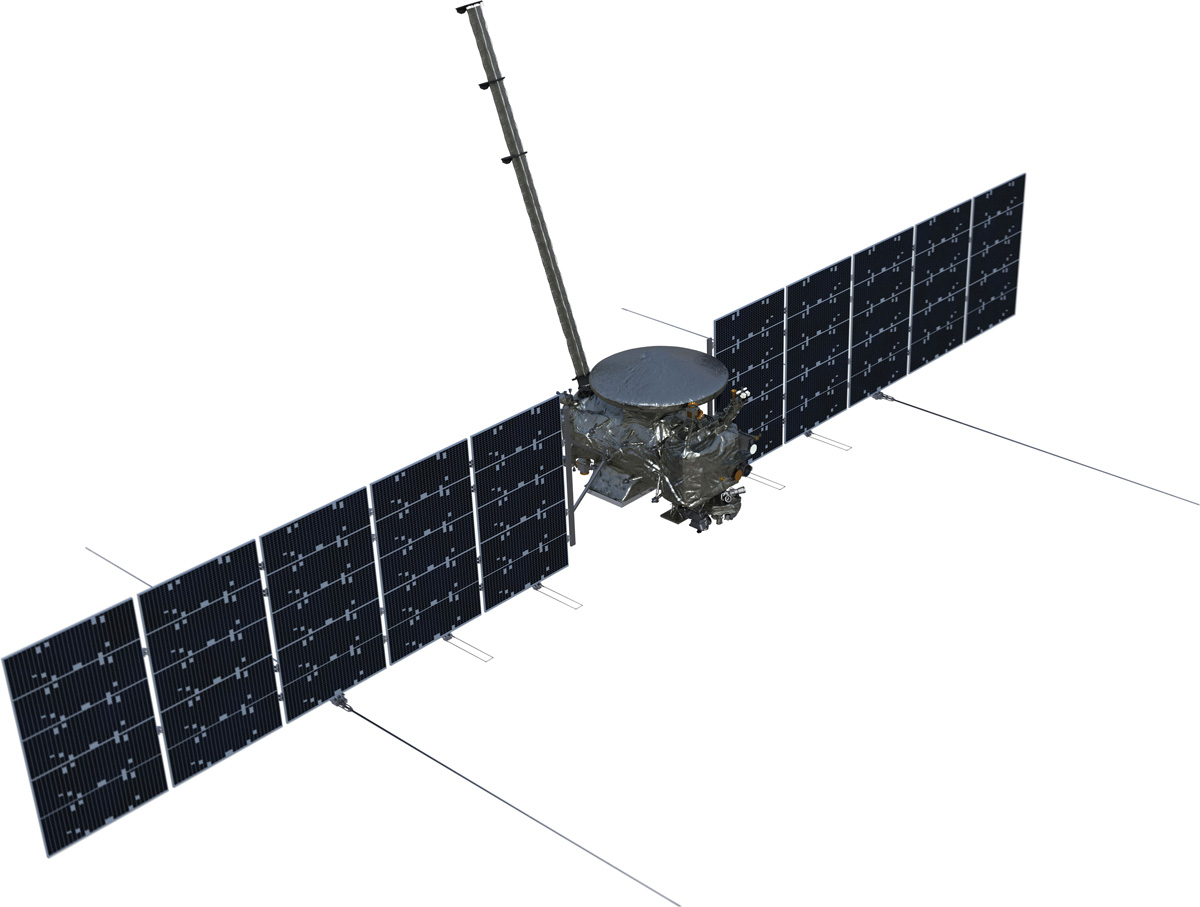

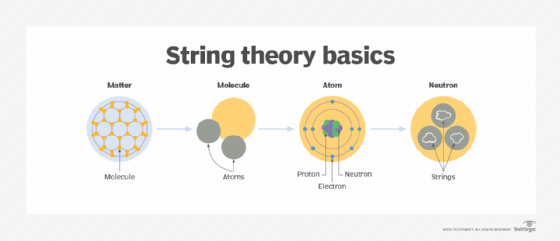

BLC1 stands for “Breakthrough Listen Candidate 1,” a designation given to a signal detected in 2019 by the Breakthrough Listen project. This ambitious, privately funded initiative searches for alien technosignatures across vast swaths of the radio spectrum. The signal appeared in data from about 30 hours of observations spread across April and May of that year. At first glance, many were intrigued, particularly because it seemed to originate from the vicinity of Proxima Centauri, the closest star system to Earth.

However, Proxima Centauri’s proximity raised immediate suspicion. The odds of two civilizations developing advanced radio technology in neighboring star systems at roughly the same time are incredibly small. Such a coincidence would imply a galaxy teeming with intelligent life, which is not what we observe: so far our searches have met only the “Great Silence.” And while ideas like the “Zoo Hypothesis” or “Galactic Colonization” have circulated in the scientific community, no evidence so far supports them.

A Closer Look Reveals Interference

The frequency of the BLC1 signal, a transmission at 982.002 MHz, was another red flag. This part of the UHF spectrum is cluttered with Earth-based technology, including mobile phones, radar, and even microwave ovens. As many SETI investigations have noted, the risk of human interference in this frequency range is very high. Besides, SETI searches generally favor quieter parts of the spectrum, such as the region around the hydrogen line at 1420 MHz. BLC1 did not fall in a notably “quiet” part of the spectrum.

Then, of course, there’s the issue of the signal’s Doppler shift. The signal’s frequency appeared to drift in an unexpected direction: it increased, whereas signals from space are generally expected to drift downward in frequency because of the Earth’s motion. This wasn’t the behavior you’d expect from a legitimate alien transmission. Even more damaging to BLC1’s credibility is the fact that it has never been detected again. Much like the famous “Wow! signal,” which also remains a one-off anomaly, BLC1 is difficult to confirm or rule out without a repeat detection.
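To make the drift argument concrete, here is a minimal sketch of how a drift rate could be estimated from a series of frequency measurements. The function name and the sample values are hypothetical illustrations, not BLC1 data, and a plain least-squares line fit is only one simple way to do this, not necessarily what the Breakthrough Listen team used.

```python
def drift_rate_hz_per_s(times_s, freqs_hz):
    """Least-squares slope of frequency against time, in Hz per second."""
    n = len(times_s)
    if n < 2 or n != len(freqs_hz):
        raise ValueError("need at least two matching time/frequency samples")
    mean_t = sum(times_s) / n
    mean_f = sum(freqs_hz) / n
    num = sum((t - mean_t) * (f - mean_f) for t, f in zip(times_s, freqs_hz))
    den = sum((t - mean_t) ** 2 for t in times_s)
    if den == 0:
        raise ValueError("time samples must not all be identical")
    return num / den


# Made-up illustration values (NOT measurements of BLC1):
# frequency offsets from a nominal carrier, sampled every ten minutes.
times_s = [0.0, 600.0, 1200.0, 1800.0]
offsets_hz = [0.0, 18.0, 36.0, 54.0]

rate = drift_rate_hz_per_s(times_s, offsets_hz)
direction = "upward" if rate > 0 else "downward" if rate < 0 else "none"
print(f"drift: {rate:.3f} Hz/s ({direction})")
```

A positive slope means the frequency is climbing over time, which is the kind of drift described above as unexpected.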

The Challenges of Radio Contamination

This isn’t the first time that scientists have grappled with potential interference. One of the more amusing instances dates back to 1998, when Australia’s Parkes Observatory began detecting what looked like brief radio bursts. Investigators eventually discovered, in 2015, that the signals were caused by someone opening a microwave oven in the facility before it had finished, allowing radio energy to briefly escape. BLC1 was also detected by Parkes, and this time researchers were methodical in their analysis from the start. To eliminate false positives, astronomers often “wag” the telescope (that is, they point it at the source of the signal and then away) to check whether the signal appears only when the telescope is on target. BLC1 did pass this rudimentary test, which initially elevated it above other false alarms.
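The on-target/off-target check described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the function name, the detection threshold, and the power values are all hypothetical, and a real pipeline works on spectrograms rather than one number per scan.

```python
def passes_on_off_test(scans, threshold):
    """Return True if the signal is present in every on-target scan
    and absent from every off-target scan.

    scans: list of (pointing, power) pairs, pointing is "ON" or "OFF".
    threshold: power above which the signal counts as present (hypothetical).
    """
    on = [power for pointing, power in scans if pointing == "ON"]
    off = [power for pointing, power in scans if pointing == "OFF"]
    if not on or not off:
        raise ValueError("need at least one ON and one OFF scan")
    return all(p > threshold for p in on) and all(p <= threshold for p in off)


# Made-up powers in arbitrary units, for illustration only.
sky_like = [("ON", 12.0), ("OFF", 1.1), ("ON", 11.4), ("OFF", 0.9)]
local_rfi = [("ON", 12.0), ("OFF", 11.8), ("ON", 11.4), ("OFF", 12.3)]

sky_like_result = passes_on_off_test(sky_like, threshold=5.0)
local_rfi_result = passes_on_off_test(local_rfi, threshold=5.0)
print(sky_like_result, local_rfi_result)
```

Passing this check only means a signal is worth a closer look. As the BLC1 case shows, interference that happens to switch on and off in step with the pointing can pass it too.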

Despite this, two extensive studies published in 2021 identified multiple signals similar to BLC1 within the same data set. These lookalikes could be ruled out as alien because they appeared to originate from human-made devices, likely oscillators present in everyday electronic equipment. Because they shared key characteristics with BLC1, they further diminished its chances of being anything extraordinary. For anyone hoping BLC1 would turn out to be humanity’s first confirmed contact with aliens, these findings were a major disappointment.
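The reasoning about similar signals can be illustrated with a small sketch: search the other detections for ones that resemble the candidate, then ask whether any of them are clearly local. Everything here is an assumption made for illustration, including the `Detection` record, the choice of drift rate as the shared characteristic, the tolerance, and the sample values; the published studies used their own, more detailed criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    freq_mhz: float
    drift_hz_per_s: float
    seen_off_target: bool  # True if it also showed up when pointed away


def find_lookalikes(candidate, detections, drift_tol_hz_per_s):
    """Detections whose drift rate is within a tolerance of the candidate's."""
    return [
        d for d in detections
        if d is not candidate
        and abs(d.drift_hz_per_s - candidate.drift_hz_per_s) <= drift_tol_hz_per_s
    ]


# Made-up values for illustration; only 982.002 MHz comes from the article.
candidate = Detection(982.002, 0.03, seen_off_target=False)
others = [
    Detection(712.0, 0.031, seen_off_target=True),
    Detection(1062.0, 0.029, seen_off_target=True),
    Detection(1400.0, -0.2, seen_off_target=False),
]

lookalikes = find_lookalikes(candidate, others, drift_tol_hz_per_s=0.005)
# If any lookalike is clearly local, the candidate is suspect as well.
suspicious = any(d.seen_off_target for d in lookalikes)
print(len(lookalikes), suspicious)
```

The point of the design is that a candidate is not judged in isolation: if signals that behave the same way are demonstrably local, the simplest explanation is that the candidate is too.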

Lessons for the Future of SETI

What can we take away from the BLC1 saga? For starters, it’s a stark reminder of just how challenging the search for extraterrestrial life can be. More often than not, what first appears fascinating turns out to be Earth-based interference. But this also speaks to the meticulous procedures of SETI programs, where every signal is rigorously scrutinized, analyzed, and, in the vast majority of cases, dismissed as noise.

The story also demonstrates the danger of jumping to conclusions. Media outlets eager for sensational headlines contributed to the spread of misinformation surrounding BLC1. Claims that “aliens” had been detected circulated widely, misleading the public. And while it’s unfortunate that BLC1 was not the groundbreaking discovery some had hoped for, there is real value in recognizing that even false positives add to our understanding of space and of our own technology. The more we understand how interference occurs, the better we can refine future SETI projects to weed out noise efficiently.

The Future of Technosignatures and SETI’s Role

One of the most interesting thoughts raised by the search for alien signals is the possibility of deception. Could an advanced civilization deliberately produce false “candidate signals” from somewhere other than their home system? Such ideas delve into the realm of science fiction, yet they highlight the potential lengths to which a highly intelligent species could go to protect its existence.

In that regard, we can’t rule out the idea that decoy signals could mislead us, directing attention elsewhere. While such a notion evokes images of spacefaring civilizations lurking behind invisible boundaries, we must remain grounded in the reality that so far, most signals can be traced back to Earth or mundane celestial phenomena.

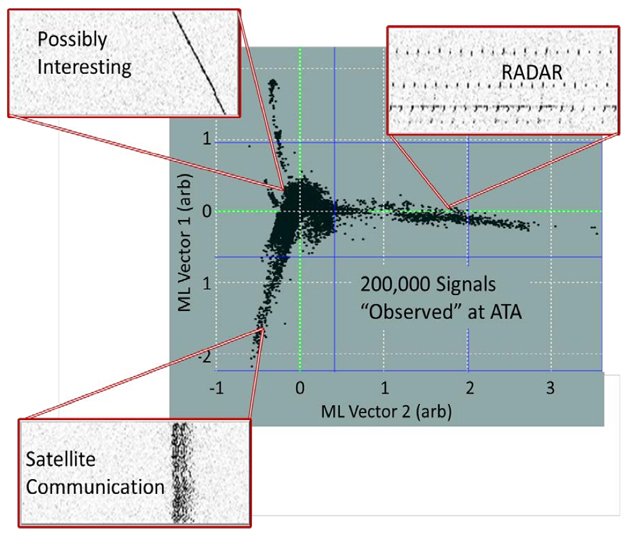

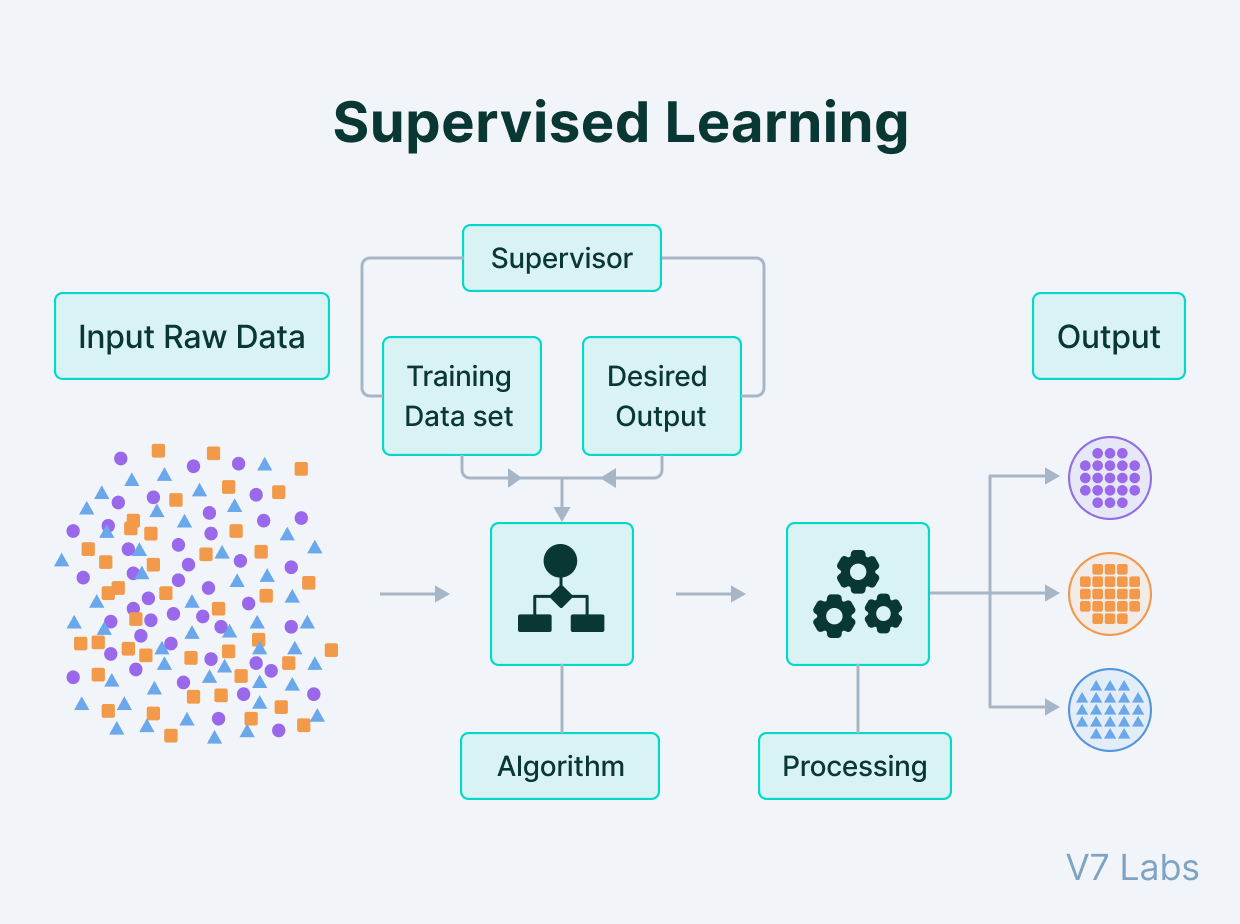

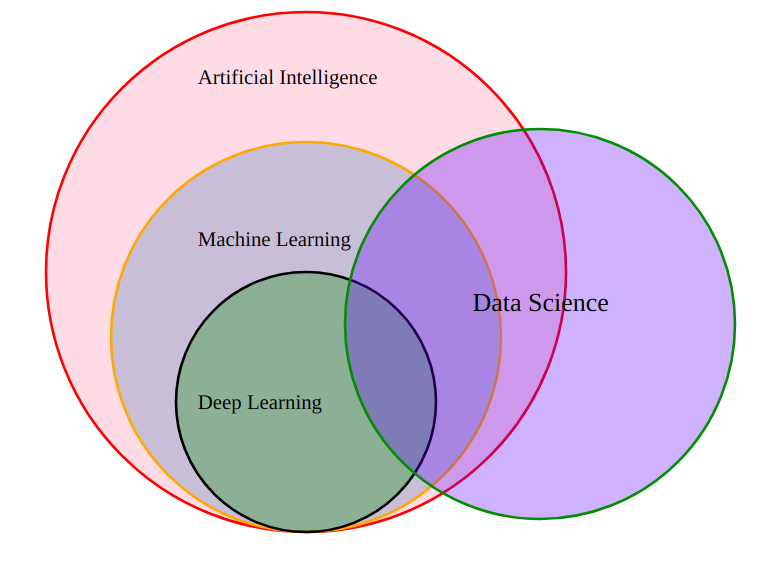

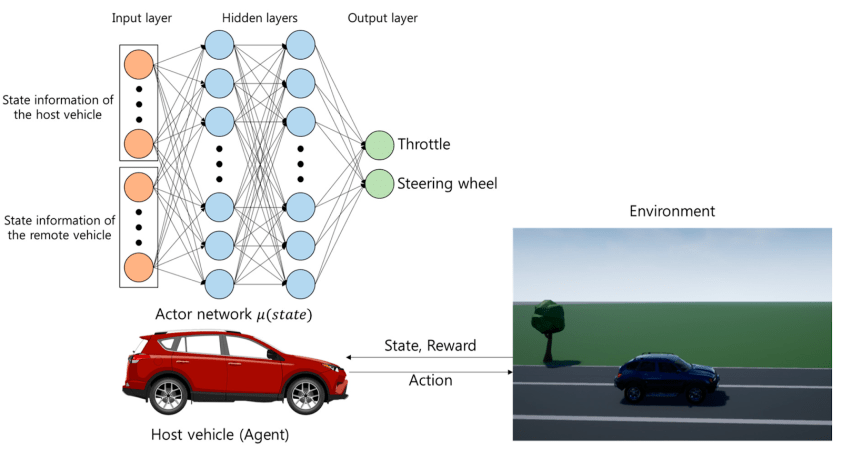

As we refine our technologies, whether through advanced machine learning models or more precise radio filtering algorithms, SETI is well-positioned to continue making headway. In some ways, this ties back to discussions from previous articles I’ve shared. Much like in “Artificial Intelligence: Navigating Challenges and Opportunities”, where AI’s biases and limitations need to be understood before it can yield accurate results, so too must we carefully mark the limits of our tools in the search for alien intelligence. The process of “learning with humans” emphasizes the importance of collaboration, skepticism, and refinement as we explore such tantalizing frontiers.

While BLC1 wasn’t the signal we were hoping for, it ultimately reminded us of an essential truth: the universe is vast, but also quiet. If extraterrestrial life is out there, the hunt continues, with more tools and lessons learned along the way.
