Redefining Quantum Machine Learning: A Shift in Understanding and Application

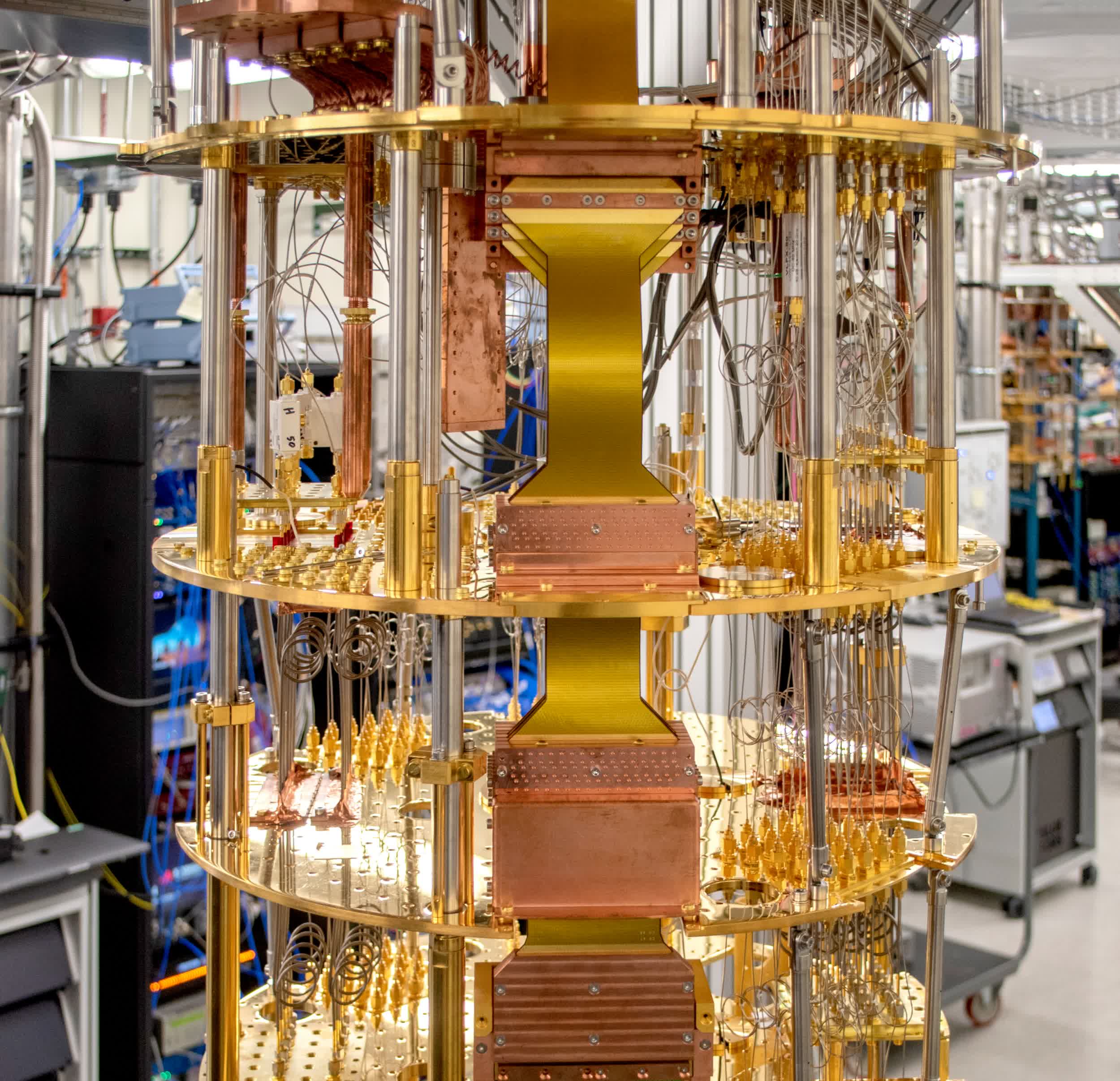

As someone working at the forefront of artificial intelligence (AI) and machine learning through my consulting firm, DBGM Consulting, Inc., I find that the latest advances in quantum machine learning resonate deeply with my ongoing effort to understand and apply cutting-edge technology. A recent study by a team from Freie Universität Berlin, published in Nature Communications, reports findings that could redefine how we approach quantum machine learning.

Quantum Neural Networks: Beyond Traditional Learning

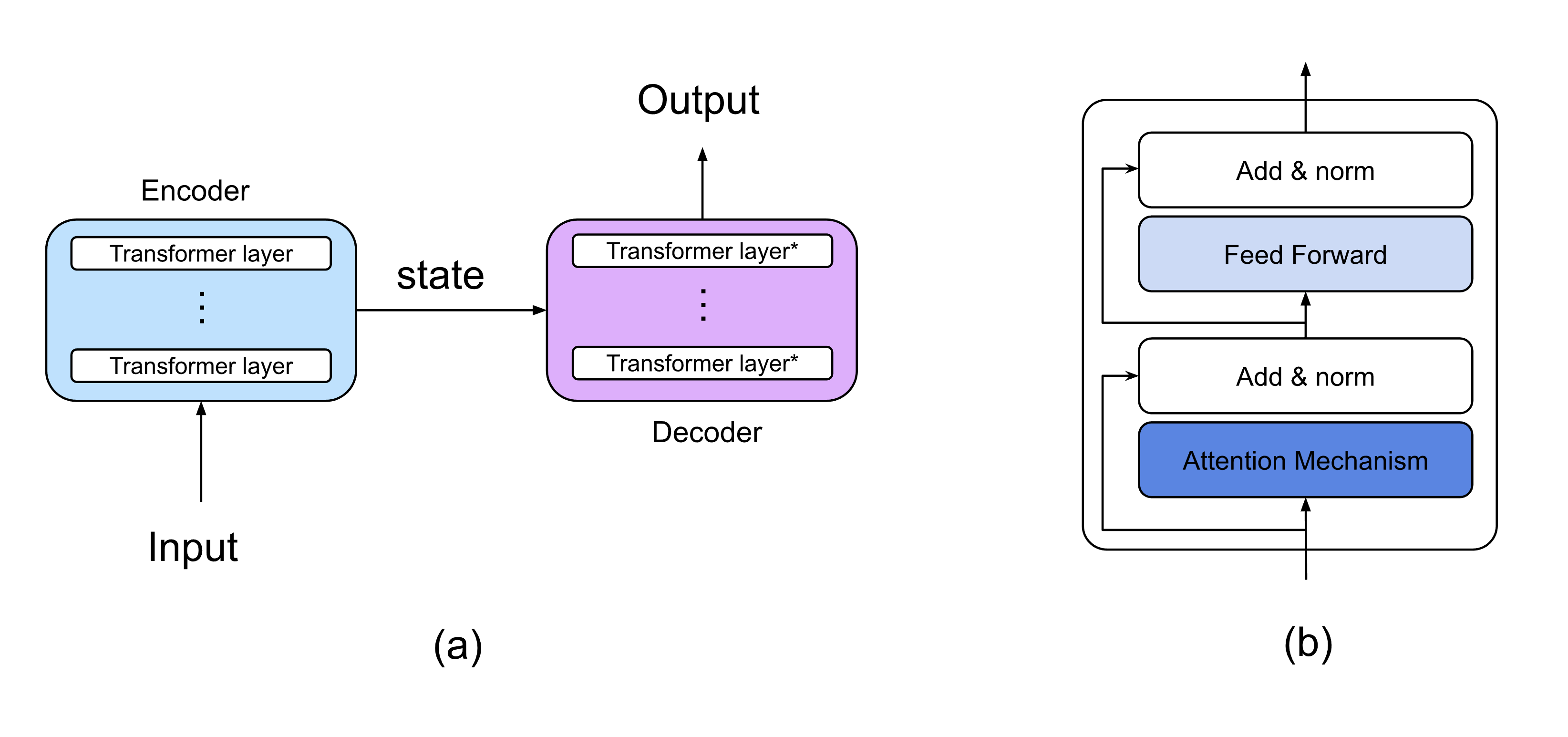

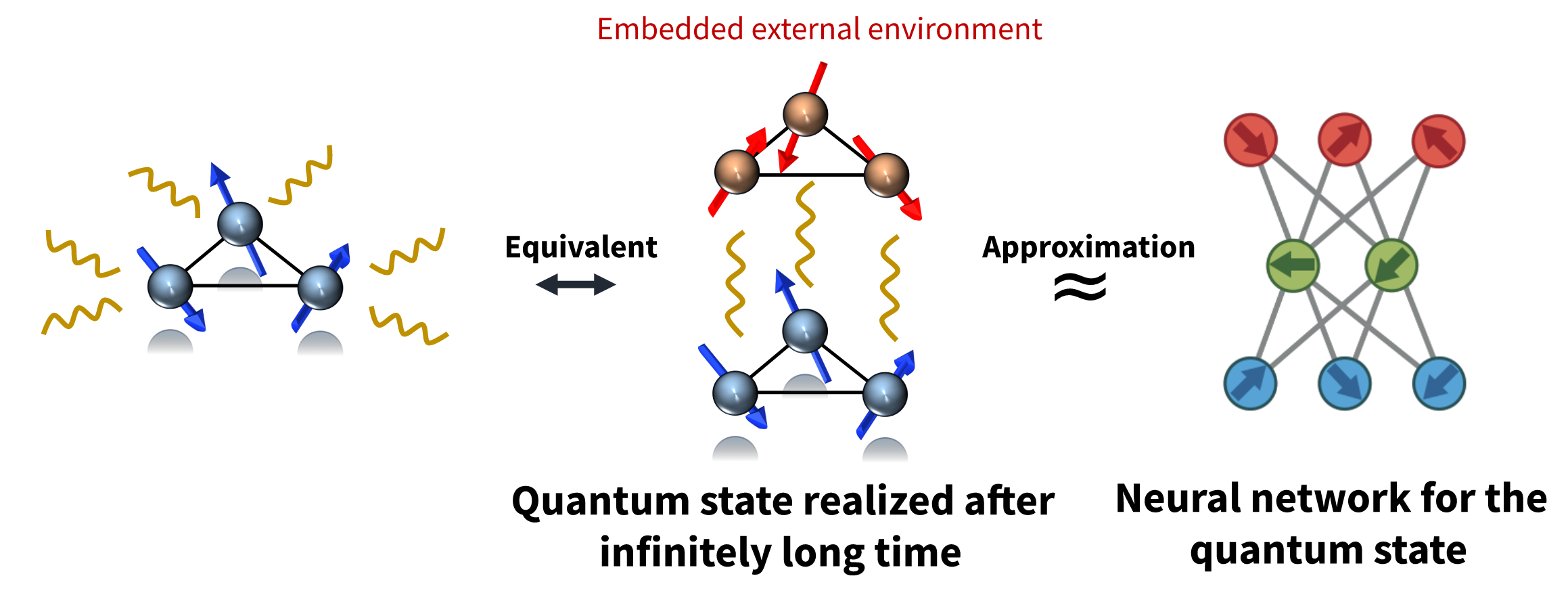

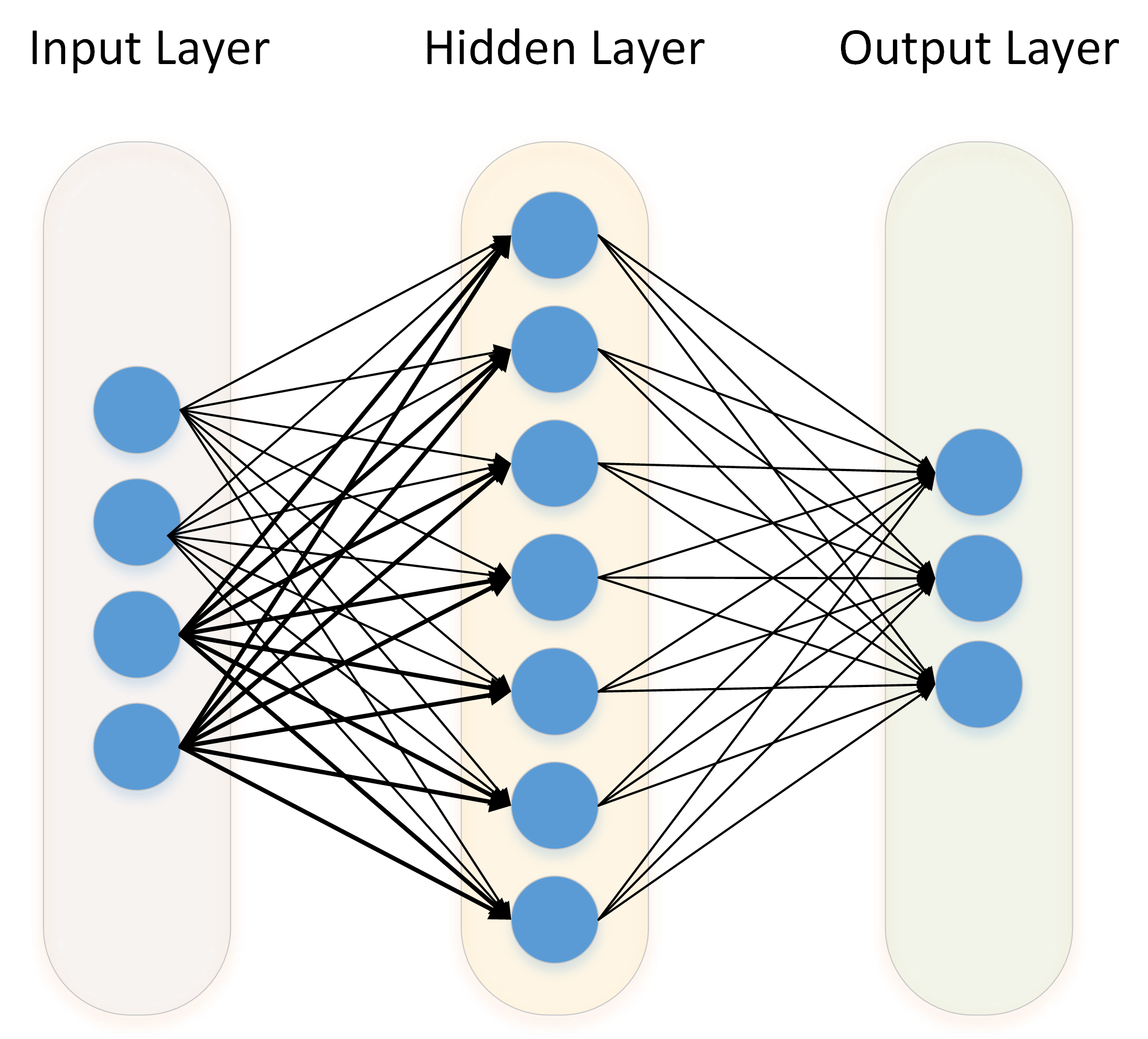

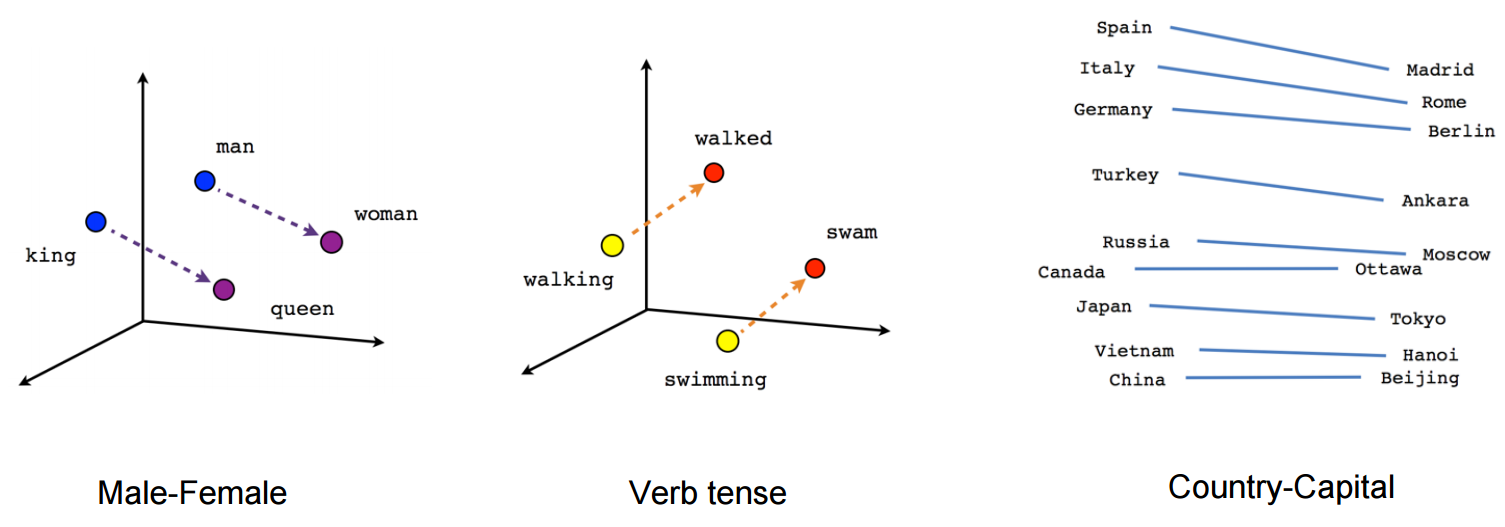

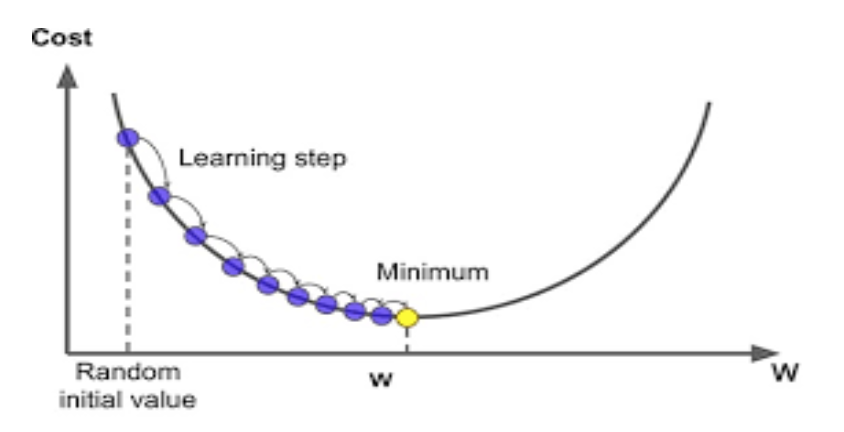

The study, titled “Understanding Quantum Machine Learning Also Requires Rethinking Generalization”, puts a spotlight on quantum neural networks and challenges longstanding assumptions within the field. Classical neural networks transform data through layers of weighted sums and nonlinear activation functions. Quantum neural networks instead encode data into quantum states and process it with tunable quantum gates. They exploit effects such as superposition and entanglement, which could, in principle, let them handle certain complex problems more efficiently.
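
Quantum neural networks are usually built as parameterized quantum circuits: some gates encode the input, other gates carry trainable angles, and a measured expectation value serves as the output. The toy sketch below simulates such a circuit classically in NumPy. It is not the study's model. It assumes a single qubit, one data-encoding rotation RY(x), one trainable rotation RY(theta), and the Pauli-Z observable, and the helper names `ry`, `qnn_output` and `parameter_shift_grad` are hypothetical.

```python
import numpy as np

# Pauli-Z observable measured on the output qubit.
PAULI_Z = np.diag([1.0, -1.0])

def ry(angle):
    """Matrix of the single-qubit rotation RY(angle) about the Y axis."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]])

def qnn_output(x, theta):
    """Toy QNN: encode x with RY(x), apply trainable RY(theta), return <Z>."""
    state = np.array([1.0, 0.0])           # qubit starts in |0>
    state = ry(theta) @ ry(x) @ state      # data-encoding gate, then trainable gate
    # Amplitudes stay real for RY gates, so <psi|Z|psi> needs no complex conjugate.
    return float(state @ PAULI_Z @ state)

def parameter_shift_grad(x, theta):
    """d<Z>/dtheta via the parameter-shift rule, exact for rotation gates like RY."""
    shift = np.pi / 2
    return (qnn_output(x, theta + shift) - qnn_output(x, theta - shift)) / 2

x, theta = 0.3, 0.5
print(qnn_output(x, theta))            # equals cos(x + theta) for this circuit
print(parameter_shift_grad(x, theta))  # equals -sin(x + theta)
```

For this one-qubit circuit the output works out to cos(x + theta), so the trainable angle simply shifts the response to the input. On real hardware, the expectation value would be estimated from repeated measurements rather than computed exactly. Practical quantum neural networks also use many qubits and entangling gates, which is where any potential advantage over classical simulation would come from.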

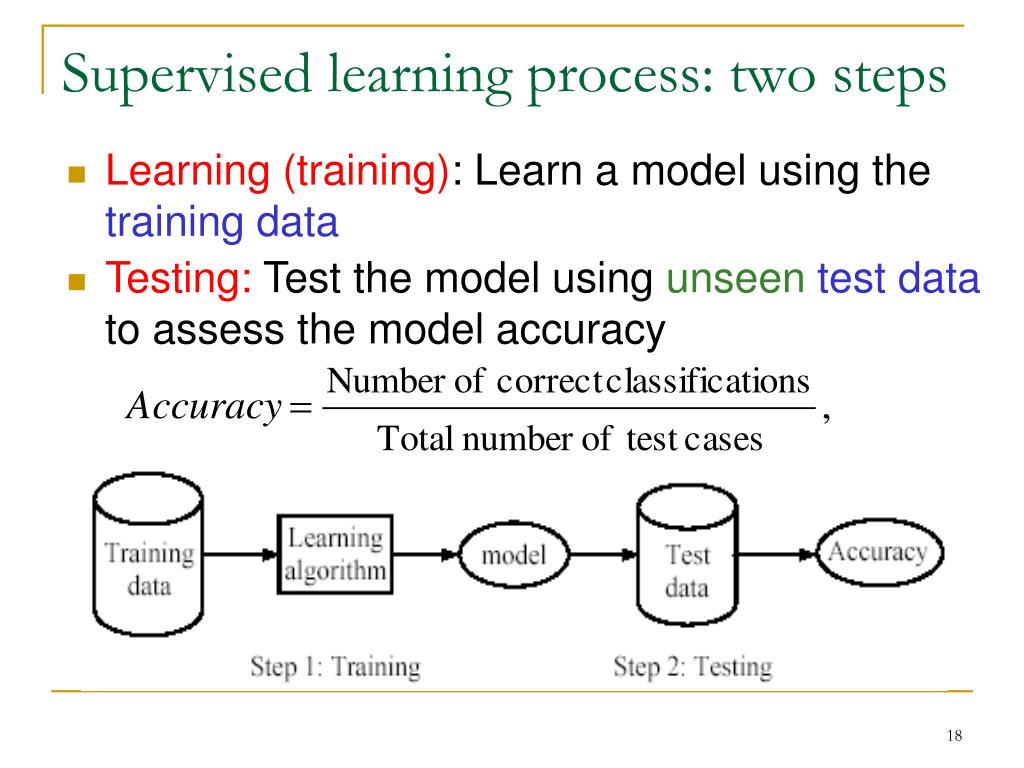

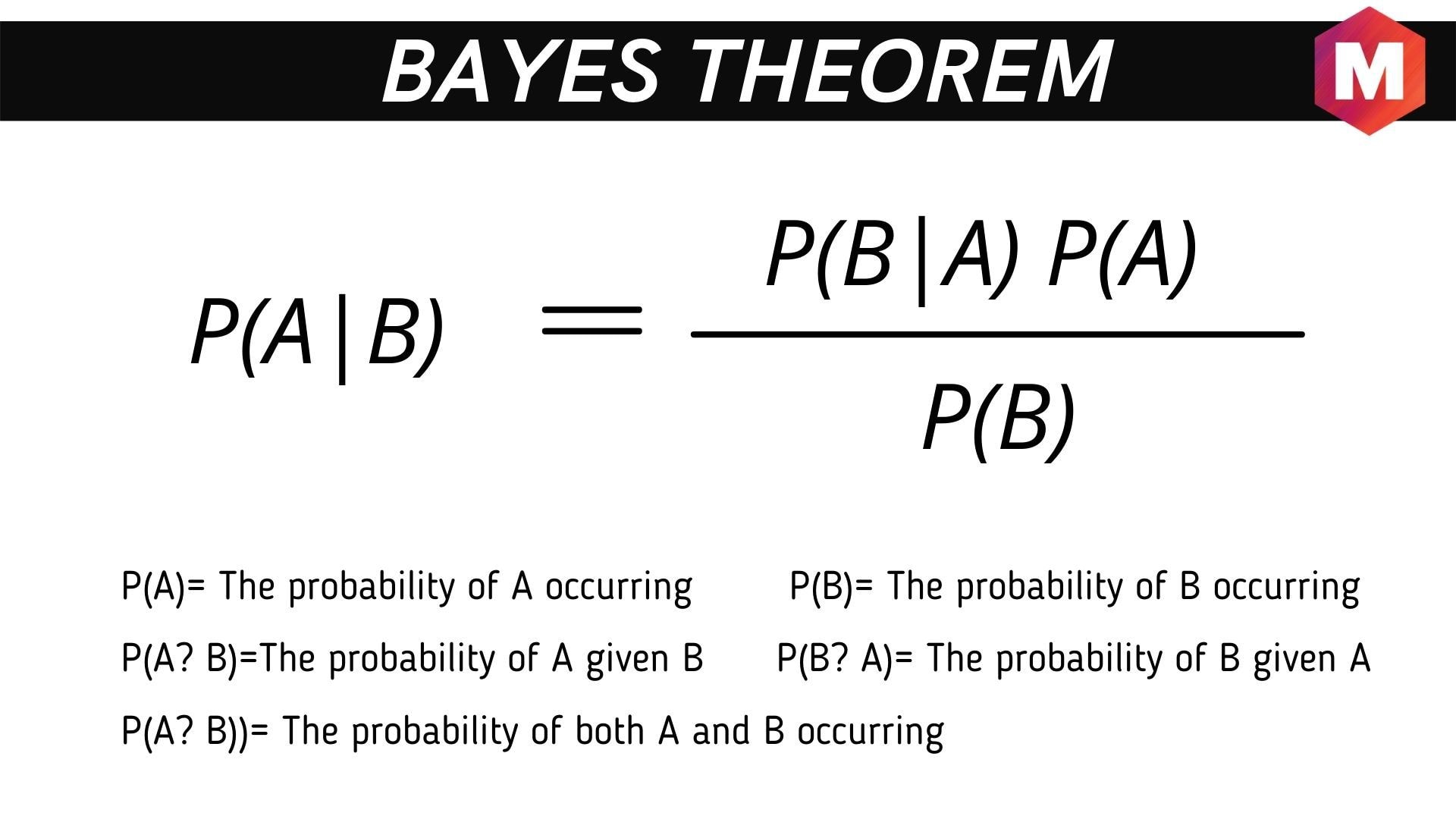

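What stands out about this study is its finding that quantum neural networks can learn, and in effect memorize, seemingly random data, such as randomly assigned labels. This result challenges our current understanding of how quantum models learn and generalize. It also challenges the traditional measures used to assess the generalization capabilities of machine learning models, such as the VC dimension and the Rademacher complexity. If a model class can fit random labels, these complexity measures sit near their maximum, so the generalization bounds built on them become vacuous. Yet the same models can still generalize well on real data.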
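
To make the link between memorizing random data and Rademacher complexity concrete, here is a minimal NumPy sketch. It is not code from the study, which analyzes quantum neural networks rather than the toy classes below. It assumes a finite hypothesis class described only by each hypothesis's ±1 predictions on n sample points. It then estimates the empirical Rademacher complexity, meaning the expected best correlation between any hypothesis and a vector of random ±1 labels, by averaging over many such random vectors. The helper name `empirical_rademacher` and both example classes are hypothetical.

```python
import itertools
import numpy as np

def empirical_rademacher(predictions, n_draws=2000, seed=0):
    """Monte Carlo estimate of the empirical Rademacher complexity of a finite class.

    predictions: array of shape (num_hypotheses, n) with entries in {-1, +1};
    row h holds hypothesis h's outputs on the n sample points.
    """
    rng = np.random.default_rng(seed)
    preds = np.asarray(predictions, dtype=float)
    n = preds.shape[1]
    total = 0.0
    for _ in range(n_draws):
        sigma = rng.choice([-1.0, 1.0], size=n)  # random +-1 "labels"
        # Best average agreement any hypothesis achieves with these random labels.
        total += np.max(preds @ sigma) / n
    return total / n_draws

n = 8
# A class that realizes all 2^n labelings, so it can fit any random labels.
all_labelings = np.array(list(itertools.product([-1, 1], repeat=n)))
# A class containing one fixed hypothesis, which cannot adapt to random labels.
single = np.ones((1, n))

print(empirical_rademacher(all_labelings))  # prints 1.0: some row always equals sigma
print(empirical_rademacher(single))         # close to 0
```

The class that can realize every labeling reaches the maximum value of 1. A generalization bound that adds a multiple of this term to the training error therefore says nothing useful, even if such a model happens to generalize well. This mirrors the situation the study reports for quantum neural networks that can fit random labels.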

Implications of the Study

The implications of these findings are profound. Elies Gil-Fuster, the lead author of the study, likens these quantum neural networks to a child who memorizes random strings of numbers while also understanding the multiplication tables. The comparison makes the concept tangible: the same model can grasp a genuine rule and, at the same time, store arbitrary data. Traditional measures of generalization do not account for that combination.

This study suggests that we need a paradigm shift in how we understand and evaluate quantum machine learning models. Jens Eisert, the research group leader, points out that quantum machine learning may not inherently tend toward poor generalization. Even so, the results clearly indicate that our conventional approaches to quantum machine learning tasks need to be re-evaluated.

Future Directions

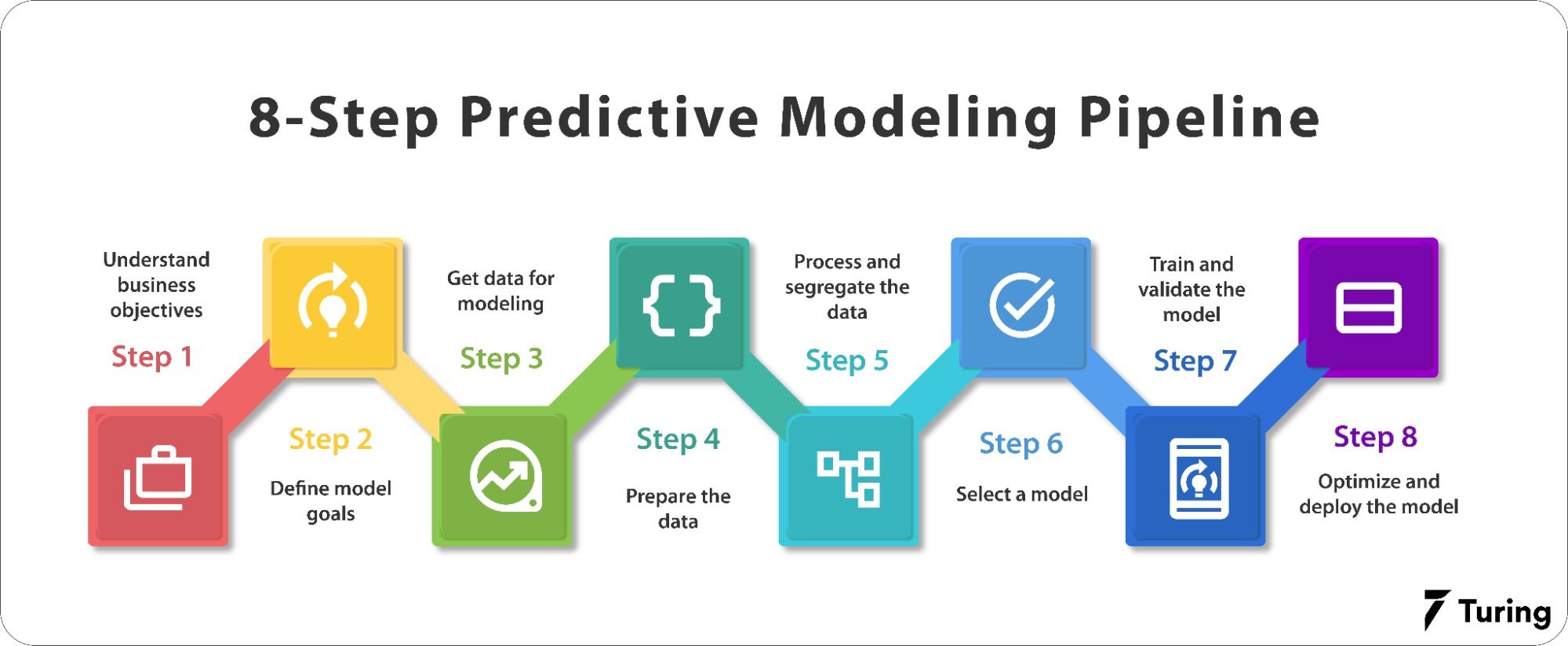

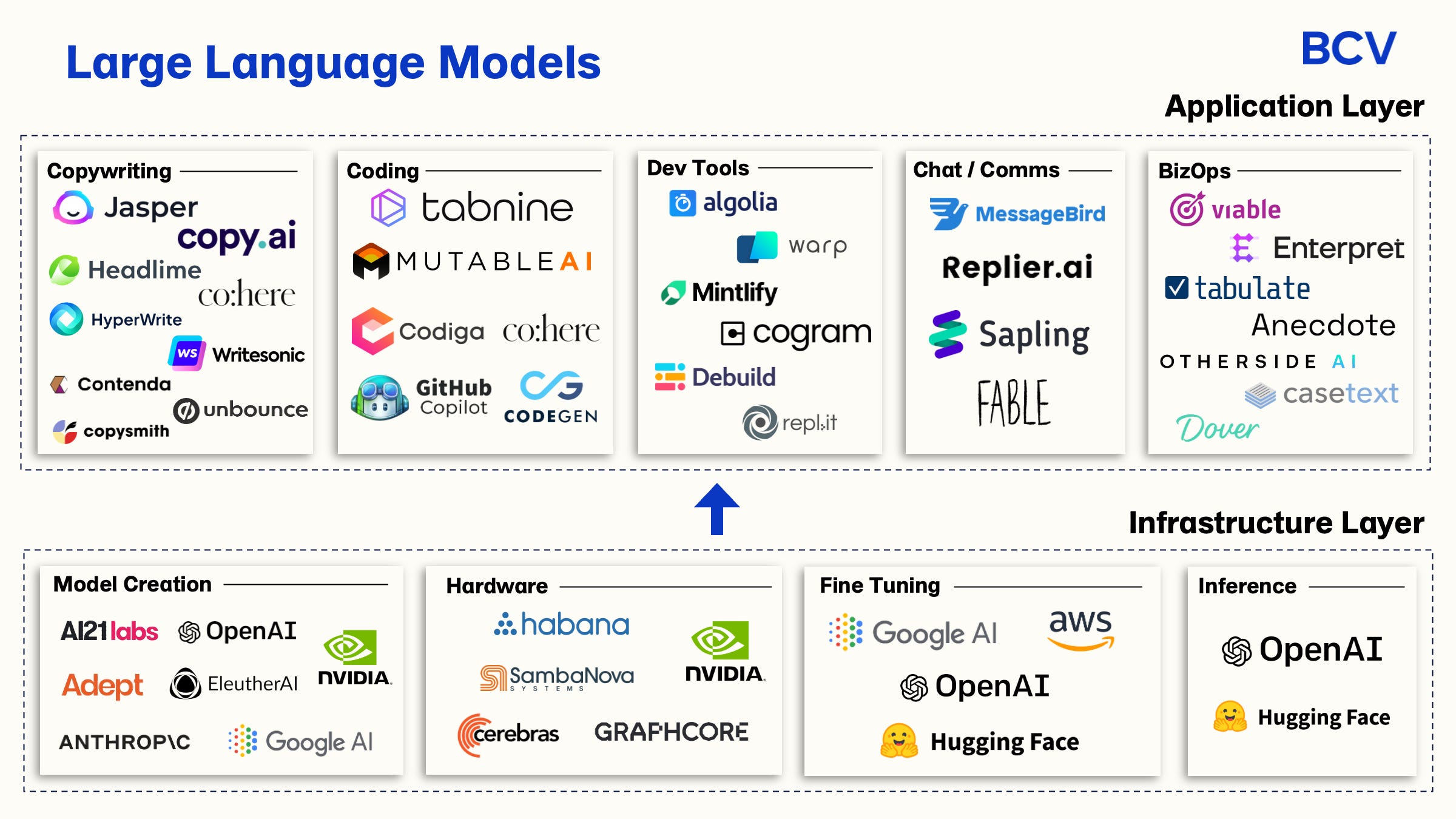

Given my background in AI, cloud solutions, and security, and considering the rapid advances in AI and quantum computing, I see the study’s findings as an exciting challenge. How can we, as technologists, innovators, and thinkers, use these insights to transform industries ranging from cybersecurity to automotive design and beyond? The potential of quantum machine learning to transform critical sectors can hardly be overstated. It has implications for data processing, pattern recognition, and predictive modeling, among other areas.

In previous articles, we explored the intricacies of machine learning, specifically anomaly detection in AI. When we connect those discussions with these findings on quantum machine learning, it becomes evident that our approach to anomalies, patterns, and predictive insights in data will evolve as we understand these advanced models more deeply. That evolution could yield more nuanced and sophisticated solutions to complex problems.

Conclusion

The journey into quantum machine learning is just beginning. As we navigate this territory, armed with revelations from the Freie Universität Berlin’s study, our strategies, theories, and practical applications of quantum machine learning will undoubtedly undergo significant transformation. In line with my lifelong commitment to exploring the convergence of technology and human progress, this study not only challenges us to rethink our current methodologies but also invites us to imagine a future where quantum machine learning models redefine what’s possible.

“Just as previous discoveries in physics have reshaped our understanding of the universe, this study could potentially redefine the future of quantum machine learning models. We stand on the cusp of a new era in technology, and understanding these nuances could be the key to unlocking further advancements.”

As we continue to explore, question, and innovate, let us embrace this opportunity to shape a future where technology amplifies human capability, responsibly and ethically. The possibilities are as limitless as our collective imagination and dedication to pushing the boundaries of what is known.
