Title: Simulating the Future: How AI is Redefining Predictive Learning and Robotics

By: David Maiolo

The world of artificial intelligence continues to astonish, with breakthroughs coming at a dizzying pace. Recent research has unveiled a revolutionary AI system that not only predicts possible futures but creates thousands of them with unparalleled fidelity. Leveraging advanced generative models, this novel approach enables industries ranging from autonomous vehicles to robotics to achieve a deeper understanding of the unpredictable, rare scenarios critical for safe and intelligent decision-making. Let’s dive into how this system works and what it means for the future of AI.

The Long-Tail Problem: Why This Innovation is Vital

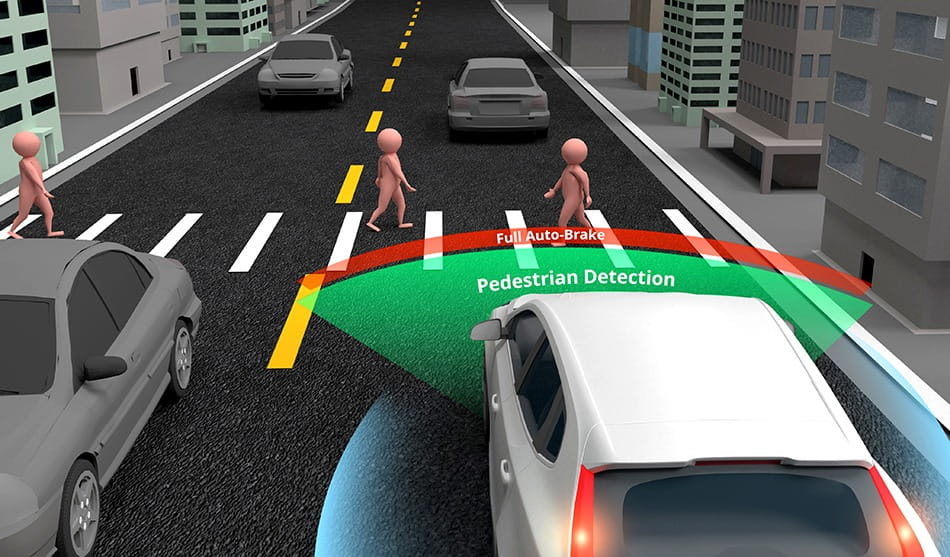

In AI training, especially for systems like self-driving cars and humanoid robots, there is something researchers call the “long-tail problem.” Most scenarios encountered in the real world are well-documented through thousands of videos and datasets. Stopping at a red light or merging onto a highway are standard situations for autonomous vehicles, and AI excels at replicating these behaviors.

However, the real world is messy, full of edge cases that rarely occur but are critical to account for. For instance, imagine a scenario in which a truck transports a set of traffic lights on its flatbed. To the AI, this moving traffic light is a mind-bending anomaly—completely contrary to the fundamental behavior it has learned. While a human can instantly rationalize the situation, AI struggles without vast amounts of training data tailored to these rare events. That’s where this groundbreaking system steps in.

It enables the creation of thousands of unique, nuanced scenarios that AI systems can train on, helping them adapt to even the strangest eventualities. Beyond self-driving cars, this capability is invaluable for training industrial robots, warehouse systems, and even household robots to better interact with their environments.

From Words to Worlds: The Beauty of Generative AI

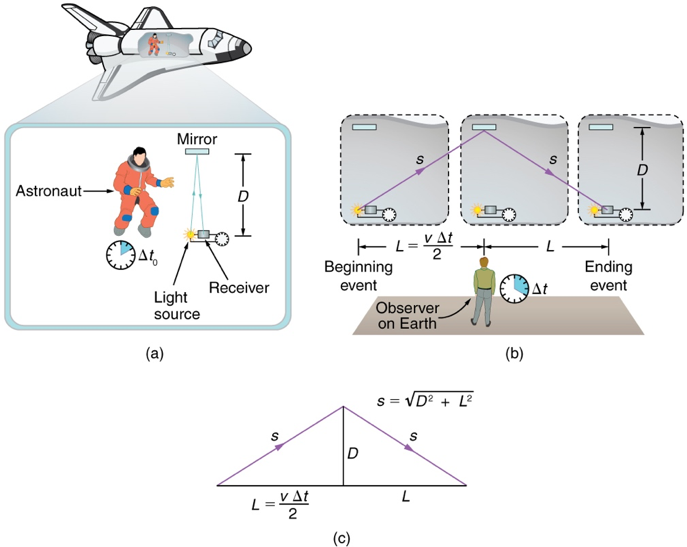

One of the most fascinating aspects of this research is the dual flexibility it offers. At its core, the system combines multiple generative AI models to create video outputs based on text prompts or initial seed images. For instance:

- With an input image and an associated text prompt, the AI generates video continuations of that specific scenario, extending it into a plausible “future.”

- With just a text prompt, the system generates entirely synthetic worlds and events, producing high-quality videos from scratch.

Imagine describing a situation where a robot needs to pick up an apple from various positions in a cluttered environment. Traditionally, you’d need hundreds of real-life recordings of a robot attempting this task to train the neural network effectively. Now, this new method can do it virtually, generating endless variations that AI can train on without using a single physical robot.

And the most incredible part? This new system is open-source, meaning anyone—from researchers to hobbyists—can access and fine-tune it for their unique use cases.

The Challenges of a New Frontier

Despite its promising capabilities, the technology is not without its limitations. The visual outputs, while often impressive, are still far from indistinguishable from reality. Trained on models with 7-14 billion parameters, it requires significant computational resources to generate even a few seconds of video. While a consumer-grade graphics card suffices to run the models, users may have to wait five minutes or more for a single video to render.

What’s more, issues like object permanence—the AI’s understanding that objects persist in the world even when not visible—remain a challenge. In some simulations, objects mysteriously vanish, grow extra appendages, or behave unpredictably. These quirks, while amusing, highlight the work that remains before these systems can fully replicate reality with accuracy.

Applications Beyond Training AI

While the initial focus of this system is on improving AI training data, its potential stretches far beyond that. Consider industries like filmmaking, where directors could generate complex scenes simply by describing them. Architects and urban planners may simulate entirely new cityscapes based on text descriptions or prototype designs. Even video game developers could use this system to populate dynamic, hyper-realistic worlds without manually designing every frame.

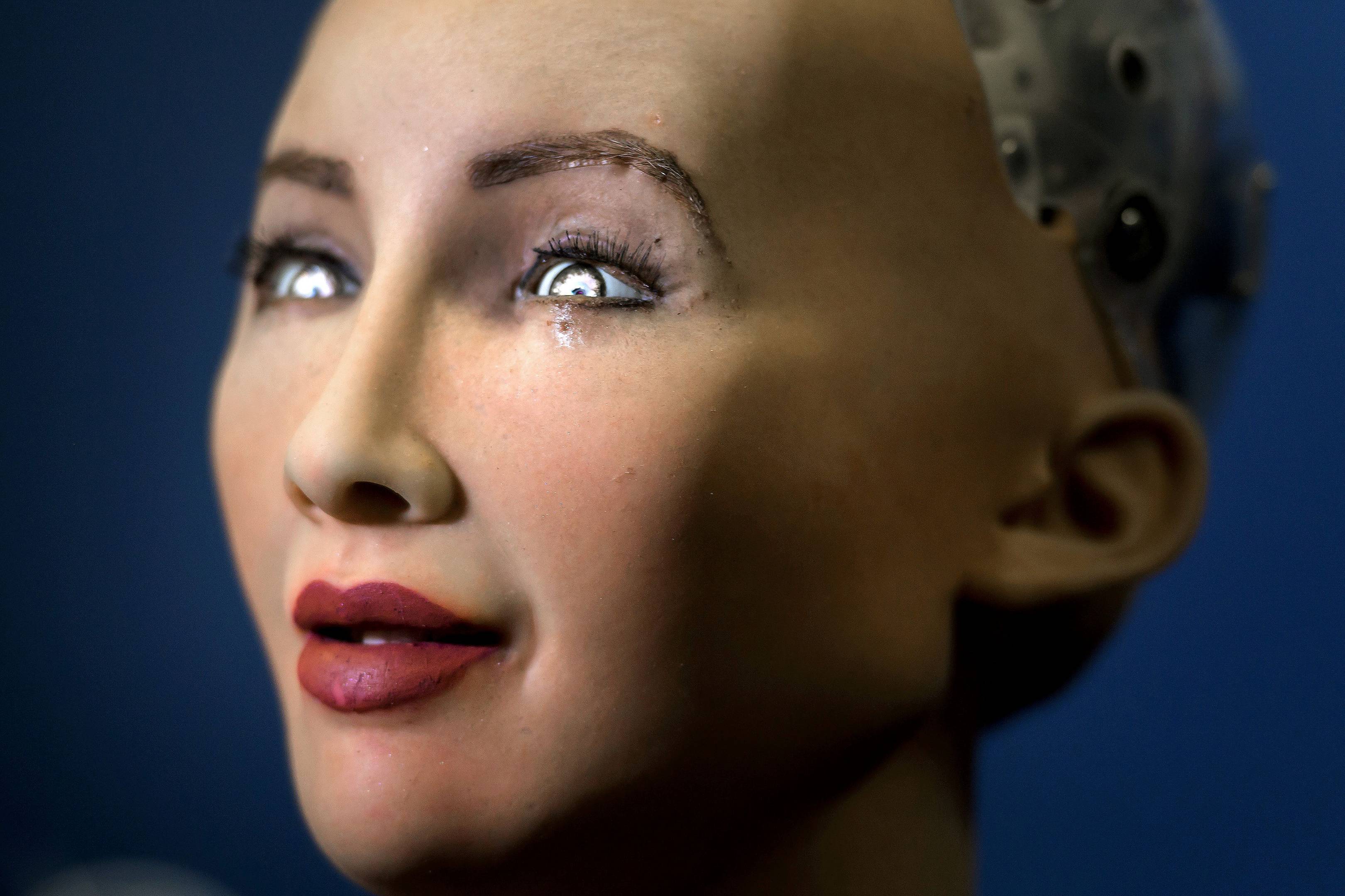

In addition, the technology could play a significant role in enabling robots to gain a deeper “understanding” of the physical world. Warehouse robots could simulate thousands of packing or sorting configurations in various environments, while humanoids could practice navigating unpredictable human spaces virtually.

Research as Process: The First Law of Papers

What stands out most in this development is how it exemplifies the iterative nature of AI research. This is not the final solution, and its limitations are clear. However, as the first law of scientific papers often states: “Do not look at where we are, look at where we will be two more papers down the line.”

Historically, systems like these evolve rapidly. Just a few years ago, the idea of AI instantly creating future scenarios would have been science fiction. Two papers from now, this technology could be exponentially faster, more visually accurate, and efficient, potentially redefining industries as we know them.

In essence, this system represents a stepping stone. The fact that it’s available to researchers globally and open to modifications is critical in accelerating innovation. The collective contributions of the community will ensure that the next iteration brings us closer to a seamless blend of human-like understanding and machine precision.

A Glimpse Into the Future

From generating training data for AI to crafting impossible realities for creative pursuits, this system opens an exciting new chapter in generative AI. While there’s a long road ahead before we can confidently say AI understands the world as intuitively as humans, the progress we’ve made is nothing short of astonishing.

What’s truly remarkable is how democratized this technology has become, bringing cutting-edge capabilities to academics, businesses, and enthusiasts alike. As we look to the horizon, one thing is certain—our future, and thousands of its plausible variations, will be shaped by innovations like this.

>

> >

> >

> >

> >

> >

> >

> >

> >

> >

> >

>

>

> >

> >

> >

> >

> >

>