A Deep Dive into Supervised Learning: Shaping the Future of AI

In the evolving arena of Artificial Intelligence (AI) and Machine Learning (ML), Supervised Learning stands out as a cornerstone methodology, driving advancements and innovations across many domains. Throughout my journey in AI, from my master’s studies at Harvard University focusing on AI and Machine Learning to practical applications at DBGM Consulting, Inc., supervised learning has been integral to developing sophisticated models for diverse challenges, including self-driving robots and customer migration to cloud solutions. Today, I aim to unravel the intricate details of supervised learning, exploring its profound impact and pondering its future trajectory.

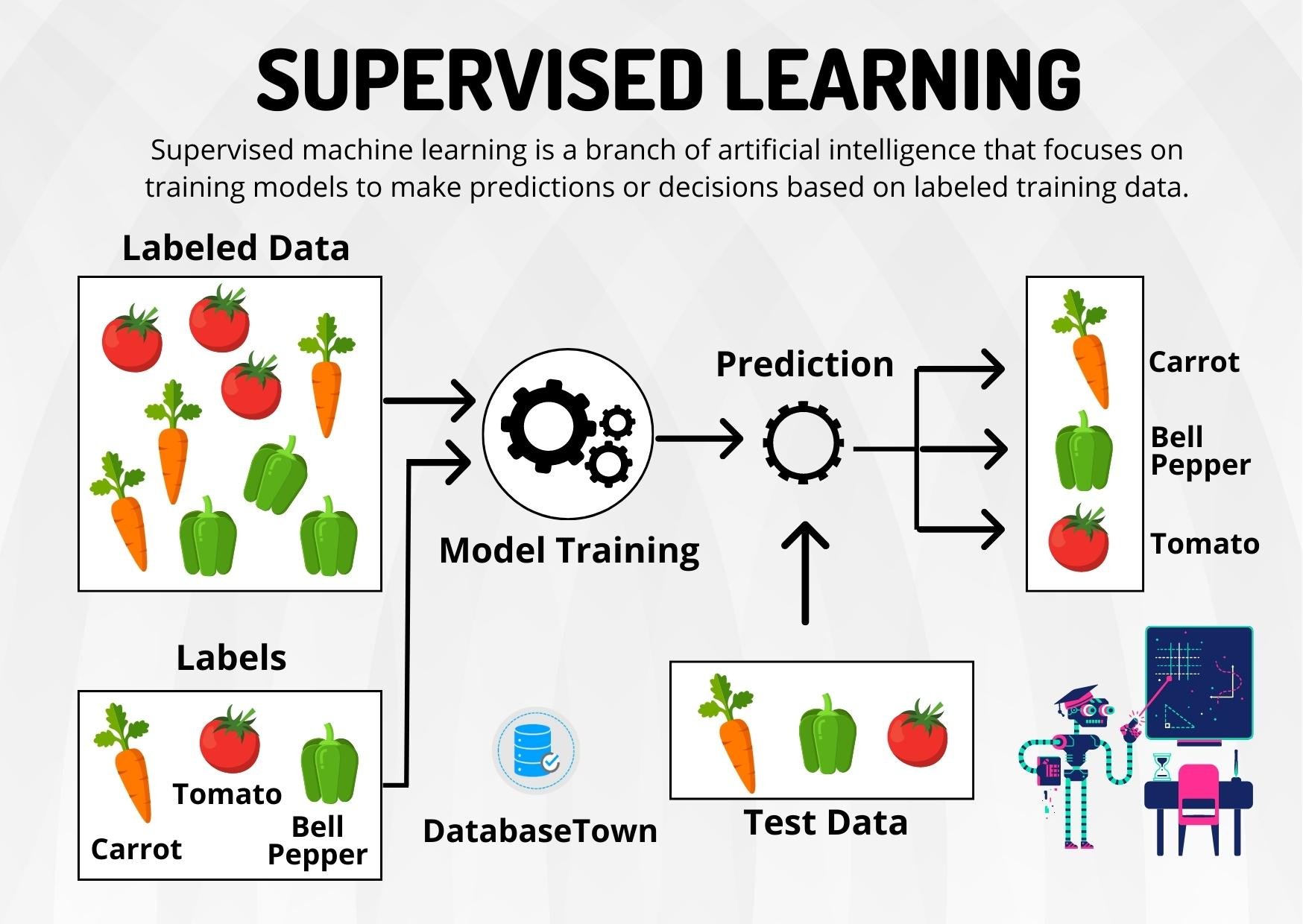

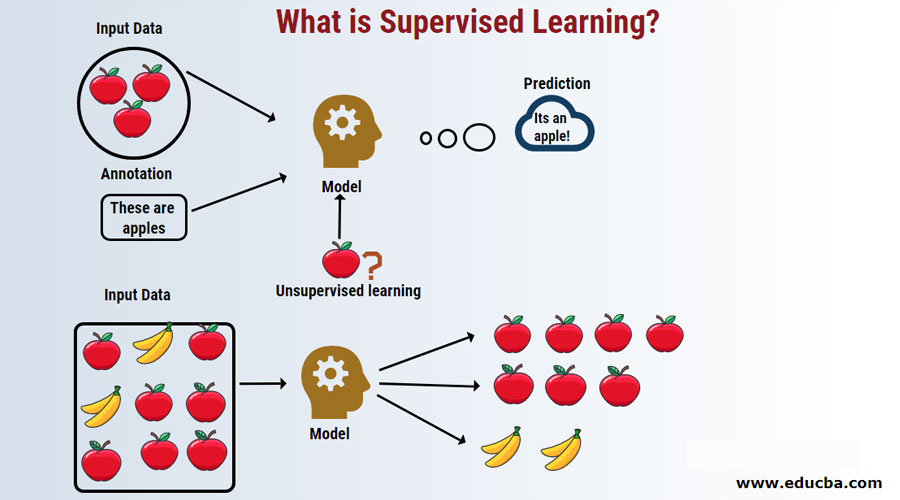

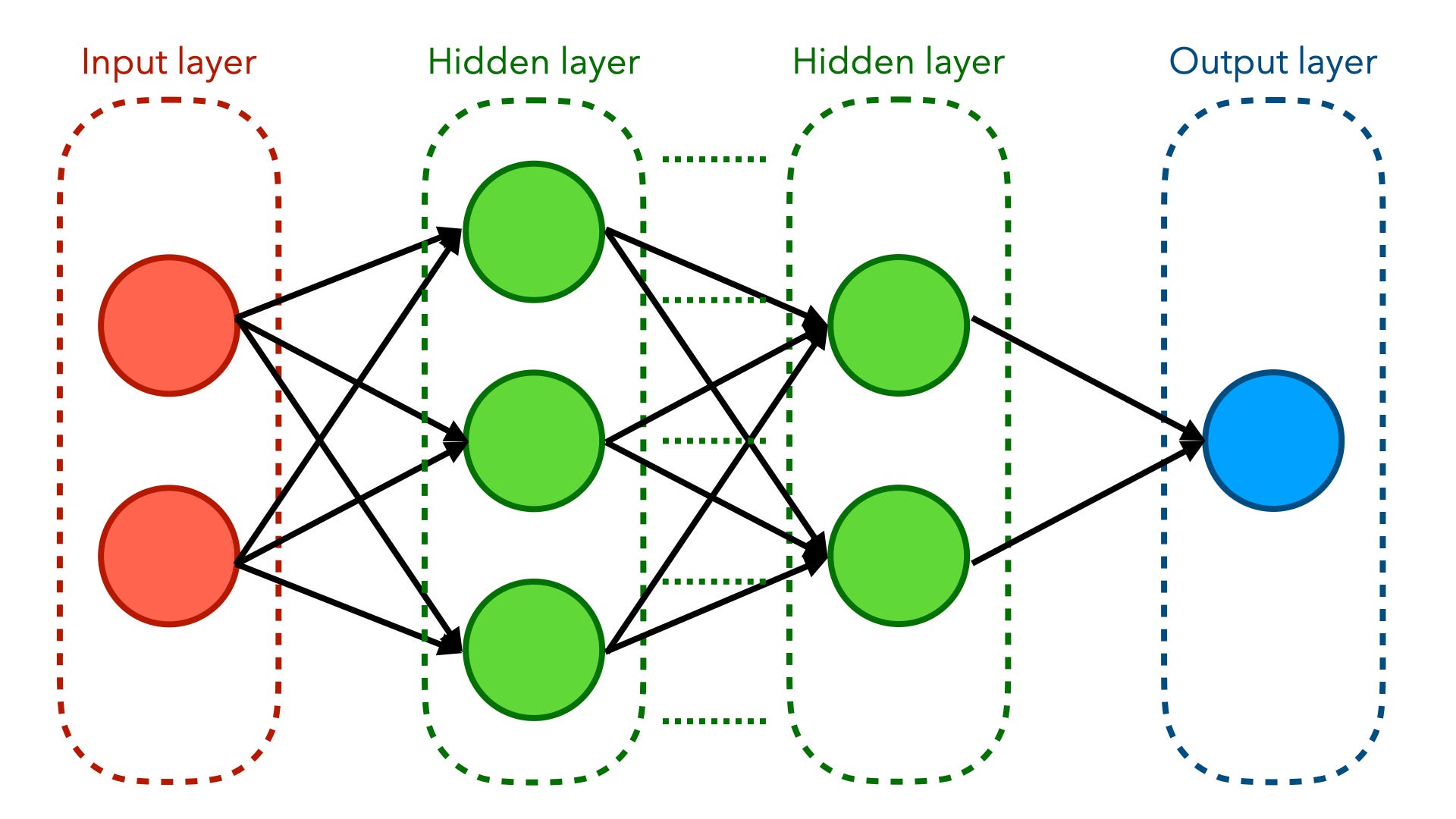

Foundations of Supervised Learning

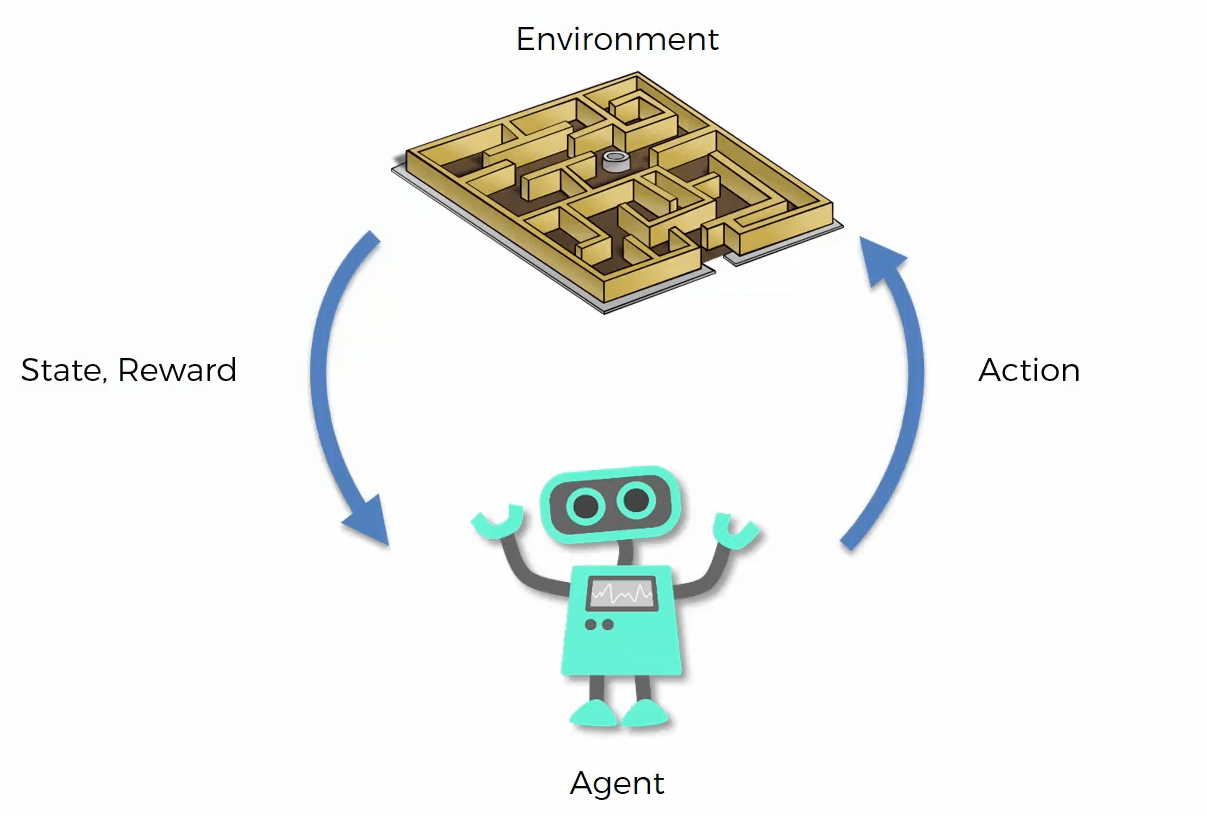

At its core, Supervised Learning involves training a machine learning model on a labeled dataset, meaning that each training example is paired with an output label. From these pairs, the model learns a function that maps inputs to the desired outputs and can then make predictions on new, unseen inputs. This approach is used for predictive modeling tasks such as classification and regression.
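To make "learning a function from labeled pairs" concrete, here is a minimal Python sketch, assuming the simplest possible model: a straight line y ≈ w·x + b fitted by batch gradient descent on mean squared error. The dataset, the learning rate, the epoch count, and the function name `train_linear_model` are hypothetical choices for illustration; real projects would typically rely on a library such as scikit-learn or PyTorch rather than a hand-written loop.

```python
def train_linear_model(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b to labeled (x, y) pairs by batch gradient descent on MSE.

    Hypothetical helper for illustration; lr and epochs are assumed values.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error on every labeled training example
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error^2) with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the training error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical labeled dataset: each input x is paired with a label y (here y = 2x + 1)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train_linear_model(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
print(f"prediction for unseen x=5: {w * 5 + b:.3f}")
```

After training, the parameters end up very close to w = 2 and b = 1, the rule that generated the labels, and the learned function can then be applied to inputs that never appeared in the training set.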

Classification vs. Regression

- Classification: Aims to predict discrete labels. Applications include spam detection in email filters and image recognition.

- Regression: Focuses on forecasting continuous quantities. Examples include predicting house prices and forecasting temperatures.
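One way to see the difference is to apply the same algorithm to both kinds of task. The sketch below uses k-nearest neighbors, chosen here only because it handles both cases with a one-line change: classification takes a majority vote over the neighbors' discrete labels, while regression averages their continuous targets. The spam features (link and exclamation-mark counts), the housing data, and the function names are hypothetical toy values for illustration.

```python
import math
from collections import Counter

def k_nearest(train, query, k):
    """Return the k (features, target) pairs whose features are closest to query."""
    return sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]

def knn_classify(train, query, k=3):
    """Classification: predict a discrete label by majority vote among neighbors."""
    labels = [label for _, label in k_nearest(train, query, k)]
    return Counter(labels).most_common(1)[0][0]

def knn_regress(train, query, k=3):
    """Regression: predict a continuous value by averaging the neighbors' targets."""
    targets = [target for _, target in k_nearest(train, query, k)]
    return sum(targets) / len(targets)

# Hypothetical spam data: (number of links, number of exclamation marks) -> label
emails = [((0, 0), "ham"), ((1, 0), "ham"), ((0, 1), "ham"),
          ((8, 5), "spam"), ((9, 7), "spam"), ((7, 6), "spam")]

# Hypothetical housing data: (floor area in square meters,) -> price in thousands
houses = [((50,), 150.0), ((60,), 180.0), ((80,), 240.0),
          ((100,), 300.0), ((120,), 360.0)]

print(knn_classify(emails, (8, 6)))     # spam
print(knn_classify(emails, (1, 1)))     # ham
print(knn_regress(houses, (70,), k=2))  # 210.0
```

With k=2, the house-price query averages the two closest homes (60 and 80 square meters), so the regression output is a continuous value, while the classifier can only ever return one of the labels it was trained on.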

Current Trends and Applications

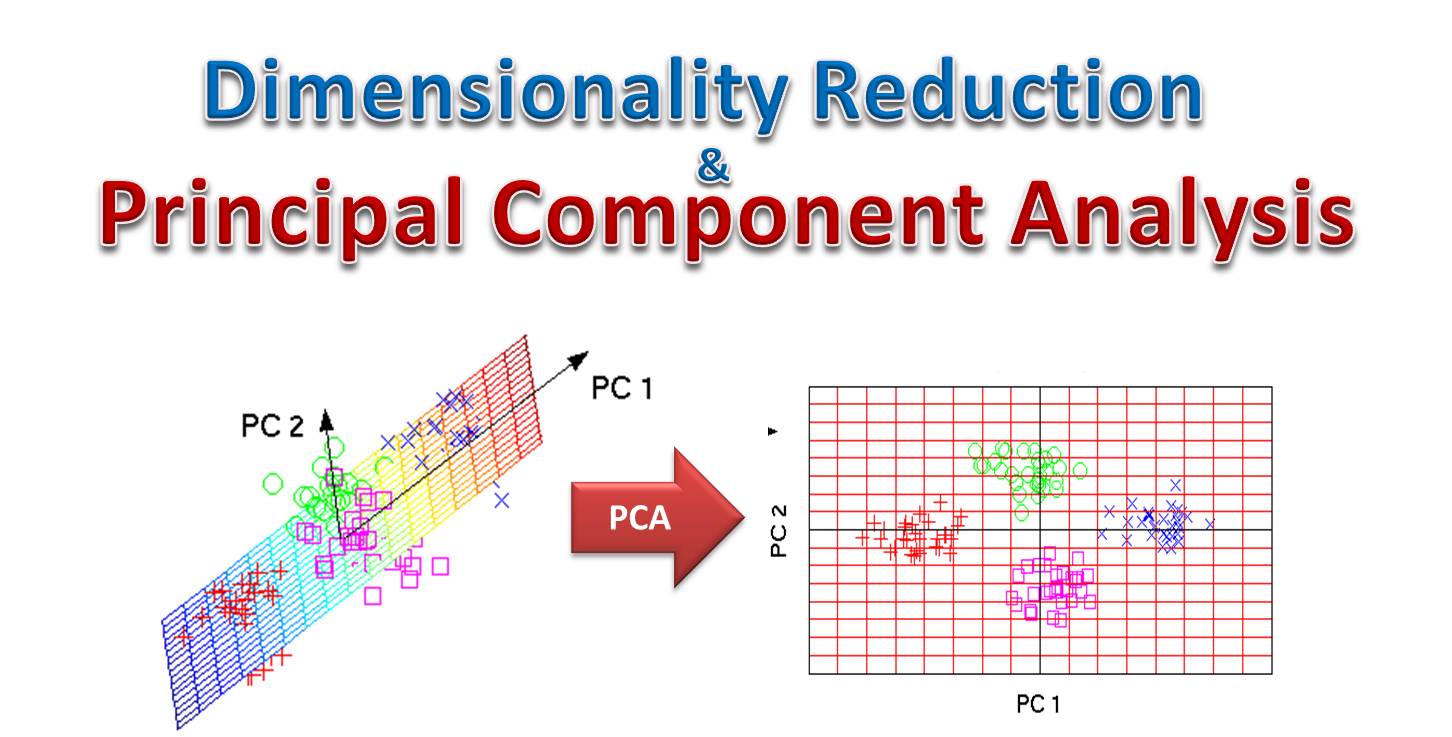

Supervised learning models are at the forefront of AI applications, driving progress in fields such as healthcare, autonomous vehicles, and personalized recommendations. With advancements in algorithms and computational power, we are now able to train more complex models on larger datasets, achieving unprecedented accuracy in tasks such as natural language processing (NLP) and computer vision.

Transforming Healthcare with AI

One area where supervised learning showcases its value is healthcare diagnostics. Algorithms trained on large datasets of labeled medical images can assist in the early detection and diagnosis of conditions like cancer, in some studies matching or exceeding the accuracy of human experts on narrowly defined tasks. Used alongside clinicians, such tools can speed up the diagnostic process and make it more consistent.

Challenges and Ethical Considerations

Despite its promise, supervised learning is not without its challenges. Data quality and availability are critical factors; models can only learn effectively from well-curated and representative datasets. Additionally, ethical considerations around bias, fairness, and privacy must be addressed, as the decisions made by AI systems can significantly impact human lives.

A Look at Bias and Fairness

AI systems are only as unbiased as the data they’re trained on. Ensuring that datasets are diverse and inclusive is crucial to developing fair and equitable AI systems. This is an area where we must be vigilant, continually auditing and assessing AI systems for biases.
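Auditing can start with something as simple as breaking a model's metrics down by group. The minimal sketch below assumes a binary classifier and a hypothetical sensitive attribute with groups "A" and "B"; it reports per-group accuracy and positive-prediction rate, then computes the demographic parity gap (the largest difference in positive-prediction rates between groups). Demographic parity is only one of several fairness criteria, and the labels, predictions, and function names here are invented for illustration.

```python
from collections import defaultdict

def audit_by_group(y_true, y_pred, groups):
    """Per-group accuracy and positive-prediction rate for a binary classifier."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(truth == pred)
        c["positive"] += int(pred == 1)
    return {
        group: {
            "accuracy": c["correct"] / c["n"],
            "positive_rate": c["positive"] / c["n"],
        }
        for group, c in counts.items()
    }

def demographic_parity_gap(report):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = [metrics["positive_rate"] for metrics in report.values()]
    return max(rates) - min(rates)

# Hypothetical ground truth, model predictions, and group membership for eight people
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

report = audit_by_group(y_true, y_pred, groups)
for group in sorted(report):
    print(group, report[group])
print("demographic parity gap:", demographic_parity_gap(report))
```

In this toy data, group B is never predicted positive even though one of its members is a true positive, so the gap comes out at 0.75: the kind of disparity a routine audit is meant to surface before a model is deployed.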

The Road Ahead for Supervised Learning

Looking to the future, the trajectory of supervised learning is both exciting and uncertain. Innovations in algorithmic efficiency, data synthesis, and generative models promise to further elevate the capabilities of AI systems. However, the path is fraught with technical and ethical challenges that must be navigated with care.

In the spirit of open discussion, I invite you to join me in contemplating these advancements and their implications for our collective future. As someone deeply embedded in the development and application of AI and ML, I remain cautious yet optimistic about the role of supervised learning in shaping a future where technology augments human capabilities, making our lives better and more fulfilling.

Continuing the Dialogue

As AI enthusiasts and professionals, our task is to steer this technology responsibly, ensuring its development is aligned with human values and societal needs. I look forward to your thoughts and insights on how we can achieve this balance and fully harness the potential of supervised learning.

For further exploration of AI and Machine Learning’s impact across various sectors, feel free to visit my previous articles. Together, let’s dive deep into the realms of AI, unraveling its complexities and envisioning a future powered by intelligent, ethical technology.