Unlocking Complex AI Challenges with Structured Prediction and Large Language Models

Delving Deeper into Structured Prediction and Large Language Models in Machine Learning

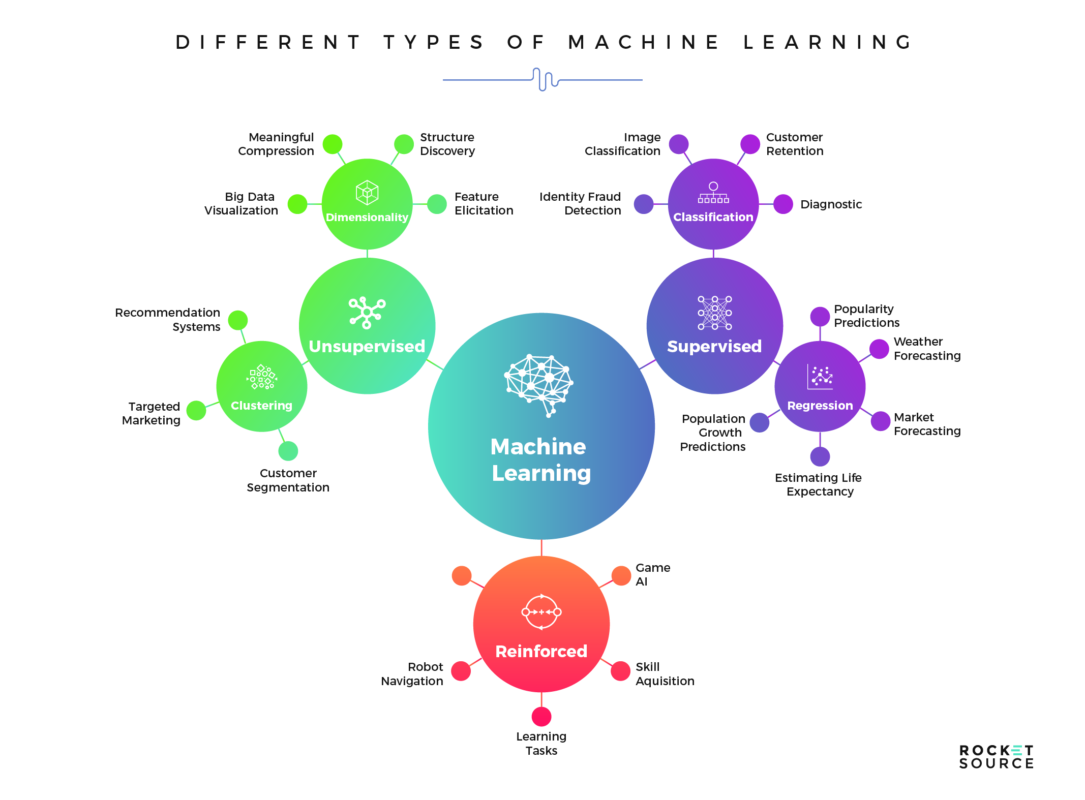

Recent discussions of advances and applications in Machine Learning (ML) have paid particular attention to structured prediction. This technique, essential for modeling complex relationships within data, has changed considerably with the arrival of Large Language Models (LLMs). Where the two domains meet, new methods for hard ML problems have emerged, and they give us a clearer view of what artificial intelligence can do. This post builds on our earlier explorations of sentiment analysis, anomaly detection, and the broader implications of LLMs in AI.

Understanding Structured Prediction

Structured prediction in machine learning aims to predict structured objects rather than single, independent labels. It is critical when the outputs have inherent interdependencies, as with sequences, trees, or graphs. Applications range from natural language processing (NLP) tasks such as syntactic parsing and semantic role labeling to computer vision tasks such as object recognition, and beyond.
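
To make the idea of interdependent outputs concrete, the minimal sketch below decodes a short tag sequence jointly with the Viterbi algorithm instead of picking each token's best tag independently. It assumes a linear-chain model with additive scores; the BIO tag set and all scores are hypothetical, chosen so that the independent choice produces an invalid sequence.

```python
from typing import Dict, List, Tuple

Tag = str

def viterbi(
    emissions: List[Dict[Tag, float]],
    transitions: Dict[Tuple[Tag, Tag], float],
    tags: List[Tag],
) -> List[Tag]:
    """Return the highest-scoring tag sequence under additive emission + transition scores."""
    # best[t][y]: score of the best partial sequence ending with tag y at position t
    best: List[Dict[Tag, float]] = [dict(emissions[0])]
    back: List[Dict[Tag, Tag]] = [{}]
    for t in range(1, len(emissions)):
        best.append({})
        back.append({})
        for y in tags:
            # Choose the predecessor that maximizes (path score + transition into y)
            prev = max(tags, key=lambda p: best[t - 1][p] + transitions[(p, y)])
            best[t][y] = best[t - 1][prev] + transitions[(prev, y)] + emissions[t][y]
            back[t][y] = prev
    # Follow back-pointers from the best final tag
    path = [max(tags, key=lambda y: best[-1][y])]
    for t in range(len(emissions) - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]

tags = ["B", "I", "O"]
# Hypothetical scores: every transition is neutral except O -> I, which BIO forbids
transitions = {(a, b): 0.0 for a in tags for b in tags}
transitions[("O", "I")] = -10.0
emissions = [
    {"B": 0.0, "I": -5.0, "O": 2.0},  # token 0 looks like "O"
    {"B": 1.0, "I": 1.5, "O": 0.0},   # token 1 slightly prefers "I" on its own
]

independent = [max(scores, key=scores.get) for scores in emissions]
joint = viterbi(emissions, transitions, tags)
print(independent)  # ['O', 'I']  (invalid: I cannot follow O)
print(joint)        # ['O', 'B']
```

Independent decoding picks the locally best tag at each position and yields O followed by I, which the BIO scheme does not allow; joint decoding gives up a little local score to find the best valid sequence overall. The cost is O(n·K²) for n tokens and K tags.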

A core challenge in structured prediction is designing models that accurately capture and exploit the complex dependencies among output variables. Traditional approaches include graph-based models, conditional random fields (CRFs), and structured support vector machines. The rise of deep learning, and of Large Language Models in particular, has since shifted the landscape dramatically.
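
As an illustration of one traditional approach, a linear-chain conditional random field scores a whole label sequence as the sum of per-position emission scores and pairwise transition scores, then normalizes over every possible sequence: p(y | x) = exp(score(x, y)) / Z(x). Summing over all sequences naively is exponential in the sentence length; the forward algorithm computes log Z(x) in time linear in the length and quadratic in the number of labels. The minimal sketch below assumes the emission and transition scores are already given (the numbers are made up; feature extraction and training are omitted) and checks the forward algorithm against brute-force enumeration.

```python
import itertools
import math
from typing import List, Sequence

Matrix = List[List[float]]

def logsumexp(values: Sequence[float]) -> float:
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def sequence_score(emissions: Matrix, transitions: Matrix, labels: Sequence[int]) -> float:
    """Unnormalized log-score of one label sequence: emissions plus pairwise transitions."""
    score = emissions[0][labels[0]]
    for t in range(1, len(labels)):
        score += transitions[labels[t - 1]][labels[t]] + emissions[t][labels[t]]
    return score

def log_partition(emissions: Matrix, transitions: Matrix) -> float:
    """log Z: log-sum over ALL label sequences, computed with the forward algorithm."""
    k = len(emissions[0])
    # alpha[j]: log-sum of the scores of all prefixes ending in label j
    alpha = list(emissions[0])
    for t in range(1, len(emissions)):
        alpha = [
            logsumexp([alpha[i] + transitions[i][j] for i in range(k)]) + emissions[t][j]
            for j in range(k)
        ]
    return logsumexp(alpha)

def crf_nll(emissions: Matrix, transitions: Matrix, labels: Sequence[int]) -> float:
    """Negative log-likelihood -log p(labels | x) under a linear-chain CRF."""
    return log_partition(emissions, transitions) - sequence_score(emissions, transitions, labels)

# Toy scores (hypothetical): 3 positions, 2 labels
emissions = [[1.0, 0.5], [0.2, 1.5], [0.3, 0.1]]
transitions = [[0.5, -0.3], [0.1, 0.8]]

# Sanity check: forward algorithm vs. brute-force enumeration of all 2**3 sequences
all_seqs = list(itertools.product(range(2), repeat=len(emissions)))
brute = logsumexp([sequence_score(emissions, transitions, ys) for ys in all_seqs])
print(abs(brute - log_partition(emissions, transitions)) < 1e-9)  # True
print(crf_nll(emissions, transitions, [0, 1, 1]))
```

Training a CRF minimizes this negative log-likelihood over labeled sequences; at prediction time, Viterbi-style decoding finds the single best sequence under the same scores.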

The Role of Large Language Models

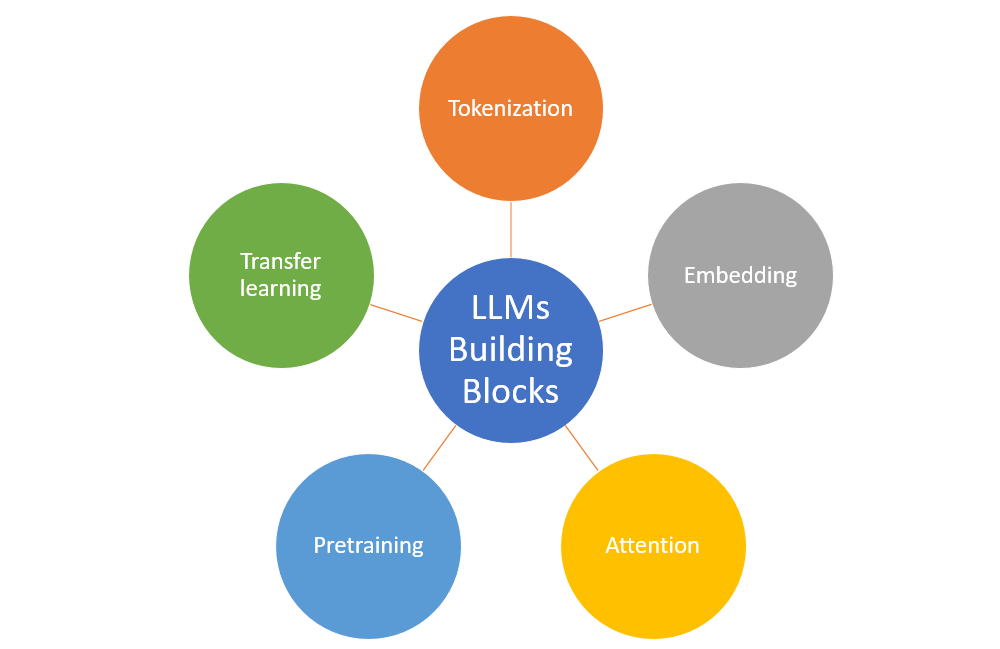

LLMs such as GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers) have transformed many fields within AI, structured prediction included. Their ability to interpret text, and in the case of generative models like GPT to produce human-like text, rests on representations of language structure and context learned during pretraining on very large datasets.

Crucially, LLMs perform well on tasks that require modeling complex relationships and patterns within data, which aligns closely with the objectives of structured prediction. By building on these models, researchers and practitioners can bring the LLMs' rich contextual representations of the input to bear on structured prediction problems.

Integration of LLMs in Structured Prediction

Integrating LLMs into structured prediction workflows typically means using a pre-trained model as the foundation on which specialized, task-specific models are built. This usually involves fine-tuning the pre-trained LLM on a smaller, domain-specific dataset, so that its broad linguistic and contextual knowledge is adapted to the nuances of the specific structured prediction task.
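
One concrete step that fine-tuning for token-level structured prediction usually involves is aligning word-level labels with the model's subword tokens, since a pre-trained LLM's tokenizer may split one word into several pieces. The minimal sketch below assumes a hypothetical toy tokenizer, `toy_subword_tokenize`, that chops words into fixed-size chunks in place of a real one. It labels only the first piece of each word and marks the rest with -100, the default `ignore_index` of PyTorch's `CrossEntropyLoss`; this is a common convention, not the only one (labeling every piece is another option).

```python
from typing import List, Tuple

IGNORE_INDEX = -100  # PyTorch's CrossEntropyLoss skips targets equal to this by default

def toy_subword_tokenize(word: str, max_len: int = 4) -> List[str]:
    """HYPOTHETICAL stand-in for a real subword tokenizer: fixed-size character chunks."""
    return [word[i:i + max_len] for i in range(0, len(word), max_len)] or [word]

def align_labels(words: List[str], word_labels: List[int]) -> Tuple[List[str], List[int]]:
    """Expand word-level label ids to subword tokens, supervising only each word's first piece."""
    if len(words) != len(word_labels):
        raise ValueError("expected exactly one label per word")
    tokens: List[str] = []
    labels: List[int] = []
    for word, label in zip(words, word_labels):
        pieces = toy_subword_tokenize(word)
        tokens.extend(pieces)
        labels.append(label)                               # first piece carries the label
        labels.extend([IGNORE_INDEX] * (len(pieces) - 1))  # remaining pieces are ignored by the loss
    return tokens, labels

# Illustrative SRL-style tag set and sentence (not from any real dataset)
label2id = {"O": 0, "B-ARG0": 1, "I-ARG0": 2, "B-V": 3, "B-ARG1": 4}
words = ["The", "committee", "approved", "it"]
word_tags = ["B-ARG0", "I-ARG0", "B-V", "B-ARG1"]
tokens, labels = align_labels(words, [label2id[t] for t in word_tags])
print(tokens)  # ['The', 'comm', 'itte', 'e', 'appr', 'oved', 'it']
print(labels)  # [1, 2, -100, -100, 3, -100, 4]
```

The aligned token and label lists are what a token-classification head would be trained on; with a real tokenizer, only the splitting function changes.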

For example, in semantic role labeling, an NLP task that identifies the predicate-argument structure of a sentence (roughly, who did what to whom), an LLM can be fine-tuned to capture not only the grammatical structure of a sentence but also its latent semantic relationships, which can improve prediction accuracy.
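
Semantic role labeling is often framed as BIO tagging relative to a single predicate: each token gets B-ROLE (begins an argument), I-ROLE (inside it), or O (outside any argument). Whatever model produces the tags, they must be decoded back into predicate-argument structure. The minimal sketch below shows that decoding step; the sentence, the PropBank-style role names (ARG0, V, ARG1, ARGM-TMP), and the tags are illustrative inputs rather than real model output, and the lenient handling of stray I- tags is an assumption.

```python
from typing import List, Optional, Tuple

Span = Tuple[str, int, int]  # (role, start, end) with end exclusive

def bio_to_spans(tags: List[str]) -> List[Span]:
    """Decode BIO tags into labeled spans.

    Lenient convention (an assumption): an I-X tag that does not continue an
    open X span starts a new X span instead of being discarded.
    """
    spans: List[Span] = []
    role: Optional[str] = None
    start = 0
    for i, tag in enumerate(tags + ["O"]):  # trailing "O" closes any span still open
        if tag.startswith("I-") and tag[2:] == role:
            continue  # the current span keeps growing
        if role is not None:
            spans.append((role, start, i))
        role, start = (None, i) if tag == "O" else (tag[2:], i)
    return spans

def predicate_arguments(words: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Pair each decoded role with the words it covers."""
    return [(role, " ".join(words[s:e])) for role, s, e in bio_to_spans(tags)]

words = ["The", "committee", "approved", "the", "budget", "yesterday"]
tags = ["B-ARG0", "I-ARG0", "B-V", "B-ARG1", "I-ARG1", "B-ARGM-TMP"]
print(predicate_arguments(words, tags))
# [('ARG0', 'The committee'), ('V', 'approved'), ('ARG1', 'the budget'), ('ARGM-TMP', 'yesterday')]
```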

Challenges and Future Directions

Despite the significant advantages offered by LLMs in structured prediction, several challenges remain. Key among these is the computational cost associated with training and deploying these models, particularly for tasks requiring real-time inference. Additionally, there is an ongoing debate about the interpretability of LLMs’ decision-making processes, an essential consideration for applications in sensitive areas such as healthcare and law.
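
To put the inference-cost concern in rough numbers, a widely used approximation is that a dense transformer's forward pass costs about 2 FLOPs per parameter per token, ignoring the attention term that grows with context length. The minimal sketch below applies that rule of thumb; the model size, input length, and sustained throughput are hypothetical inputs, so treat the result as an order-of-magnitude estimate only.

```python
def forward_flops(num_params: float, num_tokens: int) -> float:
    """Rough forward-pass cost of a dense transformer: about 2 FLOPs per parameter per token.

    Rule of thumb only: it ignores the attention term that grows with context length.
    """
    return 2.0 * num_params * num_tokens

def compute_bound_latency_s(num_params: float, num_tokens: int, sustained_flops_per_s: float) -> float:
    """Latency lower bound assuming compute is the only bottleneck (often it is not)."""
    return forward_flops(num_params, num_tokens) / sustained_flops_per_s

# Hypothetical inputs: a 7-billion-parameter model, a 512-token input,
# and accelerator throughput of 1e14 FLOP/s actually sustained.
flops = forward_flops(7e9, 512)
latency = compute_bound_latency_s(7e9, 512, 1e14)
print(f"{flops:.3e} FLOPs, at least {latency * 1000:.1f} ms")  # 7.168e+12 FLOPs, at least 71.7 ms
```

For autoregressive generation, each newly generated token costs roughly another 2 × parameters FLOPs, and at small batch sizes memory bandwidth rather than raw compute often sets the latency, so real deployments can be considerably slower than this bound.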

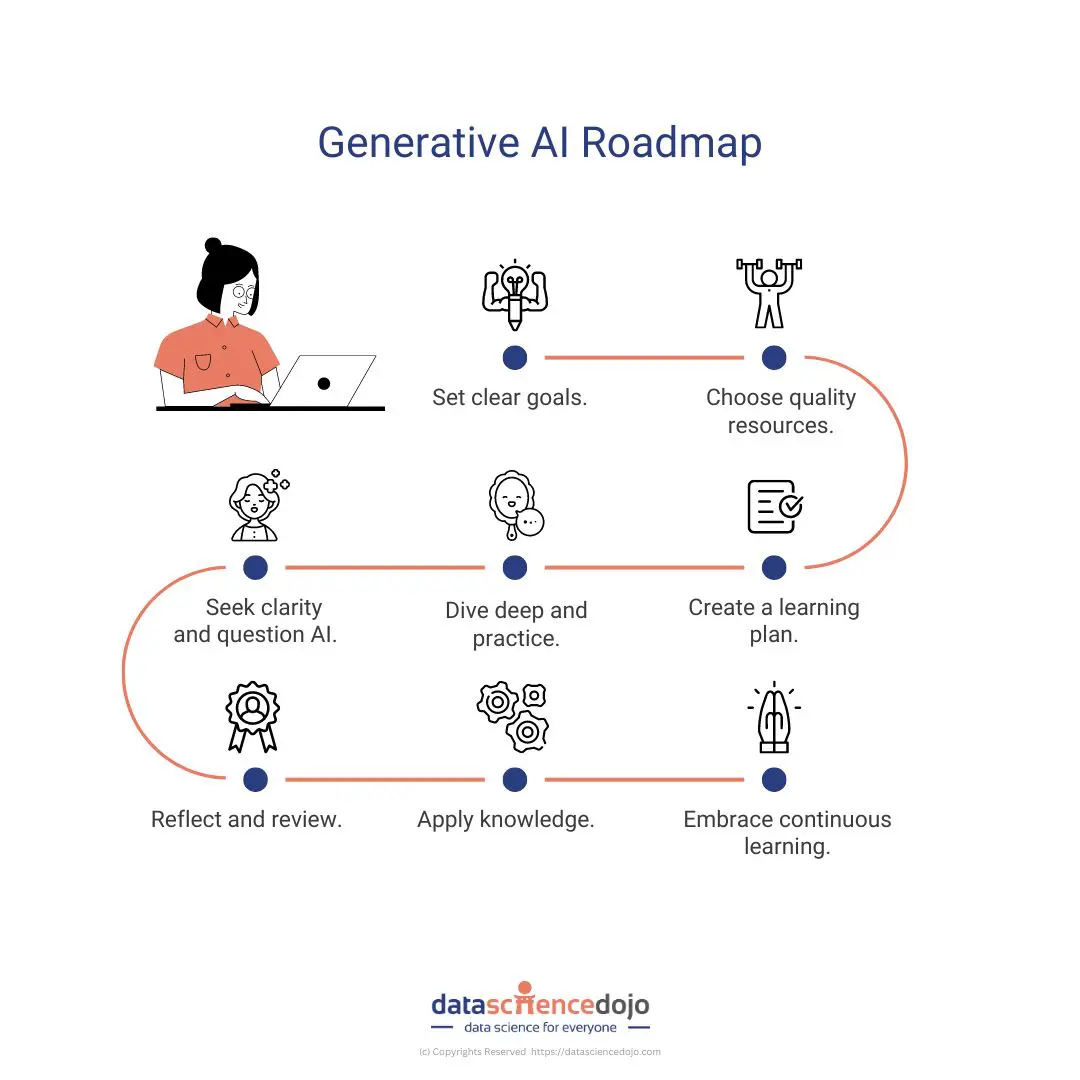

Looking ahead, the integration of structured prediction and LLMs in machine learning will likely continue to be a fertile ground for research and application. Innovations in model efficiency, interpretability, and the development of domain-specific LLMs promise to extend the reach of structured prediction to new industries and problem spaces.

In conclusion, as we examine structured prediction and large language models more closely, it is evident that the combination of these domains is substantially expanding what machine learning can do. The complexity and richness of the problems that can now be addressed underscore how much these advances may change our understanding and use of AI.

As this landscape evolves, staying informed and critically engaged with the latest developments will be crucial for realizing the full potential of these technologies while addressing the ethical and practical challenges that accompany their advancement.
